RSM in large markets

Market-halving scheme

When the market is large, the following general scheme can be used: [1] :341–344

- The buyers are asked to reveal their valuations.

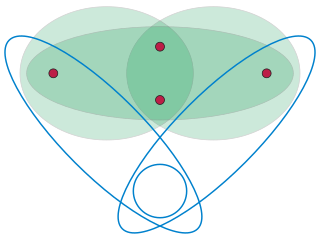

- The buyers are split into two sub-markets, $M_L$ ("left") and $M_R$ ("right"), using simple random sampling: each buyer is assigned to one of the sides by tossing a fair coin.

- In each sub-market $M_s$, an empirical distribution function $F_s$ is calculated.

- The Bayesian-optimal mechanism (Myerson's mechanism) is applied in sub-market $M_R$ with distribution $F_L$, and in $M_L$ with $F_R$.
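The splitting and estimation steps above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names `split_market` and `empirical_cdf` are hypothetical, bids are plain numbers, and the sketch stops before the Myerson step, which depends on the specific setting.

```python
import random
from bisect import bisect_right


def split_market(bids, rng):
    """Assign each bid to the left or right sub-market by a fair coin toss."""
    left, right = [], []
    for bid in bids:
        (left if rng.random() < 0.5 else right).append(bid)
    return left, right


def empirical_cdf(sample):
    """Return F, where F(x) is the fraction of sample values <= x.

    Assumption: an empty sample yields F(x) = 0 for every x.
    """
    ordered = sorted(sample)
    n = len(ordered)

    def F(x):
        return bisect_right(ordered, x) / n if n else 0.0

    return F


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed only to make the run repeatable
    left, right = split_market([3.0, 1.0, 4.0, 1.0, 5.0, 9.0], rng)
    F_left, F_right = empirical_cdf(left), empirical_cdf(right)
    # F_left would then be used to price buyers on the right, and vice versa.
    print(left, right, F_left(4.0), F_right(4.0))
```

Each buyer's side is decided independently, so the two sub-markets need not have equal size.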

This scheme is called "Random-Sampling Empirical Myerson" (RSEM).

The declaration of each buyer has no effect on the price he has to pay; the price is determined by the buyers in the other sub-market. Hence, it is a dominant strategy for the buyers to reveal their true valuation. In other words, this is a truthful mechanism.

Intuitively, by the law of large numbers, if the market is sufficiently large then the empirical distributions are sufficiently similar to the real distributions, so we expect the RSEM to attain near-optimal profit. However, this does not hold in all cases; it has been proved only in some special cases.

The simplest case is a digital goods auction. There, the last step (applying Myerson's mechanism) is simple and consists only of calculating the optimal price in each sub-market. The optimal price in the left sub-market $M_L$ is applied to the right sub-market $M_R$ and vice versa. Hence, the mechanism is called "Random-Sampling Optimal Price" (RSOP). This case is simple because the mechanism always yields feasible allocations, i.e., it is always possible to apply the price calculated in one side to the other side. This is not necessarily the case with physical goods.
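A minimal Python sketch of RSOP for digital goods is given below. The names `optimal_price` and `rsop` are hypothetical, and two choices are assumptions of this sketch rather than part of the description above: candidate prices are restricted to the submitted bids (which is enough for a single posted price), and when one side is empty no price is computed from it, so nobody on the other side is served.

```python
import random


def optimal_price(bids):
    """Return the single price maximizing price * (number of bids >= price).

    Returns None for an empty list. Ties are broken arbitrarily.
    """
    best_price, best_profit = None, 0.0
    for p in set(bids):
        profit = p * sum(1 for b in bids if b >= p)
        if profit > best_profit:
            best_price, best_profit = p, profit
    return best_price


def rsop(bids, rng):
    """Return the payment of each buyer under Random-Sampling Optimal Price."""
    in_left = [rng.random() < 0.5 for _ in bids]  # fair coin per buyer
    left = [b for b, s in zip(bids, in_left) if s]
    right = [b for b, s in zip(bids, in_left) if not s]
    # Each side is offered the price that is optimal for the *other* side.
    price_for = {True: optimal_price(right), False: optimal_price(left)}
    payments = []
    for b, s in zip(bids, in_left):
        p = price_for[s]
        payments.append(p if p is not None and b >= p else 0.0)
    return payments


if __name__ == "__main__":
    print(rsop([1, 2, 3, 10, 7, 4], random.Random(2)))
```

A buyer's own bid never enters the computation of the price offered to that buyer, which is what makes truthful reporting a dominant strategy.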

Even in a digital goods auction, RSOP does not necessarily converge to the optimal profit. It converges only under the bounded valuations assumption: for each buyer, the valuation of the item is between 1 and $h$, where $h$ is some constant. The convergence rate of RSOP to optimality depends on $h$. The convergence rate also depends on the number of possible "offers" considered by the mechanism. [2]

To understand what an "offer" is, consider a digital goods auction in which the valuations of the buyers, in dollars, are known to be bounded in the interval $[1, h]$. If the mechanism uses only whole-dollar prices, then there are only $h$ possible offers.

In general, the optimization problem may involve much more than just a single price. For example, we may want to sell several different digital goods, each of which may have a different price. So instead of a "price", we speak of an "offer". We assume that there is a global set $G$ of possible offers. For every offer $g \in G$ and agent $i$, $g(i)$ is the amount that agent $i$ pays when presented with the offer $g$. In the digital-goods example, $G$ is the set of possible prices. For every possible price $p$, there is a function $g_p$ such that $g_p(i)$ is either 0 (if $v_i < p$) or $p$ (if $v_i \geq p$), where $v_i$ is the valuation of agent $i$.

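For every set $S$ of agents, the profit of the mechanism from presenting the offer $g$ to the agents in $S$ is:

$$g(S) := \sum_{i \in S} g(i)$$

and the optimal profit of the mechanism over the set $G$ of possible offers is:

$$\mathrm{OPT}_G(S) := \max_{g \in G} g(S)$$

The RSM calculates, for each sub-market $M_s$, an optimal offer $g_s$, as follows:

$$g_s := \arg\max_{g \in G} g(M_s)$$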

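The offer $g_L$, computed from the left sub-market, is applied to the buyers in the right sub-market $M_R$: each buyer $i \in M_R$ whose report gives $g_L(i) > 0$ receives the offered allocation and pays $g_L(i)$; each buyer in $M_R$ for whom $g_L(i) = 0$ receives nothing and pays nothing. The offer $g_R$ is applied to the buyers in $M_L$ in a similar way.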
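The offer-based formulation can be sketched in Python as follows. All names (`price_offer`, `profit`, `best_offer`, `rsm`) are hypothetical. The sketch assumes that an agent is represented by its reported valuation, so an offer is a function from a valuation to a payment, and that ties in the argmax (including the case of an empty sub-market) are broken in favour of the first offer in the list.

```python
import random


def price_offer(p):
    """Digital-goods offer: pay p if the valuation is at least p, else 0."""
    return lambda v: p if v >= p else 0.0


def profit(offer, valuations):
    """Total payment collected when the offer is presented to every agent."""
    return sum(offer(v) for v in valuations)


def best_offer(offers, valuations):
    """An offer with maximal profit on the given agents (first one on ties)."""
    return max(offers, key=lambda g: profit(g, valuations))


def rsm(valuations, offers, rng):
    """Random-sampling mechanism: each side pays according to the offer
    that is optimal for the other side. Returns one payment per agent."""
    in_left = [rng.random() < 0.5 for _ in valuations]
    left = [v for v, s in zip(valuations, in_left) if s]
    right = [v for v, s in zip(valuations, in_left) if not s]
    g_left, g_right = best_offer(offers, left), best_offer(offers, right)
    return [(g_right if s else g_left)(v) for v, s in zip(valuations, in_left)]


if __name__ == "__main__":
    offers = [price_offer(p) for p in range(1, 11)]  # whole-dollar prices 1..10
    print(rsm([1, 2, 3, 10, 7, 4], offers, random.Random(0)))
```

Keeping offers as plain functions means the same `rsm` routine would work for richer offer sets than single prices, as long as each offer maps an agent to a payment.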

Profit-oracle scheme

The profit oracle is another RSM scheme that can be used in large markets. [3] It is useful when we do not have direct access to agents' valuations (e.g. for privacy reasons). All we can do is run an auction and observe its expected profit. In a single-item auction with $n$ bidders, where each bidder has at most $K$ possible values (selected at random with unknown probabilities), the maximum-revenue auction can be learned using:

calls to the profit oracle.