Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, textures, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

In 3D computer graphics, radiosity is an application of the finite element method to solving the rendering equation for scenes with surfaces that reflect light diffusely. Unlike rendering methods that use Monte Carlo algorithms, which handle all types of light paths, typical radiosity only account for paths which leave a light source and are reflected diffusely some number of times before hitting the eye. Radiosity is a global illumination algorithm in the sense that the illumination arriving on a surface comes not just directly from the light sources, but also from other surfaces reflecting light. Radiosity is viewpoint independent, which increases the calculations involved, but makes them useful for all viewpoints.

In 3D computer graphics, ray tracing is a technique for modeling light transport for use in a wide variety of rendering algorithms for generating digital images.

In computer graphics, rasterisation or rasterization is the task of taking an image described in a vector graphics format (shapes) and converting it into a raster image. The rasterized image may then be displayed on a computer display, video display or printer, or stored in a bitmap file format. Rasterization may refer to the technique of drawing 3D models, or to the conversion of 2D rendering primitives, such as polygons and line segments, into a rasterized format.

In computer graphics, photon mapping is a two-pass global illumination rendering algorithm developed by Henrik Wann Jensen between 1995 and 2001 that approximately solves the rendering equation for integrating light radiance at a given point in space. Rays from the light source and rays from the camera are traced independently until some termination criterion is met, then they are connected in a second step to produce a radiance value. The algorithm is used to realistically simulate the interaction of light with different types of objects. Specifically, it is capable of simulating the refraction of light through a transparent substance such as glass or water, diffuse interreflection between illuminated objects, the subsurface scattering of light in translucent materials, and some of the effects caused by particulate matter such as smoke or water vapor. Photon mapping can also be extended to more accurate simulations of light, such as spectral rendering. Progressive photon mapping (PPM) starts with ray tracing and then adds more and more photon mapping passes to provide a progressively more accurate render.

Ray casting is the methodological basis for 3D CAD/CAM solid modeling and image rendering. It is essentially the same as ray tracing for computer graphics where virtual light rays are "cast" or "traced" on their path from the focal point of a camera through each pixel in the camera sensor to determine what is visible along the ray in the 3D scene. The term "Ray Casting" was introduced by Scott Roth while at the General Motors Research Labs from 1978–1980. His paper, "Ray Casting for Modeling Solids", describes modeled solid objects by combining primitive solids, such as blocks and cylinders, using the set operators union (+), intersection (&), and difference (-). The general idea of using these binary operators for solid modeling is largely due to Voelcker and Requicha's geometric modelling group at the University of Rochester. See solid modeling for a broad overview of solid modeling methods. This figure on the right shows a U-Joint modeled from cylinders and blocks in a binary tree using Roth's ray casting system in 1979.

In computer graphics, a shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene—a process known as shading. Shaders have evolved to perform a variety of specialized functions in computer graphics special effects and video post-processing, as well as general-purpose computing on graphics processing units.

Reyes rendering is a computer software architecture used in 3D computer graphics to render photo-realistic images. It was developed in the mid-1980s by Loren Carpenter and Robert L. Cook at Lucasfilm's Computer Graphics Research Group, which is now Pixar. It was first used in 1982 to render images for the Genesis effect sequence in the movie Star Trek II: The Wrath of Khan. Pixar's RenderMan was an implementation of the Reyes algorithm, It has been deprecated as of 2016 and removed as of RenderMan 21. According to the original paper describing the algorithm, the Reyes image rendering system is "An architecture for fast high-quality rendering of complex images." Reyes was proposed as a collection of algorithms and data processing systems. However, the terms "algorithm" and "architecture" have come to be used synonymously in this context and are used interchangeably in this article.

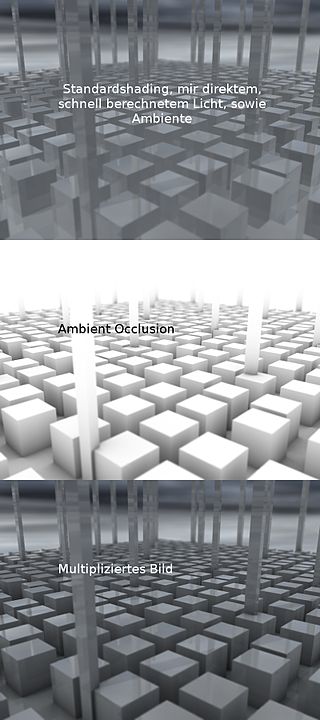

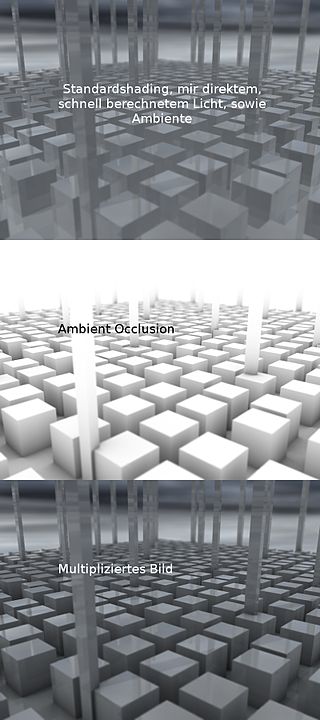

In 3D computer graphics, modeling, and animation, ambient occlusion is a shading and rendering technique used to calculate how exposed each point in a scene is to ambient lighting. For example, the interior of a tube is typically more occluded than the exposed outer surfaces, and becomes darker the deeper inside the tube one goes.

Real-time computer graphics or real-time rendering is the sub-field of computer graphics focused on producing and analyzing images in real time. The term can refer to anything from rendering an application's graphical user interface (GUI) to real-time image analysis, but is most often used in reference to interactive 3D computer graphics, typically using a graphics processing unit (GPU). One example of this concept is a video game that rapidly renders changing 3D environments to produce an illusion of motion.

Subsurface scattering (SSS), also known as subsurface light transport (SSLT), is a mechanism of light transport in which light that penetrates the surface of a translucent object is scattered by interacting with the material and exits the surface potentially at a different point. Light generally penetrates the surface and gets scattered a number of times at irregular angles inside the material before passing back out of the material at a different angle than it would have had if it had been reflected directly off the surface.

In 3D computer graphics, polygonal modeling is an approach for modeling objects by representing or approximating their surfaces using polygon meshes. Polygonal modeling is well suited to scanline rendering and is therefore the method of choice for real-time computer graphics. Alternate methods of representing 3D objects include NURBS surfaces, subdivision surfaces, and equation-based representations used in ray tracers.

Volume ray casting, sometimes called volumetric ray casting, volumetric ray tracing, or volume ray marching, is an image-based volume rendering technique. It computes 2D images from 3D volumetric data sets. Volume ray casting, which processes volume data, must not be mistaken with ray casting in the sense used in ray tracing, which processes surface data. In the volumetric variant, the computation doesn't stop at the surface but "pushes through" the object, sampling the object along the ray. Unlike ray tracing, volume ray casting does not spawn secondary rays. When the context/application is clear, some authors simply call it ray casting. Because ray marching does not necessarily require an exact solution to ray intersection and collisions, it is suitable for real time computing for many applications for which ray tracing is unsuitable.

In mathematics and its applications, the signed distance function is the orthogonal distance of a given point x to the boundary of a set Ω in a metric space, with the sign determined by whether or not x is in the interior of Ω. The function has positive values at points x inside Ω, it decreases in value as x approaches the boundary of Ω where the signed distance function is zero, and it takes negative values outside of Ω. However, the alternative convention is also sometimes taken instead.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

Fractal-generating software is any type of graphics software that generates images of fractals. There are many fractal generating programs available, both free and commercial. Mobile apps are available to play or tinker with fractals. Some programmers create fractal software for themselves because of the novelty and because of the challenge in understanding the related mathematics. The generation of fractals has led to some very large problems for pure mathematics.

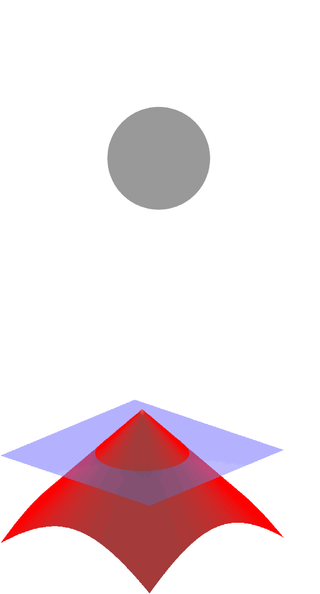

In mathematics, an orbit trap is a method of colouring fractal images based upon how close an iterative function, used to create the fractal, approaches a geometric shape, called a "trap". Typical traps are points, lines, circles, flower shapes and even raster images. Orbit traps are typically used to colour two dimensional fractals representing the complex plane.

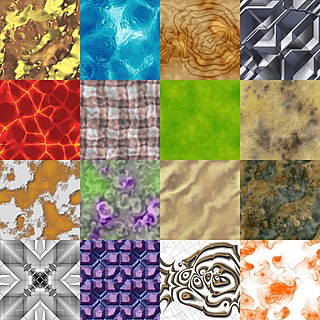

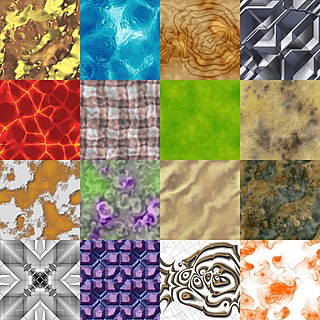

In computer graphics, a procedural texture is a texture created using a mathematical description rather than directly stored data. The advantage of this approach is low storage cost, unlimited texture resolution and easy texture mapping. These kinds of textures are often used to model surface or volumetric representations of natural elements such as wood, marble, granite, metal, stone, and others.

This is a glossary of terms relating to computer graphics.

Shadertoy is an online community and tool for creating and sharing shaders through WebGL, used for both learning and teaching 3D computer graphics in a web browser.