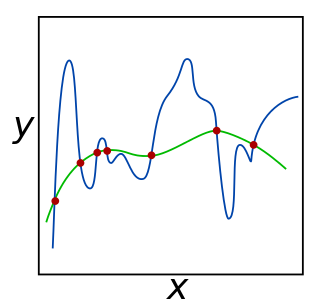

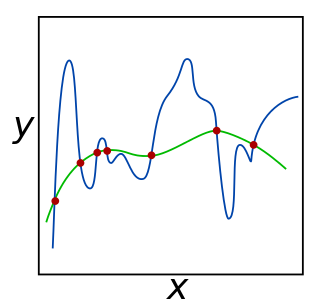

In mathematics, statistics, finance, computer science, particularly in machine learning and inverse problems, regularization is a process that changes the result answer to be "simpler". It is often used to obtain results for ill-posed problems or to prevent overfitting.

The Frank–Wolfe algorithm is an iterative first-order optimization algorithm for constrained convex optimization. Also known as the conditional gradient method, reduced gradient algorithm and the convex combination algorithm, the method was originally proposed by Marguerite Frank and Philip Wolfe in 1956. In each iteration, the Frank–Wolfe algorithm considers a linear approximation of the objective function, and moves towards a minimizer of this linear function.

Matching pursuit (MP) is a sparse approximation algorithm which finds the "best matching" projections of multidimensional data onto the span of an over-complete dictionary . The basic idea is to approximately represent a signal from Hilbert space as a weighted sum of finitely many functions taken from . An approximation with atoms has the form

Limited-memory BFGS is an optimization algorithm in the family of quasi-Newton methods that approximates the Broyden–Fletcher–Goldfarb–Shanno algorithm (BFGS) using a limited amount of computer memory. It is a popular algorithm for parameter estimation in machine learning. The algorithm's target problem is to minimize over unconstrained values of the real-vector where is a differentiable scalar function.

Stochastic approximation methods are a family of iterative methods typically used for root-finding problems or for optimization problems. The recursive update rules of stochastic approximation methods can be used, among other things, for solving linear systems when the collected data is corrupted by noise, or for approximating extreme values of functions which cannot be computed directly, but only estimated via noisy observations.

In mathematics, the Grothendieck inequality states that there is a universal constant with the following property. If Mij is an n × n matrix with

Compressed sensing is a signal processing technique for efficiently acquiring and reconstructing a signal, by finding solutions to underdetermined linear systems. This is based on the principle that, through optimization, the sparsity of a signal can be exploited to recover it from far fewer samples than required by the Nyquist–Shannon sampling theorem. There are two conditions under which recovery is possible. The first one is sparsity, which requires the signal to be sparse in some domain. The second one is incoherence, which is applied through the isometric property, which is sufficient for sparse signals.

In linear algebra, the coherence or mutual coherence of a matrix A is defined as the maximum absolute value of the cross-correlations between the columns of A.

The Bregman method is an iterative algorithm to solve certain convex optimization problems involving regularization. The original version is due to Lev M. Bregman, who published it in 1967.

In statistics and machine learning, lasso is a regression analysis method that performs both variable selection and regularization in order to enhance the prediction accuracy and interpretability of the resulting statistical model. It was originally introduced in geophysics, and later by Robert Tibshirani, who coined the term.

In applied mathematics and statistics, basis pursuit denoising (BPDN) refers to a mathematical optimization problem of the form

The in-crowd algorithm is a numerical method for solving basis pursuit denoising quickly; faster than any other algorithm for large, sparse problems. This algorithm is an active set method, which minimizes iteratively sub-problems of the global basis pursuit denoising:

This article is about Compressed sensing in speech signals.

In applied mathematics, k-SVD is a dictionary learning algorithm for creating a dictionary for sparse representations, via a singular value decomposition approach. k-SVD is a generalization of the k-means clustering method, and it works by iteratively alternating between sparse coding the input data based on the current dictionary, and updating the atoms in the dictionary to better fit the data. It is structurally related to the expectation maximization (EM) algorithm. k-SVD can be found widely in use in applications such as image processing, audio processing, biology, and document analysis.

Proximal gradientmethods for learning is an area of research in optimization and statistical learning theory which studies algorithms for a general class of convex regularization problems where the regularization penalty may not be differentiable. One such example is regularization of the form

In machine learning, Manifold regularization is a technique for using the shape of a dataset to constrain the functions that should be learned on that dataset. In many machine learning problems, the data to be learned do not cover the entire input space. For example, a facial recognition system may not need to classify any possible image, but only the subset of images that contain faces. The technique of manifold learning assumes that the relevant subset of data comes from a manifold, a mathematical structure with useful properties. The technique also assumes that the function to be learned is smooth: data with different labels are not likely to be close together, and so the labeling function should not change quickly in areas where there are likely to be many data points. Because of this assumption, a manifold regularization algorithm can use unlabeled data to inform where the learned function is allowed to change quickly and where it is not, using an extension of the technique of Tikhonov regularization. Manifold regularization algorithms can extend supervised learning algorithms in semi-supervised learning and transductive learning settings, where unlabeled data are available. The technique has been used for applications including medical imaging, geographical imaging, and object recognition.

Sparse coding is a representation learning method which aims at finding a sparse representation of the input data in the form of a linear combination of basic elements as well as those basic elements themselves. These elements are called atoms and they compose a dictionary. Atoms in the dictionary are not required to be orthogonal, and they may be an over-complete spanning set. This problem setup also allows the dimensionality of the signals being represented to be higher than the one of the signals being observed. The above two properties lead to having seemingly redundant atoms that allow multiple representations of the same signal but also provide an improvement in sparsity and flexibility of the representation.

Structured sparsity regularization is a class of methods, and an area of research in statistical learning theory, that extend and generalize sparsity regularization learning methods. Both sparsity and structured sparsity regularization methods seek to exploit the assumption that the output variable to be learned can be described by a reduced number of variables in the input space . Sparsity regularization methods focus on selecting the input variables that best describe the output. Structured sparsity regularization methods generalize and extend sparsity regularization methods, by allowing for optimal selection over structures like groups or networks of input variables in .

In compressed sensing, the nullspace property gives necessary and sufficient conditions on the reconstruction of sparse signals using the techniques of -relaxation. The term "nullspace property" originates from Cohen, Dahmen, and DeVore. The nullspace property is often difficult to check in practice, and the restricted isometry property is a more modern condition in the field of compressed sensing.

The convolutional sparse coding paradigm is an extension of the global sparse coding model, in which a redundant dictionary is modeled as a concatenation of circulant matrices. While the global sparsity constraint describes signal as a linear combination of a few atoms in the redundant dictionary , usually expressed as for a sparse vector , the alternative dictionary structure adopted by the convolutional sparse coding model allows the sparsity prior to be applied locally instead of globally: independent patches of are generated by "local" dictionaries operating over stripes of .