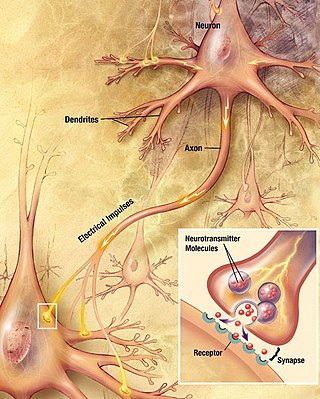

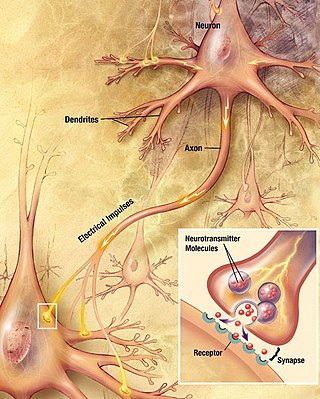

Chemical synapses are biological junctions through which neurons send signals to each other and to non-neuronal cells, such as those in muscles or glands. Chemical synapses allow neurons to form circuits within the central nervous system. They are crucial to the biological computations that underlie perception and thought, and they allow the nervous system to connect to and control other systems of the body.

In neuroscience, long-term potentiation (LTP) is a persistent strengthening of synapses based on recent patterns of activity, namely patterns of synaptic activity that produce a long-lasting increase in signal transmission between two neurons. The opposite of LTP is long-term depression (LTD), which produces a long-lasting decrease in synaptic strength.

Hebbian theory is a neuropsychological theory claiming that an increase in synaptic efficacy arises from a presynaptic cell's repeated and persistent stimulation of a postsynaptic cell. It is an attempt to explain synaptic plasticity, the adaptation of brain neurons during the learning process. It was introduced by Donald Hebb in his 1949 book *The Organization of Behavior*. The theory is also called Hebb's rule, Hebb's postulate, and cell assembly theory. Hebb states it as follows:

> Let us assume that the persistence or repetition of a reverberatory activity tends to induce lasting cellular changes that add to its stability. ... When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased.
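Hebb stated his postulate qualitatively. In computational models it is often formalized as a weight change proportional to the product of presynaptic and postsynaptic activity, Δw = η·x·y. The sketch below assumes that simple rate-based form and a hypothetical learning rate `eta`; it is one common reading of the postulate, not Hebb's own formulation.

```python
def hebbian_update(weights, pre, post, eta=0.1):
    """One rate-based Hebbian step: w_i <- w_i + eta * x_i * y.

    weights: synaptic weights, one per presynaptic input
    pre:     presynaptic activities x_i (same length as weights)
    post:    postsynaptic activity y
    eta:     learning rate (hypothetical value for illustration)
    """
    if len(weights) != len(pre):
        raise ValueError("weights and pre must have the same length")
    return [w + eta * x * post for w, x in zip(weights, pre)]


# Input 0 repeatedly takes part in firing the postsynaptic cell; input 1 stays silent.
w = [0.5, 0.5]
for _ in range(10):
    w = hebbian_update(w, pre=[1.0, 0.0], post=1.0)
print(w)  # input 0 has strengthened; input 1 is unchanged
```

Because this pure product rule only ever increases weights for correlated activity, it is unstable on its own; models usually add normalization or a threshold to keep weights bounded.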

In neuroscience, synaptic plasticity is the ability of synapses to strengthen or weaken over time, in response to increases or decreases in their activity. Since memories are postulated to be represented by vastly interconnected neural circuits in the brain, synaptic plasticity is one of the important neurochemical foundations of learning and memory.

An inhibitory postsynaptic potential (IPSP) is a kind of synaptic potential that makes a postsynaptic neuron less likely to generate an action potential. The opposite of an inhibitory postsynaptic potential is an excitatory postsynaptic potential (EPSP), which is a synaptic potential that makes a postsynaptic neuron more likely to generate an action potential. IPSPs can take place at all chemical synapses, which use the secretion of neurotransmitters to create cell-to-cell signalling. EPSPs and IPSPs compete with each other at numerous synapses of a neuron. This determines whether an action potential occurring at the presynaptic terminal produces an action potential at the postsynaptic membrane. Some common neurotransmitters involved in IPSPs are GABA and glycine.
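The competition between EPSPs and IPSPs can be illustrated with a deliberately simplified model in which each synaptic potential adds a signed voltage deflection to the resting potential, and the neuron fires if the sum reaches threshold. The resting potential (-70 mV), threshold (-55 mV), and deflection sizes are illustrative assumptions; real summation is neither instantaneous nor purely linear.

```python
RESTING_MV = -70.0    # assumed resting membrane potential
THRESHOLD_MV = -55.0  # assumed spike threshold


def fires(epsps_mv, ipsps_mv):
    """Linearly sum depolarizing EPSPs and hyperpolarizing IPSPs onto rest.

    epsps_mv: EPSP amplitudes in mV (depolarizing)
    ipsps_mv: IPSP magnitudes in mV (subtracted, i.e. hyperpolarizing)
    Returns (reaches_threshold, summed_potential_mv).
    """
    v = RESTING_MV + sum(epsps_mv) - sum(ipsps_mv)
    return v >= THRESHOLD_MV, v


print(fires([4, 4, 4, 4], []))   # (True, -54.0): enough excitation
print(fires([4, 4, 4, 4], [3]))  # (False, -57.0): one IPSP prevents firing
```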

In neurophysiology, long-term depression (LTD) is an activity-dependent reduction in the efficacy of neuronal synapses lasting hours or longer following a long patterned stimulus. LTD occurs in many areas of the CNS with varying mechanisms depending upon brain region and developmental progress.

In neuroscience, a silent synapse is an excitatory glutamatergic synapse whose postsynaptic membrane contains NMDA-type glutamate receptors but no AMPA-type glutamate receptors. These synapses are called "silent" because normal AMPA receptor-mediated signaling is absent, which renders the synapse inactive under typical conditions. Silent synapses are typically considered to be immature glutamatergic synapses, and the relative number of silent synapses decreases as the brain matures. However, recent research on hippocampal silent synapses shows that, while silence may indeed be a developmental landmark in the formation of a synapse, synapses can also be "silenced" by activity even after they have acquired AMPA receptors. Silence may therefore be a state that a synapse can visit many times during its lifetime.
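One reason an NMDA-only synapse is silent at rest is the voltage-dependent Mg2+ block of NMDA receptors. The sketch below uses a commonly cited empirical fit (Jahr and Stevens) for the unblocked fraction, B(V) = 1 / (1 + [Mg2+]/3.57 · exp(-0.062·V)), with V in mV and [Mg2+] in mM; the 1 mM default is an assumed physiological value. Without AMPA receptors to depolarize the membrane, the NMDA receptors stay almost fully blocked.

```python
import math


def nmda_unblocked_fraction(v_mv, mg_mm=1.0):
    """Fraction of NMDA receptor conductance not blocked by extracellular Mg2+.

    Empirical Jahr-Stevens fit; v_mv is the membrane potential in mV and
    mg_mm the extracellular Mg2+ concentration in mM (1.0 is an assumption).
    """
    return 1.0 / (1.0 + (mg_mm / 3.57) * math.exp(-0.062 * v_mv))


for v in (-70, -40, 0):
    print(v, round(nmda_unblocked_fraction(v), 3))
```

With these parameters, roughly 4% of the NMDA conductance is available at -70 mV versus roughly 78% at 0 mV, which is why NMDA receptors on their own contribute little current at resting potential.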

A neural circuit is a population of neurons interconnected by synapses to carry out a specific function when activated. Multiple neural circuits interconnect with one another to form large-scale brain networks.

Schaffer collaterals are axon collaterals given off by CA3 pyramidal cells in the hippocampus. These collaterals project to area CA1 of the hippocampus and are an integral part of memory formation, of the emotional network of the Papez circuit, and of the hippocampal trisynaptic loop. The Schaffer collateral synapse onto CA1 is one of the most studied synapses in the world, and the collaterals are named after the Hungarian anatomist-neurologist Károly Schaffer.

In neuroscience, Golgi cells are the most abundant inhibitory interneurons found within the granular layer of the cerebellum, where they occur at various depths. The Golgi cell is essential for controlling the activity of the granular layer. Golgi cells were first identified as inhibitory in 1964, and their circuit was also the first example of an inhibitory feedback network in which the inhibitory interneuron was identified anatomically. Golgi cells produce a wide lateral inhibition that reaches beyond the afferent synaptic field, and they inhibit granule cells via feed-forward and feed-back inhibitory loops. They synapse onto the dendrites of granule cells and unipolar brush cells. They receive excitatory input from mossy fibers, which also synapse on granule cells, and from parallel fibers, which are long granule cell axons. This circuitry therefore allows for both feed-forward and feed-back inhibition of granule cells.
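The two inhibitory loops can be sketched with a toy firing-rate model: mossy-fiber input drives both granule cells and the Golgi cell (feed-forward path), granule cells drive the Golgi cell through parallel fibers (feed-back path), and the Golgi cell subtracts from the granule-cell drive. All weights, the time step, and the rectified-linear rate functions are hypothetical choices for illustration, not measured cerebellar parameters.

```python
def relu(x):
    """Rectified-linear rate function: rates cannot be negative."""
    return max(0.0, x)


def granule_rate(mossy, w_ff=0.5, w_fb=0.5, w_inh=1.0, dt=0.1, steps=500):
    """Approximate steady-state granule-cell rate in a toy rate model (Euler steps).

    mossy: mossy-fiber input rate (arbitrary units)
    w_ff:  mossy fiber -> Golgi cell weight (feed-forward inhibition path)
    w_fb:  granule cell (parallel fiber) -> Golgi cell weight (feed-back path)
    w_inh: Golgi cell -> granule cell inhibitory weight
    """
    g = 0.0  # granule-cell rate
    z = 0.0  # Golgi-cell rate
    for _ in range(steps):
        dg = -g + relu(mossy - w_inh * z)
        dz = -z + relu(w_ff * mossy + w_fb * g)
        g += dt * dg
        z += dt * dz
    return g


print(granule_rate(1.0, w_inh=0.0))  # no Golgi inhibition
print(granule_rate(1.0))             # with feed-forward and feed-back inhibition
```

While all rates stay positive, the steady state is g = mossy·(1 - w_inh·w_ff) / (1 + w_inh·w_fb), which separates the two paths: feed-forward inhibition scales down the drive itself, while feed-back inhibition grows with granule-cell activity.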

Bursting, or burst firing, is an extremely diverse general phenomenon of the activation patterns of neurons in the central nervous system and spinal cord, in which periods of rapid action potential spiking are followed by quiescent periods much longer than typical inter-spike intervals. Bursting is thought to be important in the operation of robust central pattern generators, in the transmission of neural codes, and in some neuropathologies such as epilepsy. Bursting has been studied extensively since the beginnings of cellular neuroscience, both in its own right and for its role in other neural phenomena, and its study is closely tied to the fields of neural synchronization, neural coding, plasticity, and attention.
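Because a burst is defined by short inter-spike intervals (ISIs) separated by much longer quiescent periods, a simple first-pass way to find bursts in a recorded spike train is an ISI-threshold detector. The sketch assumes a hypothetical maximum within-burst ISI of 10 ms and a minimum of 3 spikes per burst; published burst detectors use many different, often adaptive, criteria.

```python
def detect_bursts(spike_times_ms, max_isi_ms=10.0, min_spikes=3):
    """Group spikes into bursts by an ISI threshold.

    Consecutive spikes no more than max_isi_ms apart belong to the same run;
    runs containing at least min_spikes spikes are reported as bursts.
    Returns a list of (start_time, end_time, n_spikes) tuples.
    """
    bursts = []
    run = []
    for t in sorted(spike_times_ms):
        if run and t - run[-1] > max_isi_ms:
            # A long gap closes the current run.
            if len(run) >= min_spikes:
                bursts.append((run[0], run[-1], len(run)))
            run = []
        run.append(t)
    if len(run) >= min_spikes:
        bursts.append((run[0], run[-1], len(run)))
    return bursts


# Two runs of rapid spikes separated by long quiet periods, plus an isolated spike.
spikes = [0, 4, 8, 12, 200, 203, 207, 400]
print(detect_bursts(spikes))  # [(0, 12, 4), (200, 207, 3)]
```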

BCM theory, BCM synaptic modification, or the BCM rule, named for Elie Bienenstock, Leon Cooper, and Paul Munro, is a physical theory of learning in the visual cortex developed in 1981. The BCM model proposes a sliding threshold for the induction of long-term potentiation (LTP) or long-term depression (LTD), and states that synaptic plasticity is stabilized by a dynamic adaptation of the time-averaged postsynaptic activity. According to the BCM model, when a presynaptic neuron fires, the postsynaptic neuron will tend to undergo LTP if it is in a high-activity state, or LTD if it is in a lower-activity state. This theory is often used to explain how cortical neurons can undergo either LTP or LTD depending on the conditioning stimulus protocol applied to presynaptic neurons.
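One widely used form of the BCM rule writes the weight change as Δw_i = η·x_i·y·(y - θ), where the modification threshold θ slides toward a running average of y². Postsynaptic activity above θ gives LTP and activity below it gives LTD. The sketch assumes a linear neuron y = w·x, a discrete-time update, and hypothetical rates `eta` and `theta_rate`; it is not the exact formulation of the 1981 paper.

```python
def bcm_step(w, x, theta, eta=0.01, theta_rate=0.1):
    """One discrete-time step of a BCM-style rule for a linear neuron.

    w, x:       weights and presynaptic rates (equal-length lists)
    theta:      current sliding modification threshold
    eta:        learning rate (hypothetical)
    theta_rate: how fast theta tracks y**2 (hypothetical)
    Returns (new_w, new_theta, y).
    """
    y = sum(wi * xi for wi, xi in zip(w, x))  # postsynaptic activity
    phi = y * (y - theta)                     # > 0 gives LTP, < 0 gives LTD
    new_w = [wi + eta * xi * phi for wi, xi in zip(w, x)]
    new_theta = theta + theta_rate * (y * y - theta)  # running average of y**2
    return new_w, new_theta, y


x = [1.0, 0.5]
w = [0.5, 0.5]  # gives y = 0.75
w_ltp, _, _ = bcm_step(w, x, theta=0.2)  # y above threshold: both weights grow
w_ltd, _, _ = bcm_step(w, x, theta=2.0)  # y below threshold: both weights shrink
```

The same input potentiates the synapses when θ is low and depresses them when θ is high, for example after a period of strong postsynaptic activity has pushed θ up; this is the sliding threshold that keeps the rule from running away.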

Neural backpropagation is the phenomenon in which, after the action potential of a neuron creates a voltage spike down the axon, another impulse is generated from the soma and propagates toward the apical portions of the dendritic arbor or dendrites. In addition to active backpropagation of the action potential, there is also passive electrotonic spread. While there is ample evidence for the existence of backpropagating action potentials, their function and the extent to which they invade the most distal dendrites remain highly controversial.
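Passive electrotonic spread can be quantified with cable theory. In the idealized steady state of an infinite, uniform passive cable, a voltage held at V0 at one point decays with distance as V(x) = V0·exp(-x/λ), where λ is the length constant. The values below (a 100 mV depolarization at the soma and λ = 1000 µm) are hypothetical; real dendrites are tapered, branched, and contain active channels, and fast transients such as spikes attenuate more than this steady-state formula predicts.

```python
import math


def passive_attenuation(v0_mv, distance_um, length_constant_um):
    """Steady-state voltage at a distance along an infinite passive cable:
    V(x) = V0 * exp(-x / lambda)."""
    return v0_mv * math.exp(-distance_um / length_constant_um)


# Hypothetical values: 100 mV depolarization at the soma, lambda = 1000 um.
for x in (0, 250, 500, 1000):
    print(x, round(passive_attenuation(100.0, x, 1000.0), 1))
```

At one length constant from the source, the passively spread voltage has fallen to 1/e (about 37%) of its initial value, which is why active channels are needed for spikes to reach distal dendrites largely intact.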

Coincidence detection is a neuronal process in which a neural circuit encodes information by detecting the occurrence of temporally close but spatially distributed input signals. Coincidence detectors influence neuronal information processing by reducing temporal jitter and spontaneous activity, allowing the creation of variable associations between separate neural events in memory. The study of coincidence detectors has been crucial in neuroscience with regard to understanding the formation of computational maps in the brain.
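At its simplest, a coincidence detector responds only when inputs from separate sources arrive within a short time window of each other, so isolated or uncorrelated spikes produce no output. The sketch assumes two input spike trains and a hypothetical 0.5 ms window; biological detectors implement the window through membrane time constants and receptor kinetics rather than an explicit comparison.

```python
def coincidences(train_a_ms, train_b_ms, window_ms=0.5):
    """Return the times of spikes in train A that have at least one
    partner spike in train B within +/- window_ms."""
    return [ta for ta in train_a_ms
            if any(abs(ta - tb) <= window_ms for tb in train_b_ms)]


a = [1.0, 5.0, 9.0]
b = [1.3, 7.0, 9.4]
print(coincidences(a, b))  # [1.0, 9.0]: the spike at 5.0 ms has no partner
```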

In neurophysiology, a dendritic spike is an action potential generated in the dendrite of a neuron. Dendrites are branched extensions of a neuron. They receive electrical signals emitted from projecting neurons and transfer these signals to the cell body, or soma. Dendritic signaling has traditionally been viewed as a passive mode of electrical signaling. Unlike axons, which can generate signals through action potentials, dendrites were believed to propagate electrical signals only by passive physical means, depending on properties such as conductance, length, and cross-sectional area. However, the existence of dendritic spikes was proposed and demonstrated by W. Alden Spencer, Eric Kandel, Rodolfo Llinás and coworkers in the 1960s, and a large body of evidence now makes it clear that dendrites are active neuronal structures. Dendrites contain voltage-gated ion channels that give them the ability to generate action potentials. Dendritic spikes have been recorded in numerous types of neurons in the brain and are thought to have great implications for neuronal communication, memory, and learning. They are one of the major factors in long-term potentiation.

Nonsynaptic plasticity is a form of neuroplasticity that involves modification of ion channel function in the axon, dendrites, and cell body that results in specific changes in the integration of excitatory postsynaptic potentials and inhibitory postsynaptic potentials. Nonsynaptic plasticity is a modification of the intrinsic excitability of the neuron. It interacts with synaptic plasticity, but it is considered a separate entity from synaptic plasticity. Intrinsic modification of the electrical properties of neurons plays a role in many aspects of plasticity, from homeostatic plasticity to learning and memory itself. Nonsynaptic plasticity affects synaptic integration, subthreshold propagation, spike generation, and other fundamental mechanisms of neurons at the cellular level. These individual neuronal alterations can result in changes in higher brain function, especially learning and memory. However, because nonsynaptic plasticity is an emerging field of neuroscience, much of what is known about it is uncertain and requires further investigation to better define its role in brain function and behavior.
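A change in intrinsic excitability can be illustrated with a leaky integrate-and-fire neuron in which the synaptic drive is held fixed and only an intrinsic property, here the spike threshold, is changed. The model and every parameter value are illustrative assumptions; in real neurons nonsynaptic plasticity acts through ion-channel properties that a single threshold only caricatures.

```python
def lif_spike_count(drive_mv, threshold_mv, tau_ms=20.0, dt_ms=0.1,
                    duration_ms=500.0):
    """Count spikes of a leaky integrate-and-fire neuron under constant drive.

    Voltages are relative to rest (rest = reset = 0 mV). drive_mv is the
    steady-state depolarization the input would produce without spiking.
    All values are hypothetical.
    """
    v = 0.0
    spikes = 0
    for _ in range(int(round(duration_ms / dt_ms))):
        v += dt_ms / tau_ms * (-v + drive_mv)  # leaky integration toward drive
        if v >= threshold_mv:
            spikes += 1
            v = 0.0  # reset after a spike
    return spikes


same_drive = 20.0
before = lif_spike_count(same_drive, threshold_mv=18.0)
after = lif_spike_count(same_drive, threshold_mv=15.0)  # intrinsic change only
print(before, after)  # the lower threshold yields more spikes for the same input
```

The synaptic input is identical in both runs; only the neuron's own excitability differs, which is the distinction the paragraph draws between nonsynaptic and synaptic plasticity.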

A Bayesian Confidence Propagation Neural Network (BCPNN) is an artificial neural network inspired by Bayes' theorem, which regards neural computation and processing as probabilistic inference. Neural unit activations represent probability ("confidence") in the presence of input features or categories, synaptic weights are based on estimated correlations and the spread of activation corresponds to calculating posterior probabilities. It was originally proposed by Anders Lansner and Örjan Ekeberg at KTH Royal Institute of Technology. This probabilistic neural network model can also be run in generative mode to produce spontaneous activations and temporal sequences.
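In the usual BCPNN formulation, activation probabilities are estimated from data and the network parameters are log-probabilities: the bias of unit j is β_j = log P(j), and the weight between units i and j is w_ij = log[P(i,j) / (P(i)·P(j))]. Independent units therefore get a weight near zero, co-active units a positive weight, and mutually exclusive units a negative one. The sketch estimates these from binary patterns by simple counting; the small constant `eps` that avoids log(0) is an assumption, and the incremental, spiking, and modular variants of BCPNN are not shown.

```python
import math


def bcpnn_params(patterns, eps=1e-6):
    """Estimate BCPNN-style biases and weights from binary (0/1) patterns.

    patterns: list of equal-length lists of 0/1 unit activations
    Returns (bias, weights) with bias[j] = log P(j) and
    weights[i][j] = log(P(i, j) / (P(i) * P(j))), using eps to avoid log(0).
    """
    n = len(patterns)
    m = len(patterns[0])
    p = [sum(pat[i] for pat in patterns) / n for i in range(m)]
    bias = [math.log(p_i + eps) for p_i in p]
    weights = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(m):
            p_ij = sum(pat[i] * pat[j] for pat in patterns) / n
            weights[i][j] = math.log((p_ij + eps) / ((p[i] + eps) * (p[j] + eps)))
    return bias, weights


# Units 0 and 1 always co-occur; unit 2 is active exactly when they are not.
data = [[1, 1, 0], [1, 1, 0], [0, 0, 1], [0, 0, 1]]
bias, w = bcpnn_params(data)
print(round(w[0][1], 3), w[0][2] < 0)
```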

In neuroscience, synaptic scaling is a form of homeostatic plasticity in which the brain responds to chronically elevated activity in a neural circuit with negative feedback, allowing individual neurons to reduce their overall action potential firing rate. Whereas Hebbian plasticity mechanisms modify synaptic connections selectively, synaptic scaling normalizes all of a neuron's synaptic connections by decreasing the strength of each synapse by the same factor, so that the relative weighting of the synapses is preserved.
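The defining property of synaptic scaling, that every synapse is multiplied by the same factor so the ratios between weights are preserved, can be shown directly. The sketch assumes, as a simplification, that activity should be brought from its current level to a hypothetical target in a single multiplicative step; real scaling unfolds slowly and is not one instantaneous update.

```python
def scale_synapses(weights, activity, target_activity):
    """Multiply every weight by the same factor target/activity
    (an idealized, one-step version of multiplicative synaptic scaling)."""
    if activity <= 0:
        raise ValueError("activity must be positive")
    factor = target_activity / activity
    return [w * factor for w in weights]


w = [0.2, 0.6, 1.2]
scaled = scale_synapses(w, activity=20.0, target_activity=10.0)  # activity too high
print(scaled)  # every weight halved; the 1:3:6 ratios are unchanged
```

A Hebbian update, by contrast, would change only the weights of co-active inputs and so alter their relative weighting.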

Homosynaptic plasticity is one type of synaptic plasticity. Homosynaptic plasticity is input-specific: changes in synaptic strength occur only at the synapses that were specifically stimulated by the presynaptic cell. The effect of the presynaptic signal is therefore localized.

The Tempotron is a supervised synaptic learning algorithm that is applied when information is encoded in spatiotemporal spiking patterns. It is an advancement of the perceptron, which does not incorporate a spike-timing framework.