In the mathematical field of representation theory, group representations describe abstract groups in terms of bijective linear transformations of a vector space to itself; in particular, they can be used to represent group elements as invertible matrices so that the group operation can be represented by matrix multiplication.
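As a minimal sketch of this idea, the following Python snippet represents the symmetric group S₃ by permutation matrices and checks that composing group elements corresponds to multiplying their matrices. The choice of group and all function names are illustrative assumptions, not taken from the text above.

```python
from itertools import permutations

def compose(p, q):
    """Return the permutation p∘q (apply q first, then p)."""
    return tuple(p[q[i]] for i in range(len(p)))

def perm_matrix(p):
    """Matrix M with M[i][j] = 1 when p sends j to i, so M maps e_j to e_{p(j)}."""
    n = len(p)
    return [[1 if p[j] == i else 0 for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def is_homomorphism(n):
    """Check that perm_matrix(g∘h) == perm_matrix(g) · perm_matrix(h) on all of S_n."""
    group = list(permutations(range(n)))
    return all(
        perm_matrix(compose(g, h)) == matmul(perm_matrix(g), perm_matrix(h))
        for g in group
        for h in group
    )
```

Calling `is_homomorphism(3)` runs the check over all 36 pairs of elements of S₃.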

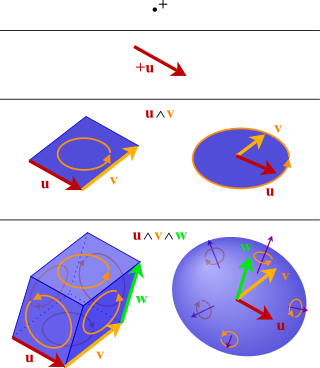

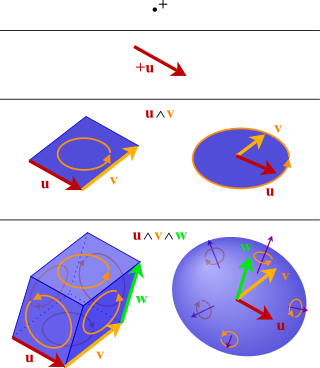

In mathematics, the exterior algebra, or Grassmann algebra, named after Hermann Grassmann, is an algebra that uses the exterior product or wedge product as its multiplication. The exterior product or wedge product of vectors is an algebraic construction used in geometry to study areas, volumes, and their higher-dimensional analogues. The exterior product of two vectors u and v, denoted by u ∧ v, is called a bivector and lives in a space called the exterior square, a vector space that is distinct from the original space of vectors. The magnitude of u ∧ v can be interpreted as the area of the parallelogram with sides u and v, which in three dimensions can also be computed using the cross product of the two vectors. More generally, all parallel plane surfaces with the same orientation and area have the same bivector as a measure of their oriented area. Like the cross product, the exterior product is anticommutative, meaning that u ∧ v = −(v ∧ u) for all vectors u and v; unlike the cross product, however, the exterior product is associative.
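The relationship between the bivector and the cross product can be illustrated with a short Python sketch. It assumes vectors are given by their coordinates in a fixed orthonormal basis and stores the bivector of two vectors u and v by its components u_i v_j − u_j v_i for i < j; the function names are hypothetical.

```python
import math
from itertools import combinations

def wedge(u, v):
    """Components (u∧v)_{ij} = u_i v_j - u_j v_i for index pairs i < j."""
    return {(i, j): u[i] * v[j] - u[j] * v[i]
            for i, j in combinations(range(len(u)), 2)}

def magnitude(bivector):
    """Euclidean norm of the component list, i.e. the parallelogram area."""
    return math.sqrt(sum(c * c for c in bivector.values()))

def cross(u, v):
    """Ordinary cross product in three dimensions."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

u, v = (1, 2, 3), (4, 5, 6)
area_from_wedge = magnitude(wedge(u, v))
area_from_cross = math.sqrt(sum(c * c for c in cross(u, v)))
```

Here both areas agree, and swapping the arguments of `wedge` flips the sign of every component, which is the anticommutativity described above.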

In mathematics, anticommutativity is a specific property of some non-commutative mathematical operations. Swapping the position of two arguments of an antisymmetric operation yields a result which is the inverse of the result with unswapped arguments. The notion of inverse refers to a group structure on the operation's codomain, possibly with another operation. Subtraction is an anticommutative operation because commuting the operands of a − b gives b − a = −(a − b); for example, 2 − 10 = −(10 − 2) = −8. Another prominent example of an anticommutative operation is the Lie bracket.
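Both examples can be checked numerically. The sketch below takes the matrix commutator [A, B] = AB − BA as a concrete Lie bracket; that choice, the sample matrices, and the helper names are assumptions made for illustration.

```python
def matmul(a, b):
    """Product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def commutator(a, b):
    """Matrix commutator [a, b] = ab - ba."""
    ab, ba = matmul(a, b), matmul(b, a)
    n = len(a)
    return [[ab[i][j] - ba[i][j] for j in range(n)] for i in range(n)]

def negate(m):
    """Entrywise negation of a matrix."""
    return [[-x for x in row] for row in m]

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]

subtraction_ok = (2 - 10) == -(10 - 2)                        # both sides are -8
bracket_ok = commutator(A, B) == negate(commutator(B, A))     # [A, B] = -[B, A]
```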

Second quantization, also referred to as occupation number representation, is a formalism used to describe and analyze quantum many-body systems. In quantum field theory, it is known as canonical quantization, in which the fields are thought of as field operators, in a manner similar to how the physical quantities are thought of as operators in first quantization. The key ideas of this method were introduced in 1927 by Paul Dirac, and were later developed, most notably, by Pascual Jordan and Vladimir Fock. In this approach, the quantum many-body states are represented in the Fock state basis, whose states are constructed by filling up each single-particle state with a certain number of identical particles. The second quantization formalism introduces the creation and annihilation operators to construct and handle the Fock states, providing useful tools for the study of quantum many-body theory.
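To make the occupation number picture concrete, here is a small Python sketch of creation and annihilation operators acting on Fock states. It assumes bosons, for which a†|n⟩ = √(n+1)|n+1⟩ and a|n⟩ = √n|n−1⟩ in each mode; fermions would additionally need sign factors and occupations limited to 0 or 1. The representation of a state as a dictionary from occupation tuples to amplitudes is a choice made here for illustration.

```python
import math

def create(state, mode):
    """Apply the bosonic creation operator for `mode` to a state."""
    out = {}
    for occ, amp in state.items():
        new = list(occ)
        new[mode] += 1
        key = tuple(new)
        out[key] = out.get(key, 0.0) + amp * math.sqrt(occ[mode] + 1)
    return out

def annihilate(state, mode):
    """Apply the bosonic annihilation operator for `mode` to a state."""
    out = {}
    for occ, amp in state.items():
        if occ[mode] == 0:
            continue  # annihilating an empty mode gives the zero vector
        new = list(occ)
        new[mode] -= 1
        key = tuple(new)
        out[key] = out.get(key, 0.0) + amp * math.sqrt(occ[mode])
    return out

vacuum = {(0, 0): 1.0}                         # two modes, no particles
two_in_mode_0 = create(create(vacuum, 0), 0)   # unnormalized state with occupation (2, 0)
counted = create(annihilate(two_in_mode_0, 0), 0)  # number operator applied to it
```

Applying annihilation and then creation on the same mode multiplies the state by the occupation number of that mode, here 2.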

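In mathematics, a bilinear form is a bilinear map V × V → K on a vector space V over a field K. In other words, a bilinear form is a function B : V × V → K that is linear in each argument separately: B(u + v, w) = B(u, w) + B(v, w) and B(λu, v) = λB(u, v) in the first argument, and B(u, v + w) = B(u, v) + B(u, w) and B(u, λv) = λB(u, v) in the second, for all u, v, w in V and all λ in K.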

In mathematics and theoretical physics, a tensor is antisymmetric on an index subset if it alternates sign (+/−) when any two indices of the subset are interchanged. The index subset must generally either be all covariant or all contravariant.
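For the simplest case of a tensor with two indices, stored as a square array, the sign-alternation condition reads T[i][j] = −T[j][i]. The sketch below, with hypothetical helper names, extracts the antisymmetric part (T[i][j] − T[j][i]) / 2 of an arbitrary array and checks the condition on it.

```python
from fractions import Fraction

def antisymmetric_part(t):
    """Return A with A[i][j] = (t[i][j] - t[j][i]) / 2, using exact fractions."""
    n = len(t)
    return [[Fraction(t[i][j] - t[j][i], 2) for j in range(n)] for i in range(n)]

def is_antisymmetric(t):
    """True when interchanging the two indices flips the sign of every component."""
    n = len(t)
    return all(t[i][j] == -t[j][i] for i in range(n) for j in range(n))

T = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
A = antisymmetric_part(T)
```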

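In abstract algebra and multilinear algebra, a multilinear form on a vector space V over a field K is a map f : V × ⋯ × V → K (with k factors of V) that is separately K-linear in each of its k arguments.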

In mathematics, a sesquilinear form is a generalization of a bilinear form that, in turn, is a generalization of the concept of the dot product of Euclidean space. A bilinear form is linear in each of its arguments, but a sesquilinear form allows one of the arguments to be "twisted" in a semilinear manner; hence the name, which originates from the Latin numerical prefix sesqui- meaning "one and a half". The basic concept of the dot product – producing a scalar from a pair of vectors – can be generalized by allowing a broader range of scalar values and, perhaps simultaneously, by widening the definition of a vector.
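A familiar instance is the standard Hermitian form on complex coordinate vectors. The sketch below assumes the convention in which the form is conjugate-linear in the first argument and linear in the second; the opposite convention is also in common use. The sample vectors are arbitrary.

```python
def form(u, v):
    """Sum of conj(u_i) * v_i: conjugate-linear in u, linear in v."""
    return sum(x.conjugate() * y for x, y in zip(u, v))

def scale(a, u):
    """Multiply every coordinate of u by the scalar a."""
    return [a * x for x in u]

u = [1 + 2j, 3 - 1j]
v = [2 - 1j, 1j]
a = 2 + 3j

twisted = form(scale(a, u), v)   # equals conj(a) * form(u, v)
linear = form(u, scale(a, v))    # equals a * form(u, v)
```

A scalar pulled out of the first argument comes out conjugated, which is the "half" in "one and a half" linear.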

In mathematics, a symmetric bilinear form on a vector space is a bilinear map from two copies of the vector space to the field of scalars such that the order of the two vectors does not affect the value of the map. In other words, it is a bilinear function B that maps every pair (u, v) of elements of the vector space V to the underlying field such that B(u, v) = B(v, u) for every u and v in V. Such forms are also referred to more briefly as just symmetric forms when "bilinear" is understood.
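On a finite-dimensional space with a chosen basis, a bilinear form can be written as B(u, v) = Σ u_i a_ij v_j for a matrix a, and the form is symmetric exactly when the matrix is. The following sketch illustrates this with two hypothetical 2 × 2 matrices, one symmetric and one not.

```python
def bilinear(a, u, v):
    """B(u, v) = sum over i, j of u[i] * a[i][j] * v[j]."""
    return sum(u[i] * a[i][j] * v[j]
               for i in range(len(u)) for j in range(len(v)))

def is_symmetric_matrix(a):
    """True when a[i][j] == a[j][i] for all index pairs."""
    n = len(a)
    return all(a[i][j] == a[j][i] for i in range(n) for j in range(n))

S = [[2, 1], [1, 3]]     # symmetric matrix, so B(u, v) == B(v, u)
N = [[0, 1], [-1, 0]]    # not symmetric; here B(u, v) == -B(v, u)
u, v, w = (1, 2), (3, -1), (0, 5)
```

With these values, `bilinear(S, u, v)` and `bilinear(S, v, u)` coincide, whereas `bilinear(N, u, v)` and `bilinear(N, v, u)` differ in sign.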

Symmetry occurs not only in geometry, but also in other branches of mathematics. Symmetry is a type of invariance: the property that a mathematical object remains unchanged under a set of operations or transformations.

In mathematics, Schur polynomials, named after Issai Schur, are certain symmetric polynomials in n variables, indexed by partitions, that generalize the elementary symmetric polynomials and the complete homogeneous symmetric polynomials. In representation theory they are the characters of polynomial irreducible representations of the general linear groups. The Schur polynomials form a linear basis for the space of all symmetric polynomials. Any product of Schur polynomials can be written as a linear combination of Schur polynomials with non-negative integral coefficients; the values of these coefficients are given combinatorially by the Littlewood–Richardson rule. More generally, skew Schur polynomials are associated with pairs of partitions and have similar properties to Schur polynomials.
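The way Schur polynomials generalize the elementary and complete homogeneous symmetric polynomials can be checked numerically. The sketch below evaluates a Schur polynomial at distinct points using the classical bialternant (ratio of determinants) formula, which is not stated in the text above and is brought in here as an assumption; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations

def det(m):
    """Determinant by the Leibniz formula (adequate for small matrices)."""
    n = len(m)
    total = 0
    for p in permutations(range(n)):
        inversions = sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])
        term = -1 if inversions % 2 else 1
        for i in range(n):
            term *= m[i][p[i]]
        total += term
    return total

def schur(partition, xs):
    """Evaluate the Schur polynomial of `partition` at pairwise distinct points xs.

    Requires len(partition) <= len(xs); the partition is padded with zeros.
    """
    n = len(xs)
    lam = list(partition) + [0] * (n - len(partition))
    numerator = det([[x ** (lam[j] + n - 1 - j) for j in range(n)] for x in xs])
    denominator = det([[x ** (n - 1 - j) for j in range(n)] for x in xs])
    return Fraction(numerator, denominator)

xs = (1, 2, 3)
e2 = 1 * 2 + 1 * 3 + 2 * 3                          # elementary symmetric polynomial
h2 = 1 * 1 + 2 * 2 + 3 * 3 + 1 * 2 + 1 * 3 + 2 * 3  # complete homogeneous polynomial
```

At these points the partition (1, 1) reproduces `e2` and the partition (2) reproduces `h2`.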

In mathematics, the algebraic bracket or Nijenhuis–Richardson bracket is a graded Lie algebra structure on the space of alternating multilinear forms of a vector space to itself, introduced by A. Nijenhuis and R. W. Richardson, Jr. It is related to but not the same as the Frölicher–Nijenhuis bracket and the Schouten–Nijenhuis bracket.

In mathematics, more specifically in multilinear algebra, an alternating multilinear map is a multilinear map with all arguments belonging to the same vector space that is zero whenever any pair of arguments is equal. More generally, the vector space may be a module over a commutative ring.
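A standard example is the determinant of three vectors in three-dimensional space, viewed as a function of its rows. The sketch below, with illustrative names and sample vectors, checks that it vanishes when two arguments coincide and changes sign when two arguments are swapped.

```python
def triple(u, v, w):
    """3x3 determinant with rows u, v, w (the scalar triple product u · (v × w))."""
    return (u[0] * (v[1] * w[2] - v[2] * w[1])
            - u[1] * (v[0] * w[2] - v[2] * w[0])
            + u[2] * (v[0] * w[1] - v[1] * w[0]))

u, v, w = (1, 2, 3), (0, 1, 4), (5, 6, 0)

vanishes_on_repeats = (triple(u, u, w) == 0
                       and triple(u, v, v) == 0
                       and triple(u, v, u) == 0)
flips_sign = triple(v, u, w) == -triple(u, v, w)
```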

In quantum mechanics, an antisymmetrizer 𝒜 is a linear operator that makes a wave function of N identical fermions antisymmetric under the exchange of the coordinates of any pair of fermions. After application of 𝒜, the wave function satisfies the Pauli exclusion principle. Since 𝒜 is a projection operator, applying the antisymmetrizer to a wave function that is already totally antisymmetric has no effect: it acts as the identity operator.
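A minimal sketch of the antisymmetrizer, assuming the usual normalization (1/N!) Σ sign(σ) P_σ over all permutations σ of the N coordinates, is given below. Wave functions are modelled as plain Python functions of N numeric coordinates, and the product function `psi` is a made-up example.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial

def sign(perm):
    """Parity of a permutation, computed from its number of inversions."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def antisymmetrize(psi, n):
    """Return the function x -> (1/n!) * sum over σ of sign(σ) * psi(x permuted by σ)."""
    def result(*x):
        total = sum(sign(p) * psi(*(x[i] for i in p))
                    for p in permutations(range(n)))
        return Fraction(total) / factorial(n)
    return result

def psi(x, y, z):
    """A simple product wave function with no particular symmetry."""
    return x * y**2 * z**3

phi = antisymmetrize(psi, 3)          # totally antisymmetric
phi_again = antisymmetrize(phi, 3)    # projection: no further change
```

Here `phi` changes sign when two coordinates are exchanged and vanishes when two coordinates coincide, in line with the Pauli exclusion principle, while `phi_again` takes the same values as `phi`.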

In algebra, a multilinear polynomial is a multivariate polynomial that is linear in each of its variables separately, but not necessarily simultaneously. It is a polynomial in which no variable occurs to a power of 2 or higher; that is, each monomial is a constant times a product of distinct variables. For example, f(x, y, z) = 3xy + 2.5y − 7z is a multilinear polynomial of degree 2, whereas f(x, y, z) = x² + 4y is not multilinear. The degree of a multilinear polynomial is the maximum number of distinct variables occurring in any monomial.
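The two examples above can be tested mechanically. In this sketch a polynomial in (x, y, z) is encoded as a dictionary from exponent tuples to coefficients; the encoding and the function names are assumptions made for illustration.

```python
def is_multilinear(poly):
    """True when no variable appears with exponent 2 or higher in any monomial."""
    return all(e <= 1
               for exps, coeff in poly.items() if coeff != 0
               for e in exps)

def multilinear_degree(poly):
    """Maximum number of distinct variables in any monomial with non-zero coefficient."""
    return max((sum(1 for e in exps if e > 0)
                for exps, coeff in poly.items() if coeff != 0),
               default=0)

f = {(1, 1, 0): 3, (0, 1, 0): 2.5, (0, 0, 1): -7}   # 3xy + 2.5y - 7z
g = {(2, 0, 0): 1, (0, 1, 0): 4}                     # x² + 4y
```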

In mathematics, a function of n variables is symmetric if its value is the same no matter the order of its arguments. For example, a function f of two arguments is a symmetric function if and only if f(x₁, x₂) = f(x₂, x₁) for all x₁ and x₂ such that (x₁, x₂) and (x₂, x₁) are in the domain of f. The most commonly encountered symmetric functions are polynomial functions, which are given by the symmetric polynomials.
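The definition suggests a simple spot check: evaluate the function on every reordering of a few sample points. The sketch below does this for two hypothetical functions of three variables. Passing the check on finitely many points can refute symmetry but does not prove it.

```python
from itertools import permutations

def is_symmetric_on(f, points):
    """Check that f takes the same value on every reordering of each sample point."""
    return all(f(*p) == f(*x) for x in points for p in permutations(x))

def e2(x, y, z):
    """Second elementary symmetric polynomial in three variables."""
    return x * y + x * z + y * z

def weighted(x, y, z):
    """A function whose value depends on the order of its arguments."""
    return x + 2 * y + 3 * z

samples = [(1, 2, 3), (0, -1, 4)]
```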

In algebra and in particular in algebraic combinatorics, a quasisymmetric function is any element in the ring of quasisymmetric functions, which is in turn a subring of the formal power series ring with a countable number of variables. This ring generalizes the ring of symmetric functions. It can be realized as a specific limit of the rings of quasisymmetric polynomials in n variables, as n goes to infinity, and it serves as a universal structure in which relations between quasisymmetric polynomials can be expressed in a way independent of the number n of variables.

A geometric stable distribution or geo-stable distribution is a type of leptokurtic probability distribution. Geometric stable distributions were introduced by Klebanov, L. B., Maniya, G. M., and Melamed, I. A. (1985) in "A problem of Zolotarev and analogs of infinitely divisible and stable distributions in a scheme for summing a random number of random variables". These distributions are analogues of stable distributions for the case when the number of summands is random, independent of the distribution of the summands, and geometrically distributed. The geometric stable distribution may be symmetric or asymmetric. A symmetric geometric stable distribution is also referred to as a Linnik distribution. The Laplace distribution and asymmetric Laplace distribution are special cases of the geometric stable distribution. The Mittag-Leffler distribution is also a special case of a geometric stable distribution.

In mathematics, Ricci calculus constitutes the rules of index notation and manipulation for tensors and tensor fields on a differentiable manifold, with or without a metric tensor or connection. It is also the modern name for what used to be called the absolute differential calculus, developed by Gregorio Ricci-Curbastro in 1887–1896, and subsequently popularized in a paper written with his pupil Tullio Levi-Civita in 1900. Jan Arnoldus Schouten developed the modern notation and formalism for this mathematical framework, and made contributions to the theory, during its applications to general relativity and differential geometry in the early twentieth century.

This is a glossary of representation theory in mathematics.