
In mathematics, the two-sided Laplace transform or bilateral Laplace transform is an integral transform equivalent to probability's moment-generating function. Two-sided Laplace transforms are closely related to the Fourier transform, the Mellin transform, the Z-transform and the ordinary or one-sided Laplace transform. If f(t) is a real- or complex-valued function of the real variable t defined for all real numbers, then the two-sided Laplace transform is defined by the integral

$$\mathcal{B}\{f\}(s) = F(s) = \int_{-\infty}^{\infty} e^{-st} f(t)\,dt.$$
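As a rough numerical check of the definition $F(s)=\int_{-\infty}^{\infty} e^{-st} f(t)\,dt$, the Python sketch below approximates the integral with Simpson's rule on a truncated interval $[-T, T]$ and compares it with the known closed form for the example signal $f(t)=e^{-|t|}$, namely $2/(1-s^2)$ for $-1<s<1$. It also illustrates the link to the moment-generating function: for a probability density $p$, $E[e^{uX}] = \mathcal{B}\{p\}(-u)$. The helper names (`simpson`, `bilateral_laplace`), the truncation length and the example signal are choices made for this illustration only, and real $s$ is assumed throughout.

```python
import math


def simpson(g, a, b, n=20000):
    """Composite Simpson's rule for the integral of g over [a, b]."""
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def bilateral_laplace(f, s, T=40.0, n=20000):
    """Approximate F(s), the integral of exp(-s*t) * f(t) over all real t.

    The infinite range is truncated to [-T, T], so the result is only
    meaningful for real s inside the strip where the integral converges.
    """
    return simpson(lambda t: math.exp(-s * t) * f(t), -T, T, n)


def f(t):
    return math.exp(-abs(t))  # example two-sided signal


def F_exact(s):
    return 2.0 / (1.0 - s * s)  # its transform, valid for -1 < s < 1


def p(x):
    return 0.5 * math.exp(-abs(x))  # Laplace(0, 1) probability density


def mgf_exact(u):
    return 1.0 / (1.0 - u * u)  # E[exp(u*X)] for X ~ Laplace(0, 1), |u| < 1


for s in (-0.5, 0.0, 0.5):
    print(s, bilateral_laplace(f, s), F_exact(s))

# The moment-generating function is the bilateral transform evaluated at -u.
u = 0.3
print(bilateral_laplace(p, -u), mgf_exact(u))
```

The truncation works here only because $e^{-st}e^{-|t|}$ decays exponentially in both directions when $-1<s<1$; outside that strip the truncated integral keeps growing with $T$.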


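The integral is most commonly understood as an improper integral, which converges if and only if both integrals

$$\int_{0}^{\infty} e^{-st} f(t)\,dt, \qquad \int_{-\infty}^{0} e^{-st} f(t)\,dt$$

exist. There seems to be no generally accepted notation for the two-sided transform; the $\mathcal{B}$ used here recalls "bilateral". The two-sided transform used by some authors is

$$\mathcal{T}\{f\}(s) = s\,\mathcal{B}\{f\}(s) = s F(s) = s \int_{-\infty}^{\infty} e^{-st} f(t)\,dt.$$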
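A sketch of the convergence condition, assuming the example signal $f(t)=e^{-|t|}$ and real $s$: each one-sided integral is approximated up to a growing cutoff, and the bilateral value is their sum. For this signal the right half $\int_0^\infty$ has a finite limit only for $s>-1$ and the left half $\int_{-\infty}^0$ only for $s<1$, so the two-sided integral exists only on the strip $-1<s<1$. The function `t_transform` computes the $sF(s)$ variant. All names here (`right_half`, `left_half`, `bilateral`, `t_transform`, `cutoff`) are hypothetical illustration helpers.

```python
import math


def simpson(g, a, b, n=20000):
    """Composite Simpson's rule for the integral of g over [a, b]."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def f(t):
    return math.exp(-abs(t))  # example signal


def right_half(s, cutoff):
    """Integral over [0, cutoff]; tends to 1/(1+s) as cutoff grows iff s > -1."""
    return simpson(lambda t: math.exp(-s * t) * f(t), 0.0, cutoff)


def left_half(s, cutoff):
    """Integral over [-cutoff, 0]; tends to 1/(1-s) as cutoff grows iff s < 1."""
    return simpson(lambda t: math.exp(-s * t) * f(t), -cutoff, 0.0)


def bilateral(s, cutoff=40.0):
    """B{f}(s) as the sum of the two one-sided improper integrals."""
    return right_half(s, cutoff) + left_half(s, cutoff)


def t_transform(s, cutoff=40.0):
    """The variant s * B{f}(s) used by some authors."""
    return s * bilateral(s, cutoff)


# Inside the strip -1 < s < 1 both halves settle as the cutoff grows.
for cutoff in (10.0, 20.0, 40.0):
    print(cutoff, right_half(0.5, cutoff), left_half(0.5, cutoff))

# At s = 1.5 the right half still settles but the left half keeps growing,
# so the two-sided integral does not exist there.
for cutoff in (10.0, 20.0, 40.0):
    print(cutoff, right_half(1.5, cutoff), left_half(1.5, cutoff))

print(bilateral(0.5), t_transform(0.5))
```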

In pure mathematics the argument t can be any variable, and Laplace transforms are used to study how differential operators transform the function.
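One standard example of how a differential operator transforms: if $f$ decays fast enough that the boundary term $\left[e^{-st} f(t)\right]_{-\infty}^{\infty}$ vanishes, integration by parts gives $\mathcal{B}\{f'\}(s) = s\,\mathcal{B}\{f\}(s)$, so differentiation becomes multiplication by $s$. The sketch below checks this numerically for the Gaussian $f(t)=e^{-t^2}$, whose transform $\sqrt{\pi}\,e^{s^2/4}$ exists for every real $s$. The test function, the truncation to $[-20, 20]$ and the helper names are assumptions of this example.

```python
import math


def simpson(g, a, b, n=20000):
    """Composite Simpson's rule for the integral of g over [a, b]."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def bilateral_laplace(f, s, T=20.0):
    """Integral of exp(-s*t) * f(t), truncated to [-T, T]."""
    return simpson(lambda t: math.exp(-s * t) * f(t), -T, T)


def f(t):
    return math.exp(-t * t)  # Gaussian test function


def df(t):
    return -2.0 * t * math.exp(-t * t)  # its derivative f'(t)


for s in (-1.0, 0.5, 2.0):
    lhs = bilateral_laplace(df, s)          # B{f'}(s)
    rhs = s * bilateral_laplace(f, s)       # s * B{f}(s)
    closed = s * math.sqrt(math.pi) * math.exp(s * s / 4)
    print(s, lhs, rhs, closed)
```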

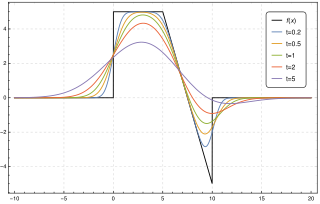

In science and engineering applications, the argument t often represents time (in seconds), and the function f(t) often represents a signal or waveform that varies with time. In these cases, the signals are transformed by filters, which act like mathematical operators but with a restriction: they have to be causal, which means that the output at a given time t cannot depend on the input at any later time. In population ecology, the argument t often represents spatial displacement in a dispersal kernel.
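The causality restriction can be illustrated with a discrete-time sketch; the discretization and the impulse response `h` are hypothetical choices, not taken from the text. A causal filter computes $y[n]=\sum_{k\ge 0} h[k]\,x[n-k]$ from present and past inputs only, so changing future inputs leaves earlier outputs untouched. A centered moving average, by contrast, looks ahead in time and is therefore not causal.

```python
def causal_filter(x, h):
    """y[n] = sum over k >= 0 of h[k] * x[n - k]: present and past inputs only."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, hk in enumerate(h):
            if n - k >= 0:  # inputs before the start of the record are taken as zero
                acc += hk * x[n - k]
        y.append(acc)
    return y


def centered_average(x, w):
    """Non-causal: y[n] averages x[n-w .. n+w], so it looks ahead in time."""
    y = []
    for n in range(len(x)):
        window = x[max(0, n - w): n + w + 1]
        y.append(sum(window) / len(window))
    return y


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x_mod = x[:4] + [50.0, 60.0]  # change only the "future" samples n = 4, 5
h = [0.5, 0.3, 0.2]           # hypothetical impulse response, zero for k < 0

y, y_mod = causal_filter(x, h), causal_filter(x_mod, h)
print(y[:4] == y_mod[:4])  # True: outputs up to n = 3 are unchanged

z, z_mod = centered_average(x, 1), centered_average(x_mod, 1)
print(z[:4] == z_mod[:4])  # False: z[3] already depends on x[4]
```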

When working with functions of time, f(t) is called the time-domain representation of the signal, while F(s) is called the s-domain (or Laplace-domain) representation. The inverse transformation then represents a synthesis of the signal as the sum of its frequency components taken over all frequencies, whereas the forward transformation represents the analysis of the signal into its frequency components.
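The analysis/synthesis pair can be made concrete for the example $f(t)=e^{-|t|}$, whose transform is $F(s)=2/(1-s^2)$ on $-1<\operatorname{Re}s<1$. Synthesis uses the standard inversion integral $f(t)=\frac{1}{2\pi i}\int_{c-i\infty}^{c+i\infty} F(s)\,e^{st}\,ds$ along a vertical line $\operatorname{Re}s=c$ inside that strip; substituting $s=c+i\omega$ turns it into $\frac{1}{2\pi}\int_{-\infty}^{\infty} F(c+i\omega)\,e^{(c+i\omega)t}\,d\omega$. The sketch below approximates this with Simpson's rule on a truncated range $[-W, W]$, so it is only accurate to a few decimal places; the names `synthesize`, `W` and `n` are illustrative assumptions.

```python
import cmath
import math


def simpson(g, a, b, n):
    """Composite Simpson's rule; works for complex-valued g as well."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def F(s):
    """Analysis side: B{exp(-|t|)}(s), valid for -1 < Re(s) < 1."""
    return 2.0 / (1.0 - s * s)


def synthesize(t, c=0.0, W=500.0, n=100000):
    """Synthesis side: (1/2pi) * integral of F(c+iw) * exp((c+iw)*t) dw.

    The line Re(s) = c must lie inside the strip -1 < c < 1, and the
    infinite w-range is truncated to [-W, W].
    """
    def g(w):
        s = complex(c, w)
        return F(s) * cmath.exp(s * t)

    return (simpson(g, -W, W, n) / (2.0 * math.pi)).real


for t in (0.0, 1.0, -2.0):
    print(t, synthesize(t), synthesize(t, c=0.5), math.exp(-abs(t)))
```

Taking $c=0$ reduces the inversion to an inverse Fourier transform over all real frequencies $\omega$, matching the "sum of frequency components" description; any other $c$ inside the strip recovers the same $f(t)$, while a line outside the strip would correspond to a different region of convergence and hence a different time function.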