Related Research Articles

A relational database is a database based on the relational model of data, as proposed by E. F. Codd in 1970. A system used to maintain relational databases is a relational database management system (RDBMS). Many relational database systems are equipped with the option of using SQL for querying and updating the database.
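
As a small illustration of querying and updating a relational database with SQL, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table name `reading` and its columns are hypothetical, chosen only for this example.

```python
import sqlite3


def demo_relational():
    """Create, update, and query a tiny in-memory relational table."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema: one relation with two attributes.
    conn.execute("CREATE TABLE reading (sensor TEXT, value REAL)")
    conn.executemany(
        "INSERT INTO reading VALUES (?, ?)",
        [("a", 1.0), ("b", 2.5), ("a", 3.0)],
    )
    # Updating: add 1 to every reading from sensor 'b'.
    conn.execute("UPDATE reading SET value = value + 1 WHERE sensor = 'b'")
    # Querying: total value per sensor.
    rows = conn.execute(
        "SELECT sensor, SUM(value) FROM reading GROUP BY sensor ORDER BY sensor"
    ).fetchall()
    conn.close()
    return rows
```

Calling `demo_relational()` returns one row per sensor, each holding the sensor name and the summed value.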

The relational model (RM) is an approach to managing data using a structure and language consistent with first-order predicate logic, first described in 1969 by English computer scientist Edgar F. Codd, where all data is represented in terms of tuples, grouped into relations. A database organized in terms of the relational model is a relational database.

Geostatistics is a branch of statistics focusing on spatial or spatiotemporal datasets. Developed originally to predict probability distributions of ore grades for mining operations, it is currently applied in diverse disciplines including petroleum geology, hydrogeology, hydrology, meteorology, oceanography, geochemistry, geometallurgy, geography, forestry, environmental control, landscape ecology, soil science, and agriculture. Geostatistics is applied in varied branches of geography, particularly those involving the spread of diseases (epidemiology), the practice of commerce and military planning (logistics), and the development of efficient spatial networks. Geostatistical algorithms are incorporated in many places, including geographic information systems (GIS).

Uncertainty or incertitude refers to epistemic situations involving imperfect or unknown information. It applies to predictions of future events, to physical measurements that are already made, or to the unknown. Uncertainty arises in partially observable or stochastic environments, as well as due to ignorance, indolence, or both. It arises in any number of fields, including insurance, philosophy, physics, statistics, economics, finance, medicine, psychology, sociology, engineering, metrology, meteorology, ecology and information science.

Simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. While this initially appears to be a chicken-or-the-egg problem, several algorithms are known to solve it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, covariance intersection, and GraphSLAM. SLAM algorithms are based on concepts in computational geometry and computer vision, and are used in robot navigation, robotic mapping and odometry for virtual reality or augmented reality.

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The expression was coined by Richard E. Bellman when considering problems in dynamic programming. The curse generally refers to issues that arise when the number of datapoints is small relative to the intrinsic dimension of the data.
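
One commonly cited phenomenon of this kind is distance concentration: for random points, the nearest and farthest neighbours of a query become almost equally far away as the dimension grows. The sketch below is only an illustration under assumed conditions (points drawn uniformly from the unit hypercube, Euclidean distance); the function name `relative_contrast` is made up for this example.

```python
import math
import random


def relative_contrast(dim, n_points=200, seed=0):
    """Return (d_max - d_min) / d_min for distances from one random query
    point to n_points random points in the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)
```

Comparing `relative_contrast(2)` with `relative_contrast(500)` should show a much smaller contrast in the high-dimensional case, which is why nearest-neighbour reasoning tends to lose discriminating power there.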

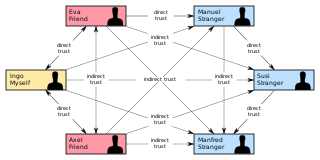

In psychology and sociology, a trust metric is a measurement or metric of the degree to which one social actor trusts another social actor. Trust metrics may be abstracted in a manner that can be implemented on computers, making them of interest for the study and engineering of virtual communities, such as Friendster and LiveJournal.

In metrology, measurement uncertainty is the expression of the statistical dispersion of the values attributed to a measured quantity. All measurements are subject to uncertainty and a measurement result is complete only when it is accompanied by a statement of the associated uncertainty, such as the standard deviation. By international agreement, this uncertainty has a probabilistic basis and reflects incomplete knowledge of the quantity value. It is a non-negative parameter.
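
A minimal sketch of how such a statement might be produced from repeated readings: the mean serves as the result, and the sample standard deviation divided by the square root of the number of readings serves as the standard uncertainty of that mean. This assumes independent repeated readings of the same quantity; the function name is hypothetical.

```python
import math
import statistics


def mean_with_standard_uncertainty(readings):
    """Return (mean, standard uncertainty of the mean) for repeated readings.

    Assumes the readings are independent observations of one quantity.
    """
    n = len(readings)
    mean = statistics.mean(readings)
    sample_std = statistics.stdev(readings)  # uses n - 1 in the denominator
    return mean, sample_std / math.sqrt(n)
```

For the readings `[10.1, 9.9, 10.0, 10.2, 9.8]`, this gives a mean of about 10.0 with a standard uncertainty of about 0.071, a non-negative value as the definition requires.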

Organizational intelligence (OI) is the capability of an organization to comprehend and create knowledge relevant to its purpose; in other words, it is the intellectual capacity of the entire organization. With relevant organizational intelligence comes great potential value for companies and organizations to figure out where their strengths and weaknesses lie in responding to change and complexity.

Monte Carlo localization (MCL), also known as particle filter localization, is an algorithm for robots to localize using a particle filter. Given a map of the environment, the algorithm estimates the position and orientation of a robot as it moves and senses the environment. The algorithm uses a particle filter to represent the distribution of likely states, with each particle representing a possible state, i.e., a hypothesis of where the robot is. The algorithm typically starts with a uniform random distribution of particles over the configuration space, meaning the robot has no information about where it is and assumes it is equally likely to be at any point in space. Whenever the robot moves, it shifts the particles to predict its new state after the movement. Whenever the robot senses something, the particles are resampled based on recursive Bayesian estimation, i.e., how well the actual sensed data correlate with the predicted state. Ultimately, the particles should converge towards the actual position of the robot.
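
The predict, weight, and resample cycle described above can be sketched for a deliberately simplified case. Everything specific here is an assumption made for illustration: the robot lives on a one-dimensional corridor from 0 to 100, its sensor reports a noisy range to a wall at position 0, and both noise models are Gaussian. The names `mcl_step` and `run_demo` are hypothetical.

```python
import math
import random


def mcl_step(particles, control, measurement, rng,
             motion_sigma=1.0, sensor_sigma=2.0):
    """One MCL update: motion prediction, measurement weighting, resampling."""
    # Motion update: shift every particle by the commanded move plus noise.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # Measurement update: weight each particle by how well the sensed range
    # matches the range that particle would predict (its own position).
    # The tiny constant keeps the total weight strictly positive.
    weights = [
        math.exp(-0.5 * ((measurement - p) / sensor_sigma) ** 2) + 1e-300
        for p in moved
    ]
    # Resampling: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(seed=0, n_particles=1000, steps=10):
    """Simulate a robot moving 5 units per step and localize it."""
    rng = random.Random(seed)
    true_x = 20.0
    # Uniform initial belief: the robot could be anywhere in the corridor.
    particles = [rng.uniform(0.0, 100.0) for _ in range(n_particles)]
    for _ in range(steps):
        true_x += 5.0
        measurement = true_x + rng.gauss(0.0, 2.0)  # noisy range to the wall
        particles = mcl_step(particles, 5.0, measurement, rng)
    estimate = sum(particles) / len(particles)
    return true_x, estimate
```

`run_demo()` returns the true final position and the mean of the particle set; under these assumptions the two should end up close together, reflecting the convergence the paragraph describes.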

Data fusion is the process of integrating multiple data sources to produce more consistent, accurate, and useful information than that provided by any individual data source.
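
One simple way to see why a fused result can be more accurate than any single source is inverse-variance weighting of independent estimates of the same quantity. This is only one of many fusion techniques and is shown here as an assumed example; the function name `fuse` is hypothetical.

```python
def fuse(estimates):
    """Fuse independent (value, variance) estimates of the same quantity
    by inverse-variance weighting. Returns (fused value, fused variance)."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return value, 1.0 / total
```

For two sources reporting `(10.0, 4.0)` and `(12.0, 1.0)`, the fused estimate is about 11.6 with variance about 0.8, lower than the variance of either source alone.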

The layered hidden Markov model (LHMM) is a statistical model derived from the hidden Markov model (HMM). An LHMM consists of N levels of HMMs, where the HMMs on level i + 1 correspond to observation symbols or probability generators at level i. Every level i of the LHMM consists of K_i HMMs running in parallel.

Subjective logic is a type of probabilistic logic that explicitly takes epistemic uncertainty and source trust into account. In general, subjective logic is suitable for modeling and analysing situations involving uncertainty and relatively unreliable sources. For example, it can be used for modeling and analysing trust networks and Bayesian networks.

In decision theory, the expected value of sample information (EVSI) is the expected increase in utility that a decision-maker could obtain from gaining access to a sample of additional observations before making a decision. The additional information obtained from the sample may allow them to make a more informed, and thus better, decision, resulting in an increase in expected utility. EVSI attempts to estimate what this improvement would be before seeing actual sample data; hence, EVSI is a form of what is known as preposterior analysis. The use of EVSI in decision theory was popularized by Robert Schlaifer and Howard Raiffa in the 1960s.
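
The preposterior calculation can be sketched for a discrete problem: compute the best expected utility under the prior, then average the best expected utility under each possible posterior, weighted by how likely each sample outcome is. The states, actions, payoffs, and likelihoods used in the usage note are invented purely for illustration.

```python
def evsi(prior, likelihood, payoff):
    """Expected value of sample information for a discrete decision problem.

    prior:      {state: probability}
    likelihood: {state: {outcome: probability of outcome given state}}
    payoff:     {action: {state: utility}}
    """
    def best_expected_utility(belief):
        return max(
            sum(belief[s] * utilities[s] for s in belief)
            for utilities in payoff.values()
        )

    value_without_sample = best_expected_utility(prior)
    outcomes = next(iter(likelihood.values())).keys()
    value_with_sample = 0.0
    for outcome in outcomes:
        p_outcome = sum(prior[s] * likelihood[s][outcome] for s in prior)
        if p_outcome == 0:
            continue
        # Bayes' rule: belief about the state after seeing this outcome.
        posterior = {
            s: prior[s] * likelihood[s][outcome] / p_outcome for s in prior
        }
        value_with_sample += p_outcome * best_expected_utility(posterior)
    return value_with_sample - value_without_sample
```

As a hypothetical case, take a prior of 0.5 on a "good" and a "bad" state, an "invest" action paying 100 or -80, a "pass" action paying 0, and a test that reports the true state with probability 0.8. Without the sample the best expected utility is 10; with it, 32; so the EVSI is about 22.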

Most real databases contain data whose correctness is uncertain. In order to work with such data, there is a need to quantify the integrity of the data. This is achieved by using probabilistic databases.
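
A rough sketch of the idea, assuming a tuple-independent model in which each row carries its own probability of being correct and rows are independent of one another (an assumption of this example, not something the text specifies). The first function computes the probability that at least one matching row is present; the second checks it by enumerating every possible world. All names and data are hypothetical.

```python
from itertools import product


def prob_any_match(rows, predicate):
    """P(at least one present row satisfies predicate), rows independent.

    rows: list of (row, probability that the row is present/correct)
    """
    p_none = 1.0
    for row, p in rows:
        if predicate(row):
            p_none *= 1.0 - p
    return 1.0 - p_none


def prob_any_match_by_worlds(rows, predicate):
    """Same quantity, computed by summing over all possible worlds."""
    total = 0.0
    for present in product([False, True], repeat=len(rows)):
        world_p = 1.0
        for (_, p), here in zip(rows, present):
            world_p *= p if here else 1.0 - p
        if any(here and predicate(row) for (row, _), here in zip(rows, present)):
            total += world_p
    return total
```

With rows for three sensors reading 31, 35, and 20 degrees at probabilities 0.5, 0.2, and 0.9, the probability that some reading exceeds 30 is 1 - 0.5 × 0.8 = 0.6 under both functions.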

In database theory, a relation, as originally defined by E. F. Codd, is a set of tuples (d1, d2, ..., dn), where each element dj is a member of Dj, a data domain. Codd's original definition notwithstanding, and contrary to the usual definition in mathematics, there is no ordering to the elements of the tuples of a relation. Instead, each element is termed an attribute value. An attribute is a name paired with a domain. An attribute value is an attribute name paired with an element of that attribute's domain, and a tuple is a set of attribute values in which no two distinct elements have the same name. Thus, in some accounts, a tuple is described as a function, mapping names to values.
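
To make the "tuple as a set of named attribute values" view concrete, the sketch below models a tuple as a frozen set of (name, value) pairs and a relation as a set of such tuples. This is an illustrative encoding only, and it assumes attribute values are hashable; `make_tuple` and `project` are hypothetical helper names.

```python
def make_tuple(**attributes):
    """A tuple as an unordered set of (attribute name, value) pairs."""
    return frozenset(attributes.items())


def project(relation, names):
    """Keep only the named attributes; duplicates collapse because a
    relation is a set."""
    return {
        frozenset((name, value) for name, value in t if name in names)
        for t in relation
    }
```

Because the pairs are unordered, `make_tuple(name="Ann", age=30)` equals `make_tuple(age=30, name="Ann")`, and adding the same tuple to a relation twice leaves the relation unchanged.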

In decision theory and quantitative policy analysis, the expected value of including uncertainty (EVIU) is the expected difference in the value of a decision based on a probabilistic analysis versus a decision based on an analysis that ignores uncertainty.
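
A small worked sketch may help. It assumes a hypothetical stocking decision in which understocking costs five times as much per unit as overstocking, and it takes "ignoring uncertainty" to mean planning for the mean demand only. The utility function, the numbers, and the function names are all invented for illustration.

```python
def utility(stock, demand):
    """Negative cost: 1 per unit overstocked, 5 per unit understocked."""
    if stock >= demand:
        return -1.0 * (stock - demand)
    return -5.0 * (demand - stock)


def expected_utility(stock, demand_dist):
    return sum(p * utility(stock, x) for x, p in demand_dist.items())


def eviu(demand_dist, candidates):
    """Expected utility of the probabilistic decision minus that of the
    decision made by treating demand as fixed at its mean."""
    mean_demand = sum(p * x for x, p in demand_dist.items())
    ignoring = max(candidates, key=lambda d: utility(d, mean_demand))
    probabilistic = max(
        candidates, key=lambda d: expected_utility(d, demand_dist)
    )
    return (expected_utility(probabilistic, demand_dist)
            - expected_utility(ignoring, demand_dist))
```

With demand of 0, 10, or 20 at probabilities 0.25, 0.5, and 0.25, planning for the mean stocks 10 units (expected utility -15), while the probabilistic analysis stocks 20 (expected utility -10), so the EVIU in this example is 5.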

In mathematics and telecommunications, stochastic geometry models of wireless networks refer to mathematical models based on stochastic geometry that are designed to represent aspects of wireless networks. The related research consists of analyzing these models with the aim of better understanding wireless communication networks in order to predict and control various network performance metrics. The models require using techniques from stochastic geometry and related fields including point processes, spatial statistics, geometric probability, percolation theory, as well as methods from more general mathematical disciplines such as geometry, probability theory, stochastic processes, queueing theory, information theory, and Fourier analysis.

Relational dependency networks (RDNs) are graphical models which extend dependency networks to account for relational data. Relational data is data organized into one or more tables, which are cross-related through standard fields. A relational database is a canonical example of a system that serves to maintain relational data. A relational dependency network can be used to characterize the knowledge contained in a database.

A copula is a mathematical function that provides a relationship between marginal distributions of random variables and their joint distributions. Copulas are important because they represent a dependence structure without using marginal distributions. Copulas have been widely used in the field of finance, but their use in signal processing is relatively new. Copulas have been employed in the field of wireless communication for classifying radar signals, change detection in remote sensing applications, and EEG signal processing in medicine. In this article, a short introduction to copulas is presented, followed by a mathematical derivation to obtain copula density functions, and then a section with a list of copula density functions with applications in signal processing.
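
For reference, the relationship between joint and marginal distributions is usually stated through Sklar's theorem. The notation below is assumed for this sketch: $F$ is the joint distribution function of $(X_1, \dots, X_n)$, $F_i$ and $f_i$ are the marginal distribution and density functions (taken to be continuous), and $C$ is the copula with density $c$.

$$
F(x_1, \dots, x_n) = C\bigl(F_1(x_1), \dots, F_n(x_n)\bigr)
$$

Differentiating with respect to each $x_i$ gives the joint density as the copula density times the product of the marginal densities:

$$
f(x_1, \dots, x_n) = c\bigl(F_1(x_1), \dots, F_n(x_n)\bigr) \prod_{i=1}^{n} f_i(x_i),
\qquad
c(u_1, \dots, u_n) = \frac{\partial^n C(u_1, \dots, u_n)}{\partial u_1 \cdots \partial u_n}
$$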

References

- Global Technology Outlook (PDF) (Report). 2012.

- Prabhakar, Sunil. "ORION: Managing Uncertain (Sensor) Data" (PDF). Computer Science.

- Habich Volk; Clemens Utzny; Ralf Dittmann; Wolfgang Lehner. "Error-Aware Density-Based Clustering of Imprecise Measurement Values". Seventh IEEE International Conference on Data Mining Workshops, 2007. ICDM Workshops 2007. IEEE.

- Volk Rosentahl; Martin Hahmann; Dirk Habich; Wolfgang Lehner. "Clustering Uncertain Data With Possible Worlds". Proceedings of the 1st Workshop on Management and Mining of Uncertain Data in conjunction with the 25th International Conference on Data Engineering, 2009. IEEE.