A computer worm is a standalone malware computer program that replicates itself in order to spread to other computers. It often uses a computer network to spread itself, relying on security failures on the target computer to access it. It will use this machine as a host to scan and infect other computers. When these new worm-invaded computers are controlled, the worm will continue to scan and infect other computers using these computers as hosts, and this behaviour will continue. Computer worms use recursive methods to copy themselves without host programs and distribute themselves based on exploiting the advantages of exponential growth, thus controlling and infecting more and more computers in a short time. Worms almost always cause at least some harm to the network, even if only by consuming bandwidth, whereas viruses almost always corrupt or modify files on a targeted computer.

Computer security, cybersecurity, digital security or information technology security is the protection of computer systems and networks from attacks by malicious actors that may result in unauthorized information disclosure, theft of, or damage to hardware, software, or data, as well as from the disruption or misdirection of the services they provide.

In the field of computer security, independent researchers often discover flaws in software that can be abused to cause unintended behaviour; these flaws are called vulnerabilities. The process by which the analysis of these vulnerabilities is shared with third parties is the subject of much debate, and is referred to as the researcher's disclosure policy. Full disclosure is the practice of publishing analysis of software vulnerabilities as early as possible, making the data accessible to everyone without restriction. The primary purpose of widely disseminating information about vulnerabilities is so that potential victims are as knowledgeable as those who attack them.

Malware is any software intentionally designed to cause disruption to a computer, server, client, or computer network, leak private information, gain unauthorized access to information or systems, deprive access to information, or which unknowingly interferes with the user's computer security and privacy. Researchers tend to classify malware into one or more sub-types.

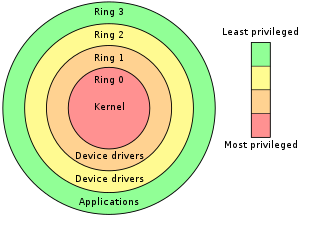

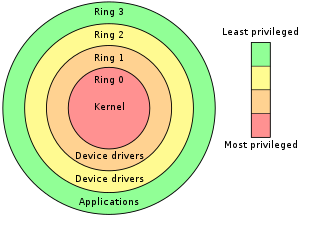

A rootkit is a collection of computer software, typically malicious, designed to enable access to a computer or an area of its software that is not otherwise allowed and often masks its existence or the existence of other software. The term rootkit is a compound of "root" and the word "kit". The term "rootkit" has negative connotations through its association with malware.

A patch is a set of changes to a computer program or its supporting data designed to update or repair it. This includes bugfixes or bug fixes to remove security vulnerabilities and correct bugs (errors). Patches are often written to improve the functionality, usability, or performance of a program. The majority of patches are provided by software vendors for operating system and application updates.

The Windows Metafile vulnerability—also called the Metafile Image Code Execution and abbreviated MICE—is a security vulnerability in the way some versions of the Microsoft Windows operating system handled images in the Windows Metafile format. It permits arbitrary code to be executed on affected computers without the permission of their users. It was discovered on December 27, 2005, and the first reports of affected computers were announced within 24 hours. Microsoft released a high-priority update to eliminate this vulnerability via Windows Update on January 5, 2006. Attacks using this vulnerability are known as WMF exploits.

A data breach, also known as data leakage, is "the unauthorized exposure, disclosure, or loss of personal information".

A zero-day is a vulnerability or security hole in a computer system unknown to its owners, developers or anyone capable of mitigating it. Until the vulnerability is remedied, threat actors can exploit it in a zero-day exploit, or zero-day attack.

A supply chain attack is a cyber-attack that seeks to damage an organization by targeting less secure elements in the supply chain. A supply chain attack can occur in any industry, from the financial sector, oil industry, to a government sector. A supply chain attack can happen in software or hardware. Cybercriminals typically tamper with the manufacturing or distribution of a product by installing malware or hardware-based spying components. Symantec's 2019 Internet Security Threat Report states that supply chain attacks increased by 78 percent in 2018.

Mobile security, or mobile device security, is the protection of smartphones, tablets, and laptops from threats associated with wireless computing. It has become increasingly important in mobile computing. The security of personal and business information now stored on smartphones is of particular concern.

Cyberweapons are commonly defined as malware agents employed for military, paramilitary, or intelligence objectives as part of a cyberattack. This includes computer viruses, trojans, spyware, and worms that can introduce malicious code into existing software, causing a computer to perform actions or processes unintended by its operator.

The Intel Management Engine (ME), also known as the Intel Manageability Engine, is an autonomous subsystem that has been incorporated in virtually all of Intel's processor chipsets since 2008. It is located in the Platform Controller Hub of modern Intel motherboards.

Project Zero is a team of security analysts employed by Google tasked with finding zero-day vulnerabilities. It was announced on 15 July 2014.

Intel Software Guard Extensions (SGX) is a set of instruction codes implementing trusted execution environment that are built into some Intel central processing units (CPUs). They allow user-level and operating system code to define protected private regions of memory, called enclaves. SGX is designed to be useful for implementing secure remote computation, secure web browsing, and digital rights management (DRM). Other applications include concealment of proprietary algorithms and of encryption keys.

Endpoint security or endpoint protection is an approach to the protection of computer networks that are remotely bridged to client devices. The connection of endpoint devices such as laptops, tablets, mobile phones, Internet-of-things devices, and other wireless devices to corporate networks creates attack paths for security threats. Endpoint security attempts to ensure that such devices follow a definite level of compliance to standards.

The market for zero-day exploits is commercial activity related to the trafficking of software exploits.

Operational technology (OT) is hardware and software that detects or causes a change, through the direct monitoring and/or control of industrial equipment, assets, processes and events. The term has become established to demonstrate the technological and functional differences between traditional information technology (IT) systems and industrial control systems environment, the so-called "IT in the non-carpeted areas".

Meltdown is one of the two original transient execution CPU vulnerabilities. Meltdown affects Intel x86 microprocessors, IBM POWER processors, and some ARM-based microprocessors. It allows a rogue process to read all memory, even when it is not authorized to do so.

Spectre is one of the two original transient execution CPU vulnerabilities, which involve microarchitectural timing side-channel attacks. These affect modern microprocessors that perform branch prediction and other forms of speculation. On most processors, the speculative execution resulting from a branch misprediction may leave observable side effects that may reveal private data to attackers. For example, if the pattern of memory accesses performed by such speculative execution depends on private data, the resulting state of the data cache constitutes a side channel through which an attacker may be able to extract information about the private data using a timing attack.