Corpus linguistics is the study of a language as that language is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field, that is, in the natural context ("realia") of that language, with minimal experimental interference. Large collections of text allow linguists to run quantitative analyses on linguistic concepts that are otherwise hard to quantify.

In linguistics and natural language processing, a corpus or text corpus is a dataset consisting of natively digital and older, digitized language resources, either annotated or unannotated.

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious and automatic, but it can come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other language-processing tasks, such as discourse analysis, improving the relevance of search engines, anaphora resolution, coherence, and inference.
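
As a rough illustration of how a program might pick a sense from context, the sketch below uses a simplified Lesk-style overlap count: it chooses the sense whose dictionary gloss shares the most content words with the surrounding sentence. The text above does not name this method; the sense labels, glosses, stopword list, and function names are all hypothetical and chosen only for the example.

```python
# Assumed, deliberately tiny stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "of", "and", "or", "that", "on", "to", "in"}


def content_words(text):
    """Lowercase the text, strip basic punctuation, and drop stopwords."""
    return {w.strip(".,;:!?").lower() for w in text.split()} - STOPWORDS


def simplified_lesk(context, senses):
    """Return the sense label whose gloss overlaps most with the context.

    `senses` maps a sense label to a gloss (a short definition).
    Ties are resolved in favour of the sense listed first.
    """
    context_words = content_words(context)
    best_sense, best_overlap = None, -1
    for sense, gloss in senses.items():
        overlap = len(context_words & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


# Hypothetical two-sense inventory for the word "bank".
BANK_SENSES = {
    "bank/finance": "an institution that accepts deposits of money and makes loans",
    "bank/river": "the sloping land beside a river or stream",
}

river_reading = simplified_lesk(
    "She sat on the bank of the river and watched the stream.", BANK_SENSES
)
money_reading = simplified_lesk("He took his money to the bank.", BANK_SENSES)
```

Overlap counting is brittle (it misses inflected forms and synonyms), which is one reason the problem remains open.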

A vocabulary is a set of words, typically the set in a language or the set known to an individual. The word vocabulary originated from the Latin vocabulum, meaning "a word, name". It forms an essential component of language and communication, helping convey thoughts, ideas, emotions, and information. Vocabulary can be oral, written, or signed and can be categorized into two main types: active vocabulary and passive vocabulary. An individual's vocabulary continually evolves through various methods, including direct instruction, independent reading, and natural language exposure, but it can also shrink due to forgetting, trauma, or disease. Furthermore, vocabulary is a significant focus of study across various disciplines, like linguistics, education, psychology, and artificial intelligence. Vocabulary is not limited to single words; it also encompasses multi-word units known as collocations, idioms, and other types of phraseology. Acquiring an adequate vocabulary is one of the largest challenges in learning a second language.

The Brown University Standard Corpus of Present-Day American English, better known as simply the Brown Corpus, is an electronic collection of text samples of American English, the first major structured corpus of varied genres. This corpus first set the bar for the scientific study of the frequency and distribution of word categories in everyday language use. Compiled by Henry Kučera and W. Nelson Francis at Brown University, in Rhode Island, it is a general language corpus containing 500 samples of English, totaling roughly one million words, compiled from works published in the United States in 1961.

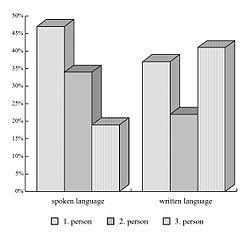

In corpus linguistics, a key word is a word which occurs in a text more often than we would expect it to occur by chance alone. Key words are calculated by carrying out a statistical test which compares the word frequencies in a text against their expected frequencies derived from a much larger corpus, which acts as a reference for general language use. Keyness is then the quality a word or phrase has of being "key" in its context. Combinations of nouns with parts of speech that human readers would not likely notice, such as prepositions, time adverbs, and pronouns, can be a relevant part of keyness. Even separate pronouns can constitute keywords.
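
The comparison described above can be sketched with the log-likelihood ratio (often written G2), one of several tests used for keyness. The text does not say which test is meant, so that choice is an assumption, as are the function names and the toy token lists.

```python
import math
from collections import Counter


def log_likelihood(a, b, c, d):
    """Log-likelihood ratio for one word.

    a: frequency in the study text      c: size of the study text
    b: frequency in the reference       d: size of the reference corpus
    """
    expected_study = c * (a + b) / (c + d)
    expected_ref = d * (a + b) / (c + d)
    total = 0.0
    if a > 0:
        total += a * math.log(a / expected_study)
    if b > 0:
        total += b * math.log(b / expected_ref)
    return 2 * total


def keywords(study_tokens, reference_tokens, top=5):
    """Rank words that are over-represented in the study text by G2."""
    study, ref = Counter(study_tokens), Counter(reference_tokens)
    c, d = len(study_tokens), len(reference_tokens)
    scored = [
        (word, log_likelihood(a, ref[word], c, d))
        for word, a in study.items()
        if a / c > ref[word] / d  # keep only positively key words
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top]


# Toy data, invented for the example.
study_text = "the whale the sea the whale ship".split()
reference_text = "the cat the dog the house a sea".split()
top_keywords = keywords(study_text, reference_text)
```

In practice the reference corpus is far larger than the text under study, and a significance threshold is usually applied to the score before a word is reported as key.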

A concordance is an alphabetical list of the principal words used in a book or body of work, listing every instance of each word with its immediate context. Historically, concordances have been compiled only for works of special importance, such as the Vedas, Bible, Qur'an or the works of Shakespeare, James Joyce or classical Latin and Greek authors, because of the time, difficulty, and expense involved in creating a concordance in the pre-computer era.
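
With a computer, the same structure takes only a few lines. The sketch below builds an alphabetical index of words, each with every occurrence and a few words of context on either side. The stopword list (standing in for the notion of "principal words"), the window size, and the function name are assumptions made for the example.

```python
import re
from collections import defaultdict

# Assumed list of function words to leave out of the concordance.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "on"}


def build_concordance(text, window=3):
    """Map each non-stopword to a list of (left context, word, right context).

    The returned dict is ordered alphabetically by headword.
    """
    tokens = re.findall(r"[A-Za-z']+", text)
    entries = defaultdict(list)
    for i, token in enumerate(tokens):
        headword = token.lower()
        if headword in STOPWORDS:
            continue
        left = " ".join(tokens[max(0, i - window):i])
        right = " ".join(tokens[i + 1:i + 1 + window])
        entries[headword].append((left, token, right))
    return dict(sorted(entries.items()))


sample = "The quality of mercy is not strained. Mercy blesses him that gives."
concordance = build_concordance(sample, window=2)

for headword, hits in concordance.items():
    for left, word, right in hits:
        print(f"{headword:>10}: {left} [{word}] {right}")
```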

In linguistics, the term lexis designates the complete set of all possible words in a language, or a particular subset of words that are grouped by some specific linguistic criteria. For example, the general term English lexis refers to all words of the English language, while the more specific term English religious lexis refers to a particular subset within English lexis, encompassing only words that are semantically related to the religious sphere of life.

Studies that estimate and rank the most common words in English examine texts written in English. Perhaps the most comprehensive such analysis is one that was conducted against the Oxford English Corpus (OEC), a massive corpus of English-language text.
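
At its simplest, such a ranking is a token count sorted by frequency. The sketch below is a minimal version under stated assumptions: the tokenizer is a crude regular expression, the function name is hypothetical, and the sample sentence is invented. It says nothing about how the OEC analysis itself was carried out.

```python
import re
from collections import Counter


def rank_words(text, top=10):
    """Return (rank, word, count, relative frequency) for the commonest words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    total = len(tokens)
    return [
        (rank, word, count, count / total)
        for rank, (word, count) in enumerate(counts.most_common(top), start=1)
    ]


ranking = rank_words("the cat and the dog and the bird", top=2)
```

Published rankings typically also decide whether to count inflected forms separately or group them under one lemma, which changes the order considerably.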

In linguistics, statistical semantics applies the methods of statistics to the problem of determining the meaning of words or phrases, ideally through unsupervised learning, to a degree of precision at least sufficient for the purpose of information retrieval.

The Academic Word List (AWL) is a word list of 570 English word families which appear with great frequency in a broad range of academic texts. The list is aimed at students of English as a second or foreign language who intend to enter English-medium higher education, and at teachers of such students. The AWL was developed by Averil Coxhead at the School of Linguistics and Applied Language Studies at Victoria University of Wellington, New Zealand. This list replaced the previously widely used University Word List, developed by Xue and Nation in 1984. The words included in the AWL were selected based on their range, frequency, and dispersion, and were divided into ten sublists in decreasing order of frequency: the first nine contain 60 word families each and the tenth contains 30. The AWL excludes words from the General Service List. Many words in the AWL are general vocabulary not restricted to an academic domain, such as the words area, approach, create, similar, and occur, found in Sublist One, and the AWL only accounts for a small percentage of the actual word occurrences in academic texts.

The General Service List (GSL) is a list of roughly 2,000 words published by Michael West in 1953. The words were selected to represent the most frequent words of English and were taken from a corpus of written English. The target audience was English language learners and ESL teachers. To maximize the utility of the list, some frequent words that overlapped broadly in meaning with words already on the list were omitted. In the original publication the relative frequencies of various senses of the words were also included.

Corpus-assisted discourse studies is related historically and methodologically to the discipline of corpus linguistics. The principal endeavor of corpus-assisted discourse studies is the investigation and comparison of features of particular discourse types, integrating into the analysis the techniques and tools developed within corpus linguistics. These include the compilation of specialised corpora and analyses of word and word-cluster frequency lists, comparative keyword lists and, above all, concordances.

Macmillan English Dictionary for Advanced Learners, also known as MEDAL, is an advanced learner's dictionary first published in 2002 by Macmillan Education. It shares most of the features of this type of dictionary: it provides definitions in simple language, using a controlled defining vocabulary; most words have example sentences to illustrate how they are typically used; and information is given about how words combine grammatically or in collocations. MEDAL also introduced a number of innovations. These include:

Mark E. Davies is an American linguist. He specializes in corpus linguistics and language variation and change. He is the creator of most of the text corpora from English-Corpora.org as well as the Corpus del español and the Corpus do português. He has also created large datasets of word frequency, collocate, and n-gram data, which have been used by many large companies in technology and language learning.

The Google Ngram Viewer or Google Books Ngram Viewer is an online search engine that charts the frequencies of any set of search strings using a yearly count of n-grams found in printed sources published between 1500 and 2019 in Google's text corpora in English, Chinese (simplified), French, German, Hebrew, Italian, Russian, or Spanish. There are also some specialized English corpora, such as American English, British English, and English Fiction.
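
The idea of a yearly n-gram count can be sketched as follows: for each year, count every n-gram of the same length as the search string, then report the share taken by that string. This is an illustration only, not the Viewer's actual pipeline; the whitespace tokenizer, the per-year normalization, the function names, and the tiny sample data are all assumptions.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-token sequences, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def yearly_frequency(docs_by_year, phrase):
    """Relative frequency of `phrase` per year.

    `docs_by_year` maps a year to a list of texts from that year. The value
    for a year is the phrase count divided by the count of all n-grams of
    the same length in that year's texts.
    """
    n = len(phrase.split())
    series = {}
    for year, texts in sorted(docs_by_year.items()):
        counts = Counter()
        for text in texts:
            counts.update(ngrams(text.lower().split(), n))
        total = sum(counts.values())
        series[year] = counts[phrase.lower()] / total if total else 0.0
    return series


# Invented sample texts, keyed by year of publication.
docs = {
    1900: ["the steam engine", "a steam engine works"],
    2000: ["the search engine"],
}
steam_series = yearly_frequency(docs, "steam engine")
```

Dividing by the yearly total matters because the amount of printed text per year varies widely, so raw counts alone would mostly track corpus size.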

Sketch Engine is a corpus manager and text analysis software developed by Lexical Computing CZ s.r.o. since 2003. Its purpose is to enable people studying language behaviour to search large text collections according to complex and linguistically motivated queries. Sketch Engine gained its name after one of the key features, word sketches: one-page, automatic, corpus-derived summaries of a word's grammatical and collocational behaviour. Currently, it supports and provides corpora in 90+ languages.

Word2vec is a technique in natural language processing (NLP) for obtaining vector representations of words. These vectors capture information about the meaning of the word based on the surrounding words. The word2vec algorithm estimates these representations by modeling text in a large corpus. Once trained, such a model can detect synonymous words or suggest additional words for a partial sentence. Word2vec was developed by Tomáš Mikolov and colleagues at Google and published in 2013.
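
To make "based on the surrounding words" concrete, the sketch below generates the (target, context) pairs that the skip-gram variant of word2vec trains on, and shows the cosine similarity commonly used to compare the resulting vectors. It does not train a model; the window size, function names, and toy inputs are assumptions for the example.

```python
import math


def skipgram_pairs(tokens, window=2):
    """List (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


pairs = skipgram_pairs(["a", "b", "c"], window=1)
```

A trained model assigns nearby vectors to words that appear in similar contexts, so a high cosine score between two word vectors is read as a sign of related meaning.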

Age of acquisition (AoA) is a psycholinguistic variable referring to the age at which a word is typically learned. For example, the word 'penguin' is typically learned at a younger age than the word 'albatross'. Studies in psycholinguistics suggest that age of acquisition affects the speed of reading words: early-acquired words are processed more quickly than later-acquired words. It is a particularly strong variable in predicting the speed of picture naming. It has generally been found that words that are more frequent, shorter, more familiar, and that refer to concrete concepts are learned earlier than their counterparts, among both simple words and compound words, together with their respective lexemes. In addition, the AoA effect has been demonstrated in several languages and in bilingual speakers.