Binaural recording is a method of recording sound that uses two microphones, arranged to give the listener a three-dimensional stereo impression of actually being in the room with the performers or instruments. This effect is often created using a technique known as dummy head recording, wherein a mannequin head is fitted with a microphone in each ear. Binaural recording is intended for replay over headphones and will not translate properly over stereo speakers. The idea of three-dimensional, "internal" sound has also carried over into other technologies, such as stethoscopes that create "in-head" acoustics and IMAX movies that create a three-dimensional acoustic experience.

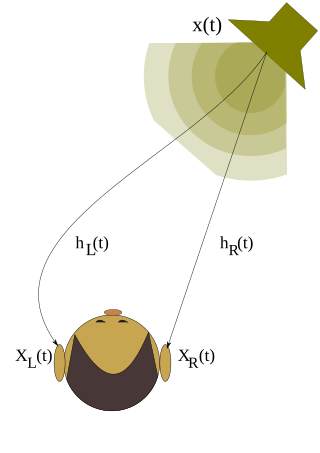

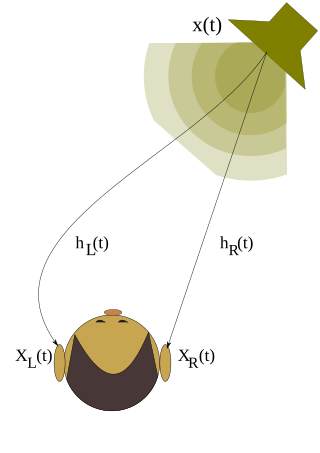

A head-related transfer function (HRTF), also known as a head shadow, is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, and ear canal, the density of the head, and the size and shape of the nasal and oral cavities all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz, with a primary resonance of +17 dB at 2,700 Hz. However, the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person.
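As a rough illustration of how such a response is applied in practice, the sketch below convolves a mono signal with a pair of head-related impulse responses (HRIRs, the time-domain counterpart of the HRTF), one per ear. The function names and the three-tap impulse responses are invented for the example and are not measured data; a real HRIR set would come from measurements of a person or a dummy head.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution (output length: len(signal) + len(ir) - 1)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left-ear and a right-ear impulse response."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIRs (made up for illustration): a source on the listener's left,
# so the right ear receives the sound two samples later and at half amplitude.
toy_hrir_left = [1.0, 0.0, 0.0]
toy_hrir_right = [0.0, 0.0, 0.5]

left, right = render_binaural([1.0, -1.0], toy_hrir_left, toy_hrir_right)
```

In this toy case the left channel reproduces the input unchanged, while the right channel carries a delayed and attenuated copy, which is the kind of interaural difference a measured HRTF pair encodes in far more detail.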

Ambisonics is a full-sphere surround sound format: in addition to the horizontal plane, it covers sound sources above and below the listener.
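To make the full-sphere idea concrete, the following sketch encodes a single sample into four first-order channels (W, X, Y, Z) from a source direction given by azimuth and elevation. It assumes the traditional B-format convention, in which W is scaled by 1/√2; other conventions use different channel ordering and normalization, and the function name is hypothetical.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one sample into (W, X, Y, Z).

    Assumed convention: traditional B-format, azimuth measured
    counter-clockwise from straight ahead, elevation upward from the
    horizontal plane, W scaled by 1/sqrt(2).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)               # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)  # front-back
    y = sample * math.sin(az) * math.cos(el)  # left-right
    z = sample * math.sin(el)                 # up-down (the height channel)
    return w, x, y, z


front = encode_first_order(1.0, 0.0, 0.0)      # source straight ahead
overhead = encode_first_order(1.0, 0.0, 90.0)  # source directly above
```

A source straight ahead excites only W and X, while a source directly above excites only W and Z; the Z channel is what distinguishes this from a purely horizontal format.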

Surround sound is a technique for enriching the fidelity and depth of sound reproduction by using multiple audio channels from speakers that surround the listener. Its first application was in movie theaters. Prior to surround sound, theater sound systems commonly had three screen channels of sound that played from three loudspeakers located in front of the audience. Surround sound adds one or more channels from loudspeakers to the side or behind the listener that are able to create the sensation of sound coming from any horizontal direction around the listener.

Acoustical engineering is the branch of engineering dealing with sound and vibration. It includes the application of acoustics, the science of sound and vibration, in technology. Acoustical engineers are typically concerned with the design, analysis and control of sound.

3D audio effects are a group of sound effects that manipulate the sound produced by stereo speakers, surround-sound speakers, speaker-arrays, or headphones. This frequently involves the virtual placement of sound sources anywhere in three-dimensional space, including behind, above or below the listener.

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

Wave field synthesis (WFS) is a spatial audio rendering technique characterized by the creation of virtual acoustic environments. It produces artificial wavefronts, synthesized from elementary waves by a large number of individually driven loudspeakers. Such wavefronts seem to originate from a virtual starting point, the virtual sound source. In contrast to traditional phantom sound sources, the localization of virtual sound sources established by WFS does not depend on the listener's position. Like a genuine sound source, the virtual source remains at a fixed starting point.
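The timing side of this idea can be sketched in a few lines: each loudspeaker replays the source signal delayed by the time a wavefront from the virtual source would need to reach it. This is only a simplified illustration under stated assumptions (a 2D layout, a speed of sound of 343 m/s, hypothetical function and variable names); a full WFS driving function also applies amplitude weighting and a spectral pre-filter, which are omitted here.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second, assumed room-temperature value


def relative_speaker_delays(source_xy, speaker_positions):
    """Per-loudspeaker delays in seconds for a virtual point source.

    Each delay is the travel time from the virtual source to that
    loudspeaker, shifted so the nearest loudspeaker fires at time zero.
    """
    travel_times = [
        math.hypot(sx - source_xy[0], sy - source_xy[1]) / SPEED_OF_SOUND
        for sx, sy in speaker_positions
    ]
    earliest = min(travel_times)
    return [t - earliest for t in travel_times]


# Three loudspeakers on a line, virtual source one metre behind the middle one.
speakers = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
delays = relative_speaker_delays((0.0, -1.0), speakers)
```

The middle loudspeaker fires first and the outer two fire slightly later by equal amounts, so the combined output approximates a curved wavefront centred on the virtual source regardless of where the listener stands.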

Acoustic location is a method of determining the position of an object or sound source by using sound waves. Location can take place in gases, liquids, and in solids.

This page focusses on decoding of classic first-order Ambisonics. Other relevant information is available on the Ambisonic reproduction systems page.
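One simple way to picture first-order decoding is the "virtual microphone" approach: each loudspeaker is fed the signal that a first-order microphone pointed in that loudspeaker's direction would have picked up. The sketch below is horizontal-only and assumes traditional B-format scaling (W carries the source divided by √2); the function name and the pattern parameter `p` are illustrative, and practical decoders add further refinements.

```python
import math


def virtual_mic_feed(w, x, y, speaker_azimuth_deg, p=0.5):
    """Signal for a loudspeaker at the given azimuth.

    p selects the virtual microphone pattern: 1.0 is omnidirectional,
    0.5 is a cardioid, 0.0 is a figure-of-eight. Assumes W = S / sqrt(2).
    """
    theta = math.radians(speaker_azimuth_deg)
    return p * math.sqrt(2.0) * w + (1.0 - p) * (
        x * math.cos(theta) + y * math.sin(theta)
    )


# B-format sample for a unit-amplitude source straight ahead (azimuth 0).
w, x, y = 1.0 / math.sqrt(2.0), 1.0, 0.0

feed_front = virtual_mic_feed(w, x, y, 0.0)    # loudspeaker at the source direction
feed_side = virtual_mic_feed(w, x, y, 90.0)    # loudspeaker to the left
feed_rear = virtual_mic_feed(w, x, y, 180.0)   # loudspeaker directly behind
```

With the cardioid setting, the loudspeaker in the source direction receives the full signal, the side loudspeaker half of it, and the rear loudspeaker essentially nothing.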

Ambiophonics is a method in the public domain that employs digital signal processing (DSP) and two loudspeakers directly in front of the listener in order to improve reproduction of stereophonic and 5.1 surround sound for music, movies, and games in home theaters, gaming PCs, workstations, or studio monitoring applications. It was first implemented using mechanical means in 1986; today a number of hardware and VST plug-in makers offer Ambiophonic DSP. Ambiophonics eliminates the crosstalk inherent in the conventional stereo triangle speaker placement, thereby generating a speaker-binaural soundfield that emulates headphone-binaural sound and gives the listener an improved perception of the reality of recorded auditory scenes. A second speaker pair can be added at the back to enable 360° surround sound reproduction. Additional surround speakers may be used for hall ambience, including height, if desired.

LARES is an electronic sound enhancement system that uses microprocessors to control multiple loudspeakers and microphones placed around a performance space for the purpose of providing active acoustic treatment. LARES was invented in Massachusetts in 1988 by Dr David Griesinger and Steve Barbar, who were working at Lexicon, Inc. LARES was given its own company division in 1990, and LARES Associates was formed in 1995 as a separate corporation. Since then, hundreds of LARES systems have been used in concert halls, opera houses, performance venues, and houses of worship, from outdoor music festivals to permanent indoor symphony halls.

A parabolic loudspeaker is a loudspeaker which seeks to focus its sound in coherent plane waves either by reflecting sound output from a speaker driver to a parabolic reflector aimed at the target audience, or by arraying drivers on a parabolic surface. The resulting beam of sound travels farther, with less dissipation in air, than horn loudspeakers, and can be more focused than line array loudspeakers allowing sound to be sent to isolated audience targets. The parabolic loudspeaker has been used for such diverse purposes as directing sound at faraway targets in performing arts centers and stadia, for industrial testing, for intimate listening at museum exhibits, and as a sonic weapon.

The sweet spot is a term used by audiophiles and recording engineers to describe the focal point between two speakers, where an individual can hear the stereo audio mix the way the mixer intended it to be heard. The sweet spot is the location that forms an equilateral triangle with the two stereo loudspeakers, known as the stereo triangle. In the case of surround sound, it is the focal point between four or more speakers, i.e., the location at which all wave fronts arrive simultaneously. In international recommendations the sweet spot is referred to as the reference listening point.

Auralization is a procedure designed to model and simulate the experience of acoustic phenomena rendered as a soundfield in a virtualized space. This is useful in configuring the soundscape of architectural structures, concert venues, and public spaces, as well as in making coherent sound environments within virtual immersion systems.

3D sound localization refers to an acoustic technology that is used to locate the source of a sound in three-dimensional space. The source location is usually determined from the direction of the incoming sound waves and the distance between the source and the sensors. It involves both the design of the sensor arrangement and signal processing techniques.
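As a minimal sketch of the signal-processing side, the example below estimates the direction of an incoming sound from the time difference of arrival at two microphones. It uses a brute-force cross-correlation to find the delay and a far-field (plane wave) model to turn that delay into an angle. The function names, the 343 m/s speed of sound, and the two-microphone layout are assumptions made for illustration; practical systems use more sensors and more robust estimators.

```python
import math


def estimate_delay_samples(reference, delayed, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation.

    A positive result means the sound reached the second microphone later.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, value in enumerate(reference):
            m = n + lag
            if 0 <= m < len(delayed):
                score += value * delayed[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def arrival_angle_deg(lag_samples, sample_rate, mic_spacing, speed_of_sound=343.0):
    """Far-field angle from broadside: path difference = spacing * sin(angle)."""
    ratio = (lag_samples / sample_rate) * speed_of_sound / mic_spacing
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding past +/-1
    return math.degrees(math.asin(ratio))


# A short pulse that reaches the second microphone three samples later.
mic_a = [0.0, 0.0, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0]
mic_b = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.5, 0.0]

lag = estimate_delay_samples(mic_a, mic_b, max_lag=5)
angle = arrival_angle_deg(lag, sample_rate=48000, mic_spacing=0.2)
```

A single microphone pair only resolves one angle, which is why the arrangement of several sensors matters for locating a source in three dimensions.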

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

3D sound reconstruction is the application of reconstruction techniques to 3D sound localization technology. These methods of reconstructing three-dimensional sound are used to recreate sounds that match natural environments and provide spatial cues about the sound source. They are also applied in creating 3D visualizations of a sound field that include physical aspects of sound waves such as direction, pressure, and intensity. This technology is used in entertainment to reproduce a live performance through computer speakers. It is also used in military applications to determine the location of sound sources. Reconstructing sound fields is also applicable to medical imaging, to measure points in ultrasound.

Apparent source width (ASW) is the audible impression of a spatially extended sound source. This psychoacoustic impression results from the sound radiation characteristics of the source and the properties of the acoustic space into which it is radiating. Wide source widths are desired by listeners of music because these are associated with the sound of acoustic music, opera, classical music, and historically informed performance. Research concerning ASW comes from the field of room acoustics, architectural acoustics and auralization, as well as musical acoustics, psychoacoustics and systematic musicology.