In probability theory and statistics, the exponential distribution or negative exponential distribution is the probability distribution of the distance between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate; the distance parameter could be any meaningful mono-dimensional measure of the process, such as time between production errors, or length along a roll of fabric in the weaving manufacturing process. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes it is found in various other contexts.

In calculus, Taylor's theorem gives an approximation of a -times differentiable function around a given point by a polynomial of degree , called the -th-order Taylor polynomial. For a smooth function, the Taylor polynomial is the truncation at the order of the Taylor series of the function. The first-order Taylor polynomial is the linear approximation of the function, and the second-order Taylor polynomial is often referred to as the quadratic approximation. There are several versions of Taylor's theorem, some giving explicit estimates of the approximation error of the function by its Taylor polynomial.

The Pareto distribution, named after the Italian civil engineer, economist, and sociologist Vilfredo Pareto, is a power-law probability distribution that is used in description of social, quality control, scientific, geophysical, actuarial, and many other types of observable phenomena; the principle originally applied to describing the distribution of wealth in a society, fitting the trend that a large portion of wealth is held by a small fraction of the population. The Pareto principle or "80-20 rule" stating that 80% of outcomes are due to 20% of causes was named in honour of Pareto, but the concepts are distinct, and only Pareto distributions with shape value of log45 ≈ 1.16 precisely reflect it. Empirical observation has shown that this 80-20 distribution fits a wide range of cases, including natural phenomena and human activities.

In computer science, denotational semantics is an approach of formalizing the meanings of programming languages by constructing mathematical objects that describe the meanings of expressions from the languages. Other approaches providing formal semantics of programming languages include axiomatic semantics and operational semantics.

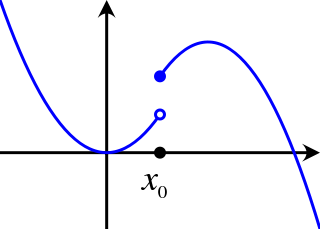

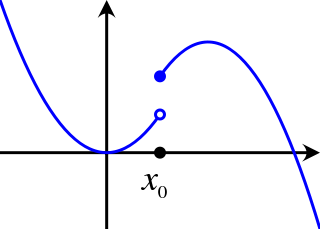

In mathematical analysis, semicontinuity is a property of extended real-valued functions that is weaker than continuity. An extended real-valued function is uppersemicontinuous at a point if, roughly speaking, the function values for arguments near are not much higher than

In probability theory and statistics, the chi-squared distribution with degrees of freedom is the distribution of a sum of the squares of independent standard normal random variables. The chi-squared distribution is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in construction of confidence intervals. This distribution is sometimes called the central chi-squared distribution, a special case of the more general noncentral chi-squared distribution.

In programming language theory and proof theory, the Curry–Howard correspondence is the direct relationship between computer programs and mathematical proofs.

In complex analysis, a branch of mathematics, Morera's theorem, named after Giacinto Morera, gives an important criterion for proving that a function is holomorphic.

In probability theory and statistics, the Lévy distribution, named after Paul Lévy, is a continuous probability distribution for a non-negative random variable. In spectroscopy, this distribution, with frequency as the dependent variable, is known as a van der Waals profile. It is a special case of the inverse-gamma distribution. It is a stable distribution.

The simply typed lambda calculus, a form of type theory, is a typed interpretation of the lambda calculus with only one type constructor that builds function types. It is the canonical and simplest example of a typed lambda calculus. The simply typed lambda calculus was originally introduced by Alonzo Church in 1940 as an attempt to avoid paradoxical use of the untyped lambda calculus.

In probability and statistics, the Kumaraswamy's double bounded distribution is a family of continuous probability distributions defined on the interval (0,1). It is similar to the beta distribution, but much simpler to use especially in simulation studies since its probability density function, cumulative distribution function and quantile functions can be expressed in closed form. This distribution was originally proposed by Poondi Kumaraswamy for variables that are lower and upper bounded with a zero-inflation. This was extended to inflations at both extremes [0,1] in later work with S. G. Fletcher.

In computer science, Programming Computable Functions (PCF) is a typed functional language introduced by Gordon Plotkin in 1977, based on previous unpublished material by Dana Scott. It can be considered to be an extended version of the typed lambda calculus or a simplified version of modern typed functional languages such as ML or Haskell.

String diagrams are a formal graphical language for representing morphisms in monoidal categories, or more generally 2-cells in 2-categories. They are a prominent tool in applied category theory. When interpreted in the monoidal category of vector spaces and linear maps with the tensor product, string diagrams are called tensor networks or Penrose graphical notation. This has led to the development of categorical quantum mechanics where the axioms of quantum theory are expressed in the language of monoidal categories.

In mathematics – specifically, in operator theory – a densely defined operator or partially defined operator is a type of partially defined function. In a topological sense, it is a linear operator that is defined "almost everywhere". Densely defined operators often arise in functional analysis as operations that one would like to apply to a larger class of objects than those for which they a priori "make sense".

The uncertainty theory invented by Baoding Liu is a branch of mathematics based on normality, monotonicity, self-duality, countable subadditivity, and product measure axioms.

In mathematics, a limit is the value that a function approaches as the input approaches some value. Limits are essential to calculus and mathematical analysis, and are used to define continuity, derivatives, and integrals.

A product distribution is a probability distribution constructed as the distribution of the product of random variables having two other known distributions. Given two statistically independent random variables X and Y, the distribution of the random variable Z that is formed as the product is a product distribution.

Computable topology is a discipline in mathematics that studies the topological and algebraic structure of computation. Computable topology is not to be confused with algorithmic or computational topology, which studies the application of computation to topology.

Radhia Cousot was a French computer scientist known for inventing abstract interpretation.

In computer science, especially model checking and abstract interpretation, widening refers to at least two different techniques in the analysis of abstract transition systems where infinite progressions of abstract states are replaced by a least fixed point. The use of the term in model checking is closely related to acceleration techniques, some authors reserving acceleration for exact computations.