Related Research Articles

In computer science, formal methods are mathematically rigorous techniques for the specification, development, analysis, and verification of software and hardware systems. The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design.

In computer science, program analysis is the process of automatically analyzing the behavior of computer programs regarding a property such as correctness, robustness, safety and liveness. Program analysis focuses on two major areas: program optimization and program correctness. The first focuses on improving the program’s performance while reducing the resource usage while the latter focuses on ensuring that the program does what it is supposed to do.

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of a system with respect to a certain formal specification or property, using formal methods of mathematics. Formal verification is a key incentive for formal specification of systems, and is at the core of formal methods. It represents an important dimension of analysis and verification in electronic design automation and is one approach to software verification. The use of formal verification enables the highest Evaluation Assurance Level (EAL7) in the framework of common criteria for computer security certification.

Code review is a software quality assurance activity in which one or more people check a program, mainly by viewing and reading parts of its source code, either after implementation or as an interruption of implementation. At least one of the persons must not have authored the code. The persons performing the checking, excluding the author, are called "reviewers".

In computer science, an abstract state machine (ASM) is a state machine operating on states that are arbitrary data structures.

Software assurance (SwA) is a critical process in software development that ensures the reliability, safety, and security of software products. It involves a variety of activities, including requirements analysis, design reviews, code inspections, testing, and formal verification. One crucial component of software assurance is secure coding practices, which follow industry-accepted standards and best practices, such as those outlined by the Software Engineering Institute (SEI) in their CERT Secure Coding Standards (SCS).

Parasoft is an independent software vendor specializing in automated software testing and application security with headquarters in Monrovia, California. It was founded in 1987 by four graduates of the California Institute of Technology who planned to commercialize the parallel computing software tools they had been working on for the Caltech Cosmic Cube, which was the first working hypercube computer built.

In computing, compiler correctness is the branch of computer science that deals with trying to show that a compiler behaves according to its language specification. Techniques include developing the compiler using formal methods and using rigorous testing on an existing compiler.

Frama-C stands for Framework for Modular Analysis of C programs. Frama-C is a set of interoperable program analyzers for C programs. Frama-C has been developed by the French Commissariat à l'Énergie Atomique et aux Énergies Alternatives (CEA-List) and Inria. It has also received funding from the Core Infrastructure Initiative. Frama-C, as a static analyzer, inspects programs without executing them. Despite its name, the software is not related to the French project Framasoft.

LDRA is a provider of software analysis, test, and requirements traceability tools for the Public and Private sectors. It is a pioneer in static and dynamic software analysis.

Polyspace is a static code analysis tool for large-scale analysis by abstract interpretation to detect, or prove the absence of, certain run-time errors in source code for the C, C++, and Ada programming languages. The tool also checks source code for adherence to appropriate code standards.

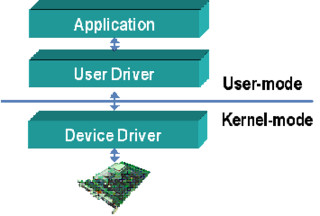

Device drivers are programs which allow software or higher-level computer programs to interact with a hardware device. These software components act as a link between the devices and the operating systems, communicating with each of these systems and executing commands. They provide an abstraction layer for the software above and also mediate the communication between the operating system kernel and the devices below.

MALPAS is a software toolset that provides a means of investigating and proving the correctness of software by applying a rigorous form of static program analysis. The tool uses directed graphs and regular algebra to represent the program under analysis. Using the automated tools in MALPAS an analyst can describe the structure of a program, classify the use made of data and provide the information relationships between input and output data. It also supports a formal proof that the code meets its specification.

Astrée is a static analyzer based on abstract interpretation. It analyzes programs written in the programming languages C and C++, and emits an exhaustive list of possible runtime errors and assertion violations. The defect classes covered include divisions by zero, buffer overflows, dereferences of null or dangling pointers, data races, deadlocks, etc. Astrée includes a static taint checker and helps finding cybersecurity vulnerabilities, such as Spectre. It is proprietary software written in the language OCaml.

Parasoft C/C++test is an integrated set of tools for testing C and C++ source code that software developers use to analyze, test, find defects, and measure the quality and security of their applications. It supports software development practices that are part of development testing, including static code analysis, dynamic code analysis, unit test case generation and execution, code coverage analysis, regression testing, runtime error detection, requirements traceability, and code review. It's a commercial tool that supports operation on Linux, Windows, and Solaris platforms as well as support for on-target embedded testing and cross compilers.

Development testing is a software development process that involves synchronized application of a broad spectrum of defect prevention and detection strategies in order to reduce software development risks, time, and costs.

AbsInt is a software-development tools vendor based in Saarbrücken, Germany. The company was founded in 1998 as a technology spin-off from the Department of Programming Languages and Compiler Construction of Prof. Reinhard Wilhelm at Saarland University. AbsInt specializes in software-verification tools based on abstract interpretation. Its tools are used worldwide by Fortune 500 companies, educational institutions, government agencies and startups.

ECLAIR is a commercial static code analysis tool developed by BUGSENG, LLC for automatic analysis, verification, testing and transformation of C and C++ programs.

CodeSonar is a static code analysis tool from CodeSecure, Inc. CodeSonar is used to find and fix bugs and security vulnerabilities in source and binary code. It performs whole-program, inter-procedural analysis with abstract interpretation on C, C++, C#, Java, as well as x86 and ARM binary executables and libraries. CodeSonar is typically used by teams developing or assessing software to track their quality or security weaknesses. CodeSonar supports Linux, BSD, FreeBSD, NetBSD, MacOS and Windows hosts and embedded operating systems and compilers.

References

- ↑ Wichmann, B. A.; Canning, A. A.; Clutterbuck, D. L.; Winsbarrow, L. A.; Ward, N. J.; Marsh, D. W. R. (Mar 1995). "Industrial Perspective on Static Analysis" (PDF). Software Engineering Journal. 10 (2): 69–75. doi:10.1049/sej.1995.0010. Archived from the original (PDF) on 2011-09-27.

- ↑ Egele, Manuel; Scholte, Theodoor; Kirda, Engin; Kruegel, Christopher (2008-03-05). "A survey on automated dynamic malware-analysis techniques and tools". ACM Computing Surveys. 44 (2): 6:1–6:42. doi:10.1145/2089125.2089126. ISSN 0360-0300. S2CID 1863333.

- ↑ Khatiwada, Saket; Tushev, Miroslav; Mahmoud, Anas (2018-01-01). "Just enough semantics: An information theoretic approach for IR-based software bug localization". Information and Software Technology. 93: 45–57. doi:10.1016/j.infsof.2017.08.012.

- ↑ "Software Quality Objectives for Source Code" Archived 2015-06-04 at the Wayback Machine (PDF). Proceedings: Embedded Real Time Software and Systems 2010 Conference, ERTS2010.org, Toulouse, France: Patrick Briand, Martin Brochet, Thierry Cambois, Emmanuel Coutenceau, Olivier Guetta, Daniel Mainberte, Frederic Mondot, Patrick Munier, Loic Noury, Philippe Spozio, Frederic Retailleau.

- ↑ Improving Software Security with Precise Static and Runtime Analysis Archived 2011-06-05 at the Wayback Machine (PDF), Benjamin Livshits, section 7.3 "Static Techniques for Security". Stanford doctoral thesis, 2006.

- ↑ FDA (2010-09-08). "Infusion Pump Software Safety Research at FDA". Food and Drug Administration. Archived from the original on 2010-09-01. Retrieved 2010-09-09.

- ↑ Computer based safety systems - technical guidance for assessing software aspects of digital computer based protection systems, "Computer based safety systems" (PDF). Archived from the original (PDF) on January 4, 2013. Retrieved May 15, 2013.

- ↑ Position Paper CAST-9. Considerations for Evaluating Safety Engineering Approaches to Software Assurance Archived 2013-10-06 at the Wayback Machine // FAA, Certification Authorities Software Team (CAST), January, 2002: "Verification. A combination of both static and dynamic analyses should be specified by the applicant/developer and applied to the software."

- ↑ VDC Research (2012-02-01). "Automated Defect Prevention for Embedded Software Quality". VDC Research. Archived from the original on 2012-04-11. Retrieved 2012-04-10.

- ↑ Prause, Christian R., René Reiners, and Silviya Dencheva. "Empirical study of tool support in highly distributed research projects." Global Software Engineering (ICGSE), 2010 5th IEEE International Conference on. IEEE, 2010 http://ieeexplore.ieee.org/ielx5/5581168/5581493/05581551.pdf

- ↑ M. Howard and S. Lipner. The Security Development Lifecycle: SDL: A Process for Developing Demonstrably More Secure Software. Microsoft Press, 2006. ISBN 978-0735622142

- ↑ Achim D. Brucker and Uwe Sodan. Deploying Static Application Security Testing on a Large Scale Archived 2014-10-21 at the Wayback Machine . In GI Sicherheit 2014. Lecture Notes in Informatics, 228, pages 91-101, GI, 2014.

- ↑ "OMG Whitepaper | CISQ - Consortium for Information & Software Quality" (PDF). Archived (PDF) from the original on 2013-12-28. Retrieved 2013-10-18.

- ↑ Vijay D’Silva; et al. (2008). "A Survey of Automated Techniques for Formal Software Verification" (PDF). Transactions On CAD. Archived (PDF) from the original on 2016-03-04. Retrieved 2015-05-11.

- ↑ Jones, Paul (2010-02-09). "A Formal Methods-based verification approach to medical device software analysis". Embedded Systems Design. Archived from the original on July 10, 2011. Retrieved 2010-09-09.

- 1 2 "Learning from other's mistakes: Data-driven code analysis". www.slideshare.net. 13 April 2015.

- ↑ Oh, Hakjoo; Yang, Hongseok; Yi, Kwangkeun (2015). "Learning a strategy for adapting a program analysis via bayesian optimisation". Proceedings of the 2015 ACM SIGPLAN International Conference on Object-Oriented Programming, Systems, Languages, and Applications - OOPSLA 2015. pp. 572–588. doi:10.1145/2814270.2814309. ISBN 9781450336895. S2CID 13940725.

- ↑ Logozzo, Francesco; Ball, Thomas (2012-11-15). "Modular and verified automatic program repair". ACM SIGPLAN Notices. 47 (10): 133–146. doi:10.1145/2398857.2384626. ISSN 0362-1340.