In computing, a compiler is a computer program that translates computer code written in one programming language into another language. The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language to create an executable program.

In computing, an optimizing compiler is a compiler that tries to minimize or maximize some attributes of an executable computer program. Common requirements are to minimize a program's execution time, memory footprint, storage size, and power consumption.

In computer science, program analysis is the process of automatically analyzing the behavior of computer programs regarding a property such as correctness, robustness, safety and liveness. Program analysis focuses on two major areas: program optimization and program correctness. The first focuses on improving the program’s performance while reducing the resource usage while the latter focuses on ensuring that the program does what it is supposed to do.

In computing, just-in-time (JIT) compilation is compilation during execution of a program rather than before execution. This may consist of source code translation but is more commonly bytecode translation to machine code, which is then executed directly. A system implementing a JIT compiler typically continuously analyses the code being executed and identifies parts of the code where the speedup gained from compilation or recompilation would outweigh the overhead of compiling that code.

Valgrind is a programming tool for memory debugging, memory leak detection, and profiling.

In compiler theory, dead-code elimination is a compiler optimization to remove dead code. Removing such code has several benefits: it shrinks program size, an important consideration in some contexts, it reduces resource usage such as the number of bytes to be transferred and it allows the running program to avoid executing irrelevant operations, which reduces its running time. It can also enable further optimizations by simplifying program structure. Dead code includes code that can never be executed, and code that only affects dead variables, that is, irrelevant to the program.

In compiler optimization, register allocation is the process of assigning local automatic variables and expression results to a limited number of processor registers.

SIGPLAN is the Association for Computing Machinery's Special Interest Group on programming languages.

Dynamic program analysis is analysis of computer software that involves executing the program in question. Dynamic program analysis includes familiar techniques from software engineering such as unit testing, debugging, and measuring code coverage, but also includes lesser-known techniques like program slicing and invariant inference. Dynamic program analysis is widely applied in security in the form of runtime memory error detection, fuzzing, dynamic symbolic execution, and taint tracking.

Thread Level Speculation (TLS), also known as Speculative Multi-threading, or Speculative Parallelization, is a technique to speculatively execute a section of computer code that is anticipated to be executed later in parallel with the normal execution on a separate independent thread. Such a speculative thread may need to make assumptions about the values of input variables. If these prove to be invalid, then the portions of the speculative thread that rely on these input variables will need to be discarded and squashed. If the assumptions are correct the program can complete in a shorter time provided the thread was able to be scheduled efficiently.

In the context of computer programming, instrumentation refers to the measure of a product's performance, in order to diagnose errors and to write trace information. Instrumentation can be of two types: source instrumentation and binary instrumentation.

Gprof is a performance analysis tool for Unix applications. It used a hybrid of instrumentation and sampling and was created as an extended version of the older "prof" tool. Unlike prof, gprof is capable of limited call graph collecting and printing.

Profile-guided optimization, also known as profile-directed feedback (PDF), and feedback-directed optimization (FDO) is a compiler optimization technique in computer programming that uses profiling to improve program runtime performance.

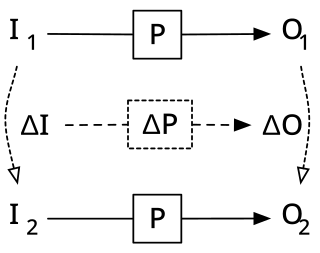

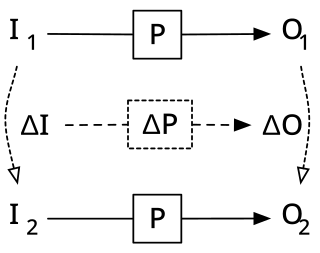

Incremental computing, also known as incremental computation, is a software feature which, whenever a piece of data changes, attempts to save time by only recomputing those outputs which depend on the changed data. When incremental computing is successful, it can be significantly faster than computing new outputs naively. For example, a spreadsheet software package might use incremental computation in its recalculation feature, to update only those cells containing formulas which depend on the changed cells.

In computer science, empirical algorithmics is the practice of using empirical methods to study the behavior of algorithms. The practice combines algorithm development and experimentation: algorithms are not just designed, but also implemented and tested in a variety of situations. In this process, an initial design of an algorithm is analyzed so that the algorithm may be developed in a stepwise manner.

In computer programming and software development, debugging is the process of finding and resolving bugs within computer programs, software, or systems.

Pin is a platform for creating analysis tools. A pin tool comprises instrumentation, analysis and callback routines. Instrumentation routines are called when code that has not yet been recompiled is about to be run, and enable the insertion of analysis routines. Analysis routines are called when the code associated with them is run. Callback routines are only called when specific conditions are met, or when a certain event has occurred. Pin provides an extensive application programming interface (API) for instrumentation at different abstraction levels, from one instruction to an entire binary module. It also supports callbacks for many events such as library loads, system calls, signals/exceptions and thread creation events.

Tracing just-in-time compilation is a technique used by virtual machines to optimize the execution of a program at runtime. This is done by recording a linear sequence of frequently executed operations, compiling them to native machine code and executing them. This is opposed to traditional just-in-time (JIT) compilers that work on a per-method basis.

DynamoRIO is a BSD-licensed dynamic binary instrumentation framework for the development of dynamic program analysis tools. DynamoRIO targets user space applications under the Android, Linux, and Windows operating systems running on the AArch32, IA-32, and x86-64 instruction set architectures.