Related Research Articles

In computer science, functional programming is a programming paradigm where programs are constructed by applying and composing functions. It is a declarative programming paradigm in which function definitions are trees of expressions that map values to other values, rather than a sequence of imperative statements which update the running state of the program.

Static program analysis is the analysis of computer software that is performed without actually executing programs, in contrast with dynamic analysis, which is analysis performed on programs while they are executing. In most cases the analysis is performed on some version of the source code, and in the other cases, some form of the object code.

In programming languages, a type system is a logical system comprising a set of rules that assigns a property called a type to the various constructs of a computer program, such as variables, expressions, functions or modules. These types formalize and enforce the otherwise implicit categories the programmer uses for algebraic data types, data structures, or other components. The main purpose of a type system is to reduce possibilities for bugs in computer programs by defining interfaces between different parts of a computer program, and then checking that the parts have been connected in a consistent way. This checking can happen statically, dynamically, or as a combination of both. Type systems have other purposes as well, such as expressing business rules, enabling certain compiler optimizations, allowing for multiple dispatch, providing a form of documentation, etc.

In programming languages and type theory, polymorphism is the provision of a single interface to entities of different types or the use of a single symbol to represent multiple different types.The concept is borrowed from a principle in biology where an organism or species can have many different forms or stages.

Type inference refers to the automatic detection of the type of an expression in a formal language. These include programming languages and mathematical type systems, but also natural languages in some branches of computer science and linguistics.

In computing, an effect system is a formal system which describes the computational effects of computer programs, such as side effects. An effect system can be used to provide a compile-time check of the possible effects of the program.

Matthias Felleisen is a German-American computer science professor and author. He grew up in Germany and immigrated to the US when he was 21 years old. He received his PhD from Indiana University under the direction of Daniel P. Friedman.

In programming languages and type theory, parametric polymorphism is a way to make a language more expressive, while still maintaining full static type-safety. Using parametric polymorphism, a function or a data type can be written generically so that it can handle values identically without depending on their type. Such functions and data types are called generic functions and generic datatypes respectively and form the basis of generic programming.

In computer science, a type class is a type system construct that supports ad hoc polymorphism. This is achieved by adding constraints to type variables in parametrically polymorphic types. Such a constraint typically involves a type class T and a type variable a, and means that a can only be instantiated to a type whose members support the overloaded operations associated with T.

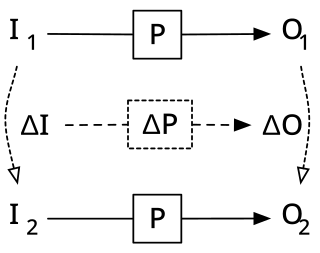

Incremental computing, also known as incremental computation, is a software feature which, whenever a piece of data changes, attempts to save time by only recomputing those outputs which depend on the changed data. When incremental computing is successful, it can be significantly faster than computing new outputs naively. For example, a spreadsheet software package might use incremental computation in its recalculation feature, to update only those cells containing formulas which depend on the changed cells.

In type theory, bounded quantification refers to universal or existential quantifiers which are restricted ("bounded") to range only over the subtypes of a particular type. Bounded quantification is an interaction of parametric polymorphism with subtyping. Bounded quantification has traditionally been studied in the functional setting of System F<:, but is available in modern object-oriented languages supporting parametric polymorphism (generics) such as Java, C# and Scala.

In computer science, polymorphic recursion refers to a recursive parametrically polymorphic function where the type parameter changes with each recursive invocation made, instead of staying constant. Type inference for polymorphic recursion is equivalent to semi-unification and therefore undecidable and requires the use of a semi-algorithm or programmer supplied type annotations.

In computing, algorithmic skeletons, or parallelism patterns, are a high-level parallel programming model for parallel and distributed computing.

In computer science, region-based memory management is a type of memory management in which each allocated object is assigned to a region. A region, also called a zone, arena, area, or memory context, is a collection of allocated objects that can be efficiently reallocated or deallocated all at once. Like stack allocation, regions facilitate allocation and deallocation of memory with low overhead; but they are more flexible, allowing objects to live longer than the stack frame in which they were allocated. In typical implementations, all objects in a region are allocated in a single contiguous range of memory addresses, similarly to how stack frames are typically allocated.

A distributed operating system is system software over a collection of independent, networked, communicating, and physically separate computational nodes. They handle jobs which are serviced by multiple CPUs. Each individual node holds a specific software subset of the global aggregate operating system. Each subset is a composite of two distinct service provisioners. The first is a ubiquitous minimal kernel, or microkernel, that directly controls that node's hardware. Second is a higher-level collection of system management components that coordinate the node's individual and collaborative activities. These components abstract microkernel functions and support user applications.

Haskell is a general-purpose, statically typed, purely functional programming language with type inference and lazy evaluation. Designed for teaching, research and industrial application, Haskell has pioneered a number of advanced programming language features such as type classes, which enable type-safe operator overloading. Haskell's main implementation is the Glasgow Haskell Compiler (GHC). It is named after logician Haskell Curry.

Extended static checking (ESC) is a collective name in computer science for a range of techniques for statically checking the correctness of various program constraints. ESC can be thought of as an extended form of type checking. As with type checking, ESC is performed automatically at compile time. This distinguishes it from more general approaches to the formal verification of software, which typically rely on human-generated proofs. Furthermore, it promotes practicality over soundness, in that it aims to dramatically reduce the number of false positives at the cost of introducing some false negatives. ESC can identify a range of errors which are currently outside the scope of a type checker, including division by zero, array out of bounds, integer overflow and null dereferences.

Gradual typing is a type system in which some variables and expressions may be given types and the correctness of the typing is checked at compile time and some expressions may be left untyped and eventual type errors are reported at runtime. Gradual typing allows software developers to choose either type paradigm as appropriate, from within a single language. In many cases gradual typing is added to an existing dynamic language, creating a derived language allowing but not requiring static typing to be used. In some cases a language uses gradual typing from the start.

Flix is a functional, imperative, and logic programming language developed at Aarhus University, with funding from the Independent Research Fund Denmark, and by a community of open source contributors. The Flix language supports algebraic data types, pattern matching, parametric polymorphism, currying, higher-order functions, extensible records, channel and process-based concurrency, and tail call elimination. Two notable features of Flix are its type and effect system and its support for first-class Datalog constraints.

References

- ↑ Jouvelot, P.; Gifford, D.K. (1988). "The FX-87 Interpreter". Proceedings. 1988 International Conference on Computer Languages. pp. 65–72. doi:10.1109/ICCL.1988.13044. ISBN 978-0-8186-0874-2. S2CID 27089757.

- ↑ Hammel, Todd; David K. Gifford (September 1988). "FX-87 Performance Measurements: Dataflow Implementation" (PDF). Laboratory for Computer Science, Massachusetts Institute of Technology. Retrieved 8 October 2013.