The RGB color model is an additive color model in which the red, green and blue primary colors of light are added together in various ways to reproduce a broad array of colors. The name of the model comes from the initials of the three additive primary colors, red, green, and blue.

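Gamma correction or gamma is a nonlinear operation used to encode and decode luminance or tristimulus values in video or still image systems. Gamma correction is, in the simplest cases, defined by the following power-law expression:

$$V_{\text{out}} = A\,V_{\text{in}}^{\gamma}$$

where the non-negative input value $V_{\text{in}}$ is raised to the power $\gamma$ and multiplied by the constant $A$ to give the output value $V_{\text{out}}$.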
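A power law of the form V_out = A * V_in ** gamma can be sketched in Python as follows. The function names and the default gamma of 2.2 are illustrative assumptions, not values taken from any particular standard.

```python
def gamma_apply(v_in: float, gamma: float, a: float = 1.0) -> float:
    """Apply the power law V_out = A * V_in ** gamma to a non-negative value."""
    if v_in < 0.0:
        raise ValueError("the power law is only defined here for non-negative input")
    return a * v_in ** gamma


def gamma_encode(linear: float, gamma: float = 2.2) -> float:
    """Encode a linear value with exponent 1/gamma (assumed default: 2.2)."""
    return gamma_apply(linear, 1.0 / gamma)


def gamma_decode(encoded: float, gamma: float = 2.2) -> float:
    """Decode by applying the inverse exponent, gamma."""
    return gamma_apply(encoded, gamma)


if __name__ == "__main__":
    mid_grey = 0.5
    encoded = gamma_encode(mid_grey)
    print(encoded, gamma_decode(encoded))
```

Encoding with an exponent below 1 brightens mid-tones (the encoded value for 0.5 is larger than 0.5), and decoding with the reciprocal exponent recovers the original value up to floating-point error.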

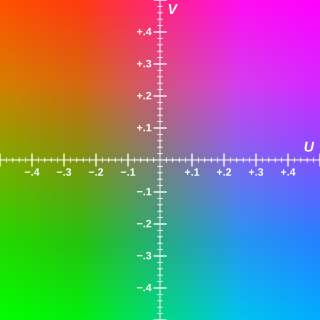

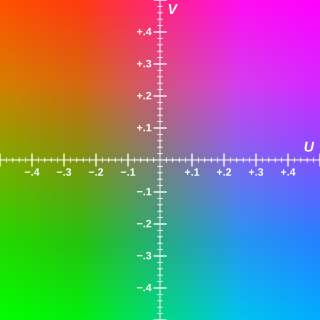

Y′UV, also written YUV, is the color model found in the PAL analogue color TV standard. A color is described as a Y′ component (luma) and two chroma components U and V. The prime symbol (') denotes that the luma is calculated from gamma-corrected RGB input and that it is different from true luminance. Today, the term YUV is commonly used in the computer industry to describe colorspaces that are encoded using YCbCr.
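As a rough illustration of how luma and the two chroma components relate to gamma-corrected RGB, here is a minimal Python sketch. The weights (0.299, 0.587, 0.114) and the scale factors (0.492, 0.877) are the commonly cited analogue values and are an assumption here, since the text above does not state them; `rgb_to_yuv` is a hypothetical helper name.

```python
def rgb_to_yuv(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert gamma-corrected R'G'B' in [0, 1] to Y'UV.

    Assumed constants: BT.601-style luma weights and the classic
    U/V scale factors for the blue and red colour differences.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma: weighted sum of R', G', B'
    u = 0.492 * (b - y)                    # scaled blue difference
    v = 0.877 * (r - y)                    # scaled red difference
    return y, u, v


if __name__ == "__main__":
    print(rgb_to_yuv(1.0, 1.0, 1.0))  # white: luma near 1, chroma near 0
    print(rgb_to_yuv(1.0, 0.0, 0.0))  # pure red: positive V, negative U
```

Any neutral grey (equal R′, G′, B′) yields zero chroma, which is why a monochrome receiver can use the Y′ signal alone.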

RGB color spaces are additive colorimetric color spaces that use the RGB color model as part of their absolute color space definition.

Color management is the process of ensuring consistent and accurate colors across various devices, such as monitors, printers, and cameras. It involves the use of color profiles, which are standardized descriptions of how colors should be displayed or reproduced.

Digital Picture Exchange (DPX) is a common file format for digital intermediate and visual effects work and is a SMPTE standard. The file format is most commonly used to represent the density of each colour channel of a scanned negative film in an uncompressed "logarithmic" image, where the gamma of the original camera negative is preserved as captured by the film scanner. For this reason, DPX is the format chosen worldwide for still-frame storage in most digital intermediate post-production facilities and film labs. Other common video formats are supported as well, from video to purely digital ones, making DPX suitable for almost any raster digital imaging application. DPX provides a great deal of flexibility in storing colour information, colour spaces and colour planes for exchange between production facilities. Multiple forms of packing and alignment are possible. The DPX specification allows for a wide variety of metadata to further clarify the information stored within each file.

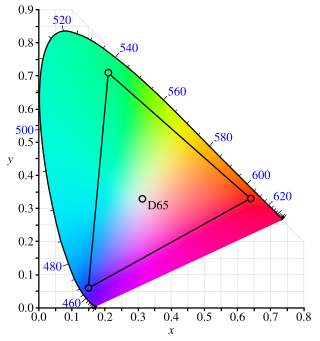

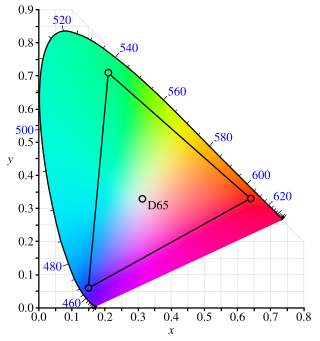

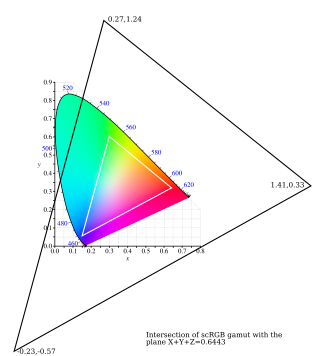

In color reproduction and colorimetry, a gamut, or color gamut, is a convex set containing the colors that can be accurately represented, i.e. reproduced by an output device or measured by an input device. Devices with a larger gamut can represent more colors. Similarly, gamut may also refer to the colors within a defined color space, which is not linked to a specific device. A trichromatic gamut is often visualized as a color triangle. A less common usage defines gamut as the subset of colors contained within an image, scene or video.
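The color-triangle view of a trichromatic gamut can be sketched as a point-in-triangle test on chromaticity coordinates. The primaries below are the commonly published sRGB/BT.709 xy chromaticities and are an assumption of this example, as are the helper names.

```python
Point = tuple[float, float]

# Assumed xy chromaticities of the sRGB / BT.709 red, green and blue primaries.
SRGB_PRIMARIES: tuple[Point, Point, Point] = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))


def _cross(o: Point, a: Point, b: Point) -> float:
    """Z component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_gamut_triangle(xy: Point, primaries: tuple[Point, Point, Point]) -> bool:
    """Return True if chromaticity xy lies inside or on the triangle of primaries."""
    r, g, b = primaries
    d1, d2, d3 = _cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    # The point is inside when it is on the same side of all three edges.
    return not (has_negative and has_positive)


if __name__ == "__main__":
    print(in_gamut_triangle((0.3127, 0.3290), SRGB_PRIMARIES))  # D65 white point
    print(in_gamut_triangle((0.08, 0.83), SRGB_PRIMARIES))      # very saturated green
```

This checks chromaticity only. A full gamut is a volume that also depends on luminance, so the triangle is a two-dimensional simplification.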

YCbCr, Y′CbCr, or Y Pb/Cb Pr/Cr, also written as YCBCR or Y′CBCR, is a family of color spaces used as a part of the color image pipeline in video and digital photography systems. Y′ is the luma component and CB and CR are the blue-difference and red-difference chroma components. Y′ (with prime) is distinguished from Y, which is luminance: the prime indicates that light intensity is nonlinearly encoded based on gamma-corrected RGB primaries.
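The relationship between luma and the blue-difference and red-difference components can be sketched with a generic conversion parameterised by the luma coefficients Kr and Kb. The defaults (0.299, 0.114) are the BT.601 values and are an assumption of this example, as is the choice of a normalised full-range form in which Cb and Cr span [-0.5, 0.5]; real systems add offsets and quantise to integers.

```python
def rgb_to_ycbcr(r: float, g: float, b: float,
                 kr: float = 0.299, kb: float = 0.114) -> tuple[float, float, float]:
    """Gamma-corrected R'G'B' in [0, 1] to normalised Y'CbCr."""
    kg = 1.0 - kr - kb
    y = kr * r + kg * g + kb * b        # luma
    cb = (b - y) / (2.0 * (1.0 - kb))   # blue difference, scaled to [-0.5, 0.5]
    cr = (r - y) / (2.0 * (1.0 - kr))   # red difference, scaled to [-0.5, 0.5]
    return y, cb, cr


def ycbcr_to_rgb(y: float, cb: float, cr: float,
                 kr: float = 0.299, kb: float = 0.114) -> tuple[float, float, float]:
    """Inverse of rgb_to_ycbcr for the same Kr and Kb."""
    kg = 1.0 - kr - kb
    r = y + 2.0 * (1.0 - kr) * cr
    b = y + 2.0 * (1.0 - kb) * cb
    g = (y - kr * r - kb * b) / kg
    return r, g, b


if __name__ == "__main__":
    ycc = rgb_to_ycbcr(0.2, 0.4, 0.6)
    print(ycc, ycbcr_to_rgb(*ycc))
```

Passing `kr=0.2126, kb=0.0722` gives the BT.709 variant of the same conversion.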

sRGB is a standard RGB color space that HP and Microsoft created cooperatively in 1996 to use on monitors, printers, and the World Wide Web. It was subsequently standardized by the International Electrotechnical Commission (IEC) as IEC 61966-2-1:1999. sRGB is the current defined standard colorspace for the web, and it is usually the assumed colorspace for images that are neither tagged for a colorspace nor have an embedded color profile.
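For readers who want to see what decoding an untagged, assumed-sRGB image involves, the following Python sketch implements the piecewise sRGB transfer function. The constants are the commonly published ones and are not quoted in the text above, so treat them as an assumption of this example; the function names are illustrative.

```python
def srgb_encode(linear: float) -> float:
    """Linear light in [0, 1] to a nonlinear sRGB value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear                       # linear segment near black
    return 1.055 * linear ** (1.0 / 2.4) - 0.055    # power-law segment


def srgb_decode(encoded: float) -> float:
    """Nonlinear sRGB value in [0, 1] back to linear light."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


if __name__ == "__main__":
    print(srgb_encode(0.5))  # roughly 0.735
    print(srgb_decode(0.5))  # roughly 0.214
```

The short linear segment avoids the infinite slope a pure power law would have at zero.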

The Adobe RGB (1998) color space or opRGB is a color space developed by Adobe Inc. in 1998. It was designed to encompass most of the colors achievable on CMYK color printers, but using RGB primary colors on a device such as a computer display. The Adobe RGB (1998) color space encompasses roughly 30% of the visible colors specified by the CIELAB color space – improving upon the gamut of the sRGB color space, primarily in cyan-green hues. It was subsequently standardized by the IEC as IEC 61966-2-5:1999 under the name opRGB and is used in HDMI.

The ProPhoto RGB color space, also known as ROMM RGB, is an output-referred RGB color space developed by Kodak. It offers an especially large gamut designed with photographic output in mind. The ProPhoto RGB color space encompasses over 90% of possible surface colors in the CIE L*a*b* color space, and 100% of likely occurring real-world surface colors documented by Michael Pointer in 1980, making ProPhoto even larger than the Wide-gamut RGB color space. The ProPhoto RGB primaries were also chosen to minimize hue rotations associated with non-linear tone scale operations. One downside of this color space is that approximately 13% of the representable colors are imaginary colors, which do not correspond to any visible color.

The CIE 1931 color spaces are the first defined quantitative links between distributions of wavelengths in the electromagnetic visible spectrum, and physiologically perceived colors in human color vision. The mathematical relationships that define these color spaces are essential tools for color management, important when dealing with color inks, illuminated displays, and recording devices such as digital cameras. The system was designed in 1931 by the "Commission Internationale de l'éclairage", known in English as the International Commission on Illumination.
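One of the mathematical relationships in question is the projection from XYZ tristimulus values to xy chromaticity coordinates. The Python sketch below shows that projection together with a linear-sRGB-to-XYZ matrix. The matrix is the commonly published four-decimal D65 version and is an assumption of this example, as are the function names.

```python
# Assumed linear sRGB (D65) to CIE XYZ matrix, rounded to four decimals.
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def linear_srgb_to_xyz(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Multiply a linear-light RGB triple by the 3x3 matrix above."""
    x, y, z = (row[0] * r + row[1] * g + row[2] * b for row in SRGB_TO_XYZ)
    return x, y, z


def xyz_to_xy(x: float, y: float, z: float) -> tuple[float, float]:
    """Project XYZ onto the chromaticity plane: x = X/(X+Y+Z), y = Y/(X+Y+Z)."""
    total = x + y + z
    if total == 0.0:
        raise ValueError("chromaticity is undefined for X + Y + Z = 0")
    return x / total, y / total


if __name__ == "__main__":
    print(xyz_to_xy(*linear_srgb_to_xyz(1.0, 1.0, 1.0)))  # close to D65: (0.3127, 0.3290)
    print(xyz_to_xy(*linear_srgb_to_xyz(1.0, 0.0, 0.0)))  # close to the red primary: (0.64, 0.33)
```

The middle row of the matrix gives the luminance Y, so its entries sum to 1 for reference white.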

xvYCC or extended-gamut YCbCr is a color space that can be used in the video electronics of television sets to support a gamut 1.8 times as large as that of the sRGB color space. xvYCC was proposed by Sony, specified by the IEC in October 2005 and published in January 2006 as IEC 61966-2-4. xvYCC extends the ITU-R BT.709 tone curve by defining over-ranged values. xvYCC-encoded video retains the same color primaries and white point as BT.709, and uses either a BT.601 or BT.709 RGB-to-YCC conversion matrix and encoding. This allows it to travel through existing digital limited range YCC data paths, and any colors within the normal gamut will be compatible. It works by allowing negative RGB inputs and expanding the output chroma. These are used to encode more saturated colors by using a greater part of the RGB values that can be encoded in the YCbCr signal compared with those used in Broadcast Safe Level. The extra-gamut colors can then be displayed by a device whose underlying technology is not limited by the standard primaries.

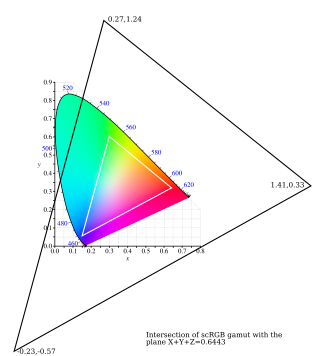

scRGB is a wide color gamut RGB color space created by Microsoft and HP that uses the same color primaries and white/black points as the sRGB color space but allows coordinates below zero and greater than one. The full range is −0.5 through just less than +7.5.
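The quoted range can be reproduced with a simple 16-bit integer mapping. The sketch below assumes that a component value v is stored as the unsigned code 8192 * v + 4096; this mapping is not stated in the text above and is an assumption of the example, chosen because code 0 then corresponds to -0.5 and code 65535 to just under +7.5. The function names are illustrative.

```python
SCALE = 8192    # assumed code values per unit of component value
OFFSET = 4096   # assumed code value that represents 0.0
MAX_CODE = 65535


def scrgb16_encode(value: float) -> int:
    """Map a component value to a 16-bit code, clamping out-of-range input."""
    code = int(round(SCALE * value + OFFSET))
    return max(0, min(MAX_CODE, code))


def scrgb16_decode(code: int) -> float:
    """Map a 16-bit code back to a component value."""
    return (code - OFFSET) / SCALE


if __name__ == "__main__":
    print(scrgb16_decode(0))         # -0.5
    print(scrgb16_decode(MAX_CODE))  # just below 7.5
    print(scrgb16_encode(1.0))       # 12288
```

Under this assumption the ordinary sRGB range [0, 1] occupies only codes 4096 to 12288, leaving the rest for values below zero and above one.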

PGF is a wavelet-based bitmapped image format that employs lossless and lossy data compression. PGF was created to improve upon and replace the JPEG format. It was developed at the same time as JPEG 2000 but with a focus on speed over compression ratio.

Rec. 709, also known as Rec.709, BT.709, and ITU 709, is a standard developed by ITU-R for image encoding and signal characteristics of high-definition television.
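As an illustration of the kind of signal characteristic such a standard specifies, the following Python sketch implements the opto-electronic transfer function commonly attributed to Rec. 709. The constants (4.5, 0.018, 1.099, 0.45, 0.099) are not given in the text above and are an assumption of this example; `rec709_oetf` is a hypothetical name.

```python
def rec709_oetf(linear: float) -> float:
    """Scene-linear light in [0, 1] to a nonlinear Rec. 709 signal in [0, 1]."""
    if linear < 0.018:
        return 4.5 * linear                   # linear segment near black
    return 1.099 * linear ** 0.45 - 0.099     # power-law segment


if __name__ == "__main__":
    for level in (0.0, 0.01, 0.18, 1.0):
        print(level, rec709_oetf(level))
```

The two segments meet at 0.018 with almost identical values, so the curve is effectively continuous.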

A color space is a specific organization of colors. In combination with color profiling supported by various physical devices, it supports reproducible representations of color – whether such representation entails an analog or a digital representation. A color space may be arbitrary, i.e. with physically realized colors assigned to a set of physical color swatches with corresponding assigned color names, or structured with mathematical rigor. A "color space" is a useful conceptual tool for understanding the color capabilities of a particular device or digital file. When trying to reproduce color on another device, color spaces can show whether shadow/highlight detail and color saturation can be retained, and by how much either will be compromised.

DCI-P3 is a color space first defined in 2005 as part of the Digital Cinema Initiative, to be used for digital theatrical motion picture distribution (DCDM). Display P3 is a variant developed by Apple Inc. for wide-gamut displays.

ICTCP, ICtCp, or ITP is a color representation format specified in the Rec. ITU-R BT.2100 standard that is used as a part of the color image pipeline in video and digital photography systems for high dynamic range (HDR) and wide color gamut (WCG) imagery. It was developed by Dolby Laboratories from the IPT color space by Ebner and Fairchild. The format is derived from an associated RGB color space by a coordinate transformation that includes two matrix transformations and an intermediate nonlinear transfer function that is informally known as gamma pre-correction. The transformation produces three signals called I, CT, and CP. The ICTCP transformation can be used with RGB signals derived from either the perceptual quantizer (PQ) or hybrid log–gamma (HLG) nonlinearity functions, but is most commonly associated with the PQ function.
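The two matrix steps and the intermediate nonlinearity can be sketched in Python for the PQ case. The integer matrix coefficients (over 4096) and the PQ constants below are the commonly published ones and are an assumption of this example, since the text above does not list them. The input is assumed to be linear BT.2020 RGB display light in cd/m², and the function names are illustrative.

```python
# Assumed PQ constants (inverse EOTF), expressed as exact fractions.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Map absolute luminance (0 to 10000 cd/m^2) to a PQ signal in [0, 1]."""
    y = max(nits, 0.0) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


def rgb2020_to_ictcp(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Linear BT.2020 RGB (cd/m^2) to ICtCp using the PQ nonlinearity."""
    # First matrix: RGB to LMS cone-like responses.
    l = (1688 * r + 2146 * g + 262 * b) / 4096
    m = (683 * r + 2951 * g + 462 * b) / 4096
    s = (99 * r + 309 * g + 3688 * b) / 4096
    # Intermediate nonlinear transfer function.
    lp, mp, sp = pq_encode(l), pq_encode(m), pq_encode(s)
    # Second matrix: nonlinear L'M'S' to I, Ct, Cp.
    i = 0.5 * lp + 0.5 * mp
    ct = (6610 * lp - 13613 * mp + 7003 * sp) / 4096
    cp = (17933 * lp - 17390 * mp - 543 * sp) / 4096
    return i, ct, cp


if __name__ == "__main__":
    print(rgb2020_to_ictcp(100.0, 100.0, 100.0))  # neutral grey: Ct and Cp near 0
```

Each row of the first matrix sums to 4096 and each chroma row of the second sums to 0, so neutral inputs map to Ct = Cp = 0 and I carries the PQ-encoded intensity.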