Related Research Articles

In software quality assurance, performance testing is in general a testing practice performed to determine how a system performs in terms of responsiveness and stability under a particular workload. It can also serve to investigate, measure, validate or verify other quality attributes of the system, such as scalability, reliability and resource usage.
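
As a rough illustration of measuring responsiveness under a workload, the sketch below times repeated calls to an operation and summarizes the latencies. The function names and the `sample_workload` stand-in are hypothetical, chosen only for this example; a real performance test would drive the actual system under test.

```python
import statistics
import time


def measure_latencies(operation, requests=200):
    """Call `operation` repeatedly and return per-call latencies in seconds."""
    latencies = []
    for _ in range(requests):
        start = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies):
    """Return median, 95th-percentile, and maximum latency."""
    ordered = sorted(latencies)
    # Nearest-rank style index for the 95th percentile.
    p95_index = max(0, int(round(0.95 * len(ordered))) - 1)
    return {
        "median_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
        "max_s": ordered[-1],
    }


def sample_workload():
    """Hypothetical stand-in for the system under test (assumption)."""
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    print(summarize(measure_latencies(sample_workload)))
```

Reporting a high percentile alongside the median is a common choice because occasional slow responses can be invisible in an average.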

An embedded system is a computer system—a combination of a computer processor, computer memory, and input/output peripheral devices—that has a dedicated function within a larger mechanical or electronic system. It is embedded as part of a complete device often including electrical or electronic hardware and mechanical parts. Because an embedded system typically controls physical operations of the machine that it is embedded within, it often has real-time computing constraints. Embedded systems control many devices in common use. In 2009, it was estimated that ninety-eight percent of all microprocessors manufactured were used in embedded systems.

In computing, overclocking is the practice of increasing the clock rate of a computer to exceed that certified by the manufacturer. Commonly, operating voltage is also increased to maintain a component's operational stability at accelerated speeds. Semiconductor devices operated at higher frequencies and voltages increase power consumption and heat. An overclocked device may be unreliable or fail completely if the additional heat load is not removed or power delivery components cannot meet increased power demands. Many device warranties state that overclocking or over-specification voids any warranty, but some manufacturers allow overclocking as long as it is done (relatively) safely.

Underclocking, also known as downclocking, is modifying a computer or electronic circuit's timing settings to run at a lower clock rate than is specified. Underclocking is used to reduce a computer's power consumption, increase battery life, reduce heat emission, and it may also increase the system's stability, lifespan/reliability and compatibility. Underclocking may be implemented by the factory, but many computers and components may be underclocked by the end user.

In computing, stress testing can be applied to either hardware or software. It is used to determine the maximum capability of a computer system and is often used for purposes such as scaling for production use and ensuring reliability and stability. Stress tests typically involve running a large number of resource-intensive processes until the system either crashes or nearly does so.

In computing, the clock rate or clock speed typically refers to the frequency at which the clock generator of a processor can generate pulses, which are used to synchronize the operations of its components, and is used as an indicator of the processor's speed. It is measured in the SI unit of frequency, the hertz (Hz).
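
The relationship between clock rate and the duration of one clock cycle (period = 1 / frequency) can be sketched as follows. The function names are illustrative, not part of any library.

```python
def clock_period_ns(frequency_hz):
    """Return the duration of one clock cycle in nanoseconds."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1e9 / frequency_hz


def cycles_elapsed(duration_s, frequency_hz):
    """Return how many clock cycles occur in `duration_s` seconds."""
    return duration_s * frequency_hz


if __name__ == "__main__":
    # A 450 MHz clock has a period of roughly 2.22 ns.
    print(f"{clock_period_ns(450e6):.2f} ns")
```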

The K6-III was an x86 microprocessor line manufactured by AMD that launched on February 22, 1999. The launch consisted of both 400 and 450 MHz models and was based on the preceding K6-2 architecture. Its improved 256 KB on-chip L2 cache gave it significant improvements in system performance over its predecessor, the K6-2. The K6-III was the last processor officially released for desktop Socket 7 systems; however, later mobile K6-III+ and K6-2+ processors could be run unofficially in certain Socket 7 motherboards if an updated BIOS was made available for a given board. The Pentium III processor from Intel launched 6 days later.

Load testing is the process of putting demand on a structure or system and measuring its response.

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability describes the ability of a system or component to function under stated conditions for a specified period of time. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time.
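
One common way to quantify availability, which the summary above does not itself state, is the steady-state ratio MTBF / (MTBF + MTTR), where MTBF is the mean time between failures and MTTR is the mean time to repair. A minimal sketch under that assumption:

```python
def steady_state_availability(mtbf_hours, mttr_hours):
    """Fraction of time a repairable system is expected to be operational.

    Assumes the common steady-state model: MTBF / (MTBF + MTTR).
    """
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


if __name__ == "__main__":
    # Illustrative figures only: 999 h between failures, 1 h to repair.
    print(steady_state_availability(999, 1))
```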

In the fields of digital electronics and computer hardware, multi-channel memory architecture is a technology that increases the data transfer rate between the DRAM memory and the memory controller by adding more channels of communication between them. Theoretically, this multiplies the data rate by exactly the number of channels present. Dual-channel memory employs two channels. The technique goes back as far as the 1960s, having been used in the IBM System/360 Model 91 and in the CDC 6600.
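
The theoretical scaling described above can be sketched as a peak-bandwidth calculation: transfers per second, times bytes per transfer, times the number of channels. The 64-bit channel width and the DDR3-1600 figures are assumptions for illustration; real-world throughput is lower than this theoretical peak.

```python
def peak_bandwidth_bytes_per_s(transfers_per_s, bus_width_bits=64, channels=1):
    """Theoretical peak memory bandwidth in bytes per second."""
    if transfers_per_s < 0 or bus_width_bits <= 0 or channels < 1:
        raise ValueError("invalid memory configuration")
    bytes_per_transfer = bus_width_bits // 8
    return transfers_per_s * bytes_per_transfer * channels


if __name__ == "__main__":
    # Assumed example: DDR3-1600 (1600 million transfers/s), 64-bit channels.
    single = peak_bandwidth_bytes_per_s(1_600_000_000, channels=1)
    dual = peak_bandwidth_bytes_per_s(1_600_000_000, channels=2)
    print(single / 1e9, "GB/s single-channel")
    print(dual / 1e9, "GB/s dual-channel")
```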

Super PI is a computer program that calculates pi to a specified number of digits after the decimal point, up to a maximum of 32 million. It uses the Gauss–Legendre algorithm and is a Windows port of the program used by Yasumasa Kanada in 1995 to compute pi to 2^32 digits.
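
For readers unfamiliar with the Gauss–Legendre algorithm, the following is a minimal sketch using Python's standard `decimal` module. It is not Super PI's implementation; the function name, the guard-digit count, and the iteration bound are choices made for this example. The iteration roughly doubles the number of correct digits on each pass.

```python
from decimal import Decimal, localcontext


def gauss_legendre_pi(digits):
    """Return pi as a string with `digits` digits after the decimal point."""
    if digits < 1:
        raise ValueError("digits must be at least 1")
    with localcontext() as ctx:
        ctx.prec = digits + 10  # extra guard digits against rounding error
        a = Decimal(1)
        b = Decimal(1) / Decimal(2).sqrt()
        t = Decimal(1) / Decimal(4)
        p = Decimal(1)
        # Correct digits roughly double per pass, so about log2(digits)
        # passes are enough.
        for _ in range(digits.bit_length()):
            a_next = (a + b) / 2
            b = (a * b).sqrt()
            t -= p * (a - a_next) ** 2
            a = a_next
            p *= 2
        pi = (a + b) ** 2 / (4 * t)
        # Keep "3." plus the requested number of digits (truncated).
        return str(pi)[: digits + 2]


if __name__ == "__main__":
    print(gauss_legendre_pi(50))
```

Arbitrary-precision arithmetic is needed because ordinary floating-point numbers carry only about 15 to 17 significant decimal digits.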

Sandy Bridge is the codename for Intel's 32 nm microarchitecture used in the second generation of the Intel Core processors. The Sandy Bridge microarchitecture is the successor to Nehalem and Westmere microarchitecture. Intel demonstrated a Sandy Bridge processor in 2009, and released first products based on the architecture in January 2011 under the Core brand.

Stress testing is a software testing activity that determines the robustness of software by testing beyond the limits of normal operation. Stress testing is particularly important for "mission critical" software, but is used for all types of software. Stress tests commonly put a greater emphasis on robustness, availability, and error handling under a heavy load, than on what would be considered correct behavior under normal circumstances.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. When it comes to high computer performance, one or more of the following factors might be involved:

Haswell is the codename for a processor microarchitecture developed by Intel as the "fourth-generation core" successor to the Ivy Bridge. Intel officially announced CPUs based on this microarchitecture on June 4, 2013, at Computex Taipei 2013, while a working Haswell chip was demonstrated at the 2011 Intel Developer Forum. With Haswell, which uses a 22 nm process, Intel also introduced low-power processors designed for convertible or "hybrid" ultrabooks, designated by the "U" suffix.

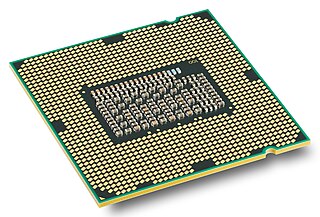

LGA 2011, also called Socket R, is a CPU socket by Intel released on November 14, 2011. It launched along with LGA 1356 to replace its predecessors, LGA 1366 and LGA 1567. While LGA 1356 was designed for dual-processor or low-end servers, LGA 2011 was designed for high-end desktops and high-performance servers. The socket has 2011 protruding pins that touch contact points on the underside of the processor.

The Intel X79 is a Platform Controller Hub (PCH) designed and manufactured by Intel for its LGA 2011 and LGA 2011-1 sockets.

Skylake is Intel's codename for its sixth generation Core microprocessor family that was launched on August 5, 2015, succeeding the Broadwell microarchitecture. Skylake is a microarchitecture redesign using the same 14 nm manufacturing process technology as its predecessor, serving as a tock in Intel's tick–tock manufacturing and design model. According to Intel, the redesign brings greater CPU and GPU performance and reduced power consumption. Skylake CPUs share their microarchitecture with Kaby Lake, Coffee Lake, Cannon Lake, Whiskey Lake, and Comet Lake CPUs.

Ivy Bridge is the codename for Intel's 22 nm microarchitecture used in the third generation of the Intel Core processors. Ivy Bridge is a die shrink of the previous generation's 32 nm Sandy Bridge microarchitecture to a 22 nm process based on FinFET ("3D") Tri-Gate transistors, making it a "tick" in Intel's tick–tock model. The name is also applied more broadly to the Xeon and Core i7 Ivy Bridge-E series of processors released in 2013.

Reliability verification or reliability testing is a method for evaluating the reliability of a product in all the environments it encounters, such as expected use, transportation, and storage, over its specified lifespan. The product is exposed to natural or artificial environmental conditions in order to evaluate its performance under the conditions of actual use, transportation, and storage, and to analyze the degree of influence of environmental factors and their mechanism of action. Environmental test equipment is used to simulate high temperature, low temperature, high humidity, and temperature changes, accelerating the product's response to its use environment in order to verify whether it reaches the quality expected in R&D, design, and manufacturing.
