Definition, most common

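The hyperoperation sequence $\langle H_n(a,b) : n \ge 0 \rangle$ is the sequence of binary operations $H_n \colon \mathbb{N}_0 \times \mathbb{N}_0 \to \mathbb{N}_0$, defined recursively as follows:

$$H_n(a,b) = \begin{cases} b + 1 & \text{if } n = 0 \\ a & \text{if } n = 1 \text{ and } b = 0 \\ 0 & \text{if } n = 2 \text{ and } b = 0 \\ 1 & \text{if } n \ge 3 \text{ and } b = 0 \\ H_{n-1}\bigl(a, H_n(a, b-1)\bigr) & \text{otherwise} \end{cases}$$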

(Note that for n = 0, the binary operation essentially reduces to a unary operation (successor function) by ignoring the first argument.)
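The recursive definition translates almost line for line into code. The following is a minimal Python sketch, assuming non-negative integer arguments; the function name `hyper` is our own illustrative choice, not a library API. It uses plain recursion on the rule $H_n(a,b) = H_{n-1}(a, H_n(a, b-1))$, so it is only practical for very small inputs: both the values and the number of calls explode quickly.

```python
def hyper(n: int, a: int, b: int) -> int:
    """Return H_n(a, b) for non-negative integers n, a, b (plain recursion)."""
    if min(n, a, b) < 0:
        raise ValueError("n, a and b must be non-negative")
    if n == 0:
        return b + 1                  # successor; the first argument is ignored
    if b == 0:
        if n == 1:
            return a                  # H_1(a, 0) = a
        if n == 2:
            return 0                  # H_2(a, 0) = 0
        return 1                      # H_n(a, 0) = 1 for n >= 3
    # Otherwise: H_n(a, b) = H_{n-1}(a, H_n(a, b - 1))
    return hyper(n - 1, a, hyper(n, a, b - 1))


# For n = 0..3 this reproduces successor, addition, multiplication and powers.
for a in range(4):
    for b in range(4):
        assert hyper(0, a, b) == b + 1
        assert hyper(1, a, b) == a + b
        assert hyper(2, a, b) == a * b
        assert hyper(3, a, b) == a ** b
```

For example, `hyper(4, 2, 3)` evaluates $2^{2^2}$ and returns 16.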

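For n = 0, 1, 2, 3, this definition reproduces the basic arithmetic operations of successor (which is a unary operation), addition, multiplication, and exponentiation, respectively, as

$$\begin{aligned} H_0(a,b) &= b + 1 \\ H_1(a,b) &= a + b \\ H_2(a,b) &= a \times b \\ H_3(a,b) &= a^b \end{aligned}$$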

The $H_n$ operations for n ≥ 3 can be written in Knuth's up-arrow notation as $H_n(a,b) = a \uparrow^{n-2} b$.
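Knuth's up-arrow notation can be computed with the same kind of recursion. This is a minimal Python sketch under the assumption of k ≥ 1 arrows and non-negative a and b; the name `up_arrow` is hypothetical. It uses the standard rules that one arrow is ordinary exponentiation, that $a \uparrow^k 0 = 1$, and that $a \uparrow^k b = a \uparrow^{k-1} (a \uparrow^k (b-1))$, so for n ≥ 3, `up_arrow(a, n - 2, b)` should agree with $H_n(a, b)$.

```python
def up_arrow(a: int, k: int, b: int) -> int:
    """Compute a ↑^k b (k arrows) for k >= 1 and non-negative a, b."""
    if k < 1:
        raise ValueError("this sketch only handles k >= 1 arrows")
    if k == 1:
        return a ** b                                  # one arrow: a ** b
    if b == 0:
        return 1                                       # a ↑^k 0 = 1
    # a ↑^k b = a ↑^(k-1) (a ↑^k (b - 1))
    return up_arrow(a, k - 1, up_arrow(a, k, b - 1))
```

For instance, `up_arrow(2, 2, 3)` gives $2 \uparrow\uparrow 3 = 16$, and `up_arrow(2, 3, 3)` gives $2 \uparrow\uparrow\uparrow 3 = 65536$.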

So what will be the next operation after exponentiation? We defined multiplication so that $H_2(a,3) = a \times 3 = a + a + a$, and defined exponentiation so that $H_3(a,3) = a^3 = a \cdot a \cdot a$, so it seems logical to define the next operation, tetration, so that $H_4(a,3) = a \uparrow\uparrow 3 = a^{a^{a}}$, with a tower of three a's. Analogously, the pentation of (a, 3) will be $H_5(a,3) = H_4\bigl(a, H_4(a,a)\bigr)$, that is, tetration(a, tetration(a, a)), with three a's in it.
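For concreteness, taking a = 2 (an illustrative choice) gives:

```latex
\begin{aligned}
H_4(2,3) &= 2 \uparrow\uparrow 3 = 2^{2^{2}} = 2^{4} = 16 \\
H_5(2,3) &= H_4\bigl(2, H_4(2,2)\bigr) = H_4(2,4) = 2^{2^{2^{2}}} = 2^{16} = 65536
\end{aligned}
```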

Knuth's notation could be extended to negative indices ≥ −2 in such a way as to agree with the entire hyperoperation sequence, except for the lag in the indexing:

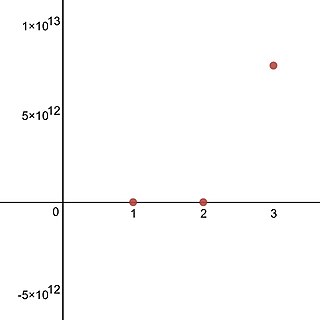
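Reading the correspondence $H_n(a,b) = a \uparrow^{n-2} b$ at n = 0, 1, 2, 3 shows what the extended notation stands for; the arrow counts −2, −1 and 0 are defined by this correspondence rather than by arrows in the usual sense:

```latex
\begin{aligned}
H_0(a,b) &= a \uparrow^{-2} b = b + 1 \\
H_1(a,b) &= a \uparrow^{-1} b = a + b \\
H_2(a,b) &= a \uparrow^{0} b = a \times b \\
H_3(a,b) &= a \uparrow^{1} b = a^{b}
\end{aligned}
```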

The hyperoperations can thus be seen as an answer to the question "what's next" in the sequence: successor, addition, multiplication, exponentiation, and so on. The identities

$$\begin{aligned} a + b &= 1 + \bigl(a + (b-1)\bigr) \\ a \times b &= a + \bigl(a \times (b-1)\bigr) \\ a^b &= a \times a^{b-1} \end{aligned}$$

illustrate the relationship between the basic arithmetic operations and allow the higher operations to be defined naturally as above. The parameters of the hyperoperation hierarchy are sometimes referred to by their analogous exponentiation terms: a is the base, b is the exponent (or hyperexponent), and n is the rank (or grade). Moreover, $H_n(a,b)$ is read as "the bth n-ation of a"; for example, $H_4(7,9)$ is read as "the 9th tetration of 7", and $H_{123}(456,789)$ is read as "the 789th 123-ation of 456".
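To see the recursive rule $H_n(a,b) = H_{n-1}\bigl(a, H_n(a, b-1)\bigr)$ at work, here is multiplication (n = 2) unrolled for b = 3, using $H_1(a,x) = a + x$ and $H_2(a,0) = 0$:

```latex
\begin{aligned}
H_2(a,3) &= H_1\bigl(a, H_2(a,2)\bigr) = a + H_2(a,2) \\
         &= a + \bigl(a + H_2(a,1)\bigr) \\
         &= a + \bigl(a + (a + H_2(a,0))\bigr) \\
         &= a + a + a + 0 = 3a
\end{aligned}
```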

In common terms, each hyperoperation is obtained by iterating the previous one, so the operations grow faster and faster as the rank increases. Successor, addition, multiplication and exponentiation are all hyperoperations: the successor operation (producing x + 1 from x) is the most primitive; addition a + b specifies the number of times, b, that 1 is to be added to a; multiplication a × b specifies how many copies of a, namely b, are to be added together; and exponentiation $a^b$ specifies how many copies of a, namely b, are to be multiplied together.
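The informal description above can be mirrored in code where each operation is a loop over the one below it. In this minimal Python sketch the function names are hypothetical and the implementation is deliberately naive: it is meant to show the iteration structure, not to be efficient.

```python
def successor(x: int) -> int:
    """The most primitive hyperoperation: x -> x + 1."""
    return x + 1


def add(a: int, b: int) -> int:
    """a + b as b applications of successor, starting from a."""
    result = a
    for _ in range(b):
        result = successor(result)
    return result


def multiply(a: int, b: int) -> int:
    """a * b as b copies of a added together, starting from 0."""
    result = 0
    for _ in range(b):
        result = add(result, a)       # add a to the running total
    return result


def power(a: int, b: int) -> int:
    """a ** b as b copies of a multiplied together, starting from 1."""
    result = 1
    for _ in range(b):
        result = multiply(result, a)  # multiply the running product by a
    return result
```

Even `power(2, 10)`, which returns 1024, takes roughly two thousand successor calls here, which hints at why the higher operations are never evaluated this way in practice.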

Definition, using iteration

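Define iteration of a function f of two variables as

$$f^{\circledast n}(a,b) = \begin{cases} f(a,b) & \text{if } n = 1 \\ f\bigl(a, f^{\circledast (n-1)}(a,b)\bigr) & \text{if } n > 1 \end{cases}$$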
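As a small illustration, assuming the convention $f^{\circledast 1} = f$ with each further iterate applying f once more to the second argument, three iterations unroll as follows; taking f to be addition, $f(a,b) = a + b$, shows iterated addition producing a multiple of a:

```latex
\begin{aligned}
f^{\circledast 3}(a,b) &= f\bigl(a, f^{\circledast 2}(a,b)\bigr) = f\bigl(a, f(a, f(a,b))\bigr) \\
\text{with } f(a,b) = a + b:\quad f^{\circledast 3}(a,b) &= a + \bigl(a + (a + b)\bigr) = 3a + b
\end{aligned}
```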

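The hyperoperation sequence can be defined in terms of iteration, as follows. For all integers $n, a, b \ge 0$, define

$$H_n(a,b) = \begin{cases} b + 1 & \text{if } n = 0 \\ a & \text{if } n = 1 \text{ and } b = 0 \\ 0 & \text{if } n = 2 \text{ and } b = 0 \\ 1 & \text{if } n \ge 3 \text{ and } b = 0 \\ H_{n-1}^{\circledast b}\bigl(a, H_n(a,0)\bigr) & \text{otherwise} \end{cases}$$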
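The iteration-based definition can also be sketched in Python. Here `iterate` and `hyper_iter` are hypothetical names: `iterate(f, n)` builds $f^{\circledast n}$ for n ≥ 1 by feeding each result back in as the second argument while the first stays fixed, and `hyper_iter` applies $H_n(a,b) = H_{n-1}^{\circledast b}\bigl(a, H_n(a,0)\bigr)$ for n ≥ 1 and b ≥ 1, with the usual base cases. Inputs are assumed to be small non-negative integers.

```python
from typing import Callable

BinaryOp = Callable[[int, int], int]


def iterate(f: BinaryOp, n: int) -> BinaryOp:
    """Return f^(⊛n): apply f n times (n >= 1), feeding each result
    back in as the second argument while the first stays fixed."""
    if n < 1:
        raise ValueError("iteration count must be at least 1")

    def g(a: int, b: int) -> int:
        for _ in range(n):
            b = f(a, b)
        return b

    return g


def hyper_iter(n: int, a: int, b: int) -> int:
    """H_n(a, b) for small non-negative integers, via iteration."""
    if min(n, a, b) < 0:
        raise ValueError("n, a and b must be non-negative")
    if n == 0:
        return b + 1                              # successor
    if b == 0:
        return a if n == 1 else (0 if n == 2 else 1)

    def previous(x: int, y: int) -> int:          # the operation one rank below
        return hyper_iter(n - 1, x, y)

    # H_n(a, b) = H_{n-1}^(⊛b)(a, H_n(a, 0))
    return iterate(previous, b)(a, hyper_iter(n, a, 0))
```

On small inputs this agrees with the directly recursive definition; for example, `hyper_iter(4, 3, 2)` returns $3^3 = 27$.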

As iteration is associative, the last line can be replaced by