Computer animation is the process used for digitally generating animations. The more general term computer-generated imagery (CGI) encompasses both static scenes and dynamic images, while computer animation only refers to moving images. Modern computer animation usually uses 3D computer graphics. The animation's target is sometimes the computer itself, while other times it is film.

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

Digital video is an electronic representation of moving visual images (video) in the form of encoded digital data. This is in contrast to analog video, which represents moving visual images in the form of analog signals. Digital video comprises a series of digital images displayed in rapid succession, usually at 24, 30, or 60 frames per second. Digital video has many advantages such as easy copying, multicasting, sharing and storage.

The Graphics Interchange Format is a bitmap image format that was developed by a team at the online services provider CompuServe led by American computer scientist Steve Wilhite and released on June 15, 1987.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical.

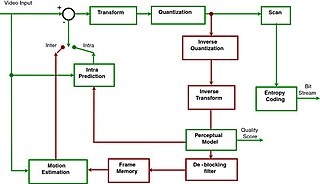

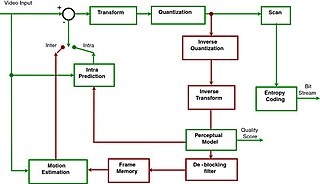

Motion compensation in computing is an algorithmic technique used to predict a frame in a video given the previous and/or future frames by accounting for motion of the camera and/or objects in the video. It is employed in the encoding of video data for video compression, for example in the generation of MPEG-2 files. Motion compensation describes a picture in terms of the transformation of a reference picture to the current picture. The reference picture may be previous in time or even from the future. When images can be accurately synthesized from previously transmitted/stored images, the compression efficiency can be improved.

Inbetweening, also known as tweening, is a process in animation that involves creating intermediate frames, called inbetweens, between two keyframes. The intended result is to create the illusion of movement by smoothly transitioning one image into another.

Motion JPEG is a video compression format in which each video frame or interlaced field of a digital video sequence is compressed separately as a JPEG image.

A compression artifact is a noticeable distortion of media caused by the application of lossy compression. Lossy data compression involves discarding some of the media's data so that it becomes small enough to be stored within the desired disk space or transmitted (streamed) within the available bandwidth. If the compressor cannot store enough data in the compressed version, the result is a loss of quality, or introduction of artifacts. The compression algorithm may not be intelligent enough to discriminate between distortions of little subjective importance and those objectionable to the user.

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 91% of video industry developers as of September 2019. It supports a maximum resolution of 8K UHD.

QuickDraw was the 2D graphics library and associated application programming interface (API) which is a core part of classic Mac OS. It was initially written by Bill Atkinson and Andy Hertzfeld. QuickDraw still existed as part of the libraries of macOS, but had been largely superseded by the more modern Quartz graphics system. In Mac OS X Tiger, QuickDraw has been officially deprecated. In Mac OS X Leopard applications using QuickDraw cannot make use of the added 64-bit support. In OS X Mountain Lion, QuickDraw header support was removed from the operating system. Applications using QuickDraw still ran under OS X Mountain Lion to macOS High Sierra; however, the current versions of Xcode and the macOS SDK do not contain the header files to compile such programmes.

ANIM is a file format, used to store digital movies and computer generated animations, and is a variation of the ILBM format, which is a subformat of Interchange File Format.

In the field of video compression a video frame is compressed using different algorithms with different advantages and disadvantages, centered mainly around amount of data compression. These different algorithms for video frames are called picture types or frame types. The three major picture types used in the different video algorithms are I, P and B. They are different in the following characteristics:

Animated Portable Network Graphics (APNG) is a file format which extends the Portable Network Graphics (PNG) specification to permit animated images that work similarly to animated GIF files, while supporting 24-bit images and 8-bit transparency not available for GIFs. It also retains backward compatibility with non-animated PNG files.

An inter frame is a frame in a video compression stream which is expressed in terms of one or more neighboring frames. The "inter" part of the term refers to the use of Inter frame prediction. This kind of prediction tries to take advantage from temporal redundancy between neighboring frames enabling higher compression rates.

H.262 or MPEG-2 Part 2 is a video coding format standardised and jointly maintained by ITU-T Study Group 16 Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG), and developed with the involvement of many companies. It is the second part of the ISO/IEC MPEG-2 standard. The ITU-T Recommendation H.262 and ISO/IEC 13818-2 documents are identical.

Flash Video is a container file format used to deliver digital video content over the Internet using Adobe Flash Player version 6 and newer. Flash Video content may also be embedded within SWF files. There are two different Flash Video file formats: FLV and F4V. The audio and video data within FLV files are encoded in the same way as SWF files. The F4V file format is based on the ISO base media file format, starting with Flash Player 9 update 3. Both formats are supported in Adobe Flash Player and developed by Adobe Systems. FLV was originally developed by Macromedia. In the early 2000s, Flash Video was the de facto standard for web-based streaming video. Users include Hulu, VEVO, Yahoo! Video, metacafe, Reuters.com, and many other news providers.

An image file format is a file format for a digital image. There are many formats that can be used, such as JPEG, PNG, and GIF. Most formats up until 2022 were for storing 2D images, not 3D ones. The data stored in an image file format may be compressed or uncompressed. If the data is compressed, it may be done so using lossy compression or lossless compression. For graphic design applications, vector formats are often used. Some image file formats support transparency.

Α video codec is software or a device that provides encoding and decoding for digital video, and which may or may not include the use of video compression and/or decompression. Most codecs are typically implementations of video coding formats.

ARINC 818: Avionics Digital Video Bus (ADVB) is a video interface and protocol standard developed for high bandwidth, low-latency, uncompressed digital video transmission in avionics systems. The standard, which was released in January 2007, has been advanced by ARINC and the aerospace community to meet the stringent needs of high performance digital video. The specification was updated and ARINC 818-2 was released in December 2013, adding a number of new features, including link rates up to 32X fibre channel rates, channel-bonding, switching, field sequential color, bi-directional control and data-only links.