Related Research Articles

In computing, a data warehouse, also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. Data warehouses are central repositories of integrated data from one or more disparate sources. They store current and historical data in one single place that are used for creating reports. This is beneficial for companies as it enables them to interrogate and draw insights from their data and make decisions.

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database.

Db2 is a family of data management products, including database servers, developed by IBM. It initially supported the relational model, but was extended to support object–relational features and non-relational structures like JSON and XML. The brand name was originally styled as DB2 until 2017, when it changed to its present form.

In computing, online analytical processing, or OLAP, is an approach to quickly answer multi-dimensional analytical (MDA) queries. OLAP is part of the broader category of business intelligence, which also encompasses relational databases, report writing and data mining. Typical applications of OLAP include business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas, with new applications emerging, such as agriculture.

In computing, extract, transform, load (ETL) is a three-phase process where data is extracted from an input source, transformed, and loaded into an output data container. The data can be collated from one or more sources and it can also be output to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules either as single jobs or aggregated into a batch of jobs.

Enterprise information integration (EII) is the ability to support a unified view of data and information for an entire organization. In a data virtualization application of EII, a process of information integration, using data abstraction to provide a unified interface for viewing all the data within an organization, and a single set of structures and naming conventions to represent this data; the goal of EII is to get a large set of heterogeneous data sources to appear to a user or system as a single, homogeneous data source.

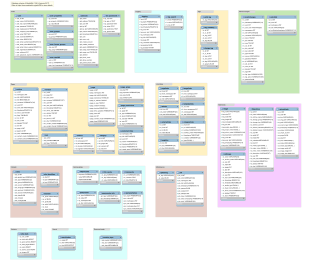

Database design is the organization of data according to a database model. The designer determines what data must be stored and how the data elements interrelate. With this information, they can begin to fit the data to the database model. A database management system manages the data accordingly.

A dimension is a structure that categorizes facts and measures in order to enable users to answer business questions. Commonly used dimensions are people, products, place and time.

In databases, change data capture (CDC) is a set of software design patterns used to determine and track the data that has changed so that action can be taken using the changed data. The result is a delta-driven dataset.

In computing, data transformation is the process of converting data from one format or structure into another format or structure. It is a fundamental aspect of most data integration and data management tasks such as data wrangling, data warehousing, data integration and application integration.

Data integration involves combining data residing in different sources and providing users with a unified view of them. This process becomes significant in a variety of situations, which include both commercial and scientific domains. Data integration appears with increasing frequency as the volume, complexity and the need to share existing data explodes. It has become the focus of extensive theoretical work, and numerous open problems remain unsolved. Data integration encourages collaboration between internal as well as external users. The data being integrated must be received from a heterogeneous database system and transformed to a single coherent data store that provides synchronous data across a network of files for clients. A common use of data integration is in data mining when analyzing and extracting information from existing databases that can be useful for Business information.

Bigtable is a fully managed wide-column and key-value NoSQL database service for large analytical and operational workloads as part of the Google Cloud portfolio.

An entity–attribute–value model (EAV) is a data model optimized for the space-efficient storage of sparse—or ad-hoc—property or data values, intended for situations where runtime usage patterns are arbitrary, subject to user variation, or otherwise unforeseeable using a fixed design. The use-case targets applications which offer a large or rich system of defined property types, which are in turn appropriate to a wide set of entities, but where typically only a small, specific selection of these are instantiated for a given entity. Therefore, this type of data model relates to the mathematical notion of a sparse matrix. EAV is also known as object–attribute–value model, vertical database model, and open schema.

Dimensional modeling (DM) is part of the Business Dimensional Lifecycle methodology developed by Ralph Kimball which includes a set of methods, techniques and concepts for use in data warehouse design. The approach focuses on identifying the key business processes within a business and modelling and implementing these first before adding additional business processes, as a bottom-up approach. An alternative approach from Inmon advocates a top down design of the model of all the enterprise data using tools such as entity-relationship modeling (ER).

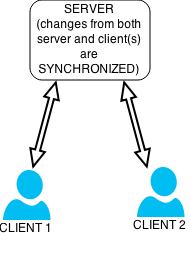

Data synchronization is the process of establishing consistency between source and target data stores, and the continuous harmonization of the data over time. It is fundamental to a wide variety of applications, including file synchronization and mobile device synchronization. Data synchronization can also be useful in encryption for synchronizing public key servers.

A database model is a type of data model that determines the logical structure of a database. It fundamentally determines in which manner data can be stored, organized and manipulated. The most popular example of a database model is the relational model, which uses a table-based format.

Datavault or data vault modeling is a database modeling method that is designed to provide long-term historical storage of data coming in from multiple operational systems. It is also a method of looking at historical data that deals with issues such as auditing, tracing of data, loading speed and resilience to change as well as emphasizing the need to trace where all the data in the database came from. This means that every row in a data vault must be accompanied by record source and load date attributes, enabling an auditor to trace values back to the source. The concept was published in 2000 by Dan Linstedt.

Innovative Routines International (IRI), Inc. is an American software company first known for bringing mainframe sort merge functionality into open systems. IRI was the first vendor to develop a commercial replacement for the Unix sort command, and combine data transformation and reporting in Unix batch processing environments. In 2007, IRI's coroutine sort ("CoSort") became the first product to collate and convert multi-gigabyte XML and LDIF files, join and lookup across multiple files, and apply role-based data privacy functions for fields within sensitive files.

Data virtualization is an approach to data management that allows an application to retrieve and manipulate data without requiring technical details about the data, such as how it is formatted at source, or where it is physically located, and can provide a single customer view of the overall data.

The following is provided as an overview of and topical guide to databases:

References

- ↑ Oracle 9i Data Warehousing Guide, Data Warehousing Concepts, Oracle Corp.

- ↑ "Persistent Staging". Data Warehouse Automation - Dimodelo Solutions. Retrieved 2023-04-22.

- 1 2 3 4 5 Data Warehousing Fundamentals: A Comprehensive Guide for IT Professionals, p. 137-138, Paulraj Ponniah, 2001.

- 1 2 3 BI Experts: Big Data and Your Data Warehouse's Data Staging Area, The Data Warehousing Institute, Phillip Russom, 2012.

- ↑ Is Data Staging Relational? Archived 2013-12-26 at the Wayback Machine , Ralph Kimball, 1998.

- ↑ Master Data Management in Practice: Achieving True Customer MDM, Dalton Cervo and Mark Allen, 2011.