In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data.

A discrete cosine transform (DCT) expresses a finite sequence of data points in terms of a sum of cosine functions oscillating at different frequencies. The DCT, first proposed by Nasir Ahmed in 1972, is a widely used transformation technique in signal processing and data compression. It is used in most digital media, including digital images, digital video, digital audio, digital television, digital radio, and speech coding. DCTs are also important to numerous other applications in science and engineering, such as digital signal processing, telecommunication devices, reducing network bandwidth usage, and spectral methods for the numerical solution of partial differential equations.

Pattern recognition is the task of assigning a class to an observation based on patterns extracted from data. While similar, pattern recognition (PR) is not to be confused with pattern machines (PM) which may possess (PR) capabilities but their primary function is to distinguish and create emergent pattern. PR has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power.

A compression artifact is a noticeable distortion of media caused by the application of lossy compression. Lossy data compression involves discarding some of the media's data so that it becomes small enough to be stored within the desired disk space or transmitted (streamed) within the available bandwidth. If the compressor cannot store enough data in the compressed version, the result is a loss of quality, or introduction of artifacts. The compression algorithm may not be intelligent enough to discriminate between distortions of little subjective importance and those objectionable to the user.

Rate–distortion theory is a major branch of information theory which provides the theoretical foundations for lossy data compression; it addresses the problem of determining the minimal number of bits per symbol, as measured by the rate R, that should be communicated over a channel, so that the source can be approximately reconstructed at the receiver without exceeding an expected distortion D.

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms.

In time series analysis, dynamic time warping (DTW) is an algorithm for measuring similarity between two temporal sequences, which may vary in speed. For instance, similarities in walking could be detected using DTW, even if one person was walking faster than the other, or if there were accelerations and decelerations during the course of an observation. DTW has been applied to temporal sequences of video, audio, and graphics data — indeed, any data that can be turned into a one-dimensional sequence can be analyzed with DTW. A well-known application has been automatic speech recognition, to cope with different speaking speeds. Other applications include speaker recognition and online signature recognition. It can also be used in partial shape matching applications.

Cluster analysis or clustering is the task of grouping a set of objects in such a way that objects in the same group are more similar to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning.

Cinepak is a lossy video codec developed by Peter Barrett at SuperMac Technologies, and released in 1991 with the Video Spigot, and then in 1992 as part of Apple Computer's QuickTime video suite. One of the first video compression tools to achieve full motion video on CD-ROM, it was designed to encode 320×240 resolution video at 1× CD-ROM transfer rates. The original name of this codec was Compact Video, which is why its FourCC identifier is CVID. The codec was ported to Microsoft Windows in 1993. It was also used on fourth- and fifth-generation game consoles, such as the Atari Jaguar CD, Sega CD, Sega Saturn, and 3DO. libavcodec includes a Cinepak decoder and an encoder, both licensed under the terms of the LGPL.

Code-excited linear prediction (CELP) is a linear predictive speech coding algorithm originally proposed by Manfred R. Schroeder and Bishnu S. Atal in 1985. At the time, it provided significantly better quality than existing low bit-rate algorithms, such as residual-excited linear prediction (RELP) and linear predictive coding (LPC) vocoders. Along with its variants, such as algebraic CELP, relaxed CELP, low-delay CELP and vector sum excited linear prediction, it is currently the most widely used speech coding algorithm. It is also used in MPEG-4 Audio speech coding. CELP is commonly used as a generic term for a class of algorithms and not for a particular codec.

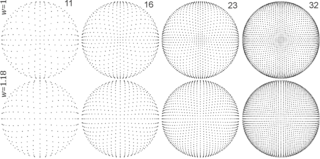

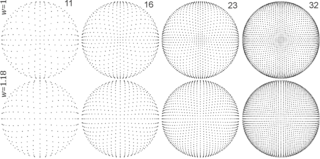

The Linde–Buzo–Gray algorithm is an iterative vector quantization algorithm to improve a small set of vectors (codebook) to represent a larger set of vectors, such that it will be locally optimal. It combines Lloyd's Algorithm with a splitting technique in which larger codebooks are built from smaller codebooks by splitting each code vector in two. The core idea of the algorithm is that by splitting the codebook such that all code vectors from the previous codebook are present, the new codebook must be as good as the previous one or better.

k-means clustering is a method of vector quantization, originally from signal processing, that aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean, serving as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells. k-means clustering minimizes within-cluster variances, but not regular Euclidean distances, which would be the more difficult Weber problem: the mean optimizes squared errors, whereas only the geometric median minimizes Euclidean distances. For instance, better Euclidean solutions can be found using k-medians and k-medoids.

Fuzzy clustering is a form of clustering in which each data point can belong to more than one cluster.

In electrical engineering and computer science, Lloyd's algorithm, also known as Voronoi iteration or relaxation, is an algorithm named after Stuart P. Lloyd for finding evenly spaced sets of points in subsets of Euclidean spaces and partitions of these subsets into well-shaped and uniformly sized convex cells. Like the closely related k-means clustering algorithm, it repeatedly finds the centroid of each set in the partition and then re-partitions the input according to which of these centroids is closest. In this setting, the mean operation is an integral over a region of space, and the nearest centroid operation results in Voronoi diagrams.

Microsoft Video 1 or MS-CRAM is an early lossy video compression and decompression algorithm (codec) that was released with version 1.0 of Microsoft's Video for Windows in November 1992. It is based on MotiVE, a vector quantization codec which Microsoft licensed from Media Vision. In 1993, Media Vision marketed the Pro Movie Spectrum, an ISA board that captured video in both raw and MSV1 formats.

Yasuo Matsuyama is a Japanese researcher in machine learning and human-aware information processing.

Apple Video is a lossy video compression and decompression algorithm (codec) developed by Apple Inc. and first released as part of QuickTime 1.0 in 1991. The codec is also known as QuickTime Video, by its FourCC RPZA and the name Road Pizza. When used in the AVI container, the FourCC AZPR is also used.

Pyramid vector quantization (PVQ) is a method used in audio and video codecs to quantize and transmit unit vectors, i.e. vectors whose magnitudes are known to the decoder but whose directions are unknown. PVQ may also be used as part of a gain/shape quantization scheme, whereby the magnitude and direction of a vector are quantized separately from each other. PVQ was initially described in 1986 in the paper "A Pyramid Vector Quantizer" by Thomas R. Fischer.