Related Research Articles

Multivariate statistics is a subdivision of statistics encompassing the simultaneous observation and analysis of more than one outcome variable, i.e., multivariate random variables. Multivariate statistics concerns understanding the different aims and background of each of the different forms of multivariate analysis, and how they relate to each other. The practical application of multivariate statistics to a particular problem may involve several types of univariate and multivariate analyses in order to understand the relationships between variables and their relevance to the problem being studied.

In statistics, exploratory data analysis (EDA) is an approach to analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods. A statistical model may or may not be used, but EDA is primarily for seeing what the data can tell us beyond formal modeling, and it thereby contrasts with traditional hypothesis testing. Exploratory data analysis has been promoted by John Tukey since 1970 to encourage statisticians to explore the data, and possibly formulate hypotheses that could lead to new data collection and experiments. EDA is different from initial data analysis (IDA), which focuses more narrowly on checking the assumptions required for model fitting and hypothesis testing, handling missing values, and transforming variables as needed. EDA encompasses IDA.

In the statistical analysis of time series, autoregressive–moving-average (ARMA) models provide a parsimonious description of a (weakly) stationary stochastic process in terms of two polynomials, one for the autoregression (AR) and the second for the moving average (MA). The general ARMA model was described in the 1951 thesis of Peter Whittle, Hypothesis testing in time series analysis, and it was popularized in the 1970 book by George E. P. Box and Gwilym Jenkins.

A tolerance interval (TI) is a statistical interval within which, with some confidence level, a specified sampled proportion of a population falls. "More specifically, a 100×p%/100×(1−α) tolerance interval provides limits within which at least a certain proportion (p) of the population falls with a given level of confidence (1−α)." "A (p, 1−α) tolerance interval (TI) based on a sample is constructed so that it would include at least a proportion p of the sampled population with confidence 1−α; such a TI is usually referred to as p-content − (1−α) coverage TI." "A (p, 1−α) upper tolerance limit (TL) is simply a 1−α upper confidence limit for the 100 p percentile of the population."

SAS is a statistical software suite developed by SAS Institute for data management, advanced analytics, multivariate analysis, business intelligence, criminal investigation, and predictive analytics.

JMP is a suite of computer programs for statistical analysis developed by JMP, a subsidiary of SAS Institute. It was launched in 1989 to take advantage of the graphical user interface introduced by the Macintosh operating systems. It has since been significantly rewritten and made available for the Windows operating system as well. JMP is used in applications such as Six Sigma, quality control, engineering, and design of experiments, as well as for research in science, engineering, and the social sciences.

Spatial analysis is any of the formal techniques which study entities using their topological, geometric, or geographic properties. Spatial analysis includes a variety of techniques using different analytic approaches, especially spatial statistics. It may be applied in fields as diverse as astronomy, with its studies of the placement of galaxies in the cosmos, or chip fabrication engineering, with its use of "place and route" algorithms to build complex wiring structures. In a more restricted sense, spatial analysis is geospatial analysis, the technique applied to structures at the human scale, most notably in the analysis of geographic data. It may also be applied to genomics, as in transcriptomics data.

In statistics, the Durbin–Watson statistic is a test statistic used to detect the presence of autocorrelation at lag 1 in the residuals from a regression analysis. It is named after James Durbin and Geoffrey Watson. The small sample distribution of this ratio was derived by John von Neumann. Durbin and Watson applied this statistic to the residuals from least squares regressions, and developed bounds tests for the null hypothesis that the errors are serially uncorrelated against the alternative that they follow a first order autoregressive process. Note that the distribution of this test statistic does not depend on the estimated regression coefficients and the variance of the errors.
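As a minimal sketch of the statistic described above, assuming the regression residuals are already available as a plain sequence, the ratio of the sum of squared successive differences to the sum of squared residuals can be computed as follows. The function name `durbin_watson` is illustrative and is not taken from any particular library.

```python
def durbin_watson(residuals):
    """Return the Durbin-Watson ratio for a sequence of regression residuals.

    Illustrative helper (hypothetical name): the numerator sums squared
    differences between consecutive residuals, the denominator sums the
    squared residuals themselves.
    """
    e = list(residuals)
    if len(e) < 2:
        raise ValueError("at least two residuals are required")
    numerator = sum((e[t] - e[t - 1]) ** 2 for t in range(1, len(e)))
    denominator = sum(x * x for x in e)
    if denominator == 0:
        raise ValueError("residuals must not all be zero")
    return numerator / denominator


# Residuals that flip sign at every step push the ratio towards 4,
# while residuals that never change give 0.
alternating = durbin_watson([1.0, -1.0, 1.0, -1.0])
constant = durbin_watson([1.0, 1.0, 1.0, 1.0])
```

The ratio lies between 0 and 4. Values near 2 are consistent with no lag-1 autocorrelation, values towards 0 suggest positive autocorrelation, and values towards 4 suggest negative autocorrelation. The sketch computes only the ratio and does not carry out the bounds test.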

The Unistat computer program is a statistical data analysis tool featuring two modes of operation: The stand-alone user interface is a complete workbench for data input, analysis and visualization while the Microsoft Excel add-in mode extends the features of the mainstream spreadsheet application with powerful analytical capabilities.

Anscombe's quartet comprises four data sets that have nearly identical simple descriptive statistics, yet have very different distributions and appear very different when graphed. Each dataset consists of eleven (x,y) points. They were constructed in 1973 by the statistician Francis Anscombe to demonstrate both the importance of graphing data when analyzing it, and the effect of outliers and other influential observations on statistical properties. He described the article as being intended to counter the impression among statisticians that "numerical calculations are exact, but graphs are rough."

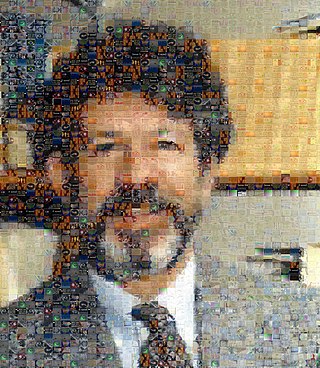

Michael Louis Friendly is an American-Canadian psychologist, Professor of Psychology at York University in Ontario, Canada, and director of its Statistical Consulting Service, especially known for his contributions to graphical methods for categorical and multivariate data, and on the history of data and information visualisation.

Peter J. Rousseeuw is a statistician known for his work on robust statistics and cluster analysis. He obtained his PhD in 1981 at the Vrije Universiteit Brussel, following research carried out at the ETH in Zurich, which led to a book on influence functions. Later he was professor at the Delft University of Technology, The Netherlands, at the University of Fribourg, Switzerland, and at the University of Antwerp, Belgium. Next he was a senior researcher at Renaissance Technologies. He then returned to Belgium as professor at KU Leuven, until becoming emeritus in 2022. His former PhD students include Annick Leroy, Hendrik Lopuhaä, Geert Molenberghs, Christophe Croux, Mia Hubert, Stefan Van Aelst, Tim Verdonck and Jakob Raymaekers.

In non-parametric statistics, the Theil–Sen estimator is a method for robustly fitting a line to sample points in the plane by choosing the median of the slopes of all lines through pairs of points. It has also been called Sen's slope estimator, slope selection, the single median method, the Kendall robust line-fit method, and the Kendall–Theil robust line. It is named after Henri Theil and Pranab K. Sen, who published papers on this method in 1950 and 1968 respectively, and after Maurice Kendall because of its relation to the Kendall tau rank correlation coefficient.
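The median-of-slopes idea described above can be sketched in a few lines, assuming the sample is given as a list of (x, y) pairs. The function name `theil_sen` is illustrative. Taking the intercept as the median of y − slope·x is one common convention and is an assumption here, not something stated in the text above.

```python
from itertools import combinations
from statistics import median


def theil_sen(points):
    """Fit a line by the median of pairwise slopes (illustrative sketch).

    points: iterable of (x, y) pairs.
    Returns (slope, intercept). The intercept rule used here, the median
    of y - slope * x, is an assumed convention.
    """
    pts = list(points)
    # Slopes of all lines through pairs of points with distinct x values.
    slopes = [
        (y2 - y1) / (x2 - x1)
        for (x1, y1), (x2, y2) in combinations(pts, 2)
        if x2 != x1
    ]
    if not slopes:
        raise ValueError("need at least two points with distinct x values")
    slope = median(slopes)
    intercept = median(y - slope * x for x, y in pts)
    return slope, intercept


# Four points on y = 2x + 1 plus one gross outlier at x = 4.
slope, intercept = theil_sen([(0, 1), (1, 3), (2, 5), (3, 7), (4, 100)])
```

In this example six of the ten pairwise slopes equal 2, so the median slope is 2 and the intercept is 1 despite the outlier. This direct version examines every pair of points and is meant for small samples only.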

ggplot2 is an open-source data visualization package for the statistical programming language R. Created by Hadley Wickham in 2005, ggplot2 is an implementation of Leland Wilkinson's Grammar of Graphics—a general scheme for data visualization which breaks up graphs into semantic components such as scales and layers. ggplot2 can serve as a replacement for the base graphics in R and contains a number of defaults for web and print display of common scales. Since 2005, ggplot2 has grown in use to become one of the most popular R packages.

Optimization Toolbox is an optimization software package developed by MathWorks. It is an add-on product to MATLAB, and provides a library of solvers that can be used from the MATLAB environment. The toolbox was first released for MATLAB in 1990.

Hadley Alexander Wickham is a New Zealand statistician known for his work on open-source software for the R statistical programming environment. He is the chief scientist at Posit, PBC and an adjunct professor of statistics at the University of Auckland, Stanford University, and Rice University. His work includes the data visualisation system ggplot2 and the tidyverse, a collection of R packages for data science based on the concept of tidy data.

William Swain Cleveland II is an American computer scientist and Professor of Statistics and Professor of Computer Science at Purdue University, known for his work on data visualization, particularly on nonparametric regression and local regression.

Luke Tierney is an American statistician and computer scientist. A fellow of the Institute of Mathematical Statistics since 1988 and of the American Statistical Association since 1991, Tierney is currently a professor of statistics at the University of Iowa. Following his work on programming languages such as R and Lisp, Tierney holds a position on the development team known as R Core.

References

- "XLS is a preliminary minimal version of XLISP-STAT for MSDOS". Retrieved 2023-09-07.

- "Index of /~luke/XLS/Xlispstat/Old/Amiga".

- Badsberg, J. H. (1992). "Model Search in Contingency Tables by CoCo". Computational Statistics: Volume 1: Proceedings of the 10th Symposium on Computational Statistics. pp. 251–256. doi:10.1007/978-3-662-26811-7_33.

- R. Dennis Cook; Sanford Weisberg (25 September 2009) [1994]. An Introduction to Regression Graphics. John Wiley & Sons. ISBN 978-0-470-31770-9.

- G. Jogesh Babu; Eric D. Feigelson (6 December 2012). Statistical Challenges in Modern Astronomy II. Springer Science & Business Media. p. 193. ISBN 978-1-4612-1968-2.

- Michael Worboys (21 April 1994). Innovations In GIS. CRC Press. p. 186. ISBN 978-0-7484-0141-3.

- J. Harrington; S. Cassidy (6 December 2012). Techniques in Speech Acoustics. Springer Science & Business Media. p. x. ISBN 978-94-011-4657-9.

- John E. Floyd (4 December 2009). Interest Rates, Exchange Rates and World Monetary Policy. Springer Science & Business Media. p. 5. ISBN 978-3-642-10280-6.

- Mitchell H. Gail (2 November 2000). Encyclopedia of Epidemiologic Methods. John Wiley & Sons. p. 855. ISBN 978-0-471-86641-1.

- Forrest W. Young; Pedro M. Valero-Mora; Michael Friendly (15 September 2011). Visual Statistics: Seeing Data with Dynamic Interactive Graphics. John Wiley & Sons. p. 25. ISBN 978-1-118-16541-6. "XLisp-Stat... has had considerable impact on the development of statistical visualization systems."

- Luke Tierney (25 September 2009) [1990]. LISP-STAT: An Object-Oriented Environment for Statistical Computing and Dynamic Graphics. John Wiley & Sons. ISBN 978-0-470-31756-3.

- de Leeuw, Jan (February 2005). "On Abandoning XLISP-STAT" (PDF). Journal of Statistical Software. 13 (7). ISSN 1548-7660. Retrieved 2017-05-30.

- File "COPYING" in archive at ftp://ftp.stat.umn.edu/pub/xlispstat/current/xlispstat-3-52-20.tar.gz