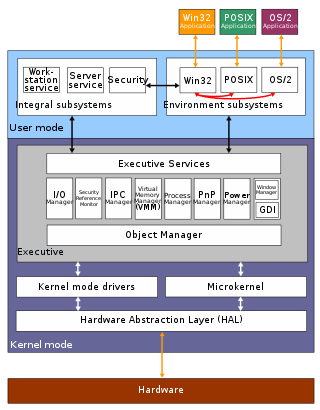

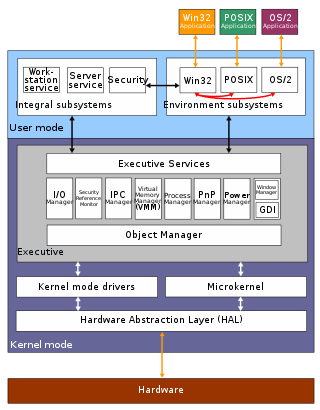

The Graphics Device Interface (GDI) is a legacy component of Microsoft Windows responsible for representing graphical objects and transmitting them to output devices such as monitors and printers. It was superseded by DirectDraw API and later Direct2D API. Windows apps use Windows API to interact with GDI, for such tasks as drawing lines and curves, rendering fonts, and handling palettes. The Windows USER subsystem uses GDI to render such UI elements as window frames and menus. Other systems have components that are similar to GDI; for example: Mac OS has QuickDraw, and Linux and Unix have X Window System core protocol.

In computing, a windowing system is a software suite that manages separately different parts of display screens. It is a type of graphical user interface (GUI) which implements the WIMP paradigm for a user interface.

A graphics processing unit (GPU) is a specialized electronic circuit initially designed to accelerate computer graphics and image processing. After their initial design, GPUs were found to be useful for non-graphic calculations involving embarrassingly parallel problems due to their parallel structure. Other non-graphical uses include the training of neural networks and cryptocurrency mining.

DirectShow, codename Quartz, is a multimedia framework and API produced by Microsoft for software developers to perform various operations with media files or streams. It is the replacement for Microsoft's earlier Video for Windows technology. Based on the Microsoft Windows Component Object Model (COM) framework, DirectShow provides a common interface for media across various programming languages, and is an extensible, filter-based framework that can render or record media files on demand at the request of the user or developer. The DirectShow development tools and documentation were originally distributed as part of the DirectX SDK. Currently, they are distributed as part of the Windows SDK.

The S3 ViRGE (Video and Rendering Graphics Engine) graphics chipset was one of the first 2D/3D accelerators designed for the mass market.

The Direct Rendering Manager (DRM) is a subsystem of the Linux kernel responsible for interfacing with GPUs of modern video cards. DRM exposes an API that user-space programs can use to send commands and data to the GPU and perform operations such as configuring the mode setting of the display. DRM was first developed as the kernel-space component of the X Server Direct Rendering Infrastructure, but since then it has been used by other graphic stack alternatives such as Wayland and standalone applications and libraries such as SDL2 and Kodi.

Xgl is an obsolete display server implementation supporting the X Window System protocol designed to take advantage of modern graphics cards via their OpenGL drivers, layered on top of OpenGL. It supports hardware acceleration of all X, OpenGL and XVideo applications and graphical effects by a compositing window manager such as Compiz or Beryl. The project was started by David Reveman of Novell and first released on January 2, 2006. It was removed from the X.org server in favor of AIGLX on June 12, 2008.

Clipping, in the context of computer graphics, is a method to selectively enable or disable rendering operations within a defined region of interest. Mathematically, clipping can be described using the terminology of constructive geometry. A rendering algorithm only draws pixels in the intersection between the clip region and the scene model. Lines and surfaces outside the view volume are removed.

In computing, hardware overlay, a type of video overlay, provides a method of rendering an image to a display screen with a dedicated memory buffer inside computer video hardware. The technique aims to improve the display of a fast-moving video image — such as a computer game, a DVD, or the signal from a TV card. Most video cards manufactured since about 1998 and most media players support hardware overlay.

DirectX Video Acceleration (DXVA) is a Microsoft API specification for the Microsoft Windows and Xbox 360 platforms that allows video decoding to be hardware-accelerated. The pipeline allows certain CPU-intensive operations such as iDCT, motion compensation and deinterlacing to be offloaded to the GPU. DXVA 2.0 allows more operations, including video capturing and processing operations, to be hardware-accelerated as well.

Desktop Window Manager is the compositing window manager in Microsoft Windows since Windows Vista that enables the use of hardware acceleration to render the graphical user interface of Windows.

X-Video Motion Compensation (XvMC), is an extension of the X video extension (Xv) for the X Window System. The XvMC API allows video programs to offload portions of the video decoding process to the GPU video-hardware. In theory this process should also reduce bus bandwidth requirements. Currently, the supported portions to be offloaded by XvMC onto the GPU are motion compensation and inverse discrete cosine transform (iDCT) for MPEG-2 video. XvMC also supports offloading decoding of mo comp, iDCT, and VLD for not only MPEG-2 but also MPEG-4 ASP video on VIA Unichrome hardware.

A compositing manager, or compositor, is software that provides applications with an off-screen buffer for each window. The compositing manager composites the window buffers into an image representing the screen and writes the result into the display memory. A compositing window manager is a window manager that is also a compositing manager.

Accelerated Indirect GLX ("AIGLX") is an open source project founded by Red Hat and the Fedora community, led by Kristian Høgsberg, to allow accelerated indirect GLX rendering capabilities to the X.Org Server and DRI drivers. This allows remote X clients to get fully hardware accelerated rendering over the GLX protocol; coincidentally, this development was required for OpenGL compositing window managers to function with hardware acceleration.

ATI Avivo is a set of hardware and low level software features present on the ATI Radeon R520 family of GPUs and all later ATI Radeon products. ATI Avivo was designed to offload video decoding, encoding, and post-processing from a computer's CPU to a compatible GPU. ATI Avivo compatible GPUs have lower CPU usage when a player and decoder software that support ATI Avivo is used. ATI Avivo has been long superseded by Unified Video Decoder (UVD) and Video Coding Engine (VCE).

VirtualGL is an open-source software package that redirects the 3D rendering commands from Unix and Linux OpenGL applications to 3D accelerator hardware in a dedicated server and sends the rendered output to a (thin) client located elsewhere on the network. On the server side, VirtualGL consists of a library that handles the redirection and a wrapper program that instructs applications to use this library. Clients can connect to the server either using a remote X11 connection or using an X11 proxy such as a VNC server. In case of an X11 connection some client-side VirtualGL software is also needed to receive the rendered graphics output separately from the X11 stream. In case of a VNC connection no specific client-side software is needed other than the VNC client itself.

Windows Display Driver Model (WDDM) is the graphic driver architecture for video card drivers running Microsoft Windows versions beginning with Windows Vista.

A video wall is a special multi-monitor setup that consists of multiple computer monitors, video projectors, or television sets tiled together contiguously or overlapped in order to form one large screen. Typical display technologies include LCD panels, Direct View LED arrays, blended projection screens, Laser Phosphor Displays, and rear projection cubes. Jumbotron technology was also previously used. Diamond Vision was historically similar to Jumbotron in that they both used cathode-ray tube (CRT) technology, but with slight differences between the two. Early Diamond vision displays used separate flood gun CRTs, one per subpixel. Later Diamond vision displays and all Jumbotrons used field-replaceable modules containing several flood gun CRTs each, one per subpixel, that had common connections shared across all CRTs in a module; the module was connected through a single weather-sealed connector.

Unified Video Decoder is the name given to AMD's dedicated video decoding ASIC. There are multiple versions implementing a multitude of video codecs, such as H.264 and VC-1.

The Evergreen series is a family of GPUs developed by Advanced Micro Devices for its Radeon line under the ATI brand name. It was employed in Radeon HD 5000 graphics card series and competed directly with Nvidia's GeForce 400 series.