In philosophy, systems theory, science, and art, emergence occurs when an entity is observed to have properties its parts do not have on their own, properties or behaviors which emerge only when the parts interact in a wider whole.

In computer science and operations research, a genetic algorithm (GA) is a metaheuristic inspired by the process of natural selection that belongs to the larger class of evolutionary algorithms (EA). Genetic algorithms are commonly used to generate high-quality solutions to optimization and search problems by relying on biologically inspired operators such as mutation, crossover and selection.
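
The three operators named above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the OneMax objective (count the 1-bits), tournament selection, one-point crossover, and all parameter values are assumptions chosen for brevity.

```python
import random

def one_max(bits):
    """Fitness: number of 1-bits (a toy objective assumed for illustration)."""
    return sum(bits)

def tournament(pop, fitness, rng, k=3):
    """Selection: return the fittest of k randomly drawn individuals."""
    return max(rng.sample(pop, k), key=fitness)

def crossover(a, b, rng):
    """One-point crossover: a prefix of a joined to the matching suffix of b."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(bits, rng, rate):
    """Mutation: flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in bits]

def run_ga(n_bits=20, pop_size=30, generations=40, rate=0.02, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=one_max)
    for _ in range(generations):
        # Build the next generation: select two parents, recombine, mutate.
        pop = [
            mutate(
                crossover(tournament(pop, one_max, rng),
                          tournament(pop, one_max, rng), rng),
                rng, rate)
            for _ in range(pop_size)
        ]
        # Remember the best individual seen in any generation.
        best = max(pop + [best], key=one_max)
    return best
```

Calling `run_ga()` returns the best bit string found; real applications would replace `one_max` with a problem-specific fitness function and tune the rates.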

In computational intelligence (CI), an evolutionary algorithm (EA) is a subset of evolutionary computation, a generic population-based metaheuristic optimization algorithm. An EA uses mechanisms inspired by biological evolution, such as reproduction, mutation, recombination, and selection. Candidate solutions to the optimization problem play the role of individuals in a population, and the fitness function determines the quality of the solutions. Evolution of the population then takes place after the repeated application of the above operators.
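
The generic loop described above (reproduction, recombination, mutation, selection, repeated over generations) might look like the following sketch. It assumes real-valued individuals, a toy sphere objective to minimise, averaging recombination, Gaussian mutation, and "plus" survivor selection; none of these choices comes from the text.

```python
import random

def sphere(x):
    """Toy objective to minimise (assumed); lower values mean fitter individuals."""
    return sum(v * v for v in x)

def evolve(mu=5, lam=20, dims=3, sigma=0.3, generations=50, seed=1):
    rng = random.Random(seed)
    parents = [[rng.uniform(-5, 5) for _ in range(dims)] for _ in range(mu)]
    history = [min(sphere(p) for p in parents)]
    for _ in range(generations):
        offspring = []
        for _ in range(lam):
            a, b = rng.sample(parents, 2)                     # reproduction
            child = [(u + v) / 2 for u, v in zip(a, b)]       # recombination
            child = [g + rng.gauss(0, sigma) for g in child]  # mutation
            offspring.append(child)
        # Selection: keep the mu best of parents and offspring combined.
        parents = sorted(parents + offspring, key=sphere)[:mu]
        history.append(sphere(parents[0]))
    return parents[0], history
```

Because parents compete with their offspring for survival, the best objective value recorded in `history` never gets worse from one generation to the next.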

In computer science, evolutionary computation is a family of algorithms for global optimization inspired by biological evolution, and the subfield of artificial intelligence and soft computing studying these algorithms. In technical terms, they are a family of population-based trial and error problem solvers with a metaheuristic or stochastic optimization character.

In evolutionary biology, fitness landscapes or adaptive landscapes are used to visualize the relationship between genotypes and reproductive success. It is assumed that every genotype has a well-defined replication rate, often referred to as its fitness. This fitness is the "height" of the landscape. Genotypes which are similar are said to be "close" to each other, while those that are very different are "far" from each other. The set of all possible genotypes, their degree of similarity, and their related fitness values is then called a fitness landscape. The idea of a fitness landscape is a metaphor to help explain flawed forms in evolution by natural selection, including exploits and glitches in animals like their reactions to supernormal stimuli.
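
A small sketch can make the metaphor concrete. It assumes genotypes are 3-bit strings, "closeness" is Hamming distance, and the replication rates in `LANDSCAPE` are invented for illustration. A population that only ever accepts single-mutation improvements can get stuck on a local peak, which is one way the landscape picture accounts for flawed forms.

```python
def hamming(a, b):
    """Distance between genotypes: number of positions that differ."""
    return sum(x != y for x, y in zip(a, b))

def neighbours(g):
    """Genotypes exactly one mutation (bit flip) away from g."""
    flip = {"0": "1", "1": "0"}
    return [g[:i] + flip[g[i]] + g[i + 1:] for i in range(len(g))]

def hill_climb(g, fitness):
    """Move to the fittest neighbour until no neighbour is strictly fitter."""
    while True:
        best = max(neighbours(g), key=fitness.get)
        if fitness[best] <= fitness[g]:
            return g
        g = best

# Hypothetical replication rates: "000" is a local peak, "111" the global one.
LANDSCAPE = {
    "000": 1.0, "001": 0.5, "010": 0.6, "100": 0.7,
    "011": 1.2, "101": 1.3, "110": 1.4, "111": 2.0,
}
```

Here `hill_climb("000", LANDSCAPE)` stays at `"000"` because every one-step neighbour is less fit, while `hill_climb("001", LANDSCAPE)` climbs through `"101"` to the global peak `"111"`.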

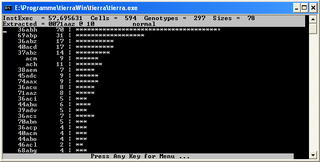

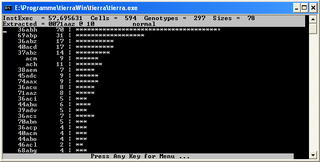

Tierra is a computer simulation developed by ecologist Thomas S. Ray in the early 1990s in which computer programs compete for time (central processing unit time) and space (access to main memory). The programs in Tierra are considered to be evolvable and can mutate, self-replicate and recombine. Tierra's virtual machine is written in C. It operates on a custom instruction set designed to facilitate code changes and reordering, including features such as jump to template.
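
Jump to template replaces numeric addresses with pattern matching: a jump is followed by a short run of no-op instructions, and control transfers to the nearest place where the complementary pattern occurs. The sketch below is a simplified illustration of that idea, not Tierra's actual implementation; the mnemonics `jmp`, `nop0`, `nop1`, `inc`, `dec`, and `ret`, and the helper names, are assumptions.

```python
NOPS = ("nop0", "nop1")

def read_template(code, pos):
    """Collect the run of nop0/nop1 instructions starting at pos."""
    template = []
    while pos < len(code) and code[pos] in NOPS:
        template.append(code[pos])
        pos += 1
    return template

def complement(template):
    """Swap nop0 and nop1 to obtain the pattern a jump searches for."""
    return ["nop1" if op == "nop0" else "nop0" for op in template]

def jump_target(code, jmp_pos):
    """Index just past the nearest complementary template, or None if absent."""
    template = read_template(code, jmp_pos + 1)
    if not template:
        return None
    target = complement(template)
    n = len(target)
    matches = [i for i in range(len(code) - n + 1) if code[i:i + n] == target]
    if not matches:
        return None
    nearest = min(matches, key=lambda i: abs(i - jmp_pos))
    return nearest + n

program = ["nop1", "nop1", "inc", "jmp", "nop0", "nop0",
           "dec", "nop1", "nop1", "ret"]
```

In `program`, the `jmp` at index 3 carries the template `nop0 nop0`, so `jump_target(program, 3)` looks for `nop1 nop1` and lands on the `inc` at index 2. Because targets are found by pattern rather than by absolute address, inserting or deleting instructions elsewhere does not necessarily break the jump, which is what makes such code tolerant of mutation.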

Avida is an artificial life software platform to study the evolutionary biology of self-replicating and evolving computer programs. Avida is under active development by Charles Ofria's Digital Evolution Lab at Michigan State University; the first version of Avida was designed in 1993 by Ofria, Chris Adami and C. Titus Brown at Caltech, and has been fully reengineered by Ofria on multiple occasions since then. The software was originally inspired by the Tierra system.

In philosophy, emergentism is the belief in emergence, particularly as it involves consciousness and the philosophy of mind, and as it contrasts (or not) with reductionism. A property of a system is said to be emergent if it is a new outcome of some other properties of the system and their interaction, while it is itself different from them. Emergent properties are not identical with, reducible to, or deducible from the other properties. The different ways in which this independence requirement can be satisfied lead to variant types of emergence.

In natural evolution and artificial evolution, the fitness of a schema is rescaled to give its effective fitness, which takes into account crossover and mutation.
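
One common way to write this rescaling, given here as a hedged sketch rather than a definitive statement, defines the effective fitness implicitly through the change in a schema's frequency. The symbols are assumptions introduced for illustration: $P(\xi, t)$ is the proportion of the population matching schema $\xi$ at generation $t$, and $\bar{f}(t)$ is the mean population fitness.

$$
P(\xi, t+1) = \frac{f_{\mathrm{eff}}(\xi, t)}{\bar{f}(t)} \, P(\xi, t)
$$

Under selection alone, $f_{\mathrm{eff}}$ would coincide with the ordinary schema fitness. With crossover and mutation acting as well, it absorbs their net effect: a schema that is frequently disrupted has an effective fitness below its raw fitness, while one that is frequently reconstructed from other strings can have an effective fitness above it.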

The learnable evolution model (LEM) is a non-Darwinian methodology for evolutionary computation that employs machine learning to guide the generation of new individuals. Unlike standard, Darwinian-type evolutionary computation methods that use random or semi-random operators for generating new individuals, LEM employs hypothesis generation and instantiation operators.
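
The contrast with random variation operators can be illustrated with a deliberately crude sketch. The "hypothesis" here is just a per-gene bounding box around the high-fitness group, standing in for the machine-learned descriptions LEM would use. Unlike a real learner, it does not contrast the high group against a low-fitness group. The objective, group fraction, and function names are all assumptions.

```python
import random

def fitness(x):
    """Toy objective (assumed): higher is better, with its peak at (1, -2)."""
    return -((x[0] - 1) ** 2 + (x[1] + 2) ** 2)

def learn_hypothesis(high_group):
    """Hypothesis generation: a per-gene (min, max) box covering the high group."""
    return [(min(col), max(col)) for col in zip(*high_group)]

def instantiate(hypothesis, n, rng):
    """Hypothesis instantiation: sample n new individuals inside the box."""
    return [[rng.uniform(lo, hi) for lo, hi in hypothesis] for _ in range(n)]

def lem_step(pop, rng, frac=0.3):
    """One generation: keep the high group, refill from the learned hypothesis."""
    ranked = sorted(pop, key=fitness, reverse=True)
    k = max(2, int(len(pop) * frac))
    high = ranked[:k]
    hypothesis = learn_hypothesis(high)
    return high + instantiate(hypothesis, len(pop) - k, rng)
```

New individuals are generated by satisfying a learned description of what good solutions look like, rather than by mutating or recombining existing ones. Repeating `lem_step` shrinks the box around promising regions of the search space.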

The idea of human artifacts being given life has fascinated humankind for as long as people have been recording their myths and stories. Whether Pygmalion or Frankenstein, humanity has been fascinated with the idea of artificial life.

Biological organization is the hierarchy of complex biological structures and systems that define life using a reductionistic approach. The traditional hierarchy, as detailed below, extends from atoms to biospheres. The higher levels of this scheme are often referred to as an ecological organization concept, or as the field, hierarchical ecology.

John von Neumann's universal constructor is a self-replicating machine in a cellular automaton (CA) environment. It was designed in the 1940s, without the use of a computer. The fundamental details of the machine were published in von Neumann's book Theory of Self-Reproducing Automata, completed in 1966 by Arthur W. Burks after von Neumann's death. While typically not as well known as von Neumann's other work, it is regarded as foundational for automata theory, complex systems, and artificial life. Indeed, Nobel Laureate Sydney Brenner considered von Neumann's work on self-reproducing automata central to biological theory as well, allowing us to "discipline our thoughts about machines, both natural and artificial."

Chance and Necessity: Essay on the Natural Philosophy of Modern Biology is a 1970 book by Nobel Prize winner Jacques Monod, interpreting the processes of evolution to show that life is only the result of natural processes by "pure chance". The basic tenet of this book is that systems in nature with molecular biology, such as enzymatic biofeedback loops, can be explained without having to invoke final causality.

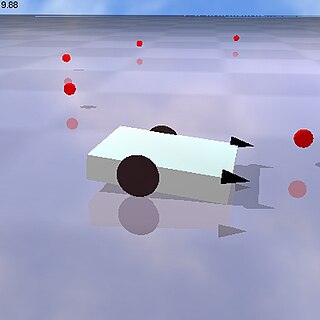

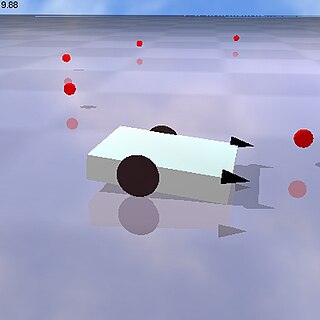

Framsticks is a 3D freeware artificial life simulator. Organisms consisting of physical structures ("bodies") and control structures ("brains") evolve over time against a user's predefined fitness landscape, or spontaneously coevolve in a complex environment. Evolution of organisms occurs primarily through artificial selection, where an intelligent selector chooses the selection parameters and mutation rates. The organisms' rate of crossing-over can also be chosen, reflecting the sharing of genes by mating in nature. The simulated organisms have genetic scripts inspired by the DNA of living organisms. A user can isolate a particular organism in the gene pool and edit its genotype. Framsticks allows users to design organisms or manually edit the living genetic code of an organism. Users can seed the environment with energy orbs that the organisms convert to energy and material. How an organism performs over its lifespan determines the future of the virtual gene pool. Gene pools can be exported and shared.

Universal Darwinism refers to a variety of approaches that extend the theory of Darwinism beyond its original domain of biological evolution on Earth. Universal Darwinism aims to formulate a generalized version of the mechanisms of variation, selection and heredity proposed by Charles Darwin, so that they can apply to explain evolution in a wide variety of other domains, including psychology, economics, culture, medicine, computer science and physics.

Natural computing, also called natural computation, is a terminology introduced to encompass three classes of methods: 1) those that take inspiration from nature for the development of novel problem-solving techniques; 2) those that are based on the use of computers to synthesize natural phenomena; and 3) those that employ natural materials to compute. The main fields of research that compose these three branches are artificial neural networks, evolutionary algorithms, swarm intelligence, artificial immune systems, fractal geometry, artificial life, DNA computing, and quantum computing, among others.

Artificial life is a field of study wherein researchers examine systems related to natural life, its processes, and its evolution, through the use of simulations with computer models, robotics, and biochemistry. The discipline was named by Christopher Langton, an American theoretical biologist, in 1986. In 1987 Langton organized the first conference on the field, in Los Alamos, New Mexico. There are three main kinds of alife, named for their approaches: soft, from software; hard, from hardware; and wet, from biochemistry. Artificial life researchers study traditional biology by trying to recreate aspects of biological phenomena.

Evolving digital ecological networks are webs of interacting, self-replicating, and evolving computer programs that experience the same major ecological interactions as biological organisms. Despite being computational, these programs evolve quickly in an open-ended way, and starting from only one or two ancestral organisms, the formation of ecological networks can be observed in real-time by tracking interactions between the constantly evolving organism phenotypes. These phenotypes may be defined by combinations of logical computations that digital organisms perform and by expressed behaviors that have evolved. The types and outcomes of interactions between phenotypes are determined by task overlap for logic-defined phenotypes and by responses to encounters in the case of behavioral phenotypes. Biologists use these evolving networks to study active and fundamental topics within evolutionary ecology.

The following outline is provided as an overview of and topical guide to evolution: