An aviation accident is defined by the Convention on International Civil Aviation Annex 13 as an occurrence associated with the operation of an aircraft, which takes place from the time any person boards the aircraft with the intention of flight until all such persons have disembarked, and in which (a) a person is fatally or seriously injured, (b) the aircraft sustains significant damage or structural failure, or (c) the aircraft goes missing or becomes completely inaccessible. Annex 13 defines an aviation incident as an occurrence, other than an accident, associated with the operation of an aircraft that affects or could affect the safety of operation.
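The two Annex 13 definitions above amount to a simple decision rule, sketched below. The `Occurrence` fields and the `classify` function are hypothetical names chosen for illustration, not an official schema. The sketch also assumes the occurrence already falls within the boarding-to-disembarkation window.

```python
from dataclasses import dataclass


@dataclass
class Occurrence:
    # Hypothetical fields for illustration only; not an ICAO data format.
    fatal_or_serious_injury: bool = False
    significant_damage_or_structural_failure: bool = False
    missing_or_inaccessible: bool = False
    affects_or_could_affect_safety: bool = False


def classify(occurrence: Occurrence) -> str:
    """Classify an occurrence using the three accident criteria quoted above.

    Assumes the occurrence took place between boarding and disembarkation.
    """
    is_accident = (
        occurrence.fatal_or_serious_injury
        or occurrence.significant_damage_or_structural_failure
        or occurrence.missing_or_inaccessible
    )
    if is_accident:
        return "accident"
    # An incident is any other occurrence that affects or could affect safety.
    if occurrence.affects_or_could_affect_safety:
        return "incident"
    return "neither"
```

For example, `classify(Occurrence(affects_or_could_affect_safety=True))` yields `"incident"`, while setting any of the three accident criteria yields `"accident"` regardless of the last field.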

In aviation, a controlled flight into terrain is an accident in which an airworthy aircraft, fully under pilot control, is unintentionally flown into the ground, a mountain, a body of water or an obstacle. In a typical CFIT scenario, the crew is unaware of the impending disaster until it is too late. The term was coined by engineers at Boeing in the late 1970s.

Aviation safety is the study and practice of managing risks in aviation. This includes preventing aviation accidents and incidents through research, educating air travel personnel, passengers and the general public, as well as the design of aircraft and aviation infrastructure. The aviation industry is subject to significant regulation and oversight.

Crew resource management or cockpit resource management (CRM) is a set of training procedures for use in environments where human error can have devastating effects. CRM is primarily used for improving aviation safety and focuses on interpersonal communication, leadership, and decision making in aircraft cockpits. Its founder is David Beaty, a former Royal Air Force and BOAC pilot who wrote "The Human Factor in Aircraft Accidents" (1969). Despite the considerable development of electronic aids since then, many of the principles he developed continue to prove effective.

Human reliability is related to the field of human factors and ergonomics, and refers to the reliability of humans in fields including manufacturing, medicine and nuclear power. Human performance can be affected by many factors, such as age, state of mind, physical health, attitude, emotions, cognitive biases, and a propensity for certain common mistakes and errors.

Pilot error generally refers to an accident in which an action or decision made by the pilot was the cause or a contributing factor that led to the accident, but also includes the pilot's failure to make a correct decision or take proper action. Errors are intentional actions that fail to achieve their intended outcomes. The Chicago Convention defines the term "accident" as "an occurrence associated with the operation of an aircraft [...] in which [...] a person is fatally or seriously injured [...] except when the injuries are [...] inflicted by other persons." Hence the definition of "pilot error" does not include deliberate crashing.

Gulf Air Flight 072 (GF072/GFA072) was a scheduled international passenger flight from Cairo International Airport in Egypt to Bahrain International Airport in Bahrain, operated by Gulf Air. On 23 August 2000 at 19:30 Arabia Standard Time (UTC+3), the Airbus A320 crashed minutes after executing a go-around following a failed attempt to land on Runway 12. The flight crew suffered from spatial disorientation during the go-around, and the aircraft crashed into the shallow waters of the Persian Gulf 2 km (1 nmi) from the airport. All 143 people on board the aircraft were killed.

A near miss, near death, near hit or close call is an unplanned event that has the potential to cause, but does not actually result in, human injury, environmental or equipment damage, or an interruption to normal operation.

A system accident is an "unanticipated interaction of multiple failures" in a complex system. This complexity can either be of technology or of human organizations and is frequently both. A system accident can be easy to see in hindsight, but extremely difficult to foresee because there are simply too many action pathways to consider all of them seriously. Charles Perrow first developed these ideas in the mid-1980s. Safety systems themselves are sometimes the added complexity that leads to this type of accident.

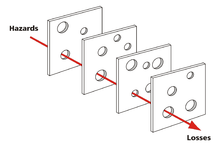

The Swiss cheese model of accident causation is a model used in risk analysis and risk management, including aviation safety, engineering, healthcare, and emergency service organizations, and is the principle behind layered security, as used in computer security and defense in depth. It likens human systems to multiple slices of Swiss cheese stacked side by side, each with randomly placed and sized holes; the risk of a threat becoming a reality is mitigated by the differing layers and types of defenses that are "layered" behind each other. Therefore, in theory, lapses and weaknesses in one defense do not allow a risk to materialize, since other defenses also exist to prevent a single point of failure. The model was originally formally propounded by James T. Reason of the University of Manchester, and has since gained widespread acceptance. It is sometimes called the "cumulative act effect".
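The layered-defense idea can be illustrated numerically. The following is a minimal sketch, not part of Reason's formulation: it assumes each layer fails independently with a known probability, and the function name `breach_probability` and the example probabilities are hypothetical.

```python
import math


def breach_probability(layer_failure_probs):
    """Probability that a hazard passes through every defensive layer.

    Assumes the layers fail independently, so the "holes" line up with
    probability equal to the product of the per-layer failure probabilities.
    """
    for p in layer_failure_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    return math.prod(layer_failure_probs)


# Hypothetical example: three layers, each failing 10% of the time.
single_layer = breach_probability([0.1])
three_layers = breach_probability([0.1, 0.1, 0.1])
```

Under these assumptions, three layers that each fail one time in ten let a hazard through roughly one time in a thousand. Independence is a strong simplification: a shared underlying weakness can cause several layers to fail together, which makes the real risk higher than the product suggests.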

In accident analysis, a chain of events consists of the contributing factors leading to an undesired outcome.

The Human Factors Analysis and Classification System (HFACS) identifies the human causes of an accident and offers tools for analysis as a way to plan preventive training. It was developed by Dr Scott Shappell of the Civil Aviation Medical Institute and Dr Doug Wiegmann of the University of Illinois at Urbana-Champaign in response to a trend that showed some form of human error was a primary causal factor in 80% of all flight accidents in the Navy and Marine Corps.

Latent human error is a term used in safety work and accident prevention, especially in aviation, to describe human errors that are likely to be made because systems or routines are designed in a way that predisposes people to make them. Latent human errors are frequently components in the causes of accidents. The error is latent and may not materialize immediately; it therefore does not cause immediate or obvious damage. Discovering latent errors is consequently difficult and requires a systematic approach. Latent human error is often discussed in aviation incident investigation, and contributes to over 70% of accidents.

The healthcare error proliferation model is an adaptation of James Reason’s Swiss Cheese Model designed to illustrate the complexity inherent in the contemporary healthcare delivery system and the attribution of human error within these systems. The healthcare error proliferation model explains the etiology of error and the sequence of events typically leading to adverse outcomes. This model emphasizes the role that organizational and external cultures play in error identification, prevention, mitigation, and defense construction.

In aeronautics, loss of control (LOC) is the unintended departure of an aircraft from controlled flight, and is a significant factor in several aviation accidents worldwide. In 2015 it was the leading cause of general aviation accidents. Loss of control may be the result of mechanical failure, external disturbances, aircraft upset conditions, or inappropriate crew actions or responses.

Human factors are the physical or cognitive properties of individuals, or social behavior which is specific to humans, and influence functioning of technological systems as well as human-environment equilibria. The safety of underwater diving operations can be improved by reducing the frequency of human error and the consequences when it does occur. Human error can be defined as an individual's deviation from acceptable or desirable practice which culminates in undesirable or unexpected results.

Dive safety is primarily a function of four factors: the environment, equipment, individual diver performance and dive team performance. The water is a harsh and alien environment which can impose severe physical and psychological stress on a diver. The remaining factors must be controlled and coordinated so the diver can overcome the stresses imposed by the underwater environment and work safely. Diving equipment is crucial because it provides life support to the diver, but the majority of dive accidents are caused by individual diver panic and an associated degradation of the individual diver's performance. - M.A. Blumenberg, 1996

Maritime resource management (MRM) or bridge resource management (BRM) is a set of human factors and soft skills training aimed at the maritime industry. The MRM training programme was launched in 1993 – at that time under the name bridge resource management – and aims at preventing accidents at sea caused by human error.

On 23 August 2013, a Eurocopter AS332 Super Puma helicopter belonging to CHC Helicopters crashed into the sea 2 nautical miles from Sumburgh in the Shetland Islands, Scotland, while en route from the Borgsten Dolphin drilling rig. The accident killed four passengers; twelve other passengers and two crew were rescued with injuries. A further passenger killed himself in 2017 as a result of PTSD caused by the crash. An investigation by the UK's Air Accidents Investigation Branch concluded in 2016 that the accident was primarily caused by pilot error in failing to monitor instruments during approach. The public inquiry concluded in October 2020 that the crash was primarily caused by pilot error.

The International Civil Aviation Organization (ICAO) defines fatigue as "A physiological state of reduced mental or physical performance capability resulting from sleep loss or extended wakefulness, circadian phase, or workload." The phenomenon poses a great risk to the crew and passengers of an airplane because it significantly increases the chance of pilot error. Fatigue is particularly prevalent among pilots because of "unpredictable work hours, long duty periods, circadian disruption, and insufficient sleep". These factors can occur together to produce a combination of sleep deprivation, circadian rhythm effects, and 'time-on-task' fatigue. Regulators attempt to mitigate fatigue by limiting the number of hours pilots are allowed to fly over varying periods of time.