Related Research Articles

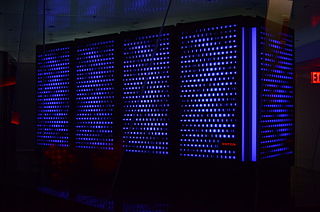

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed which can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's 500 fastest supercomputers have run Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
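The powers of ten above are easy to misread, so here is a minimal Python sketch of the unit arithmetic. The helper name `format_flops` and its prefix table are illustrative assumptions, not part of any benchmark tool; it simply picks the largest SI prefix that keeps the value at or above 1.

```python
# SI prefixes for FLOPS figures, largest first.
PREFIXES = [
    (1e18, "E"),  # exa
    (1e15, "P"),  # peta
    (1e12, "T"),  # tera
    (1e9, "G"),   # giga
    (1e6, "M"),   # mega
    (1e3, "k"),   # kilo
]


def format_flops(flops: float) -> str:
    """Express a raw FLOPS figure using the largest prefix that keeps the value >= 1."""
    for scale, prefix in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {prefix}FLOPS"
    return f"{flops:g} FLOPS"


if __name__ == "__main__":
    print(format_flops(1e17))  # 100 PFLOPS: the 2017 milestone
    print(format_flops(1e11))  # 100 GFLOPS: low end of the desktop range
    print(format_flops(1e13))  # 10 TFLOPS: high end of the desktop range
    print(1e17 / 1e13)         # 10000.0: peak-rate ratio of the two
```

The last line shows that a 100-PFLOPS machine has roughly ten thousand times the peak rate of a desktop at the top of the quoted range, although peak FLOPS alone says little about sustained performance on real workloads.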

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.
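As a minimal sketch of data parallelism, the Python below splits one large problem (a sum of squares) into independent chunks, solves them concurrently, and combines the partial results. The function names are hypothetical. It uses `ThreadPoolExecutor` from the standard library to keep the example self-contained; in CPython, threads do not speed up pure-Python arithmetic because of the global interpreter lock, so `ProcessPoolExecutor` would be the usual swap for real CPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(chunk: list[int]) -> int:
    """One independent subproblem: no chunk depends on any other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: list[int], workers: int = 4) -> int:
    # Decompose: split the input into roughly equal contiguous chunks.
    size = max(1, -(-len(data) // workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    # Solve the subproblems concurrently, then combine the partial results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, chunks))


if __name__ == "__main__":
    data = list(range(1_000))
    # The parallel result must match the sequential one.
    assert parallel_sum_of_squares(data) == sum(x * x for x in data)
    print(parallel_sum_of_squares(data))
```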

Scalability is the property of a system to handle a growing amount of work. One definition for software systems specifies that this may be done by adding resources to the system.
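One standard way to quantify how far adding processors can help, not named in the text above, is Amdahl's law: if a fraction p of a program's work can be parallelized, the speedup on n processors is at most 1 / ((1 - p) + p / n). A minimal Python sketch, with the 95% parallel fraction chosen purely as an assumed example:

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    p = 0.95  # assumed: 95% of the work parallelizes perfectly
    for n in (1, 8, 64, 1024):
        print(f"{n:5d} processors -> speedup {amdahl_speedup(p, n):.2f}")
    # The bound as n grows without limit is 1 / (1 - p), i.e. 20 for p = 0.95.
```

The speedup approaches 1 / (1 - p) however many processors are added, which is why the serial fraction of a workload, rather than the amount of hardware, often limits how well it scales.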

The Message Passing Interface (MPI) is a portable message-passing standard designed to function on parallel computing architectures. The MPI standard defines the syntax and semantics of library routines that are useful to a wide range of users writing portable message-passing programs in C, C++, and Fortran. There are several open-source MPI implementations, which fostered the development of a parallel software industry and encouraged the development of portable and scalable large-scale parallel applications.
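To illustrate the message-passing model without requiring an MPI installation, the sketch below uses Python threads with one mailbox per rank. The `Comm` class and its `send`/`recv` methods are hypothetical stand-ins loosely modelled on point-to-point sends and receives; they are not the MPI API or mpi4py. Each rank computes a partial sum over its own slice of data and sends it to rank 0, which combines the results (in real MPI, a collective such as `MPI_Reduce` performs this step).

```python
import queue
import threading


class Comm:
    """Hypothetical stand-in for a communicator: one FIFO mailbox per rank.

    This is NOT the MPI API; it only mimics the pattern of sending a
    message to a numbered rank and receiving it there.
    """

    def __init__(self, size: int) -> None:
        self.size = size
        self._mailboxes = [queue.Queue() for _ in range(size)]

    def send(self, obj, dest: int) -> None:
        self._mailboxes[dest].put(obj)

    def recv(self, rank: int):
        return self._mailboxes[rank].get()  # blocks until a message arrives


def worker(comm: Comm, rank: int, results: dict) -> None:
    # Each rank owns its own slice of the data: no shared arrays.
    local = sum(range(rank * 100, (rank + 1) * 100))
    if rank == 0:
        total = local
        for _ in range(comm.size - 1):  # root collects one message per other rank
            total += comm.recv(0)
        results["total"] = total
    else:
        comm.send(local, dest=0)


def run(size: int = 4) -> int:
    comm = Comm(size)
    results: dict = {}
    threads = [threading.Thread(target=worker, args=(comm, r, results)) for r in range(size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results["total"]


if __name__ == "__main__":
    print(run(4))
```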

Checkpointing is a technique that provides fault tolerance for computing systems. It consists of saving a snapshot of the application's state so that the application can restart from that point in case of failure. This is particularly important for long-running applications executed on failure-prone computing systems.
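A minimal sketch of application-level checkpointing in Python, assuming the application state fits in a small JSON-serializable dictionary (real HPC checkpoints are typically far larger and written to parallel file systems). The job saves a snapshot every `every` steps; after a simulated failure, rerunning it resumes from the last snapshot instead of from step 0. All names here are illustrative.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write the snapshot to a temporary file, then rename it over the old one."""
    directory = os.path.dirname(path) or "."
    fd, tmp = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)  # atomic rename on POSIX file systems


def load_checkpoint(path: str) -> dict:
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "total": 0}  # no snapshot yet: start from scratch


def long_running_job(path: str, steps: int, every: int = 10,
                     fail_at: Optional[int] = None) -> int:
    state = load_checkpoint(path)  # resume from the last snapshot, if any
    for step in range(state["step"], steps):
        if step == fail_at:
            raise RuntimeError(f"simulated failure at step {step}")
        state["total"] += step
        state["step"] = step + 1
        if state["step"] % every == 0:
            save_checkpoint(path, state)
    return state["total"]


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "job.json")
    try:
        long_running_job(ckpt, steps=100, fail_at=57)
    except RuntimeError as err:
        print(err)
    print(load_checkpoint(ckpt))               # snapshot taken after step 50
    print(long_running_job(ckpt, steps=100))   # resumes at step 50, not 0
```

Writing to a temporary file and then calling `os.replace` means a crash during a save leaves the previous checkpoint intact. The checkpoint interval trades overhead against lost work: here, the failure at step 57 costs the seven steps computed after the step-50 snapshot.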

The fat tree network is a universal network for provably efficient communication. It was invented by Charles E. Leiserson of the Massachusetts Institute of Technology in 1985. k-ary n-trees, the type of fat tree used in most high-performance networks, were first formalized in 1997.
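Using the counts from the standard definition of a k-ary n-tree (k^n processing nodes and n·k^(n-1) switches, arranged in n levels), a short Python sketch shows how quickly the network grows with switch arity k. The function name is illustrative.

```python
def kary_ntree_size(k: int, n: int) -> tuple[int, int]:
    """Return (processing_nodes, switches) for a k-ary n-tree."""
    if k < 2 or n < 1:
        raise ValueError("need k >= 2 and n >= 1")
    processing_nodes = k ** n      # leaves of the tree
    switches = n * k ** (n - 1)    # n switch levels of k**(n-1) switches each
    return processing_nodes, switches


if __name__ == "__main__":
    for k in (2, 4, 16):
        nodes, switches = kary_ntree_size(k, n=3)
        print(f"{k}-ary 3-tree: {nodes} nodes, {switches} switches")
```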

ASCI Red was the first computer built under the Accelerated Strategic Computing Initiative (ASCI), the supercomputing initiative of the United States government created to help maintain the United States nuclear arsenal after the 1992 moratorium on nuclear testing.

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel, distributed algorithm on a cluster.
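The classic illustration of the model is counting words. The single-process Python sketch below mirrors the three stages (map, shuffle or group by key, reduce) with hypothetical function names; a real MapReduce implementation would run the map and reduce tasks in parallel on different cluster nodes and move intermediate data over the network during the shuffle.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document: str) -> list[tuple[str, int]]:
    # Emit an intermediate (key, value) pair for every word.
    return [(word, 1) for word in document.lower().split()]


def shuffle(pairs) -> dict[str, list[int]]:
    # Group intermediate values by key, as the framework does between phases.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Combine all values that share a key.
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(d) for d in documents)  # one map task per document
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    print(word_count(["the cat sat", "the cat ran"]))
```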

TeraGrid was an e-Science grid computing infrastructure combining resources at eleven partner sites. The project started in 2001 and operated from 2004 through 2011.

GPFS is a high-performance clustered file system developed by IBM. It can be deployed in shared-disk or shared-nothing distributed parallel modes, or a combination of these. It is used by many of the world's largest commercial companies, as well as some of the supercomputers on the TOP500 list. For example, it is the file system of Summit at Oak Ridge National Laboratory, which was the fastest supercomputer in the world in the November 2019 TOP500 list. Summit is a 200-petaFLOPS system composed of more than 9,000 POWER9 processors and 27,000 NVIDIA Volta GPUs. Its storage file system is called Alpine.

Edge computing is a distributed computing model that brings computation and data storage closer to the sources of data. More broadly, it refers to any design that pushes computation physically closer to a user, so as to reduce the latency compared to when an application runs on a centralized data centre.
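Part of the latency gain is simple physics. A minimal Python sketch of the propagation-delay lower bound, assuming signals in optical fiber travel at roughly 2 × 10⁸ m/s (about two-thirds of the speed of light) and ignoring queuing, processing, and routing delays; the distances are hypothetical.

```python
# Assumed signal speed in optical fiber: roughly two-thirds of the speed of light.
FIBER_SPEED_M_PER_S = 2.0e8


def round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores queuing, processing, serialization, and indirect routing.
    """
    one_way_s = distance_km * 1_000 / FIBER_SPEED_M_PER_S
    return 2 * one_way_s * 1_000


if __name__ == "__main__":
    for label, km in (("centralized data centre", 1_500), ("nearby edge site", 15)):
        print(f"{label:>24}: at least {round_trip_ms(km):.2f} ms round trip")
```

Under these assumptions, a data centre 1,500 km away cannot answer in less than about 15 ms, while an edge site 15 km away has a floor of about 0.15 ms.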

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.

Many-task computing (MTC) in computational science is an approach to parallel computing that aims to bridge the gap between two computing paradigms: high-throughput computing (HTC) and high-performance computing (HPC).

Anton is a massively parallel supercomputer designed and built by D. E. Shaw Research in New York, first running in 2008. It is a special-purpose system for molecular dynamics (MD) simulations of proteins and other biological macromolecules. An Anton machine consists of a substantial number of application-specific integrated circuits (ASICs), interconnected by a specialized high-speed, three-dimensional torus network.
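In a three-dimensional torus, each node links to its two neighbours along each axis, and the links wrap around at the edges. The Python sketch below computes a node's six neighbours and the minimum hop count between two nodes; the 8 × 8 × 8 dimensions are an assumed example, not Anton's actual configuration.

```python
Coord = tuple[int, int, int]


def torus_neighbors(coord: Coord, dims: Coord) -> list[Coord]:
    """Six nearest neighbours of a node in a 3D torus (distinct when every dim >= 3)."""
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            c = list(coord)
            c[axis] = (c[axis] + step) % dims[axis]  # wraparound link at the edges
            neighbors.append((c[0], c[1], c[2]))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum hops between two nodes: on each axis, go the short way around the ring."""
    total = 0
    for axis in range(3):
        d = abs(a[axis] - b[axis])
        total += min(d, dims[axis] - d)
    return total


if __name__ == "__main__":
    dims = (8, 8, 8)  # assumed torus size for illustration
    print(torus_neighbors((0, 0, 0), dims))
    print(torus_hops((0, 0, 0), (7, 7, 7), dims))  # wraparound makes opposite corners close
```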

Data-intensive computing is a class of parallel computing applications that use a data-parallel approach to process large volumes of data, typically terabytes or petabytes in size and commonly referred to as big data. Applications that devote most of their execution time to computation are deemed compute-intensive, whereas applications that require large volumes of data and devote most of their processing time to I/O and data manipulation are deemed data-intensive.

Tachyon is a parallel ray tracing library for distributed-memory parallel computers, shared-memory computers, and clusters of workstations. Tachyon implements rendering features such as ambient occlusion lighting, depth-of-field focal blur, shadows, reflections, and others. It was originally developed for the Intel iPSC/860 by John Stone for his M.S. thesis at the University of Missouri-Rolla. Tachyon subsequently became a more functional and complete ray tracing engine, and it is now incorporated into a number of other open-source software packages, such as VMD and SageMath. Tachyon is released under a permissive license.
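The core operation of any ray tracer is intersecting rays with scene geometry. As a generic illustration (not Tachyon's code or API), the Python sketch below solves the ray-sphere intersection quadratic and renders a tiny ASCII silhouette. Every pixel's ray is traced independently of the others, which is why ray tracing divides so naturally among the processors of the parallel machines described above. The scene and function names are hypothetical.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance t to the nearest hit in front of the origin, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2, a quadratic in t.
    """
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    dx, dy, dz = direction
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-9:  # ignore hits behind the ray origin
            return t
    return None


def render(width: int = 24, height: int = 12) -> list[str]:
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Pinhole camera at the origin looking down +z; each pixel's ray is independent.
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            hit = ray_sphere_hit((0.0, 0.0, 0.0), (x, y, 1.0), (0.0, 0.0, 3.0), 1.0)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```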

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems.

A distributed file system for cloud is a file system that allows many clients to access data and supports operations on that data. Each data file may be partitioned into several parts called chunks. Each chunk may be stored on a different remote machine, facilitating the parallel execution of applications. Typically, data is stored in files in a hierarchical tree, where the nodes represent directories. There are several ways to share files in a distributed architecture, and each solution must suit a certain type of application, depending on how complex the application is. The security of the system must also be ensured: confidentiality, availability, and integrity are the main requirements of a secure system.
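A minimal Python sketch of the chunking idea: a file is split into fixed-size chunks, and each chunk is assigned to several servers by hashing the file name and chunk index, so that losing one server does not make the data unavailable. The tiny chunk size, the hash-based placement rule, the replica count, and the server names are all illustrative assumptions; real systems choose placement in many different ways.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; deliberately tiny for illustration (real systems use megabytes)


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def place_chunks(filename: str, num_chunks: int, servers: list[str],
                 replicas: int = 2) -> dict[int, list[str]]:
    """Assign each chunk index to `replicas` distinct servers chosen by hashing."""
    if not 1 <= replicas <= len(servers):
        raise ValueError("replicas must be between 1 and the number of servers")
    placement = {}
    for index in range(num_chunks):
        digest = hashlib.sha256(f"{filename}:{index}".encode()).digest()
        start = int.from_bytes(digest[:8], "big") % len(servers)
        # Consecutive servers after the hashed start hold the replicas.
        placement[index] = [servers[(start + r) % len(servers)] for r in range(replicas)]
    return placement


def reassemble(chunks_by_index: dict[int, bytes]) -> bytes:
    # Chunks may arrive from different machines in any order; restore file order.
    return b"".join(chunks_by_index[i] for i in sorted(chunks_by_index))


if __name__ == "__main__":
    data = b"hello distributed world"
    chunks = split_into_chunks(data)
    print(place_chunks("example.txt", len(chunks), ["server-a", "server-b", "server-c"]))
    print(reassemble(dict(enumerate(chunks))))
```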

An AI accelerator, deep learning processor or neural processing unit (NPU) is a class of specialized hardware accelerator or computer system designed to accelerate artificial intelligence and machine learning applications, including artificial neural networks and computer vision. Typical applications include algorithms for robotics, Internet of Things, and other data-intensive or sensor-driven tasks. They are often manycore designs and generally focus on low-precision arithmetic, novel dataflow architectures or in-memory computing capability. As of 2024, a typical AI integrated circuit chip contains tens of billions of MOSFETs.
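As an illustration of low-precision arithmetic, the Python sketch below applies symmetric 8-bit linear quantization (one common scheme among several) to two vectors, computes their dot product with integer multiply-accumulates, and rescales once at the end. The function names are hypothetical, and real accelerators differ in number formats (for example int8, FP16, or bfloat16) and rounding details.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric linear quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a: list[float], b: list[float]) -> float:
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # Integer multiply-accumulate, as low-precision hardware units do, then one rescale.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * sa * sb


if __name__ == "__main__":
    a = [0.5, -1.2, 3.3, 0.7]
    b = [1.0, 0.25, -0.8, 2.0]
    exact = sum(x * y for x, y in zip(a, b))
    print(f"float: {exact:.4f}  int8: {int8_dot(a, b):.4f}")
```

The quantized result differs slightly from the full-precision dot product; accepting that small error in exchange for cheaper integer arithmetic is the trade-off such hardware makes.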

ACM SIGHPC is the Association for Computing Machinery's Special Interest Group on High Performance Computing, an international community of students, faculty, researchers, and practitioners working on research and in professional practice related to supercomputing, high-end computers, and cluster computing. The organization co-sponsors international conferences related to high performance and scientific computing, including: SC, the International Conference for High Performance Computing, Networking, Storage and Analysis; the Platform for Advanced Scientific Computing (PASC) Conference; Practice and Experience in Advanced Research Computing (PEARC); and PPoPP, the Symposium on Principles and Practice of Parallel Programming.
