In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices that are traceless, Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries.

In linear algebra, an orthogonal matrix, or orthonormal matrix, is a real square matrix whose columns and rows are orthonormal vectors.

In linear algebra, a symmetric matrix is a square matrix that is equal to its transpose. Formally,

In linear algebra, an invertible complex square matrix U is unitary if its matrix inverse U−1 equals its conjugate transpose U*, that is, if

In mechanics and geometry, the 3D rotation group, often denoted SO(3), is the group of all rotations about the origin of three-dimensional Euclidean space under the operation of composition.

In mathematics, particularly in linear algebra, a skew-symmetricmatrix is a square matrix whose transpose equals its negative. That is, it satisfies the condition

In the mathematical discipline of linear algebra, a matrix decomposition or matrix factorization is a factorization of a matrix into a product of matrices. There are many different matrix decompositions; each finds use among a particular class of problems.

In linear algebra, a Hessenberg matrix is a special kind of square matrix, one that is "almost" triangular. To be exact, an upper Hessenberg matrix has zero entries below the first subdiagonal, and a lower Hessenberg matrix has zero entries above the first superdiagonal. They are named after Karl Hessenberg.

In linear algebra, a tridiagonal matrix is a band matrix that has nonzero elements only on the main diagonal, the subdiagonal/lower diagonal, and the supradiagonal/upper diagonal. For example, the following matrix is tridiagonal:

In linear algebra, linear transformations can be represented by matrices. If is a linear transformation mapping to and is a column vector with entries, then there exists an matrix , called the transformation matrix of , such that: Note that has rows and columns, whereas the transformation is from to . There are alternative expressions of transformation matrices involving row vectors that are preferred by some authors.

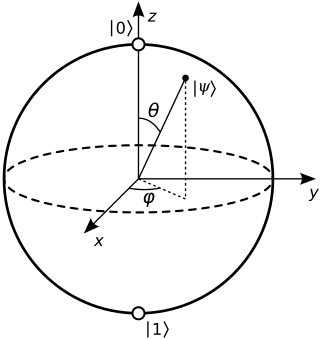

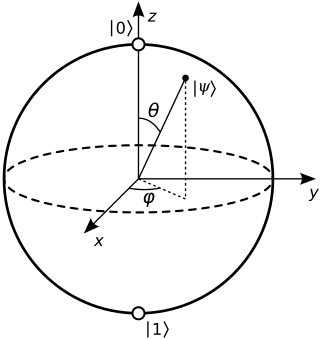

In quantum mechanics and computing, the Bloch sphere is a geometrical representation of the pure state space of a two-level quantum mechanical system (qubit), named after the physicist Felix Bloch.

In linear algebra, a rotation matrix is a transformation matrix that is used to perform a rotation in Euclidean space. For example, using the convention below, the matrix

In geometry, Euler's rotation theorem states that, in three-dimensional space, any displacement of a rigid body such that a point on the rigid body remains fixed, is equivalent to a single rotation about some axis that runs through the fixed point. It also means that the composition of two rotations is also a rotation. Therefore the set of rotations has a group structure, known as a rotation group.

In mathematics, the Cayley transform, named after Arthur Cayley, is any of a cluster of related things. As originally described by Cayley (1846), the Cayley transform is a mapping between skew-symmetric matrices and special orthogonal matrices. The transform is a homography used in real analysis, complex analysis, and quaternionic analysis. In the theory of Hilbert spaces, the Cayley transform is a mapping between linear operators.

The classical XY model is a lattice model of statistical mechanics. In general, the XY model can be seen as a specialization of Stanley's n-vector model for n = 2.

In probability theory and mathematical physics, a random matrix is a matrix-valued random variable—that is, a matrix in which some or all of its entries are sampled randomly from a probability distribution. Random matrix theory (RMT) is the study of properties of random matrices, often as they become large. RMT provides techniques like mean-field theory, diagrammatic methods, the cavity method, or the replica method to compute quantities like traces, spectral densities, or scalar products between eigenvectors. Many physical phenomena, such as the spectrum of nuclei of heavy atoms, the thermal conductivity of a lattice, or the emergence of quantum chaos, can be modeled mathematically as problems concerning large, random matrices.

The Lanczos algorithm is an iterative method devised by Cornelius Lanczos that is an adaptation of power methods to find the "most useful" eigenvalues and eigenvectors of an Hermitian matrix, where is often but not necessarily much smaller than . Although computationally efficient in principle, the method as initially formulated was not useful, due to its numerical instability.

In numerical linear algebra, the Jacobi eigenvalue algorithm is an iterative method for the calculation of the eigenvalues and eigenvectors of a real symmetric matrix. It is named after Carl Gustav Jacob Jacobi, who first proposed the method in 1846, but only became widely used in the 1950s with the advent of computers.

In matrix theory, Sylvester's formula or Sylvester's matrix theorem (named after J. J. Sylvester) or Lagrange−Sylvester interpolation expresses an analytic function f(A) of a matrix A as a polynomial in A, in terms of the eigenvalues and eigenvectors of A. It states that

In the mathematical field of linear algebra and convex analysis, the numerical range or field of values of a complex matrix A is the set