Usability testing is a technique used in user-centered interaction design to evaluate a product by testing it on users. It is widely regarded as an irreplaceable usability practice, since it gives direct input on how real users use the system. It focuses on how intuitive the design is, and is typically carried out with users who have no prior exposure to the product. Such testing matters to the success of an end product because even a fully functioning application will not last long if it confuses its users. This is in contrast with usability inspection methods, where experts use different methods to evaluate a user interface without involving users.

Usability engineering is a professional discipline that focuses on improving the usability of interactive systems. It draws on theories from computer science and psychology to define problems that occur during the use of such a system. Usability engineering involves testing designs at various stages of the development process, with users or with usability experts. The history of usability engineering in this context dates back to the 1980s. In 1988, John Whiteside and John Bennett (of Digital Equipment Corporation and IBM, respectively) published material on the subject, identifying early goal setting, iterative evaluation, and prototyping as key activities. The usability expert Jakob Nielsen is a leader in the field. In his 1993 book Usability Engineering, Nielsen describes methods to use throughout product development so that designers can account for the most important barriers to learnability, efficiency, memorability, error-free use, and subjective satisfaction before implementing the product. Nielsen's work describes how to perform usability tests and how to use usability heuristics in the usability engineering lifecycle. Ensuring good usability through this process helps prevent problems in product adoption after release. Rather than finding solutions for usability problems, which is the focus of a UX or interaction designer, a usability engineer mainly concentrates on the research phase. In this sense, it is not strictly a design role, and many usability engineers have a background in computer science. Even so, its connection to design is crucial, not least because it provides the framework within which designers can work to be sure that their products will connect properly with their target users.

A think-aloud protocol is a method used to gather data in usability testing in product design and development, in psychology, and in a range of social sciences.

Usability can be described as the capacity of a system to let its users perform their tasks safely, effectively, and efficiently while enjoying the experience. In software engineering, usability is the degree to which a software product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.
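As a rough illustration of how effectiveness, efficiency, and satisfaction might be turned into numbers, the sketch below summarizes a handful of test sessions. The `Session` record, the 1 to 5 rating scale, and the choice of "completion rate" and "mean time on successful attempts" as measures are all assumptions made for this example, not definitions taken from a standard.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's attempt at one task (hypothetical record format)."""
    completed: bool    # did the participant reach the goal?
    seconds: float     # time spent on the task
    satisfaction: int  # post-task rating, 1 (low) to 5 (high), assumed scale


def summarize(sessions):
    """Return one number each for effectiveness, efficiency and satisfaction."""
    done = [s for s in sessions if s.completed]
    return {
        # Effectiveness: share of attempts that reached the goal.
        "effectiveness": len(done) / len(sessions),
        # Efficiency: mean time on task, counting successful attempts only.
        "efficiency_seconds": mean(s.seconds for s in done) if done else None,
        # Satisfaction: mean rating across all attempts.
        "satisfaction": mean(s.satisfaction for s in sessions),
    }


# Made-up sample data for illustration only.
sessions = [
    Session(True, 40.0, 4),
    Session(True, 60.0, 5),
    Session(False, 90.0, 2),
    Session(True, 50.0, 5),
]
summary = summarize(sessions)
print(summary)
```

Other operationalizations are equally reasonable, for example counting errors per task or using a standardized questionnaire for satisfaction.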

A heuristic evaluation is a usability inspection method for computer software that helps to identify usability problems in the user interface design. It specifically involves evaluators examining the interface and judging its compliance with recognized usability principles. These evaluation methods are now widely taught and practiced in the new media sector, where user interfaces are often designed in a short space of time on a budget that may restrict the amount of money available to provide for other types of interface testing.
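A minimal sketch of how findings from several evaluators might be merged after they have each examined the interface independently. The tuple layout, the evaluator names, the sample problems, and the 0 to 4 severity scale are assumptions for this example; the heuristic names are taken from Nielsen's widely used list.

```python
from collections import defaultdict
from statistics import mean

# Each finding: (evaluator, heuristic violated, problem label, severity 0-4).
# All entries are made-up sample data.
findings = [
    ("eval_a", "Visibility of system status", "no upload progress", 3),
    ("eval_b", "Visibility of system status", "no upload progress", 4),
    ("eval_b", "Error prevention", "delete has no confirm", 4),
    ("eval_c", "Error prevention", "delete has no confirm", 2),
    ("eval_c", "Consistency and standards", "two save icons", 1),
]


def merge_findings(findings):
    """Group duplicate reports of the same problem and rank by mean severity."""
    by_problem = defaultdict(list)
    for _evaluator, heuristic, problem, severity in findings:
        by_problem[(heuristic, problem)].append(severity)
    merged = [
        {
            "heuristic": heuristic,
            "problem": problem,
            "reports": len(severities),
            "mean_severity": mean(severities),
        }
        for (heuristic, problem), severities in by_problem.items()
    ]
    # Most severe problems first.
    return sorted(merged, key=lambda row: row["mean_severity"], reverse=True)


ranked = merge_findings(findings)
for row in ranked:
    print(row)
```

Averaging severities is only one way to combine ratings; a team could just as well take the maximum or discuss each disagreement.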

User-centered design (UCD) or user-driven development (UDD) is a framework of processes in which usability goals, user characteristics, environment, tasks and workflow of a product, service or process are given extensive attention at each stage of the design process. Tests are conducted, with or without actual users, during each stage of the process, from requirements through pre-production models to post-production, completing a circle of proof and ensuring that "development proceeds with the user as the center of focus." Such testing is necessary because it is often very difficult for the designers of a product to understand intuitively the experiences of first-time users of their design, and what each user's learning curve may look like. User-centered design is based on an understanding of users, their demands, priorities and experiences, and, when used, is known to increase product usefulness and usability as it delivers satisfaction to the user. User-centered design applies cognitive science principles to create intuitive, efficient products by understanding users' mental processes, behaviors, and needs.

Interaction design, often abbreviated as IxD, is "the practice of designing interactive digital products, environments, systems, and services." While interaction design has an interest in form, its main area of focus rests on behavior. Rather than analyzing how things are, interaction design synthesizes and imagines things as they could be. This element of interaction design is what characterizes IxD as a design field, as opposed to a science or engineering field.


Task analysis is a fundamental tool of human factors engineering. It entails analyzing how a task is accomplished, including a detailed description of both manual and mental activities, task and element durations, task frequency, task allocation, task complexity, environmental conditions, necessary clothing and equipment, and any other unique factors involved in or required for one or more people to perform a given task.

GOMS is a specialized human information processor model for human-computer interaction observation that describes a user's cognitive structure in terms of four components. In the 1983 book The Psychology of Human-Computer Interaction, Stuart K. Card, Thomas P. Moran and Allen Newell introduce: "a set of Goals, a set of Operators, a set of Methods for achieving the goals, and a set of Selection rules for choosing among competing methods for goals." GOMS is widely used by usability specialists working with computer system designers because it produces quantitative and qualitative predictions of how people will use a proposed system.
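To make the four components concrete, the sketch below encodes one goal, a few operators with durations, two competing methods, and a selection rule. It borrows the operator notation of the keystroke-level model, a simplified GOMS variant. The operator times are commonly cited approximate values and should be treated as assumptions, as should the two methods and the "pick the fastest" selection rule, which stands in for whatever rule a real user would apply.

```python
# Operators: approximate durations in seconds (assumed values; calibrate
# them for your own users and devices before relying on the estimates).
OPERATOR_SECONDS = {
    "K": 0.2,   # press a key or mouse button
    "P": 1.1,   # point at a target with the mouse
    "H": 0.4,   # move a hand between keyboard and mouse
    "M": 1.35,  # mental preparation
}

# Goal: "delete the selected file".
# Methods: two hypothetical operator sequences that achieve the goal.
METHODS = {
    "menu": ["H", "M", "P", "K", "P", "K"],  # open a menu, click Delete
    "shortcut": ["M", "K"],                  # press the Delete key
}


def estimate_seconds(operators):
    """Predict execution time as the sum of the operator durations."""
    return sum(OPERATOR_SECONDS[op] for op in operators)


def select_method(methods):
    """Selection rule (assumed): choose the method with the lowest estimate."""
    return min(methods, key=lambda name: estimate_seconds(methods[name]))


for name, operators in METHODS.items():
    print(f"{name}: {estimate_seconds(operators):.2f} s")
print("selected:", select_method(METHODS))
```

The quantitative prediction here is the summed time per method; the qualitative one is which method a practiced user would be expected to prefer.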

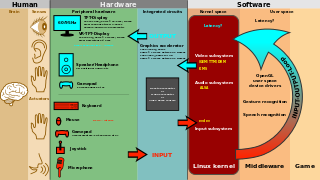

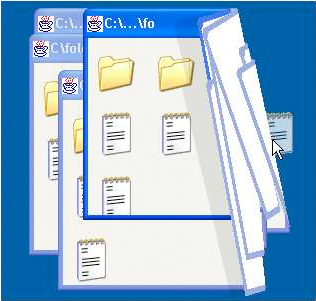

User interface (UI) design or user interface engineering is the design of user interfaces for machines and software, such as computers, home appliances, mobile devices, and other electronic devices, with the focus on maximizing usability and the user experience. In computer or software design, user interface (UI) design primarily focuses on information architecture. It is the process of building interfaces that clearly communicate to the user what's important. UI design refers to graphical user interfaces and other forms of interface design. The goal of user interface design is to make the user's interaction as simple and efficient as possible, in terms of accomplishing user goals.

Cognitive ergonomics is a scientific discipline that studies, evaluates, and designs tasks, jobs, products, environments and systems and how they interact with humans and their cognitive abilities. It is defined by the International Ergonomics Association as "concerned with mental processes, such as perception, memory, reasoning, and motor response, as they affect interactions among humans and other elements of a system." Cognitive ergonomics is responsible for how work is done in the mind, meaning that the quality of work depends on the person's understanding of situations. Situations could include the goals, means, and constraints of work. Relevant topics include mental workload, decision-making, skilled performance, human-computer interaction, human reliability, work stress and training as these may relate to human-system design. Cognitive ergonomics studies cognition in work and operational settings in order to optimize human well-being and system performance. It is a subset of the larger field of human factors and ergonomics.

The human processor model, or MHP (Model Human Processor), is a cognitive modeling method developed by Stuart K. Card, Thomas P. Moran, and Allen Newell (1983) that is used to calculate how long it takes to perform a certain task. Other cognitive modeling methods include parallel design, GOMS, and the keystroke-level model (KLM).
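As a small worked example of such a calculation, the sketch below estimates a simple reaction (see a signal, decide, press a button) by adding one cycle each of a perceptual, a cognitive, and a motor processor. The cycle times are the fast, typical, and slow values usually quoted for the model; treat them as assumptions, and note that the function and variable names are made up for this illustration.

```python
# (fast, typical, slow) cycle times in milliseconds, assumed values.
CYCLE_MS = {
    "perceptual": (50, 100, 200),
    "cognitive": (25, 70, 170),
    "motor": (30, 70, 100),
}


def task_time_ms(steps):
    """Sum cycle times over a sequence of processor steps.

    Returns a (fast, typical, slow) tuple in milliseconds.
    """
    totals = [0, 0, 0]
    for step in steps:
        for i, ms in enumerate(CYCLE_MS[step]):
            totals[i] += ms
    return tuple(totals)


# Simple reaction: perceive the signal, decide to respond, press the button.
simple_reaction = ["perceptual", "cognitive", "motor"]
fast, typical, slow = task_time_ms(simple_reaction)
print(f"typical {typical} ms (range {fast} to {slow} ms)")
```

Reporting a range rather than a single number reflects how much the underlying cycle times vary between people and situations.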

Software security assurance is a process that helps design and implement software that protects the data and resources contained in and controlled by that software. Software is itself a resource and thus must be afforded appropriate security.

Cognitive dimensions or cognitive dimensions of notations are design principles for notations, user interfaces and programming languages, described by researcher Thomas R.G. Green and further researched with Marian Petre. The dimensions can be used to evaluate the usability of an existing information artifact, or as heuristics to guide the design of a new one, and are useful in Human-Computer Interaction design.

User experience design defines the experience a user would go through when interacting with a company, its services, and its products. User experience design is a user-centered design approach because it considers the user's experience when using a product or platform. Research, data analysis, and test results, rather than aesthetic preferences and opinions, drive design decisions in UX design. Unlike user interface design, which focuses solely on the design of a computer interface, UX design encompasses all aspects of a user's perceived experience with a product or website, such as its usability, usefulness, desirability, brand perception, and overall performance. UX design is also an element of the customer experience (CX), which encompasses all aspects and stages of a customer's experience and interaction with a company.

The pluralistic walkthrough is a usability inspection method used to identify usability issues in a piece of software or a website in an effort to create a maximally usable human-computer interface. The method centers on recruiting a group of users, developers and usability professionals to step through a task scenario, discussing usability issues associated with the dialog elements involved in the scenario steps. The experts in the group are asked to assume the role of typical users during the walkthrough. The method is prized because it can be used at the earliest design stages, enabling usability issues to be resolved quickly and early in the design process. It also allows a greater number of usability problems to be detected at one time, owing to the interaction of multiple types of participants. This type of usability inspection method has the additional objective of increasing developers' sensitivity to users' concerns about the product design.

Usability inspection is the name for a set of methods where an evaluator inspects a user interface. This is in contrast to usability testing where the usability of the interface is evaluated by testing it on real users. Usability inspections can generally be used early in the development process by evaluating prototypes or specifications for the system that can't be tested on users. Usability inspection methods are generally considered to be less costly to implement than testing on users.

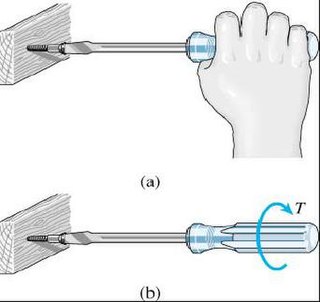

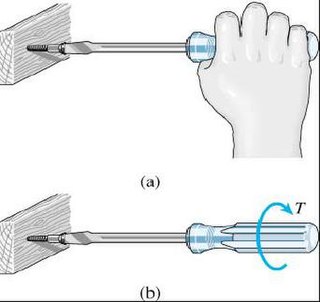

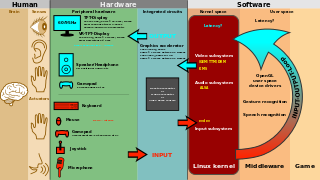

An interaction technique, user interface technique or input technique is a combination of hardware and software elements that provides a way for computer users to accomplish a single task. For example, one can go back to the previously visited page on a Web browser by either clicking a button, pressing a key, performing a mouse gesture or uttering a speech command. It is a widely used term in human-computer interaction. In particular, the term "new interaction technique" is frequently used to introduce a novel user interface design idea.

Tools, devices or software must be evaluated before their release on the market from different points of view, such as their technical properties or their usability. Usability evaluation assesses whether the product under evaluation is efficient enough, effective enough and sufficiently satisfying for its users. For this assessment to be objective, there is a need for measurable goals that the system must achieve. Such goals are called usability goals. They are objective criteria against which the results of the usability evaluation are compared to assess the usability of the product under evaluation.
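The comparison of evaluation results against usability goals can be sketched as a simple pass/fail check. The metric names, thresholds, and measured values below are hypothetical and chosen only to illustrate the idea; real goals would come from the project's own requirements.

```python
import operator

# Hypothetical usability goals: metric name -> (comparison, target value).
GOALS = {
    "completion_rate": (operator.ge, 0.80),     # at least 80% of tasks completed
    "mean_task_seconds": (operator.le, 60.0),   # at most 60 s per task on average
    "mean_satisfaction": (operator.ge, 4.0),    # at least 4 on a 1 to 5 scale
}


def evaluate(measured, goals=GOALS):
    """Return, for each goal, whether the measured value meets the target."""
    return {
        name: compare(measured[name], target)
        for name, (compare, target) in goals.items()
    }


# Made-up results from a usability evaluation.
measured = {
    "completion_rate": 0.75,
    "mean_task_seconds": 50.0,
    "mean_satisfaction": 4.0,
}
results = evaluate(measured)
for name, met in results.items():
    print(f"{name}: {'met' if met else 'not met'}")
```

Keeping each goal as a named metric with an explicit threshold is what makes the assessment objective: the same measured values always lead to the same verdict.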