In animation and filmmaking, a key frame is a drawing or shot that defines the starting and ending points of a smooth transition. These are called frames because their position in time is measured in frames on a strip of film or on a digital video editing timeline. A sequence of key frames defines which movement the viewer will see, whereas the position of the key frames on the film, video, or animation defines the timing of the movement. Because only two or three key frames over the span of a second do not create the illusion of movement, the remaining frames are filled with "inbetweens".

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical.

The Original Chip Set (OCS) is a chipset used in the earliest Commodore Amiga computers and defined the Amiga's graphics and sound capabilities. It was succeeded by the slightly improved Enhanced Chip Set (ECS) and greatly improved Advanced Graphics Architecture (AGA).

The Real-time Transport Protocol (RTP) is a network protocol for delivering audio and video over IP networks. RTP is used in communication and entertainment systems that involve streaming media, such as telephony, video teleconference applications including WebRTC, television services and web-based push-to-talk features.

In telecommunication, frame synchronization or framing is the process by which, while receiving a stream of framed data, incoming frame alignment signals are identified, permitting the data bits within the frame to be extracted for decoding or retransmission.

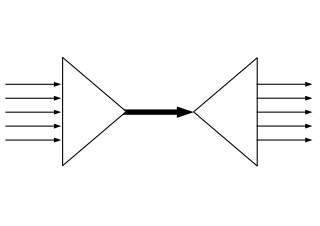

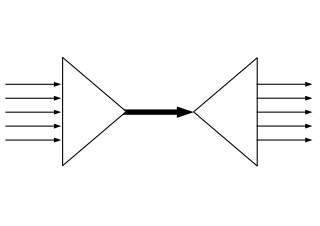

Time-division multiplexing (TDM) is a method of transmitting and receiving independent signals over a common signal path by means of synchronized switches at each end of the transmission line so that each signal appears on the line only a fraction of time in an alternating pattern. This method transmits two or more digital signals or analog signals over a common channel. It can be used when the bit rate of the transmission medium exceeds that of the signal to be transmitted. This form of signal multiplexing was developed in telecommunications for telegraphy systems in the late 19th century, but found its most common application in digital telephony in the second half of the 20th century.

In computing, endianness is the order or sequence of bytes of a word of digital data in computer memory. Endianness is primarily expressed as big-endian (BE) or little-endian (LE). A big-endian system stores the most significant byte of a word at the smallest memory address and the least significant byte at the largest. A little-endian system, in contrast, stores the least-significant byte at the smallest address. Bi-endianness is a feature supported by numerous computer architectures that feature switchable endianness in data fetches and stores or for instruction fetches. Other orderings are generically called middle-endian or mixed-endian.

A bitstream, also known as binary sequence, is a sequence of bits.

High-Level Data Link Control (HDLC) is a bit-oriented code-transparent synchronous data link layer protocol developed by the International Organization for Standardization (ISO). The standard for HDLC is ISO/IEC 13239:2002.

The data link layer, or layer 2, is the second layer of the seven-layer OSI model of computer networking. This layer is the protocol layer that transfers data between nodes on a network segment across the physical layer. The data link layer provides the functional and procedural means to transfer data between network entities and may also provide the means to detect and possibly correct errors that can occur in the physical layer.

Motion JPEG is a video compression format in which each video frame or interlaced field of a digital video sequence is compressed separately as a JPEG image.

AES3 is a standard for the exchange of digital audio signals between professional audio devices. An AES3 signal can carry two channels of pulse-code-modulated digital audio over several transmission media including balanced lines, unbalanced lines, and optical fiber.

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 91% of video industry developers as of September 2019. It supports resolutions up to and including 8K UHD.

In computer networking and telecommunications, TDM over IP (TDMoIP) is the emulation of time-division multiplexing (TDM) over a packet-switched network (PSN). TDM refers to a T1, E1, T3 or E3 signal, while the PSN is based either on IP or MPLS or on raw Ethernet. A related technology is circuit emulation, which enables transport of TDM traffic over cell-based (ATM) networks.

Α video codec is software or a device that provides encoding and decoding for digital video, and which may or may not include the use of video compression and/or decompression. Most codecs are typically implementations of video coding formats.

Binary Synchronous Communication is an IBM character-oriented, half-duplex link protocol, announced in 1967 after the introduction of System/360. It replaced the synchronous transmit-receive (STR) protocol used with second generation computers. The intent was that common link management rules could be used with three different character encodings for messages. Six-bit Transcode looked backwards to older systems; USASCII with 128 characters and EBCDIC with 256 characters looked forward. Transcode disappeared very quickly but the EBCDIC and USASCII dialects of Bisync continued in use.

Media Foundation (MF) is a COM-based multimedia framework pipeline and infrastructure platform for digital media in Windows Vista, Windows 7, Windows 8, Windows 8.1, Windows 10, and Windows 11. It is the intended replacement for Microsoft DirectShow, Windows Media SDK, DirectX Media Objects (DMOs) and all other so-called "legacy" multimedia APIs such as Audio Compression Manager (ACM) and Video for Windows (VfW). The existing DirectShow technology is intended to be replaced by Media Foundation step-by-step, starting with a few features. For some time there will be a co-existence of Media Foundation and DirectShow. Media Foundation will not be available for previous Windows versions, including Windows XP.

In computer networking, an Ethernet frame is a data link layer protocol data unit and uses the underlying Ethernet physical layer transport mechanisms. In other words, a data unit on an Ethernet link transports an Ethernet frame as its payload.

IEC 60870 part 5 is one of the IEC 60870 set of standards which define systems used for telecontrol in electrical engineering and power system automation applications. Part 5 provides a communication profile for sending basic telecontrol messages between two systems, which uses permanent directly connected data circuits between the systems. The IEC Technical Committee 57 have developed a protocol standard for telecontrol, teleprotection, and associated telecommunications for electric power systems. The result of this work is IEC 60870-5. Five documents specify the base IEC 60870-5:

A ground segment consists of all the ground-based elements of a space system used by operators and support personnel, as opposed to the space segment and user segment. The ground segment enables management of a spacecraft, and distribution of payload data and telemetry among interested parties on the ground. The primary elements of a ground segment are: