In computational complexity theory, bounded-error quantum polynomial time (BQP) is the class of decision problems solvable by a quantum computer in polynomial time, with an error probability of at most 1/3 for all instances. It is the quantum analogue to the complexity class BPP.

In mathematics, specifically category theory, a functor is a mapping between categories. Functors were first considered in algebraic topology, where algebraic objects are associated to topological spaces, and maps between these algebraic objects are associated to continuous maps between spaces. Nowadays, functors are used throughout modern mathematics to relate various categories. Thus, functors are important in all areas within mathematics to which category theory is applied.

In computational complexity theory, the class NC is the set of decision problems decidable in polylogarithmic time on a parallel computer with a polynomial number of processors. In other words, a problem with input size n is in NC if there exist constants c and k such that it can be solved in time O(logc n) using O(nk) parallel processors. Stephen Cook coined the name "Nick's class" after Nick Pippenger, who had done extensive research on circuits with polylogarithmic depth and polynomial size.

In the mathematical discipline of set theory, forcing is a technique for proving consistency and independence results. It was first used by Paul Cohen in 1963, to prove the independence of the axiom of choice and the continuum hypothesis from Zermelo–Fraenkel set theory.

Hamiltonian mechanics emerged in 1833 as a reformulation of Lagrangian mechanics. Introduced by Sir William Rowan Hamilton, Hamiltonian mechanics replaces (generalized) velocities used in Lagrangian mechanics with (generalized) momenta. Both theories provide interpretations of classical mechanics and describe the same physical phenomena.

In topology and related branches of mathematics, the Kuratowski closure axioms are a set of axioms that can be used to define a topological structure on a set. They are equivalent to the more commonly used open set definition. They were first formalized by Kazimierz Kuratowski, and the idea was further studied by mathematicians such as Wacław Sierpiński and António Monteiro, among others.

In computational complexity theory, a complexity class is a set of computational problems of related resource-based complexity. The two most commonly analyzed resources are time and memory.

In computational complexity theory, P, also known as PTIME or DTIME(nO ), is a fundamental complexity class. It contains all decision problems that can be solved by a deterministic Turing machine using a polynomial amount of computation time, or polynomial time.

In mathematics, a norm is a function from a real or complex vector space to the non-negative real numbers that behaves in certain ways like the distance from the origin: it commutes with scaling, obeys a form of the triangle inequality, and is zero only at the origin. In particular, the Euclidean distance of a vector from the origin is a norm, called the Euclidean norm, or 2-norm, which may also be defined as the square root of the inner product of a vector with itself.

The Gauss–Newton algorithm is used to solve non-linear least squares problems, which is equivalent to minimizing a sum of squared function values. It is an extension of Newton's method for finding a minimum of a non-linear function. Since a sum of squares must be nonnegative, the algorithm can be viewed as using Newton's method to iteratively approximate zeroes of the sum, and thus minimizing the sum. It has the advantage that second derivatives, which can be challenging to compute, are not required.

In mathematics, fuzzy measure theory considers generalized measures in which the additive property is replaced by the weaker property of monotonicity. The central concept of fuzzy measure theory is the fuzzy measure which was introduced by Choquet in 1953 and independently defined by Sugeno in 1974 in the context of fuzzy integrals. There exists a number of different classes of fuzzy measures including plausibility/belief measures; possibility/necessity measures; and probability measures which are a subset of classical measures.

In statistics, ordinary least squares (OLS) is a type of linear least squares method for choosing the unknown parameters in a linear regression model by the principle of least squares: minimizing the sum of the squares of the differences between the observed dependent variable in the input dataset and the output of the (linear) function of the independent variable.

In mathematics, a flow formalizes the idea of the motion of particles in a fluid. Flows are ubiquitous in science, including engineering and physics. The notion of flow is basic to the study of ordinary differential equations. Informally, a flow may be viewed as a continuous motion of points over time. More formally, a flow is a group action of the real numbers on a set.

Bayesian linear regression is a type of conditional modeling in which the mean of one variable is described by a linear combination of other variables, with the goal of obtaining the posterior probability of the regression coefficients and ultimately allowing the out-of-sample prediction of the regressandconditional on observed values of the regressors. The simplest and most widely used version of this model is the normal linear model, in which given is distributed Gaussian. In this model, and under a particular choice of prior probabilities for the parameters—so-called conjugate priors—the posterior can be found analytically. With more arbitrarily chosen priors, the posteriors generally have to be approximated.

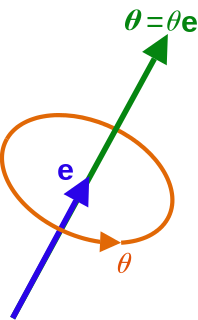

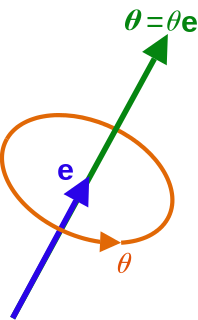

In mathematics, the axis–angle representation of a rotation parameterizes a rotation in a three-dimensional Euclidean space by two quantities: a unit vector e indicating the direction of an axis of rotation, and an angle θ describing the magnitude of the rotation about the axis. Only two numbers, not three, are needed to define the direction of a unit vector e rooted at the origin because the magnitude of e is constrained. For example, the elevation and azimuth angles of e suffice to locate it in any particular Cartesian coordinate frame.

Non-linear least squares is the form of least squares analysis used to fit a set of m observations with a model that is non-linear in n unknown parameters (m ≥ n). It is used in some forms of nonlinear regression. The basis of the method is to approximate the model by a linear one and to refine the parameters by successive iterations. There are many similarities to linear least squares, but also some significant differences. In economic theory, the non-linear least squares method is applied in (i) the probit regression, (ii) threshold regression, (iii) smooth regression, (iv) logistic link regression, (v) Box-Cox transformed regressors.

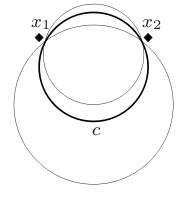

Stokes's theorem, also known as the Kelvin–Stokes theorem after Lord Kelvin and George Stokes, the fundamental theorem for curls or simply the curl theorem, is a theorem in vector calculus on R3. Given a vector field, the theorem relates the integral of the curl of the vector field over some surface, to the line integral of the vector field around the boundary of the surface. The classical Stokes' theorem can be stated in one sentence: The line integral of a vector field over a loop is equal to the flux of its curl through the enclosed surface.

Linear least squares (LLS) is the least squares approximation of linear functions to data. It is a set of formulations for solving statistical problems involved in linear regression, including variants for ordinary (unweighted), weighted, and generalized (correlated) residuals. Numerical methods for linear least squares include inverting the matrix of the normal equations and orthogonal decomposition methods.

In mathematics, a polyadic space is a topological space that is the image under a continuous function of a topological power of an Alexandroff one-point compactification of a discrete space.

Short integer solution (SIS) and ring-SIS problems are two average-case problems that are used in lattice-based cryptography constructions. Lattice-based cryptography began in 1996 from a seminal work by Miklós Ajtai who presented a family of one-way functions based on SIS problem. He showed that it is secure in an average case if the shortest vector problem is hard in a worst-case scenario.