Autocorrelation, sometimes known as serial correlation in the discrete time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time domain signals.

In mathematics, an equation is a mathematical formula that expresses the equality of two expressions, by connecting them with the equals sign =. The word equation and its cognates in other languages may have subtly different meanings; for example, in French an équation is defined as containing one or more variables, while in English, any well-formed formula consisting of two expressions related with an equals sign is an equation.

In mathematics, the term linear is used in two distinct senses for two different properties:

In mathematics, an algebra over a field is a vector space equipped with a bilinear product. Thus, an algebra is an algebraic structure consisting of a set together with operations of multiplication and addition and scalar multiplication by elements of a field and satisfying the axioms implied by "vector space" and "bilinear".

In mathematics, a linear differential equation is a differential equation that is defined by a linear polynomial in the unknown function and its derivatives, that is an equation of the form where a0(x), ..., an(x) and b(x) are arbitrary differentiable functions that do not need to be linear, and y′, ..., y(n) are the successive derivatives of an unknown function y of the variable x.

A maximum length sequence (MLS) is a type of pseudorandom binary sequence.

Reed–Muller codes are error-correcting codes that are used in wireless communications applications, particularly in deep-space communication. Moreover, the proposed 5G standard relies on the closely related polar codes for error correction in the control channel. Due to their favorable theoretical and mathematical properties, Reed–Muller codes have also been extensively studied in theoretical computer science.

In mathematics, the correlation immunity of a Boolean function is a measure of the degree to which its outputs are uncorrelated with some subset of its inputs. Specifically, a Boolean function is said to be correlation-immune of order m if every subset of m or fewer variables in is statistically independent of the value of .

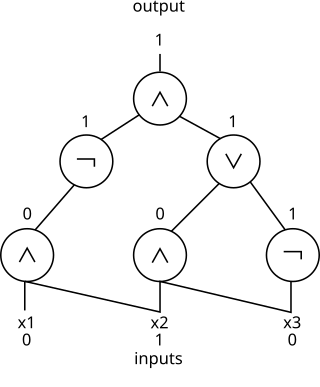

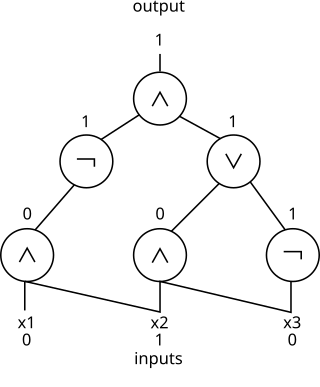

In theoretical computer science, circuit complexity is a branch of computational complexity theory in which Boolean functions are classified according to the size or depth of the Boolean circuits that compute them. A related notion is the circuit complexity of a recursive language that is decided by a uniform family of circuits .

Zhegalkinpolynomials, also known as algebraic normal form, are a representation of functions in Boolean algebra. Introduced by the Russian mathematician Ivan Ivanovich Zhegalkin in 1927, they are the polynomial ring over the integers modulo 2. The resulting degeneracies of modular arithmetic result in Zhegalkin polynomials being simpler than ordinary polynomials, requiring neither coefficients nor exponents. Coefficients are redundant because 1 is the only nonzero coefficient. Exponents are redundant because in arithmetic mod 2, x2 = x. Hence a polynomial such as 3x2y5z is congruent to, and can therefore be rewritten as, xyz.

In algebra, a multilinear polynomial is a multivariate polynomial that is linear in each of its variables separately, but not necessarily simultaneously. It is a polynomial in which no variable occurs to a power of or higher; that is, each monomial is a constant times a product of distinct variables. For example is a multilinear polynomial of degree whereas is not. The degree of a multilinear polynomial is the maximum number of distinct variables occurring in any monomial.

In mathematics, a symmetric Boolean function is a Boolean function whose value does not depend on the order of its input bits, i.e., it depends only on the number of ones in the input. For this reason they are also known as Boolean counting functions.

In Boolean algebra, a parity function is a Boolean function whose value is one if and only if the input vector has an odd number of ones. The parity function of two inputs is also known as the XOR function.

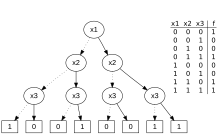

In computational complexity theory, the decision tree model is the model of computation in which an algorithm can be considered to be a decision tree, i.e. a sequence of queries or tests that are done adaptively, so the outcome of previous tests can influence the tests performed next.

In the mathematical field of combinatorics, a bent function is a Boolean function that is maximally non-linear; it is as different as possible from the set of all linear and affine functions when measured by Hamming distance between truth tables. Concretely, this means the maximum correlation between the output of the function and a linear function is minimal. In addition, the derivatives of a bent function are balanced Boolean functions, so for any change in the input variables there is a 50 percent chance that the output value will change.

A locally decodable code (LDC) is an error-correcting code that allows a single bit of the original message to be decoded with high probability by only examining a small number of bits of a possibly corrupted codeword. This property could be useful, say, in a context where information is being transmitted over a noisy channel, and only a small subset of the data is required at a particular time and there is no need to decode the entire message at once. Locally decodable codes are not a subset of locally testable codes, though there is some overlap between the two.

In cryptography, SWIFFT is a collection of provably secure hash functions. It is based on the concept of the fast Fourier transform (FFT). SWIFFT is not the first hash function based on the FFT, but it sets itself apart by providing a mathematical proof of its security. It also uses the LLL basis reduction algorithm. It can be shown that finding collisions in SWIFFT is at least as difficult as finding short vectors in cyclic/ideal lattices in the worst case. By giving a security reduction to the worst-case scenario of a difficult mathematical problem, SWIFFT gives a much stronger security guarantee than most other cryptographic hash functions.

In theoretical computer science, multiparty communication complexity is the study of communication complexity in the setting where there are more than 2 players.

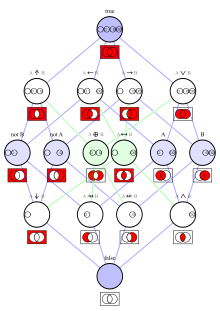

In mathematics and mathematical logic, Boolean algebra is a branch of algebra. It differs from elementary algebra in two ways. First, the values of the variables are the truth values true and false, usually denoted 1 and 0, whereas in elementary algebra the values of the variables are numbers. Second, Boolean algebra uses logical operators such as conjunction (and) denoted as ∧, disjunction (or) denoted as ∨, and negation (not) denoted as ¬. Elementary algebra, on the other hand, uses arithmetic operators such as addition, multiplication, subtraction, and division. Boolean algebra is therefore a formal way of describing logical operations in the same way that elementary algebra describes numerical operations.