
Concatenative synthesis is a technique for synthesising sounds by concatenating short samples of recorded sound (called units). The duration of the units is not strictly defined and varies by implementation, roughly from 10 milliseconds up to 1 second. It is used in speech synthesis and music sound synthesis to generate user-specified sequences of sound from a database (often called a corpus) built from recordings of other sequences.

In contrast to granular synthesis, concatenative synthesis is driven by an analysis of the source sound, in order to identify the units that best match the specified criterion. [1]
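The analysis-driven unit selection described above can be sketched in Python. This is a minimal illustration under simplifying assumptions, not any particular system's method: units are fixed-length slices rather than analysed segments, each unit is described by only two assumed descriptors (RMS energy and zero-crossing rate), and the selection criterion is plain Euclidean distance between descriptor vectors. Real systems typically also weigh a concatenation cost between neighbouring units and crossfade at the joins.

```python
import math

def rms(unit):
    """Root-mean-square energy of one unit."""
    return math.sqrt(sum(x * x for x in unit) / len(unit))

def zcr(unit):
    """Fraction of adjacent sample pairs whose sign differs (zero counted as positive)."""
    crossings = sum(1 for a, b in zip(unit, unit[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(unit) - 1)

def descriptors(unit):
    # Assumed descriptor set; real systems use many more (pitch, spectral shape, ...).
    return (rms(unit), zcr(unit))

def segment(signal, unit_len):
    """Cut a signal into consecutive fixed-length units; a short remainder is dropped."""
    return [signal[i:i + unit_len]
            for i in range(0, len(signal) - unit_len + 1, unit_len)]

def concatenative_synth(target, corpus, unit_len):
    """Rebuild `target` from corpus units: for each target unit, copy the corpus
    unit whose descriptor vector is nearest (target cost only, no join cost)."""
    corpus_units = segment(corpus, unit_len)
    corpus_desc = [descriptors(u) for u in corpus_units]
    output = []
    for t in segment(target, unit_len):
        d = descriptors(t)
        best = min(range(len(corpus_units)),
                   key=lambda i: math.dist(d, corpus_desc[i]))
        output.extend(corpus_units[best])
    return output

# Usage: a corpus with one quiet constant unit and one loud alternating unit.
corpus = [0.1] * 8 + [1.0, -1.0] * 4
target = [0.9, -0.9] * 4 + [0.2] * 8
print(concatenative_synth(target, corpus, unit_len=8))
```

Each loud, rapidly alternating target unit is replaced by the loud corpus unit and each quiet, steady one by the quiet corpus unit, so the output is assembled entirely from recorded corpus material.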


Concatenative synthesis for music began to develop in the 2000s, in particular through the work of Schwarz [2] and Pachet [3] (so-called musaicing). The basic techniques are similar to those for speech, with differences due to the differing nature of speech and music: for example, the segmentation is not into phonetic units but often into subunits of musical notes or events. [1] [2] [4]
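Segmenting a recording into note-level events rather than phonetic units can be approximated, purely for illustration, with energy-based onset detection: a new event starts wherever frame energy rises across a threshold. The frame size (`hop`) and `threshold` below are hypothetical parameters, and practical musaicing systems generally use more robust onset detectors.

```python
def frame_energy(signal, hop):
    """Mean squared amplitude of consecutive non-overlapping frames of `hop` samples."""
    return [sum(x * x for x in signal[i:i + hop]) / hop
            for i in range(0, len(signal) - hop + 1, hop)]

def onset_segments(signal, hop, threshold):
    """Split `signal` into events, each starting at a frame whose energy reaches
    `threshold` after a frame below it (or at the very first frame).
    Material before the first onset is discarded."""
    energies = frame_energy(signal, hop)
    onsets = [i * hop for i, e in enumerate(energies)
              if e >= threshold and (i == 0 or energies[i - 1] < threshold)]
    bounds = onsets + [len(signal)]
    return [signal[a:b] for a, b in zip(bounds, bounds[1:])]

# Usage: silence, a loud note followed by a gap, then a softer note.
signal = [0.0] * 4 + [1.0] * 4 + [0.0] * 4 + [0.5] * 4
for event in onset_segments(signal, hop=4, threshold=0.1):
    print(event)
```

Each resulting event (a note plus whatever follows it up to the next onset) would then become one unit in the corpus.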

Zero Point, the first full-length album by Rob Clouth (Mesh 2020), features self-made concatenative synthesis software called the 'Reconstructor' which "chops sampled sounds into tiny pieces and rearranges them to replicate a target sound. This allowed Clouth to use and manipulate his own beatboxing, a technique used on 'Into' and 'The Vacuum State'." [5] Clouth's concatenative synthesis algorithm was adapted from 'Let It Bee — Towards NMF-Inspired Audio Mosaicing' by Jonathan Driedger, Thomas Prätzlich, and Meinard Müller. [6] [7]
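The core idea behind NMF-based audio mosaicing is to hold a template matrix W fixed, with one column per magnitude-spectrum frame of the source (corpus) sound, and to learn only the non-negative activation matrix H so that W·H approximates the target spectrogram V. The sketch below shows that step alone, using the standard Lee and Seung multiplicative update for the generalised Kullback-Leibler divergence. It is an illustration under that assumption, not Clouth's Reconstructor or the exact algorithm of the Driedger, Prätzlich and Müller paper: that paper's further modifications to the updates are omitted, as are the spectral analysis and resynthesis stages. Plain lists keep it dependency-free.

```python
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def learn_activations(V, W, iterations=200, eps=1e-12, seed=0):
    """Find non-negative H (K x N) with V ~= W @ H while W (M x K) stays fixed.
    Multiplicative update for the generalised KL divergence:
        H[k][n] *= (sum_m W[m][k] * V[m][n] / (WH)[m][n]) / sum_m W[m][k]
    """
    rng = random.Random(seed)
    M, K, N = len(V), len(W[0]), len(V[0])
    H = [[rng.random() + 0.1 for _ in range(N)] for _ in range(K)]  # strictly positive start
    w_col_sums = [sum(W[m][k] for m in range(M)) for k in range(K)]
    for _ in range(iterations):
        WH = matmul(W, H)  # one snapshot shared by every update in this pass
        for k in range(K):
            for n in range(N):
                num = sum(W[m][k] * V[m][n] / (WH[m][n] + eps) for m in range(M))
                H[k][n] *= num / (w_col_sums[k] + eps)
    return H

# Usage: two hypothetical 3-bin "corpus spectra" as the columns of W.
W = [[1.0, 0.0],
     [0.0, 1.0],
     [0.5, 0.5]]
H_true = [[2.0, 0.0],
          [0.0, 3.0]]
V = matmul(W, H_true)  # target frame 0 uses only template 0, frame 1 only template 1
H = learn_activations(V, W)
print([[round(h, 3) for h in row] for row in H])
```

Because W never changes, the learned H says how strongly each corpus frame is "played" at each target frame; a mosaicing system would then use these activations to mix the corresponding corpus audio into the output.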

Clouth's work on Zero Point was cited as an inspiration for recent innovations in concatenative synthesis outlined in "The Concatenator: A Bayesian Approach to Real Time Concatenative Musaicing" by Chris Tralie and Ben Cantil (ISMIR 2024), which improved on the speed, accuracy, and playability of prior real-time concatenative synthesis methods. [8] [9] The new algorithm serves as the engine behind the Concatenator plugin by DataMind Audio, which is currently still in beta. [10]

Additive synthesis is a sound synthesis technique that creates timbre by adding sine waves together.
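As a minimal sketch of the idea, the function below sums sine partials, each given as a (frequency in Hz, amplitude, phase in radians) tuple; that tuple layout is an assumption made for illustration. The choice of partials determines the timbre: the usage line builds a sawtooth-like tone from the first eight harmonics with amplitudes falling as 1/k. Practical additive synthesisers usually also vary each partial's amplitude over time, which this static version omits.

```python
import math

def additive(partials, num_samples, sample_rate=44100):
    """Return num_samples of the sum of sine partials.
    Each partial is (frequency_hz, amplitude, phase_radians)."""
    return [sum(amp * math.sin(2 * math.pi * freq * n / sample_rate + phase)
                for freq, amp, phase in partials)
            for n in range(num_samples)]

# Usage: harmonics k = 1..8 of 220 Hz with amplitude 1/k approximate a sawtooth.
saw_like = additive([(220 * k, 1 / k, 0.0) for k in range(1, 9)], num_samples=1000)
```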

Audio signal processing is a subfield of signal processing concerned with the electronic manipulation of audio signals. Audio signals are electronic representations of sound waves: longitudinal waves that travel through air as alternating compressions and rarefactions. The energy contained in audio signals, or sound power level, is typically measured in decibels. Because audio signals may be represented in either digital or analog format, processing may occur in either domain. Analog processors operate directly on the electrical signal, while digital processors operate mathematically on its digital representation.
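Decibel values are logarithmic ratios against a reference: 10·log10 of the ratio for power quantities and 20·log10 for amplitude quantities, since power is proportional to the square of amplitude. The sketch below shows both, plus a helper for digital signal levels whose reference (an RMS of 1.0 counts as 0 dB) is an assumption, because conventions for full-scale digital levels differ.

```python
import math

def power_db(power, ref_power):
    """Level of a power quantity in decibels relative to ref_power."""
    return 10 * math.log10(power / ref_power)

def amplitude_db(amplitude, ref_amplitude):
    """Level of an amplitude quantity in decibels; equals power_db of the squares."""
    return 20 * math.log10(amplitude / ref_amplitude)

def rms_level_db(samples, ref_rms=1.0):
    """RMS level of a digital signal relative to an assumed reference RMS of 1.0."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return amplitude_db(rms, ref_rms)

# Usage: doubling power adds about 3 dB; halving amplitude subtracts about 6 dB.
print(round(power_db(2.0, 1.0), 2), round(rms_level_db([0.5, -0.5]), 2))
```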

Computer music is the application of computing technology in music composition, to help human composers create new music or to have computers independently create music, such as with algorithmic composition programs. It includes the theory and application of new and existing computer software technologies and basic aspects of music, such as sound synthesis, digital signal processing, sound design, sonic diffusion, acoustics, electrical engineering, and psychoacoustics. The field of computer music can trace its roots back to the origins of electronic music, and the first experiments and innovations with electronic instruments at the turn of the 20th century.

A vocoder is a category of speech coding that analyzes and synthesizes the human voice signal for audio data compression, multiplexing, voice encryption or voice transformation.

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition.

Time stretching is the process of changing the speed or duration of an audio signal without affecting its pitch. Pitch scaling is the opposite: the process of changing the pitch without affecting the speed. Pitch shift is pitch scaling implemented in an effects unit and intended for live performance. Pitch control is a simpler process which affects pitch and speed simultaneously by slowing down or speeding up a recording.
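Of these processes, pitch control is the simplest to sketch: reading the samples back at a different rate changes duration and pitch together, like speeding up or slowing down a tape. The function below does this with linear interpolation, an assumed choice; higher-quality resamplers use band-limited interpolation. Time stretching and pitch scaling need more elaborate methods, such as phase-vocoder or overlap-add techniques, to hold one of the two properties fixed.

```python
def varispeed(samples, rate):
    """Resample by reading `rate` input samples per output sample, with linear
    interpolation. rate=2.0 halves the duration and raises pitch by an octave;
    rate=0.5 doubles the duration and lowers pitch by an octave."""
    out = []
    pos = 0.0
    last = len(samples) - 1
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i < last else samples[i]
        out.append(samples[i] * (1 - frac) + nxt * frac)
        pos += rate
    return out

# Usage: halving the speed doubles the length and fills in interpolated values.
print(varispeed([0.0, 2.0, 4.0], 0.5))  # prints [0.0, 1.0, 2.0, 3.0, 4.0]
```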

Digital music technology encompasses the use of digital instruments to produce, perform or record music. These instruments vary widely and include computers, electronic effects units, software, and digital audio equipment. Digital music technology is used in the performance, playback, recording, composition, mixing, analysis and editing of music by professionals in all parts of the music industry.

Wavetable synthesis is a sound synthesis technique used to create quasi-periodic waveforms, often for the production of musical tones or notes.
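A common way to realise a wavetable oscillator, assumed here, is a phase accumulator that steps through a stored single-cycle waveform at a rate proportional to the desired frequency, interpolating linearly between neighbouring table entries. The table contents and sizes in the usage lines are illustrative.

```python
import math

def make_sine_table(size):
    """One cycle of a sine wave stored in `size` entries."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def wavetable_osc(table, freq, num_samples, sample_rate):
    """Read a single-cycle table with a phase accumulator and linear interpolation."""
    size = len(table)
    increment = freq * size / sample_rate  # table entries advanced per output sample
    phase = 0.0
    out = []
    for _ in range(num_samples):
        i = int(phase)
        frac = phase - i
        a, b = table[i], table[(i + 1) % size]  # wrap to the table start at the end
        out.append(a + (b - a) * frac)
        phase = (phase + increment) % size
    return out

# Usage: one second of a 440 Hz tone from a 2048-entry sine table.
tone = wavetable_osc(make_sine_table(2048), 440.0, 44100, 44100)
```

Swapping in a different single-cycle table, or crossfading between several, changes the timbre without changing the oscillator logic.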

Granular synthesis is a sound synthesis method that operates on the microsound time scale.
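A minimal granular synthesis sketch, under assumed parameters: short grains are read from a source at chosen positions, shaped by a Hann window so that they fade in and out, and overlap-added into the output at a fixed output hop. Choosing the read positions independently of the output hop is what lets granular methods stretch, freeze or scramble a sound. Grain length, hop and positions are hypothetical values in samples.

```python
import math

def hann(n):
    """Symmetric Hann window of n samples (n >= 2)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def granulate(source, grain_len, hop_out, positions):
    """Overlap-add Hann-windowed grains read from `source` at `positions`;
    grain k is placed at output offset k * hop_out. `positions` must be non-empty."""
    window = hann(grain_len)
    out = [0.0] * ((len(positions) - 1) * hop_out + grain_len)
    for k, start in enumerate(positions):
        grain = source[start:start + grain_len]  # may be shorter near the end of source
        for i, x in enumerate(grain):
            out[k * hop_out + i] += x * window[i]
    return out

# Usage: "freeze" a moment by reading the same position repeatedly with 50% overlap.
source = [math.sin(2 * math.pi * 440 * n / 44100) for n in range(44100)]
frozen = granulate(source, grain_len=2048, hop_out=1024, positions=[10000] * 40)
```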

IRCAM is a French institute dedicated to research into music and sound, especially in the fields of avant-garde and electro-acoustical art music. It is situated next to, and is organisationally linked with, the Centre Pompidou in Paris. The extension of the building was designed by Renzo Piano and Richard Rogers. Much of the institute is located underground, beneath the fountain to the east of the buildings.

ChucK is a concurrent, strongly timed audio programming language for real-time synthesis, composition, and performance, which runs on Linux, Mac OS X, Microsoft Windows, and iOS. It is designed to favor readability and flexibility for the programmer over other considerations such as raw performance. It natively supports deterministic concurrency and multiple, simultaneous, dynamic control rates. Another key feature is the ability to live code: adding, removing, and modifying code on the fly while the program is running, without stopping or restarting it. Its highly precise timing and concurrency model allows arbitrarily fine granularity. It offers composers and researchers a powerful and flexible programming tool for building and experimenting with complex audio synthesis programs and real-time interactive control.

MUSIC-N refers to a family of computer music programs and programming languages descended from or influenced by MUSIC, a program written by Max Mathews in 1957 at Bell Labs. MUSIC was the first computer program for generating digital audio waveforms through direct synthesis. It was one of the first programs for making music on a digital computer, and was certainly the first program to gain wide acceptance in the music research community as viable for that task. The world's first computer-controlled music was generated in Australia by programmer Geoff Hill on the CSIRAC computer, which was designed and built by Trevor Pearcey and Maston Beard. However, CSIRAC produced sound by sending raw pulses to the speaker; it did not produce standard digital audio with PCM samples, as the MUSIC series of programs did.

Pure Data (Pd) is a visual programming language developed by Miller Puckette in the 1990s for creating interactive computer music and multimedia works. While Puckette is the main author of the program, Pd is an open-source project with a large developer base working on new extensions. It is released under the BSD-3-Clause license. It runs on Linux, macOS, iOS, Android and Windows, and ports exist for FreeBSD and IRIX.

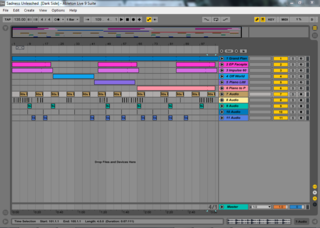

Ableton Live is a digital audio workstation for macOS and Windows developed by the German company Ableton.

The zero-crossing rate (ZCR) is the rate at which a signal changes from positive to zero to negative or from negative to zero to positive. It has been widely used in both speech recognition and music information retrieval, and is a key feature for classifying percussive sounds.
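Following that definition, the sketch below counts sign changes while skipping exact zeros, so a positive, zero, negative sequence counts as one crossing. Normalising by the number of sample intervals is an assumed convention (some texts normalise by frame length or report crossings per second); it yields a value between 0 and 1. Noise-like, percussive material tends to score much higher than low-pitched tones, which is why the feature helps with classification.

```python
import math
import random

def zero_crossing_rate(frame):
    """Sign changes per sample interval; exact zeros are skipped so that
    positive -> 0 -> negative counts as a single crossing."""
    crossings = 0
    prev_sign = 0  # sign of the most recent non-zero sample
    for x in frame:
        sign = (x > 0) - (x < 0)
        if sign != 0:
            if prev_sign != 0 and sign != prev_sign:
                crossings += 1
            prev_sign = sign
    return crossings / (len(frame) - 1)

# Usage: white noise versus a 50 Hz tone sampled at 8 kHz.
rng = random.Random(1)
noise = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
tone = [math.sin(2 * math.pi * 50 * n / 8000 + 0.1) for n in range(1000)]
print(zero_crossing_rate(noise), zero_crossing_rate(tone))
```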

Eduardo Reck Miranda is a Brazilian composer of chamber and electroacoustic pieces, but he is most notable in the United Kingdom for his scientific research into computer music, particularly in human-machine interfaces in which brain waves could replace keyboards and voice commands, allowing people with disabilities to express themselves musically.

Symbolic Sound Corporation was founded by Carla Scaletti and Kurt J. Hebel in 1989 as a spinoff of the CERL Sound Group at the Computer-based Education Research Laboratory of the University of Illinois at Urbana–Champaign. Originally named Kymatics, the company was incorporated as Symbolic Sound Corporation in March 1990. Symbolic Sound's products are used in sound design for music, film, advertising, television, speech and hearing research, computer games, and other virtual environments. The company is based in Bozeman, Montana.

Sound and music computing (SMC) is a research field that studies the whole sound and music communication chain from a multidisciplinary point of view. By combining scientific, technological and artistic methodologies it aims at understanding, modeling and generating sound and music through computational approaches.

The International Society for Music Information Retrieval (ISMIR) is an international forum for research on the organization of music-related data. It started as an informal group steered by an ad hoc committee, which in 2000 established a yearly symposium; hence the name "ISMIR", which originally stood for International Symposium on Music Information Retrieval. It became a conference in 2002 while retaining the acronym. ISMIR was incorporated in Canada on July 4, 2008.

WaveNet is a deep neural network for generating raw audio. It was created by researchers at the London-based AI firm DeepMind. The technique, outlined in a paper in September 2016, is able to generate relatively realistic-sounding human-like voices by directly modelling waveforms using a neural network method trained with recordings of real speech. Tests with US English and Mandarin reportedly showed that the system outperforms Google's best existing text-to-speech (TTS) systems, although as of 2016 its text-to-speech synthesis was still less convincing than actual human speech. WaveNet's ability to generate raw waveforms means that it can model any kind of audio, including music.
