Additive synthesis is a sound synthesis technique that creates timbre by adding sine waves together.
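
As a minimal sketch of the idea, the following Python function sums harmonically related sine waves. The function name `additive_synth`, the sample rate, and the 1/k amplitude pattern are illustrative assumptions, not part of any particular synthesizer.

```python
import math

def additive_synth(f0, amplitudes, sample_rate=8000, duration=0.01):
    """Sum sine waves at integer multiples of f0.

    amplitudes[k] scales harmonic number k + 1 (hypothetical convention).
    """
    n_samples = round(sample_rate * duration)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        value = sum(a * math.sin(2 * math.pi * (k + 1) * f0 * t)
                    for k, a in enumerate(amplitudes))
        samples.append(value)
    return samples

# Four harmonics with amplitudes 1/k give a rough sawtooth-like timbre.
tone = additive_synth(f0=200.0, amplitudes=[1.0, 0.5, 1 / 3, 0.25])
```

Changing the amplitude list changes the timbre while the pitch, set by `f0`, stays the same.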

In speech science and phonetics, a formant is the broad spectral maximum that results from an acoustic resonance of the human vocal tract. In acoustics, a formant is usually defined as a broad peak, or local maximum, in the spectrum. For harmonic sounds, with this definition, the formant frequency is sometimes taken as that of the harmonic that is most augmented by a resonance. The difference between these two definitions resides in whether "formants" characterise the production mechanisms of a sound or the produced sound itself. In practice, the frequency of a spectral peak differs slightly from the associated resonance frequency, except when, by luck, harmonics are aligned with the resonance frequency.
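
The gap between a resonance frequency and the spectral peak of a harmonic sound can be illustrated with a small Python sketch. The symmetric gain curve, the 80 Hz bandwidth, and the helper names are assumptions chosen for illustration; a real vocal-tract resonance has a different shape.

```python
import math

def resonance_gain(f, f_res, bandwidth):
    """Simplified symmetric resonance curve (an assumption), peaking at f_res."""
    return 1.0 / math.sqrt(1.0 + ((f - f_res) / (bandwidth / 2.0)) ** 2)

def peak_harmonic(f0, f_res, bandwidth=80.0, n_harmonics=40):
    """Return the harmonic of f0 that the resonance boosts the most."""
    harmonics = [k * f0 for k in range(1, n_harmonics + 1)]
    return max(harmonics, key=lambda f: resonance_gain(f, f_res, bandwidth))

# Resonance at 500 Hz, fundamental at 120 Hz: the strongest harmonic is 480 Hz.
misaligned = peak_harmonic(f0=120.0, f_res=500.0)
# Fundamental at 125 Hz: the fourth harmonic lands exactly on the resonance.
aligned = peak_harmonic(f0=125.0, f_res=500.0)
```

In the first case the spectral peak sits 20 Hz below the resonance; only in the second case do the two definitions give the same frequency.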

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model.
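
One common way to estimate the coefficients of a linear predictive model is the autocorrelation method with the Levinson-Durbin recursion. The sketch below is a plain-Python illustration under that assumption; the function names are hypothetical, and practical speech coders add windowing, pre-emphasis, and quantization that are omitted here.

```python
def autocorrelation(x, max_lag):
    """Autocorrelation of x for lags 0..max_lag."""
    return [sum(x[n] * x[n - lag] for n in range(lag, len(x)))
            for lag in range(max_lag + 1)]

def lpc(x, order):
    """Levinson-Durbin recursion.

    Returns (coeffs, err) where x[n] is predicted as
    sum(coeffs[k - 1] * x[n - k] for k in 1..order) and err is the
    residual prediction error energy.
    """
    r = autocorrelation(x, order)
    a = [0.0] * (order + 1)   # a[0] is unused
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err          # reflection coefficient
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1.0 - k * k)
    return a[1:], err

# A decaying exponential obeys x[n] = 0.9 * x[n - 1], so the first
# coefficient should come out close to 0.9 and the second close to 0.
signal = [0.9 ** n for n in range(200)]
coeffs, residual = lpc(signal, order=2)
```

The handful of coefficients, rather than the samples themselves, is what gives the compressed description of the spectral envelope.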

Physical modelling synthesis refers to sound synthesis methods in which the waveform of the sound to be generated is computed using a mathematical model, a set of equations and algorithms to simulate a physical source of sound, usually a musical instrument.

Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech computer or speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition.

Digital music technology encompasses the use of digital instruments, computers, electronic effects units, software, or digital audio equipment by a performer, composer, sound engineer, DJ, or record producer to produce, perform or record music. The term refers to electronic devices, instruments, computer hardware, and software used in performance, playback, recording, composition, mixing, analysis, and editing of music.

A software synthesizer or softsynth is a computer program that generates digital audio, usually for music. Computer software that can create sounds or music is not new, but advances in processing speed now allow softsynths to accomplish the same tasks that previously required the dedicated hardware of a conventional synthesizer. Softsynths may be readily interfaced with other music software such as music sequencers, typically in the context of a digital audio workstation. Softsynths are usually less expensive and can be more portable than dedicated hardware.

Wavetable synthesis is a sound synthesis technique used to create periodic waveforms. Often used in the production of musical tones or notes, it was first written about by Hal Chamberlin in Byte's September 1977 issue. Wolfgang Palm of Palm Products GmbH (PPG) developed it in the late 1970s and published it in 1979. The technique has since been used as the primary synthesis method in synthesizers built by PPG and Waldorf Music and as an auxiliary synthesis method by Ensoniq and Access. It is currently used in hardware synthesizers from Waldorf Music and in software-based synthesizers for PCs and tablets, including apps offered by PPG and Waldorf, among others.
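
A periodic waveform can be produced by storing one cycle in a table and reading it back at a rate set by the desired pitch. The sketch below shows a single-table oscillator with linear interpolation; the table size and function names are assumptions, and it leaves out the table-to-table morphing found in commercial wavetable instruments.

```python
import math

def make_sine_table(size=256):
    """One cycle of a sine wave; any single-cycle waveform could be stored instead."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]

def wavetable_oscillator(table, frequency, sample_rate, n_samples):
    """Read the table with a phase accumulator and linear interpolation."""
    size = len(table)
    step = frequency * size / sample_rate  # table positions advanced per sample
    phase = 0.0
    out = []
    for _ in range(n_samples):
        i = int(phase)
        frac = phase - i
        nxt = table[(i + 1) % size]        # wrap around at the end of the cycle
        out.append(table[i] + frac * (nxt - table[i]))
        phase = (phase + step) % size
    return out

table = make_sine_table()
samples = wavetable_oscillator(table, frequency=440.0, sample_rate=44100, n_samples=100)
```

Only `step` depends on the pitch, so the same stored cycle can be played at any frequency without recomputing the waveform.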

Digital waveguide synthesis is the synthesis of audio using a digital waveguide. Digital waveguides are efficient computational models for physical media through which acoustic waves propagate. For this reason, digital waveguides constitute a major part of most modern physical modeling synthesizers.
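
The Karplus-Strong plucked-string algorithm is often described as a simplified relative of a digital waveguide string model, and it makes a compact illustration: a delay line stands in for the travelling wave and a small averaging filter stands in for losses. The sketch below uses that swapped-in technique rather than a full bidirectional waveguide, and the parameter names and values are assumptions.

```python
import random
from collections import deque

def plucked_string(frequency, sample_rate=8000, n_samples=2000,
                   damping=0.996, seed=0):
    """Karplus-Strong style string: a noise-filled delay line with a lossy loop."""
    delay_len = max(2, round(sample_rate / frequency))
    rng = random.Random(seed)              # seeded so the output is repeatable
    line = deque(rng.uniform(-1.0, 1.0) for _ in range(delay_len))
    out = []
    for _ in range(n_samples):
        first = line.popleft()
        out.append(first)
        # Average two neighbouring samples and attenuate slightly,
        # then feed the result back into the end of the delay line.
        line.append(damping * 0.5 * (first + line[0]))
    return out

string = plucked_string(frequency=200.0)
```

The delay-line length sets the pitch, and each pass around the loop removes a little energy, so the tone decays in the way a plucked string does.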

The IRCAM Signal Processing Workstation (ISPW) was a hardware DSP platform developed by IRCAM and the Ariel Corporation in the late 1980s. In French, the ISPW is referred to as the SIM. Eric Lindemann was the principal designer of the ISPW hardware as well as manager of the overall hardware/software effort.

Carl Gunnar Michael Fant was a leading researcher in speech science in general and speech synthesis in particular who spent most of his career as a professor at the Swedish Royal Institute of Technology (KTH) in Stockholm. He was a first cousin of George Fant, the actor and director.

Signal processing is an electrical engineering subfield that focuses on analysing, modifying, and synthesizing signals such as sound, images, and scientific measurements. For example, given a filter g, an inverse filter h is one such that applying g and then h to a signal yields the original signal. Software or electronic inverse filters are often used to compensate for the effect of unwanted environmental filtering of signals.
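
The inverse-filter relationship can be checked numerically with a very small example. In the sketch below, g is a one-tap feedforward filter and h is the matching feedback filter; the coefficient 0.5 and the function names are illustrative assumptions.

```python
def apply_g(x, c=0.5):
    """Filter g: y[n] = x[n] - c * x[n - 1]."""
    y = []
    prev = 0.0
    for sample in x:
        y.append(sample - c * prev)
        prev = sample
    return y

def apply_h(y, c=0.5):
    """Inverse filter h: z[n] = y[n] + c * z[n - 1], which undoes g."""
    z = []
    prev = 0.0
    for sample in y:
        prev = sample + c * prev
        z.append(prev)
    return z

original = [1.0, 2.0, -1.0, 0.5]
filtered = apply_g(original)
recovered = apply_h(filtered)
```

Applying `apply_g` and then `apply_h` returns the starting sequence, which is the defining property of an inverse filter.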

Kenneth Noble Stevens was the Clarence J. LeBel Professor of Electrical Engineering and Computer Science, and Professor of Health Sciences and Technology at the Research Laboratory of Electronics at MIT. Stevens was head of the Speech Communication Group in MIT's Research Laboratory of Electronics (RLE), and was one of the world's leading scientists in acoustic phonetics.

Haskins Laboratories, Inc. is an independent 501(c) non-profit corporation, founded in 1935 and located in New Haven, Connecticut, since 1970. Upon moving to New Haven, Haskins entered into formal affiliation agreements with both Yale University and the University of Connecticut; it remains fully independent, administratively and financially, of both Yale and UConn. Haskins is a multidisciplinary and international community of researchers that conducts basic research on spoken and written language. A guiding perspective of their research is to view speech and language as emerging from biological processes, including those of adaptation, response to stimuli, and conspecific interaction. The Laboratories has a long history of technological and theoretical innovation, from creating systems of rules for speech synthesis and developing an early working prototype of a reading machine for the blind to developing the landmark concept of phonemic awareness as the critical preparation for learning to read an alphabetic writing system.

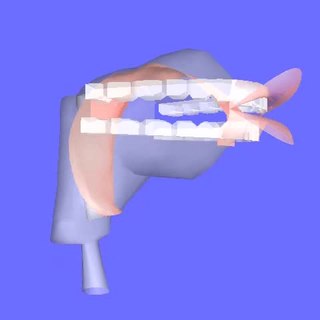

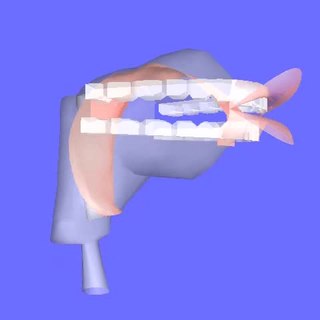

Articulatory synthesis refers to computational techniques for synthesizing speech based on models of the human vocal tract and the articulation processes occurring there. The shape of the vocal tract can be controlled in a number of ways, which usually involve modifying the position of the speech articulators, such as the tongue, jaw, and lips. Speech is created by digitally simulating the flow of air through the representation of the vocal tract.

Louis M. Goldstein is an American linguist and cognitive scientist. He was previously a professor and chair of the Department of Linguistics and a professor of psychology at Yale University and is now a professor in the Department of Linguistics at the University of Southern California. He is a senior scientist at Haskins Laboratories in New Haven, Connecticut, and a founding member of the Association for Laboratory Phonology.

The Yamaha FS1R is a sound synthesizer module, manufactured by the Yamaha Corporation from 1998 to 2000. Based on formant synthesis, it also has FM synthesis capabilities similar to the DX range. Its editing involves 2,000+ parameters in any one 'performance', prompting the creation of a number of third-party freeware programming applications. These applications provide the tools needed to program the synth, which were missing when it was in production by Yamaha. The synth was discontinued after two years, probably in part due to its complexity, poor front-panel controls, brief manual and limited polyphony.

Cantor was a vocal singing synthesizer software released four months after the original release of Vocaloid by the company VirSyn, and was based on the same idea of synthesizing the human voice. VirSyn released English and German versions of this software. Cantor 2 boasted a variety of voices from near-realistic sounding ones to highly expressive vocals and robotic voices.

The Music Kit was a software package for the NeXT Computer system. First developed by David A. Jaffe and Julius O. Smith, it supported the Motorola 56001 DSP that was included on the NeXT Computer's motherboard. It was also the first architecture to unify the Music-N and MIDI paradigms. Thus it combined the generality of the former with the interactivity and performance capabilities of the latter. The Music Kit was integrated with the Sound Kit.

Raimo Olavi Toivonen is a Finnish developer of speech analysis, speech synthesis, speech technology, psychoacoustics and digital signal processing.