Related Research Articles

Knowledge representation and reasoning is the field of artificial intelligence (AI) dedicated to representing information about the world in a form that a computer system can use to solve complex tasks such as diagnosing a medical condition or having a dialog in a natural language. Knowledge representation incorporates findings from psychology about how humans solve problems and represent knowledge, in order to design formalisms that will make complex systems easier to design and build. Knowledge representation and reasoning also incorporates findings from logic to automate various kinds of reasoning.

Natural language processing (NLP) is an interdisciplinary subfield of computer science and artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Typically data is collected in text corpora, using either rule-based, statistical or neural-based approaches in machine learning and deep learning.

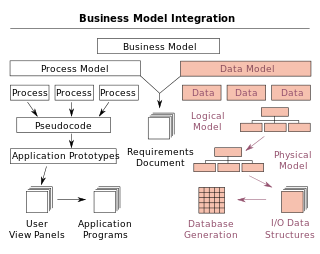

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner.

Natural language understanding (NLU) or natural language interpretation (NLI) is a subset of natural language processing in artificial intelligence that deals with machine reading comprehension. NLU has been considered an AI-hard problem.

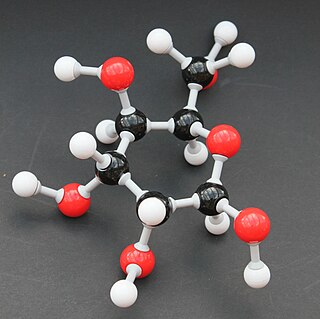

A model is an informative representation of an object, person or system. The term originally denoted the plans of a building in late 16th-century English, and derived via French and Italian ultimately from Latin modulus, a measure.

Natural language generation (NLG) is a software process that produces natural language output. A widely-cited survey of NLG methods describes NLG as "the subfield of artificial intelligence and computational linguistics that is concerned with the construction of computer systems that can produce understandable texts in English or other human languages from some underlying non-linguistic representation of information".

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

An image schema is a recurring structure within our cognitive processes which establishes patterns of understanding and reasoning. As an understudy to embodied cognition, image schemas are formed from our bodily interactions, from linguistic experience, and from historical context. The term is introduced in Mark Johnson's book The Body in the Mind; in case study 2 of George Lakoff's Women, Fire and Dangerous Things: and further explained by Todd Oakley in The Oxford handbook of cognitive linguistics; by Rudolf Arnheim in Visual Thinking; by the collection From Perception to Meaning: Image Schemas in Cognitive Linguistics edited by Beate Hampe and Joseph E. Grady.

The language of thought hypothesis (LOTH), sometimes known as thought ordered mental expression (TOME), is a view in linguistics, philosophy of mind and cognitive science, forwarded by American philosopher Jerry Fodor. It describes the nature of thought as possessing "language-like" or compositional structure. On this view, simple concepts combine in systematic ways to build thoughts. In its most basic form, the theory states that thought, like language, has syntax.

Roger Carl Schank was an American artificial intelligence theorist, cognitive psychologist, learning scientist, educational reformer, and entrepreneur. Beginning in the late 1960s, he pioneered conceptual dependency theory and case-based reasoning, both of which challenged cognitivist views of memory and reasoning. He began his career teaching at Yale University and Stanford University. In 1989, Schank was granted $30 million in a ten-year commitment to his research and development by Andersen Consulting, through which he founded the Institute for the Learning Sciences (ILS) at Northwestern University in Chicago.

Object process methodology (OPM) is a conceptual modeling language and methodology for capturing knowledge and designing systems, specified as ISO/PAS 19450. Based on a minimal universal ontology of stateful objects and processes that transform them, OPM can be used to formally specify the function, structure, and behavior of artificial and natural systems in a large variety of domains.

The term conceptual model refers to any model that is formed after a conceptualization or generalization process. Conceptual models are often abstractions of things in the real world, whether physical or social. Semantic studies are relevant to various stages of concept formation. Semantics is fundamentally a study of concepts, the meaning that thinking beings give to various elements of their experience.

A modeling perspective in information systems is a particular way to represent pre-selected aspects of a system. Any perspective has a different focus, conceptualization, dedication and visualization of what the model is representing.

Interlingual machine translation is one of the classic approaches to machine translation. In this approach, the source language, i.e. the text to be translated is transformed into an interlingua, i.e., an abstract language-independent representation. The target language is then generated from the interlingua. Within the rule-based machine translation paradigm, the interlingual approach is an alternative to the direct approach and the transfer approach.

Frames are an artificial intelligence data structure used to divide knowledge into substructures by representing "stereotyped situations". They were proposed by Marvin Minsky in his 1974 article "A Framework for Representing Knowledge". Frames are the primary data structure used in artificial intelligence frame languages; they are stored as ontologies of sets.

Computational creativity is a multidisciplinary endeavour that is located at the intersection of the fields of artificial intelligence, cognitive psychology, philosophy, and the arts.

Script theory is a psychological theory which posits that human behaviour largely falls into patterns called "scripts" because they function the way a written script does, by providing a program for action. Silvan Tomkins created script theory as a further development of his affect theory, which regards human beings' emotional responses to stimuli as falling into categories called "affects": he noticed that the purely biological response of affect may be followed by awareness and by what we cognitively do in terms of acting on that affect so that more was needed to produce a complete explanation of what he called "human being theory".

The history of natural language processing describes the advances of natural language processing. There is some overlap with the history of machine translation, the history of speech recognition, and the history of artificial intelligence.

The following outline is provided as an overview of and topical guide to natural-language processing:

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

References

- ↑ Roger Schank, 1969, A conceptual dependency parser for natural language Proceedings of the 1969 conference on Computational linguistics, Sång-Säby, Sweden pages 1-3

- ↑ Cardiff University on Conceptual dependency theory

- ↑ Language, mind, and brain by Thomas W. Simon, Robert J. Scholes 1982 ISBN 0-89859-153-8 page 105