In statistics, the concept of a concomitant, also called the induced order statistic, arises when one sorts the members of a random sample according to corresponding values of another random sample.

Statistics is a branch of mathematics dealing with data collection, organization, analysis, interpretation and presentation. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects, such as "all people living in a country" or "every atom composing a crystal". Statistics deals with all aspects of data, including the planning of data collection in terms of the design of surveys and experiments.

Let $(X_i, Y_i)$, $i = 1, \ldots, n$, be a random sample from a bivariate distribution. If the sample is ordered by the $X_i$, then the $Y$-variate associated with $X_{r:n}$ is denoted by $Y_{[r:n]}$ and termed the concomitant of the $r$th order statistic.
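The definition can be illustrated with a minimal sketch in Python: sort the pairs by their X-value and read off the Y-values in that order. The function name `concomitants` and the sample data are hypothetical, chosen only for illustration, and ties among the X-values are not treated specially.

```python
def concomitants(xs, ys):
    """Return the Y-values reordered so that position r-1 holds Y_[r:n],
    the Y paired with the r-th smallest X."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    # Sort the pairs by the X-coordinate only; the Y-values are carried along.
    ordered_pairs = sorted(zip(xs, ys), key=lambda pair: pair[0])
    return [y for _, y in ordered_pairs]


# Hypothetical sample of n = 3 pairs.
xs = [3.1, 1.2, 2.5]
ys = [10, 20, 30]
print(concomitants(xs, ys))  # [20, 30, 10]
```

Here the smallest X-value is 1.2, so the concomitant of the first order statistic is 20, the Y-value it was paired with.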

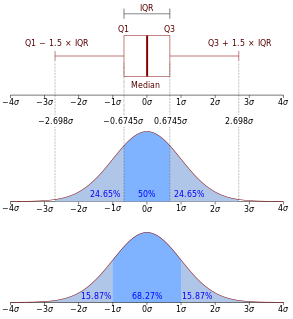

In statistics, the kth order statistic of a statistical sample is equal to its kth-smallest value. Together with rank statistics, order statistics are among the most fundamental tools in non-parametric statistics and inference.
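As a small illustration of the kth order statistic, the sketch below sorts the sample and picks the kth-smallest value, using 1-based k to match the notation in the text. The function name `order_statistic` is a hypothetical helper, not a library call.

```python
def order_statistic(sample, k):
    """Return the k-th smallest value of the sample (k is 1-based)."""
    if not 1 <= k <= len(sample):
        raise ValueError("k must satisfy 1 <= k <= len(sample)")
    return sorted(sample)[k - 1]


sample = [7, 2, 9, 4]
print(order_statistic(sample, 1))  # 2, the sample minimum
print(order_statistic(sample, 4))  # 9, the sample maximum
```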

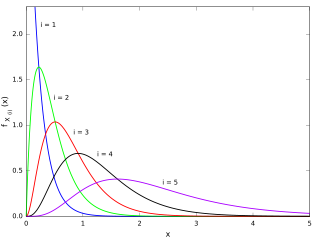

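Suppose the parent bivariate distribution has cumulative distribution function $F(x,y)$ and probability density function $f(x,y)$. Then the probability density function of the $r$th concomitant $Y_{[r:n]}$, for $1 \le r \le n$, is

$$f_{Y_{[r:n]}}(y) = \int_{-\infty}^{\infty} f_{Y \mid X}(y \mid x)\, f_{X_{r:n}}(x)\, \mathrm{d}x,$$

where $f_{Y \mid X}$ is the conditional density of $Y$ given $X$ and $f_{X_{r:n}}$ is the density of the $r$th order statistic of the $X_i$.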
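The density of the $r$th concomitant is a mixture of the conditional density $f_{Y \mid X}(y \mid x)$ weighted by the density of the $r$th order statistic of the $X_i$, that is, $\int f_{Y \mid X}(y \mid x)\, f_{X_{r:n}}(x)\, \mathrm{d}x$. The following sketch evaluates that integral numerically under an assumed model that is not part of the text above: $X \sim \mathrm{Uniform}(0,1)$ and $Y \mid X = x \sim \mathrm{Normal}(x, 1)$. All function names are hypothetical. Under this model the mean of $Y_{[r:n]}$ should equal $E[X_{r:n}] = r/(n+1)$, which gives a simple sanity check.

```python
import math


def order_stat_pdf_uniform(x, r, n):
    """Density of the r-th order statistic of n i.i.d. Uniform(0, 1) draws,
    which is the Beta(r, n - r + 1) density."""
    if not 0.0 < x < 1.0:
        return 0.0
    coeff = math.factorial(n) / (math.factorial(r - 1) * math.factorial(n - r))
    return coeff * x ** (r - 1) * (1.0 - x) ** (n - r)


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def concomitant_pdf(y, r, n, steps=200):
    """Midpoint-rule approximation of the integral over x in (0, 1) of
    f_{Y|X}(y | x) * f_{X_{r:n}}(x) under the assumed model."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        total += normal_pdf(y - x) * order_stat_pdf_uniform(x, r, n)
    return total * h


def summarize(r, n, lo=-8.0, hi=9.0, steps=400):
    """Return (total mass, mean) of the concomitant density,
    integrating over y with the midpoint rule."""
    h = (hi - lo) / steps
    mass = 0.0
    mean = 0.0
    for j in range(steps):
        y = lo + (j + 0.5) * h
        d = concomitant_pdf(y, r, n)
        mass += d * h
        mean += y * d * h
    return mass, mean


mass, mean = summarize(r=2, n=5)
print(mass)  # close to 1
print(mean)  # close to 2 / 6
```

The total mass being close to 1 confirms that the mixture is a proper density, and the mean being close to $r/(n+1)$ matches the expectation of the corresponding order statistic of $X$ in this particular model.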

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable $X$, or just distribution function of $X$, evaluated at $x$, is the probability that $X$ will take a value less than or equal to $x$.

In probability theory, a probability density function (PDF), or density of a continuous random variable, is a function whose value at any given sample in the sample space can be interpreted as providing a relative likelihood that the value of the random variable would equal that sample. In other words, while the absolute likelihood for a continuous random variable to take on any particular value is 0, the value of the PDF at two different samples can be used to infer, in any particular draw of the random variable, how much more likely it is that the random variable would equal one sample compared to the other sample.

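If all $(X_i, Y_i)$ are assumed to be i.i.d., then for $1 \le r_1 < \cdots < r_k \le n$, the joint density of $\left(Y_{[r_1:n]}, \ldots, Y_{[r_k:n]}\right)$ is given by

$$f_{Y_{[r_1:n]}, \ldots, Y_{[r_k:n]}}(y_1, \ldots, y_k) = \int_{-\infty}^{\infty} \int_{-\infty}^{x_k} \cdots \int_{-\infty}^{x_2} \prod_{i=1}^{k} f_{Y \mid X}(y_i \mid x_i)\, f_{X_{r_1:n}, \ldots, X_{r_k:n}}(x_1, \ldots, x_k)\, \mathrm{d}x_1 \cdots \mathrm{d}x_k.$$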

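That is, in general, the joint concomitants of order statistics $\left(Y_{[r_1:n]}, \ldots, Y_{[r_k:n]}\right)$ are dependent, but they are conditionally independent given $X_{r_1:n} = x_1, \ldots, X_{r_k:n} = x_k$ for all $k$, where $x_1 \le \cdots \le x_k$. The conditional distribution of the joint concomitants can be derived from the above result by comparing it with the formula for the marginal distribution, and hence

$$f_{Y_{[r_1:n]}, \ldots, Y_{[r_k:n]} \mid X_{r_1:n}, \ldots, X_{r_k:n}}(y_1, \ldots, y_k \mid x_1, \ldots, x_k) = \prod_{i=1}^{k} f_{Y \mid X}(y_i \mid x_i).$$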
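Conditional independence suggests two equivalent ways to simulate concomitants: draw the pairs and sort them by X, or first draw the ordered X-values and then draw each Y independently from the conditional distribution given its X. The Monte Carlo sketch below compares the two under an assumed model that is not taken from the text: $X \sim \mathrm{Uniform}(0,1)$ and $Y \mid X = x \sim \mathrm{Normal}(x, 1)$. The function names, seeds, and trial counts are illustrative choices.

```python
import random


def sample_by_sorting(n, rng):
    """Draw n pairs (X, Y), sort them by X, and return the Y's in that order."""
    pairs = []
    for _ in range(n):
        x = rng.random()        # X ~ Uniform(0, 1) (assumed model)
        y = rng.gauss(x, 1.0)   # Y | X = x ~ Normal(x, 1) (assumed model)
        pairs.append((x, y))
    pairs.sort(key=lambda pair: pair[0])
    return [y for _, y in pairs]


def sample_by_conditioning(n, rng):
    """Draw the ordered X-values first, then draw each concomitant
    independently from the conditional distribution of Y given X."""
    xs = sorted(rng.random() for _ in range(n))
    return [rng.gauss(x, 1.0) for x in xs]


def mean_of_component(sampler, index, n, trials, seed):
    """Monte Carlo estimate of the mean of one concomitant (0-based index)."""
    rng = random.Random(seed)
    return sum(sampler(n, rng)[index] for _ in range(trials)) / trials


n = 5
mean_sorted = mean_of_component(sample_by_sorting, n - 1, n, 20000, seed=1)
mean_conditional = mean_of_component(sample_by_conditioning, n - 1, n, 20000, seed=2)
# Under the assumed model both estimates should be near n / (n + 1) = 5/6.
print(mean_sorted, mean_conditional)
```

Both samplers target the same joint distribution of the concomitants, so their estimates of the mean of $Y_{[n:n]}$ should agree up to Monte Carlo error.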

In probability theory and statistics, the marginal distribution of a subset of a collection of random variables is the probability distribution of the variables contained in the subset. It gives the probabilities of various values of the variables in the subset without reference to the values of the other variables. This contrasts with a conditional distribution, which gives the probabilities contingent upon the values of the other variables.