Tests for constituents in English

Tests for constituents are diagnostics used to identify sentence structure. Numerous such tests are commonly used to identify the constituents of English sentences. Fifteen of the most commonly used tests are the following: 1) coordination (conjunction), 2) pro-form substitution (replacement), 3) topicalization (fronting), 4) do-so-substitution, 5) one-substitution, 6) answer ellipsis (question test), 7) clefting, 8) VP-ellipsis, 9) pseudoclefting, 10) passivization, 11) omission (deletion), 12) intrusion, 13) wh-fronting, 14) general substitution, 15) right node raising (RNR).

The order in which these 15 tests are listed here corresponds to their frequency of use, coordination being the most frequently used of the 15 tests and RNR the least frequently used. A general word of caution is warranted when employing these tests, since they often deliver contradictory results. The tests are merely rough-and-ready tools that grammarians employ to reveal clues about syntactic structure. Some syntacticians even arrange the tests on a scale of reliability, treating the less reliable tests as useful for confirming constituency but not sufficient on their own. Failing a single test does not mean that the test string is not a constituent, and conversely, passing a single test does not necessarily mean that the test string is a constituent. It is therefore best to apply as many tests as possible to a given string in order to support or rule out its status as a constituent.

The 15 tests are introduced, discussed, and illustrated below, relying mainly on the same sentence: [2]

- Drunks could put off the customers.

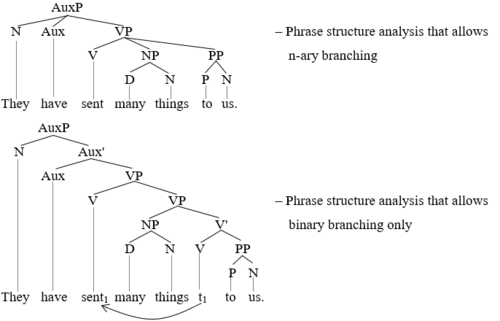

By restricting the introduction and discussion of the tests for constituents below mainly to this one sentence, it becomes possible to compare the results of the tests. To aid the discussion and illustrations of the constituent structure of this sentence, the following two sentence diagrams are employed (D = determiner, N = noun, NP = noun phrase, Pa = particle, S = sentence, V = Verb, VP = verb phrase):

These diagrams show two potential analyses of the constituent structure of the sentence. Each node in a tree diagram marks a constituent; that is, a constituent corresponds to a given node together with everything that the node exhaustively dominates. Hence the first tree, which shows the constituent structure according to dependency grammar, marks the following words and word combinations as constituents: Drunks, off, the, the customers, and put off the customers. [3] The second tree, which shows the constituent structure according to phrase structure grammar, marks the following words and word combinations as constituents: Drunks, could, put, off, the, customers, the customers, put off the customers, and could put off the customers. The analyses in these two tree diagrams provide orientation for the discussion of tests for constituents that follows.
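The definition of a constituent as a node plus everything it exhaustively dominates is mechanical enough to sketch in code. The following minimal Python sketch encodes both trees as nested nodes and reads off each node's yield. The tree shapes are an assumption reconstructed from the constituent lists given above (the diagrams themselves are images), and the names `Node`, `yield_of`, and `constituents` are hypothetical. Word positions let one function serve both trees: in the dependency tree every node contributes a word, whereas in the phrase structure tree only the leaves do.

```python
from __future__ import annotations

from dataclasses import dataclass, field

WORDS = ["Drunks", "could", "put", "off", "the", "customers"]


@dataclass
class Node:
    label: str
    index: int | None = None  # position in WORDS of the word this node contributes, if any
    children: list[Node] = field(default_factory=list)


def yield_of(node: Node) -> set[int]:
    """Positions of all words that `node` exhaustively dominates (its own word included)."""
    positions = set() if node.index is None else {node.index}
    for child in node.children:
        positions |= yield_of(child)
    return positions


def constituents(root: Node, words: list[str]) -> set[str]:
    """One string per node; returned as a set, so nodes with identical yields appear once."""
    found = set()
    stack = [root]
    while stack:
        node = stack.pop()
        found.add(" ".join(words[i] for i in sorted(yield_of(node))))
        stack.extend(node.children)
    return found


# Dependency tree (assumed shape): could is the root and every node is a word.
dependency = Node("V", 1, [
    Node("N", 0),
    Node("V", 2, [Node("Pa", 3), Node("N", 5, [Node("D", 4)])]),
])

# Phrase structure tree (assumed shape): only the leaves carry words.
phrase_structure = Node("S", children=[
    Node("N", 0),
    Node("VP", children=[
        Node("V", 1),
        Node("VP", children=[
            Node("V", 2),
            Node("Pa", 3),
            Node("NP", children=[Node("D", 4), Node("N", 5)]),
        ]),
    ]),
])

print(sorted(constituents(dependency, WORDS)))
print(sorted(constituents(phrase_structure, WORDS)))
```

Both sets also contain the whole sentence, which the lists above leave out as trivially a constituent. Neither set contains a string such as could put, which neither analysis treats as a constituent.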

Coordination

The coordination test assumes that only constituents can be coordinated, i.e., joined by means of a coordinator such as and, or, or but. [4] The next examples demonstrate that coordination identifies individual words as constituents:

- Drunks could put off the customers.

- (a) [Drunks] and [bums] could put off the customers.

- (b) Drunks [could] and [would] put off the customers.

- (c) Drunks could [put off] and [drive away] the customers.

- (d) Drunks could put off the [customers] and [neighbors].

The square brackets mark the conjuncts of the coordinate structures. Based on these data, one might assume that drunks, could, put off, and customers are constituents in the test sentence because these strings can be coordinated with bums, would, drive away, and neighbors, respectively. Coordination also identifies multi-word strings as constituents:

- (e) Drunks could put off [the customers] and [the neighbors].

- (f) Drunks could [put off the customers] and [drive away the neighbors].

- (g) Drunks [could put off the customers] and [would drive away the neighbors].

These data suggest that the customers, put off the customers, and could put off the customers are constituents in the test sentence.

Examples such as (a-g) are not controversial insofar as many theories of sentence structure readily view the strings tested in sentences (a-g) as constituents. However, additional data are problematic, since they suggest that certain strings are also constituents even though most theories of syntax do not acknowledge them as such, e.g.

- (h) Drunks [could put off] and [would really annoy] the customers.

- (i) Drunks could [put off these] and [piss off those] customers.

- (j) [Drunks could], and [they probably would], put off the customers.

These data suggest that could put off, put off these, and Drunks could are constituents in the test sentence. Most theories of syntax reject the notion that these strings are constituents, though. Data such as (h-j) are sometimes addressed in terms of the right node raising (RNR) mechanism.

The problem that examples (h-j) pose for the coordination test is compounded when one looks beyond the test sentence: one quickly finds that coordination suggests that a wide range of strings are constituents even though most theories of syntax do not acknowledge them as such, e.g.

- (k) Sam leaves [from home on Tuesday] and [from work on Wednesday].

- (l) Sam leaves [from home on Tuesday on his bicycle] and [from work on Wednesday in his car].

- (m) Sam leaves [from home on Tuesday], and [from work].

The strings from home on Tuesday and from home on Tuesday on his bicycle are not viewed as constituents in most theories of syntax, and in sentence (m) it is difficult even to discern how the conjuncts of the coordinate structure should be delimited. The coordinate structures in (k-l) are sometimes characterized in terms of non-constituent conjuncts (NCC), and the instance of coordination in sentence (m) is sometimes discussed in terms of stripping and/or gapping.

Because of the difficulties illustrated by examples (h-m), many grammarians are skeptical of the value of coordination as a test for constituents. The discussion of the other tests for constituents below reveals that this skepticism is warranted, since coordination identifies many more strings as constituents than the other tests do. [5]

Proform substitution (replacement)

Proform substitution, or replacement, involves replacing the test string with the appropriate proform (e.g. pronoun, pro-verb, pro-adjective, etc.). Substitution normally involves using a definite proform like it, he, there, here, etc. in place of a phrase or a clause. If such a change yields a grammatical sentence where the general structure has not been altered, then the test string is likely a constituent: [6]

- Drunks could put off the customers.

- (a) They could put off the customers. (They = Drunks)

- (b) Drunks could put them off. (them = the customers; note that shifting of them and off has occurred here.)

- (c) Drunks could do it. (do it = put off the customers)

These examples suggest that Drunks, the customers, and put off the customers in the test sentence are constituents. An important aspect of the proform test is the fact that it fails to identify most subphrasal strings as constituents, e.g.

- (d) *Drunks do so/it put off the customers. (do so/it = could)

- (e) *Drunks could do so/it off the customers. (do so/it = put)

- (f) *Drunks could put so/it the customers. (so/it = off)

- (g) *Drunks could put off the them. (them = customers)

These examples suggest that the individual words could, put, off, and customers should not be viewed as constituents. This suggestion is of course controversial, since most theories of syntax assume that individual words are constituents by default. The conclusion one can reach based on such examples, however, is that proform substitution using a definite proform identifies phrasal constituents only; it fails to identify sub-phrasal strings as constituents.

Topicalization (fronting)

Topicalization involves moving the test string to the front of the sentence. It is a simple movement operation. [7] Many instances of topicalization seem only marginally acceptable when taken out of context. Hence, to suggest a context, each instance of topicalization below is preceded by "...and", and a modal adverb (e.g. certainly) is added as well:

- Drunks could put off the customers.

- (a) ...and the customers, drunks certainly could put off.

- (b) ...and put off the customers, drunks certainly could.

These examples suggest that the customers and put off the customers are constituents in the test sentence. Topicalization is like many of the other tests in that it identifies phrasal constituents only. When the test string is sub-phrasal, topicalization fails:

- (c) *...and customers, drunks certainly could put off the.

- (d) *...and could, drunks certainly put off the customers.

- (e) *...and put, drunks certainly could off the customers.

- (f) *...and off, drunks certainly could put the customers.

- (g) *...and the, drunks certainly could put off customers.

These examples demonstrate that customers, could, put, off, and the fail the topicalization test. Since these strings are all sub-phrasal, one can conclude that topicalization is unable to identify sub-phrasal strings as constituents.

Do-so-substitution

Do-so-substitution is a test that substitutes a form of do so (does so, did so, done so, doing so) into the test sentence for the target string. This test is widely used to probe the structure of strings containing verbs (because do is a verb). [8] The test is limited, though, precisely because it applies only to strings containing verbs:

- Drunks could put off the customers.

- (a) Drunks could do so. (do so = put off the customers)

- (b) Drunks do so. (do so ≠ could put off the customers)

Example (a) suggests that put off the customers is a constituent in the test sentence, whereas example (b) fails to suggest that could put off the customers is a constituent, for do so cannot include the meaning of the modal verb could. To illustrate more completely how the do so test is employed, another test sentence is now used, one that contains two post-verbal adjunct phrases:

- We met them in the pub because we had time.

- (c) We did so in the pub because we had time. (did so = met them)

- (d) We did so because we had time. (did so = met them in the pub)

- (e) We did so. (did so = met them in the pub because we had time)

These data suggest that met them, met them in the pub, and met them in the pub because we had time are constituents in the test sentence. Taken together, such examples seem to motivate a structure for the test sentence that has a left-branching verb phrase, because only a left-branching verb phrase can view each of the indicated strings as a constituent. There is a problem with this sort of reasoning, however, as the next example illustrates:

- (f) We did so in the pub. (did so = met them because we had time)

In this case, did so appears to stand in for the discontinuous word combination consisting of met them and because we had time. Such a discontinuous combination of words cannot be construed as a constituent. That such an interpretation of did so is indeed possible is seen in a fuller sentence such as You met them in the cafe because you had time, and we did so in the pub. In this case, the preferred reading of did so is that it indeed simultaneously stands in for both met them and because we had time.
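The reasoning about the left-branching verb phrase rests on a general property of trees without crossing branches: a set of continuous strings can all be constituents of a single tree only if every pair of them is either nested or disjoint. The following minimal Python sketch represents strings as half-open word-index spans and checks this condition for the strings replaced by did so in (c)-(e); it also shows that the reading of (f) does not correspond to any continuous span at all. The helper names `span_of`, `compatible`, and `one_tree_possible` are hypothetical.

```python
from __future__ import annotations

from itertools import combinations

SENTENCE = "We met them in the pub because we had time".split()


def span_of(phrase: str, words: list[str]) -> tuple[int, int]:
    """Half-open (start, end) span of the first contiguous occurrence of `phrase`."""
    target = phrase.split()
    for start in range(len(words) - len(target) + 1):
        if words[start:start + len(target)] == target:
            return start, start + len(target)
    raise ValueError(f"{phrase!r} is not a contiguous string of the sentence")


def compatible(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """Two spans can both be constituents of one tree iff they are nested or disjoint."""
    nested = (a[0] <= b[0] and b[1] <= a[1]) or (b[0] <= a[0] and a[1] <= b[1])
    disjoint = a[1] <= b[0] or b[1] <= a[0]
    return nested or disjoint


def one_tree_possible(phrases: list[str], words: list[str]) -> bool:
    spans = [span_of(p, words) for p in phrases]
    return all(compatible(a, b) for a, b in combinations(spans, 2))


# Strings replaced by did so in (c)-(e): they are nested, so one left-branching VP can host them all.
print(one_tree_possible(["met them", "met them in the pub",
                         "met them in the pub because we had time"], SENTENCE))  # → True

# Reading (f): did so = met them ... because we had time, which is not one contiguous span.
try:
    span_of("met them because we had time", SENTENCE)
except ValueError as err:
    print(err)
```

The check only tests compatibility with some single tree; whether that tree is the linguistically correct analysis remains a separate question.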

One-substitution

The one-substitution test replaces the test string with the indefinite pronoun one or ones. [9] If the result is acceptable, then the test string is deemed a constituent. Since one is a type of pronoun, one-substitution is only of value when probing the structure of noun phrases. In this regard, the test sentence from above is expanded in order to better illustrate the manner in which one-substitution is generally employed:

- Drunks could put off the loyal customers around here who we rely on.

- (a) Drunks could put off the loyal ones around here who we rely on. (ones = customers)

- (b) Drunks could put off the ones around here who we rely on. (ones = loyal customers)

- (c) Drunks could put off the loyal ones who we rely on. (ones = customers around here)

- (d) Drunks could put off the ones who we rely on. (ones = loyal customers around here)

- (e) Drunks could put off the loyal ones. (ones = customers around here who we rely on)

These examples suggest that customers, loyal customers, customers around here, loyal customers around here, and customers around here who we rely on are constituents in the test sentence. Some have pointed to a problem associated with one-substitution in this area, however: it is impossible to produce a single constituent structure of the noun phrase the loyal customers around here who we rely on that could simultaneously view all of the indicated strings as constituents. [10] Another problem that has been pointed out concerning one-substitution as a test for constituents is that it at times suggests that non-string word combinations are constituents, [11] e.g.

- (f) Drunks could put off the ones around here. (ones = loyal customers who we rely on)

The word combination consisting of both loyal customers and who we rely on is discontinuous in the test sentence, a fact that calls into question the value of one-substitution as a test for constituents more generally.
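The claim that no single structure of the noun phrase can accommodate all five one-substitution results in (a)-(e) can be checked mechanically. The following minimal Python sketch, with hypothetical names `crosses` and `crossing_pairs`, encodes each replaced string as a half-open word span of the noun phrase and lists the pairs of spans that overlap without one containing the other. Any such crossing pair rules out a single tree in which both strings are constituents.

```python
from itertools import combinations

NP = "the loyal customers around here who we rely on".split()

# Strings that one(s) replaced in examples (a)-(e), as half-open word spans of NP.
SPANS = {
    "customers": (2, 3),
    "loyal customers": (1, 3),
    "customers around here": (2, 5),
    "loyal customers around here": (1, 5),
    "customers around here who we rely on": (2, 9),
}


def crosses(a, b):
    """True if spans a and b overlap but neither contains the other."""
    overlap = a[0] < b[1] and b[0] < a[1]
    a_in_b = b[0] <= a[0] and a[1] <= b[1]
    b_in_a = a[0] <= b[0] and b[1] <= a[1]
    return overlap and not (a_in_b or b_in_a)


def crossing_pairs(spans):
    """All pairs of labeled spans that cannot both be constituents of one tree."""
    return [(x, y) for (x, a), (y, b) in combinations(spans.items(), 2) if crosses(a, b)]


# Sanity check: each span really covers the string it is labeled with.
for text, (start, end) in SPANS.items():
    assert " ".join(NP[start:end]) == text

for x, y in crossing_pairs(SPANS):
    print(f"{x!r} crosses {y!r}")
```

In this encoding, loyal customers crosses both customers around here and customers around here who we rely on, and loyal customers around here crosses customers around here who we rely on, so no single bracketing admits all five strings.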

Answer fragments (answer ellipsis, question test, standalone test)

The answer fragment test involves forming a question that contains a single wh-word (e.g. who, what, where, etc.). If the test string can then appear alone as the answer to such a question, then it is likely a constituent in the test sentence: [12]

- Drunks could put off the customers.

- (a) Who could put off the customers? - Drunks.

- (b) Who could drunks put off? - The customers.

- (c) What would drunks do? - Put off the customers.

These examples suggest that Drunks, the customers, and put off the customers are constituents in the test sentence. The answer fragment test is like most of the other tests for constituents in that it does not identify sub-phrasal strings as constituents:

- (d) What about putting off the customers? - *Could.

- (e) What could drunks do about the customers? - *Put.

- (f) *What could drunks do about putting the customers? - *Off.

- (g) *Who could drunks put off the? - *Customers.

These answer fragments are all grammatically unacceptable, suggesting that could, put, off, and customers are not constituents. Note as well that the latter two questions themselves are ungrammatical. It is apparently often impossible to form the question in a way that could successfully elicit the indicated strings as answer fragments. The conclusion, then, is that the answer fragment test is like most of the other tests in that it fails to identify sub-phrasal strings as constituents.

Clefting

Clefting involves placing the test string X within a frame beginning with It is/was: It is/was X that.... [13] The test string appears as the pivot of the cleft sentence:

- Drunks could put off the customers.

- (a) It is drunks that could put off the customers.

- (b) It is the customers that drunks could put off.

- (c) ??It is put off the customers that drunks could do.

These examples suggest that Drunks and the customers are constituents in the test sentence. Example (c) is of dubious acceptability, suggesting that put off the customers may not be a constituent in the test sentence. Clefting is like most of the other tests for constituents in that it fails to identify most individual words as constituents:

- (d) *It is could that drunks put off the customers.

- (e) *It is put that drunks could off the customers.

- (f) *It is off that drunks could put the customers.

- (g) *It is the that drunks could put off customers.

- (h) *It is customers that drunks could put off the.

These examples suggest that none of the individual words could, put, off, the, or customers is a constituent, contrary to what most theories of syntax assume. In this respect, clefting is like many of the other tests for constituents: it succeeds only at identifying certain phrasal strings as constituents.
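Building the cleft frame itself is a purely formal operation; only the acceptability judgment requires a speaker. The following minimal Python sketch (the function name `cleft` is hypothetical) places a chosen span of the test sentence in the pivot of It is X that... and leaves the rest of the sentence in place. Words are stored in lowercase so that the sentence-initial capital does not leak into the pivot or the remainder. Example (c) is not produced by this simple frame, since it additionally requires do at the end of the relative clause.

```python
from __future__ import annotations


def cleft(words: list[str], start: int, end: int) -> str:
    """Build 'It is X that ...' with X = words[start:end] as the pivot."""
    pivot = " ".join(words[start:end])
    remainder = " ".join(words[:start] + words[end:])
    return f"It is {pivot} that {remainder}."


SENTENCE = ["drunks", "could", "put", "off", "the", "customers"]

print(cleft(SENTENCE, 0, 1))  # → It is drunks that could put off the customers.
print(cleft(SENTENCE, 4, 6))  # → It is the customers that drunks could put off.
print(cleft(SENTENCE, 1, 2))  # → It is could that drunks put off the customers.
```

The three calls reproduce examples (a), (b), and (d); the code generates candidates, and deciding which of them are acceptable remains the grammarian's task.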

VP-ellipsis (verb phrase ellipsis)

The VP-ellipsis test checks which strings containing one or more predicative elements (usually verbs) can be elided from a sentence. Strings that can be elided are deemed constituents. [14] The symbol ∅ is used in the following examples to mark the position of ellipsis:

- Beggars could immediately put off the customers when they arrive, and

- (a) *drunks could immediately also ∅ the customers when they arrive. (∅ = put off)

- (b) ?drunks could immediately also ∅ when they arrive. (∅ = put off the customers)

- (c) drunks could also ∅ when they arrive. (∅ = immediately put off the customers)

- (d) drunks could immediately also ∅. (∅ = put off the customers when they arrive)

- (e) drunks could also ∅. (∅ = immediately put off the customers when they arrive)

These examples suggest that put off is not a constituent in the test sentence, but that immediately put off the customers, put off the customers when they arrive, and immediately put off the customers when they arrive are constituents. The marginal acceptability of (b) makes it difficult to draw a conclusion about put off the customers.

There are various difficulties associated with this test. The first is that it can identify too many constituents: in the examples above, it is impossible to produce a single constituent structure that could simultaneously view each of the three acceptable examples (c-e) as having elided a constituent. Another problem is that the test can at times suggest that a discontinuous word combination is a constituent, e.g.:

- (f) Frank will help tomorrow in the office, and Susan will ∅ today. (∅ = help...in the office)

In this case, it appears as though the elided material corresponds to the discontinuous word combination including help and in the office.

Pseudoclefting

Pseudoclefting is similar to clefting in that it puts emphasis on a certain phrase in a sentence. There are two variants of the pseudocleft test. One variant inserts the test string X in a sentence starting with a free relative clause: What.....is/are X; the other variant inserts X at the start of the sentence, followed by is/are and then the free relative clause: X is/are what/who... Only the latter of these two variants is illustrated here. [15]

- Drunks could put off the customers.

- (a) Drunks are who could put off the customers.

- (b) The customers are who drunks could put off.

- (c) Put off the customers is what drunks could do.

These examples suggest that Drunks, the customers, and put off the customers are constituents in the test sentence. Pseudoclefting fails to identify most individual words as constituents:

- (d) *Could is what drunks put off the customers.

- (e) *Put is what drunks could off the customers.

- (f) *Off is what drunks could put the customers.

- (g) *The is who drunks could put off customers.

- (h) *Customers is who drunks could put off the.

The pseudoclefting test is hence like most of the other tests insofar as it identifies phrasal strings as constituents, but does not suggest that sub-phrasal strings are constituents.

Passivization

Passivization involves changing an active sentence to a passive sentence, or vice versa. The object of the active sentence is changed to the subject of the corresponding passive sentence: [16]

- (a) Drunks could put off the customers.

- (b) The customers could be put off by drunks.

The fact that the passive sentence (b) is acceptable suggests that Drunks and the customers are constituents in sentence (a). Used in this manner, the passivization test can identify only subject and object words, phrases, and clauses as constituents; it does not help identify other phrasal or sub-phrasal strings as constituents. In this respect, the value of passivization as a test for constituents is very limited.

Omission (deletion)

Omission checks whether the target string can be omitted without influencing the grammaticality of the sentence. In most cases, local and temporal adverbials, attributive modifiers, and optional complements can be safely omitted and thus qualify as constituents. [17]

- Drunks could put off the customers.

- (a) Drunks could put off customers. (the has been omitted.)

This sentence suggests that the definite article the is a constituent in the test sentence. Regarding the test sentence, however, the omission test is very limited in its ability to identify constituents, since the strings that one wants to check do not appear optionally. Therefore, the test sentence is adapted to better illustrate the omission test:

- The obnoxious drunks could immediately put off the customers when they arrive.

- (b) The drunks could immediately put off the customers when they arrive. (obnoxious has been successfully omitted.)

- (c) The obnoxious drunks could put off the customers when they arrive. (immediately has been successfully omitted.)

- (d) The obnoxious drunks could put off the customers. (when they arrive has been successfully omitted.)

The ability to omit obnoxious, immediately, and when they arrive suggests that these strings are constituents in the test sentence. Omission used in this manner is of limited applicability, since it is incapable of identifying any constituent that appears obligatorily. Hence there are many target strings that most accounts of sentence structure take to be constituents but that fail the omission test because these constituents appear obligatorily, such as subject phrases.

Intrusion

Intrusion probes sentence structure by having an adverb "intrude" into parts of the sentence. The idea is that the strings on either side of the adverb are constituents. [18]

- Drunks could put off the customers.

- (a) Drunks definitely could put off the customers.

- (b) Drunks could definitely put off the customers.

- (c) *Drunks could put definitely off the customers.

- (d) *Drunks could put off definitely the customers.

- (e) *Drunks could put off the definitely customers.

Example (a) suggests that Drunks and could put off the customers are constituents. Example (b) suggests that Drunks could and put off the customers are constituents. The combination of (a) and (b) suggests in addition that could is a constituent. Sentence (c) suggests that Drunks could put and off the customers are not constituents. Example (d) suggests that Drunks could put off and the customers are not constituents. And example (e) suggests that Drunks could put off the and customers are not constituents.
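The reasoning just given can be sketched as a small procedure: generate one variant per gap between words, then read off the strings that the acceptable variants imply. In the following minimal Python sketch (hypothetical names `intrusion_variants` and `implied_constituents`), the acceptability judgments are supplied by hand from examples (a)-(e), since no program can provide them. Treating the material between two adjacent acceptable gaps as a constituent generalizes the inference drawn above from (a) and (b); that generalization is an assumption of the sketch.

```python
from __future__ import annotations


def intrusion_variants(words: list[str], adverb: str):
    """Yield (gap, sentence) for every gap between two words where the adverb intrudes."""
    for gap in range(1, len(words)):
        yield gap, " ".join(words[:gap] + [adverb] + words[gap:]) + "."


def implied_constituents(words: list[str], acceptable_gaps: list[int]) -> set[str]:
    """Strings treated as constituents, given the gaps where intrusion is acceptable.

    Each acceptable gap splits the sentence into two constituents; material
    lying between two adjacent acceptable gaps is treated as a constituent too.
    """
    found = set()
    for gap in acceptable_gaps:
        found.add(" ".join(words[:gap]))
        found.add(" ".join(words[gap:]))
    gaps = sorted(acceptable_gaps)
    for left, right in zip(gaps, gaps[1:]):
        found.add(" ".join(words[left:right]))
    return found


WORDS = ["Drunks", "could", "put", "off", "the", "customers"]

for gap, sentence in intrusion_variants(WORDS, "definitely"):
    print(gap, sentence)

# Judgments from examples (a)-(e): only gaps 1 and 2 are acceptable.
print(sorted(implied_constituents(WORDS, [1, 2])))
```

Swapping in a manner adverb would change only the hand-supplied list of acceptable gaps, which is exactly where the dependence on the choice of adverb enters.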

Those who employ the intrusion test usually use a modal adverb like definitely. This aspect of the test is problematic, though, since the results can vary with the choice of adverb. For instance, manner adverbs distribute differently than modal adverbs and will hence suggest a constituent structure distinct from the one suggested by modal adverbs.

Wh-fronting

Wh-fronting checks whether the test string can be questioned by means of a fronted wh-word. [19] This test is similar to the answer fragment test insofar as it employs just the first half of that test, disregarding the potential answer to the question.

- Drunks would put off the customers.

- (a) Who would put off the customers? (Who ↔ Drunks)

- (b) Who would drunks put off? (Who ↔ the customers)

- (c) What would drunks do? (What...do ↔ put off the customers)

These examples suggest that Drunks, the customers, and put off the customers are constituents in the test sentence. Wh-fronting is like a number of the other tests in that it fails to identify many subphrasal strings as constituents:

- (d) *Do what drunks put off the customers? (Do what ↔ would)

- (e) *Do what drunks would off the customers? (Do what ↔ put)

- (f) *What would drunks put the customers? (What ↔ off)

- (g) *What would drunks put off customers? (What ↔ the)

- (h) *Who would drunks put off the? (Who ↔ customers)

These examples demonstrate a lack of evidence for viewing the individual words would, put, off, the, and customers as constituents.

General substitution

The general substitution test replaces the test string with some other word or phrase. [20] It is similar to proform substitution, the only difference being that the replacement word or phrase is not a proform, e.g.

- Drunks could put off the customers.

- (a) Beggars could put off the customers. (Beggars ↔ Drunks)

- (b) Drunks could put off our guests. (our guests ↔ the customers)

- (c) Drunks would put off the customers. (would ↔ could)

These examples suggest that the strings Drunks, the customers, and could are constituents in the test sentence. There is a major problem with this test, for it is easily possible to find a replacement word for strings that the other tests suggest are clearly not constituents, e.g.

- (d) Drunks piss off the customers. (piss ↔ could put)

- (e) Beggars put off the customers. (Beggars ↔ Drunks could)

- (f) Drunks like customers. (like ↔ could put off the)

These examples suggest that could put, Drunks could, and could put off the are constituents in the test sentence. This is contrary to what the other tests reveal and to what most theories of sentence structure assume. The value of general substitution as a test for constituents is therefore suspect. Like the coordination test, it suggests that too many strings are constituents.

Right node raising (RNR)

Right node raising, abbreviated as RNR, is a test that isolates the test string on the right side of a coordinate structure. [21] The assumption is that only constituents can be shared by the conjuncts of a coordinate structure, e.g.

- Drunks could put off the customers.

- (a) [Drunks] and [beggars] could put off the customers.

- (b) [Drunks could], and [they probably would], put off the customers.

- (c) [Drunks could approach] and [they would then put off] the customers.

These examples suggest that could put off the customers, put off the customers, and the customers are constituents in the test sentence. There are two problems with the RNR diagnostic as a test for constituents. The first is that it is limited in its applicability, since it is only capable of identifying strings as constituents if they appear on the right side of the test sentence. The second is that it can suggest strings to be constituents that most of the other tests suggest are not constituents. To illustrate this point, a different example must be used:

- Frank has given his bicycle to us to use if need be.

- (d) [Frank has offered], and [Susan has already loaned], their bicycles to us to use if need be.

- (e) [Frank has offered his bicycle] and [Susan has already loaned her bicycle] to us to use if need be.

- (f) [Frank has offered his bicycle to us] and [Susan has already loaned her bicycle to us] to use if need be.

These examples suggest that their bicycles (his bicycle) to us to use if need be, to us to use if need be, and to use if need be are constituents in the test sentence. Most theories of syntax do not view these strings as constituents, and more importantly, most of the other tests suggest that they are not constituents. In short, none of these tests can be taken for granted, because a string may pass one test and fail many others. Intuition must therefore also be consulted when judging whether a given set of words forms a constituent.
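The advice to apply as many tests as possible can be pictured as a simple tally. The following minimal Python sketch hand-codes, for two strings of the test sentence Drunks could put off the customers, the judgments reported in the examples of this article (True = the string passes). The dictionary is a transcription made for illustration, not the output of any parser; it simplifies graded judgments to pass/fail and includes only tests that were actually applied to each string above. The function name `tally` is hypothetical.

```python
from __future__ import annotations

# Judgments transcribed from the examples above (True = the string passes the test).
RESULTS = {
    "the customers": {
        "coordination": True, "proform substitution": True, "topicalization": True,
        "answer fragment": True, "clefting": True, "pseudoclefting": True,
        "passivization": True, "wh-fronting": True, "general substitution": True,
        "RNR": True, "intrusion": False,
    },
    "could": {
        "coordination": True, "proform substitution": False, "topicalization": False,
        "answer fragment": False, "clefting": False, "pseudoclefting": False,
        "intrusion": True, "general substitution": True,
    },
}


def tally(results: dict[str, dict[str, bool]]) -> dict[str, tuple[int, int]]:
    """Map each string to (number of tests passed, number of tests applied)."""
    return {string: (sum(r.values()), len(r)) for string, r in results.items()}


for string, (passed, applied) in tally(RESULTS).items():
    print(f"{string}: passes {passed} of {applied} tests")
```

Even the customers, which nearly every test identifies, fails intrusion, while could passes coordination, intrusion, and general substitution but fails the rest. A raw count cannot settle constituency by itself, since the tests differ in reliability and scope.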