Related Research Articles

Econometrics is an application of statistical methods to economic data in order to give empirical content to economic relationships. More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference." An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships." Jan Tinbergen is one of the two founding fathers of econometrics. The other, Ragnar Frisch, also coined the term in the sense in which it is used today.

In microeconomics, supply and demand is an economic model of price determination in a market. It postulates that, holding all else equal, the unit price for a particular good or other traded item, such as labor or liquid financial assets, in a competitive market varies until it settles at the point where the quantity demanded equals the quantity supplied, resulting in an economic equilibrium for price and quantity transacted. The concept of supply and demand forms the theoretical basis of modern economics.
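For straight-line supply and demand curves, the equilibrium has a closed form. The sketch below assumes linear curves Qd = a - b*P and Qs = c + d*P. The parameter names and the function `linear_equilibrium` are illustrative, not part of the text. Setting Qd = Qs gives P* = (a - c) / (b + d).

```python
def linear_equilibrium(a: float, b: float, c: float, d: float) -> tuple[float, float]:
    """Equilibrium price and quantity for linear curves (illustrative assumption).

    Demand: Qd = a - b * P  (b > 0, demand falls as price rises)
    Supply: Qs = c + d * P  (d > 0, supply rises with price)
    """
    if b + d <= 0:
        raise ValueError("need b + d > 0 for a unique equilibrium")
    price = (a - c) / (b + d)   # solve a - b*P = c + d*P for P
    quantity = a - b * price    # plug the price back into the demand curve
    return price, quantity


# Hypothetical curves: Qd = 100 - 2P, Qs = 10 + P
p, q = linear_equilibrium(100, 2, 10, 1)
print(p, q)  # 30.0 40.0
```

At any price above 30 the quantity supplied exceeds the quantity demanded, and below it the reverse holds. This is the adjustment the model assumes drives the price toward equilibrium.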

In regression analysis, a dummy variable is one that takes the value 0 or 1 to indicate the absence or presence of some categorical effect that may be expected to shift the outcome. For example, if we were studying the relationship between biological sex and income, we could use a dummy variable to represent the sex of each individual in the study. The variable could take on a value of 1 for males and 0 for females. In machine learning, the closely related practice of representing each category by its own binary indicator is known as one-hot encoding.
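Here is a minimal sketch of dummy coding in plain Python. The helpers `dummy_encode` and `group_mean_gap` are hypothetical, and the income figures are made up. The encoder keeps one 0/1 column per non-reference category. With a single dummy and an intercept, the OLS coefficient on the dummy is simply the difference between the two group means, so `group_mean_gap` computes that difference directly.

```python
def dummy_encode(values, reference):
    """Encode a categorical list as 0/1 dummy columns, dropping the reference category.

    Dropping one category avoids perfect collinearity with the regression
    intercept (the "dummy variable trap"); one-hot encoding keeps all columns.
    """
    categories = sorted(set(values) - {reference})
    rows = [[1 if v == cat else 0 for cat in categories] for v in values]
    return categories, rows


def group_mean_gap(dummy, outcome):
    """Intercept and slope of an OLS regression of outcome on one 0/1 dummy.

    With a single dummy regressor, OLS reduces to comparing group means:
    intercept = mean of the 0-group, slope = mean(1-group) - mean(0-group).
    """
    ones = [y for d, y in zip(dummy, outcome) if d == 1]
    zeros = [y for d, y in zip(dummy, outcome) if d == 0]
    intercept = sum(zeros) / len(zeros)
    return intercept, sum(ones) / len(ones) - intercept


sex = ["male", "female", "female", "male"]
income = [52.0, 48.0, 50.0, 56.0]  # hypothetical values
cats, rows = dummy_encode(sex, reference="female")
male = [r[0] for r in rows]          # 1 for male, 0 for female
print(cats, male)                    # ['male'] [1, 0, 0, 1]
print(group_mean_gap(male, income))  # (49.0, 5.0)
```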

Economic data are data describing an actual economy, past or present. These are typically found in time-series form, covering more than one time period, or in cross-sectional form, covering a single time period. Data may also be collected from surveys of, for example, individuals and firms, or aggregated to sectors and industries of a single economy or of the international economy. A collection of such data in table form comprises a data set.

A variable is considered dependent if it depends on an independent variable. Dependent variables are studied under the supposition or demand that they depend, by some law or rule, on the values of other variables. Independent variables, in turn, are not seen as depending on any other variable in the scope of the experiment in question. In this sense, some common independent variables are time, space, density, mass, fluid flow rate, and previous values of an observed quantity of interest, used to predict its future values.

Linear trend estimation is a statistical method used to analyze data patterns. When a series of measurements of a process is treated as a sequence or time series, trend estimation can be used to make and justify statements about tendencies in the data by relating the measurements to the times at which they occurred. The fitted trend model can then be used to describe the behavior of the observed data.
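The following is a minimal least-squares trend fit. It assumes evenly spaced observations indexed t = 0, 1, ..., n-1. The function name `linear_trend` and the sample readings are hypothetical. The slope is the estimated change per time step, and the intercept is the fitted level at t = 0.

```python
def linear_trend(series):
    """Fit y_t = a + b*t by ordinary least squares, with t = 0, 1, ..., n-1."""
    n = len(series)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2                 # mean of 0..n-1
    y_mean = sum(series) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sxy / sxx                    # trend per time step
    intercept = y_mean - slope * t_mean  # fitted level at t = 0
    return intercept, slope


readings = [3.1, 4.9, 7.2, 8.8, 11.0]  # hypothetical measurements
a, b = linear_trend(readings)
fitted = [a + b * t for t in range(len(readings))]
```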

A macroeconomic model is an analytical tool designed to describe the operation of the economy of a country or a region. These models are usually designed to examine the comparative statics and dynamics of aggregate quantities such as the total amount of goods and services produced, total income earned, the level of employment of productive resources, and the level of prices.
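Full macroeconomic models contain many equations. A one-equation textbook Keynesian cross is enough to show comparative statics, though it is chosen here purely as an illustration since the text does not name a specific model. The approach is to solve for equilibrium output, change one exogenous quantity, and compare the two equilibria. The function name and numbers are hypothetical.

```python
def keynesian_cross(c0, c1, investment, government):
    """Equilibrium output in a textbook Keynesian-cross model (illustrative).

    Y = C + I + G with consumption C = c0 + c1 * Y, where 0 < c1 < 1 is the
    marginal propensity to consume. Solving gives Y* = (c0 + I + G) / (1 - c1).
    """
    if not 0 < c1 < 1:
        raise ValueError("marginal propensity to consume must lie in (0, 1)")
    return (c0 + investment + government) / (1 - c1)


# Comparative statics: raise G by 10 and compare the two equilibria.
base = keynesian_cross(c0=50, c1=0.8, investment=100, government=50)
higher_g = keynesian_cross(c0=50, c1=0.8, investment=100, government=60)
multiplier = (higher_g - base) / 10  # approximately 1 / (1 - 0.8) = 5
```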

The Profit Impact of Market Strategy (PIMS) program is a project that uses empirical data to try to determine which business strategies make the difference between success and failure. It is used to develop strategies for resource allocation and marketing. Some of the most important strategic metrics are market share, product quality, investment intensity and service quality. One of the emphasized principles is that the same factors work identically across different industries.

In medical research, social science, and biology, a cross-sectional study is a type of observational study that analyzes data from a population, or a representative subset, at a specific point in time—that is, cross-sectional data.

Articles in economics journals are usually classified according to JEL classification codes, which derive from the Journal of Economic Literature. The JEL is published quarterly by the American Economic Association (AEA) and contains survey articles and information on recently published books and dissertations. The AEA maintains EconLit, a searchable database of citations for articles, books, reviews, dissertations, and working papers classified by JEL codes, covering the years from 1969 onward. A recent addition to EconLit is the indexing of economics journal articles from 1886 to 1968, parallel to the print series Index of Economic Articles.

Panel (data) analysis is a statistical method, widely used in social science, epidemiology, and econometrics, to analyze two-dimensional panel data. The data are usually collected over time for the same individuals, and a regression is then run over these two dimensions. Multidimensional analysis is an econometric method in which data are collected over more than two dimensions.
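One common way to run a regression over both dimensions is the fixed-effects (within) estimator. The sketch below assumes a single regressor and a panel stored as (entity, time, x, y) tuples; it works for balanced or unbalanced panels. The helper name and the data are hypothetical.

```python
from collections import defaultdict


def within_estimator(rows):
    """Fixed-effects ("within") slope for y_it = alpha_i + beta * x_it + e_it.

    rows: iterable of (entity, time, x, y) tuples. Demeaning x and y within
    each entity removes the entity-specific intercepts alpha_i; beta is then
    the OLS slope on the pooled, demeaned data.
    """
    groups = defaultdict(list)
    for entity, _time, x, y in rows:
        groups[entity].append((x, y))

    sxy = sxx = 0.0
    for obs in groups.values():
        x_bar = sum(x for x, _ in obs) / len(obs)
        y_bar = sum(y for _, y in obs) / len(obs)
        for x, y in obs:
            sxy += (x - x_bar) * (y - y_bar)
            sxx += (x - x_bar) ** 2
    return sxy / sxx


# Hypothetical panel: two entities, same slope 1.5, very different intercepts.
panel = [
    ("A", 1, 1.0, 11.5), ("A", 2, 2.0, 13.0), ("A", 3, 3.0, 14.5),
    ("B", 1, 2.0, -2.0), ("B", 2, 4.0, 1.0), ("B", 3, 6.0, 4.0),
]
print(within_estimator(panel))  # 1.5
```

Pooling the same six rows in one OLS regression that ignores the entity gives a slope of -1.3125. The gap between the intercepts of A and B is confounded with x, and demeaning within each entity removes it.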

In statistics and econometrics, panel data and longitudinal data are both multi-dimensional data involving measurements over time. Panel data is a subset of longitudinal data where observations are for the same subjects each time.

In statistics and econometrics, cross-sectional data is a type of data collected by observing many subjects at a single point or period of time. Analysis of cross-sectional data usually consists of comparing the differences among selected subjects, typically with no regard to differences in time.

RATS, an abbreviation of Regression Analysis of Time Series, is a statistical package for time series analysis and econometrics. RATS is developed and sold by Estima, Inc., located in Evanston, IL.

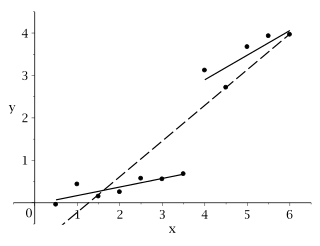

In econometrics and statistics, a structural break is an unexpected change over time in the parameters of regression models, which can lead to large forecasting errors and to unreliability of the model in general. This issue was popularised by David Hendry, who argued that a lack of stability of coefficients frequently caused forecast failure, and that structural stability should therefore be tested routinely. Structural stability, i.e. the time-invariance of regression coefficients, is a central issue in all applications of linear regression models.
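A standard way to test for a break at a known date is the Chow test. The sketch below assumes a single regressor, a known break index, and hypothetical helper names. Under the classical regression assumptions, the resulting statistic is compared with an F(k, n - 2k) critical value.

```python
def _ols_rss(x, y):
    """Residual sum of squares from regressing y on a constant and x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))


def chow_f(x, y, break_index, k=2):
    """Chow F statistic for a break in y = a + b*x at a known index.

    Compares the pooled fit with separate fits before and after the break;
    k is the number of coefficients per regime (intercept + slope = 2).
    """
    rss_pooled = _ols_rss(x, y)
    rss_split = (_ols_rss(x[:break_index], y[:break_index])
                 + _ols_rss(x[break_index:], y[break_index:]))
    n = len(x)
    return ((rss_pooled - rss_split) / k) / (rss_split / (n - 2 * k))


x = list(range(20))
noise = [0.1 if t % 2 == 0 else -0.1 for t in x]  # small deterministic wiggle
stable = [1 + t + e for t, e in zip(x, noise)]
shifted = [(1 if t < 10 else 10) + t + e for t, e in zip(x, noise)]  # intercept jumps at t = 10
print(chow_f(x, stable, 10) < 1, chow_f(x, shifted, 10) > 100)  # True True
```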

In statistics, econometrics, political science, epidemiology, and related disciplines, a regression discontinuity design (RDD) is a quasi-experimental pretest–posttest design that aims to determine the causal effects of interventions by exploiting a cutoff or threshold above or below which an intervention is assigned. By comparing observations lying close to either side of the threshold, it is possible to estimate the average treatment effect for units near the threshold in environments in which randomisation is unfeasible. However, the method alone cannot support true causal inference, because it does not by itself rule out effects of potential confounding variables. First applied by Donald Thistlethwaite and Donald Campbell (1960) to the evaluation of scholarship programs, the RDD has become increasingly popular in recent years. Recent comparisons of randomised controlled trials (RCTs) and RDDs have empirically demonstrated the internal validity of the design.
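This is a minimal sketch of a sharp RDD estimate. It assumes that treatment switches on exactly at the cutoff, that the bandwidth is fixed, and that observations within it are weighted equally. Practical work usually uses data-driven bandwidths and kernel weights instead. Function names and data are hypothetical.

```python
def _fit_line(xs, ys):
    """Intercept and slope of ys = a + b*xs by ordinary least squares."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


def sharp_rdd(x, y, cutoff, bandwidth):
    """Local-linear estimate of the jump in y at the cutoff (sharp design).

    Observations within `bandwidth` of the cutoff are split by side, the
    running variable is centred at the cutoff, a straight line is fitted on
    each side, and the difference of the two intercepts is the estimated effect.
    """
    left = [(xi - cutoff, yi) for xi, yi in zip(x, y) if cutoff - bandwidth <= xi < cutoff]
    right = [(xi - cutoff, yi) for xi, yi in zip(x, y) if cutoff <= xi <= cutoff + bandwidth]
    if len(left) < 2 or len(right) < 2:
        raise ValueError("need at least two observations on each side")
    a_left, _ = _fit_line(*zip(*left))
    a_right, _ = _fit_line(*zip(*right))
    return a_right - a_left


# Hypothetical data: outcome rises smoothly with the score, plus a jump of 3 at score 50.
scores = list(range(100))
outcomes = [2 + 0.5 * s + (3 if s >= 50 else 0) for s in scores]
effect = sharp_rdd(scores, outcomes, cutoff=50, bandwidth=10)
```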

Demand forecasting refers to the process of predicting the quantity of goods and services that will be demanded by consumers at a future point in time. More specifically, the methods of demand forecasting entail using predictive analytics to estimate customer demand in consideration of key economic conditions. This is an important tool in optimizing business profitability through efficient supply chain management. Demand forecasting methods are divided into two major categories, qualitative and quantitative methods. Qualitative methods are based on expert opinion and information gathered from the field. They are mostly used in situations where minimal data is available for analysis, such as when a business or product has recently been introduced to the market. Quantitative methods, by contrast, use available data and analytical tools to produce predictions. Demand forecasting may be used in resource allocation, inventory management, assessing future capacity requirements, or making decisions on whether to enter a new market.
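Simple exponential smoothing is one widely used quantitative method. It is chosen here only as an example, since the text does not prescribe a technique. The function name, the smoothing weight `alpha`, and the sales history are hypothetical.

```python
def exponential_smoothing_forecast(demand, alpha=0.3):
    """One-step-ahead forecast by simple exponential smoothing.

    level_t = alpha * demand_t + (1 - alpha) * level_{t-1}, started at the
    first observation; the final level is the forecast for the next period.
    """
    if not demand:
        raise ValueError("need at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must lie in (0, 1]")
    level = demand[0]
    for observed in demand[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


weekly_units = [120, 135, 128, 150, 142]  # hypothetical sales history
next_week = exponential_smoothing_forecast(weekly_units, alpha=0.3)
print(exponential_smoothing_forecast([10, 20], alpha=0.5))  # 15.0
```

A larger `alpha` makes the forecast react faster to recent demand. A smaller one smooths out noise but lags behind genuine shifts.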

An error correction model (ECM) belongs to a category of multiple time series models most commonly used for data where the underlying variables have a long-run common stochastic trend, also known as cointegration. ECMs are a theoretically driven approach useful for estimating both short-term and long-term effects of one time series on another. The term error correction relates to the fact that the last period's deviation from a long-run equilibrium, the error, influences the short-run dynamics. Thus ECMs directly estimate the speed at which a dependent variable returns to equilibrium after a change in other variables.
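The two-step Engle-Granger procedure is one classic way to estimate an ECM. The sketch below assumes one regressor and simulated (hypothetical) data with a true long-run coefficient of 1.0, a short-run coefficient of 0.5, and an adjustment speed of -0.3. The estimated `alpha` should come out negative and near -0.3. All function names are illustrative.

```python
import random


def simple_ols(x, y):
    """Intercept and slope of y = a + b*x by ordinary least squares."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def two_slope_ols(z1, z2, y):
    """Slopes of y = c + g1*z1 + g2*z2 by OLS (intercept removed by demeaning)."""
    n = len(y)
    m1, m2, my = sum(z1) / n, sum(z2) / n, sum(y) / n
    d1 = [v - m1 for v in z1]
    d2 = [v - m2 for v in z2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 * s12
    # Cramer's rule on the 2x2 normal equations.
    return (s1y * s22 - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def engle_granger_ecm(x, y):
    """Two-step Engle-Granger ECM: returns (beta, gamma, alpha).

    Step 1: y_t = a + beta * x_t + u_t in levels (long-run relation).
    Step 2: dy_t = c + gamma * dx_t + alpha * u_{t-1} + e_t (short-run dynamics);
    alpha < 0 is the speed at which deviations from equilibrium are corrected.
    """
    a, beta = simple_ols(x, y)
    u = [yi - a - beta * xi for xi, yi in zip(x, y)]
    dx = [x[t] - x[t - 1] for t in range(1, len(x))]
    dy = [y[t] - y[t - 1] for t in range(1, len(y))]
    gamma, alpha = two_slope_ols(dx, u[:-1], dy)
    return beta, gamma, alpha


def simulate(n=2000, beta=1.0, gamma=0.5, alpha=-0.3, seed=0):
    """Hypothetical cointegrated pair: x is a random walk, y error-corrects toward beta*x."""
    rng = random.Random(seed)
    x, y = [0.0], [0.0]
    for _ in range(1, n):
        step = rng.gauss(0.0, 1.0)
        gap = y[-1] - beta * x[-1]  # last period's equilibrium error
        y.append(y[-1] + gamma * step + alpha * gap + rng.gauss(0.0, 1.0))
        x.append(x[-1] + step)
    return x, y


beta_hat, gamma_hat, alpha_hat = engle_granger_ecm(*simulate())
```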

The methodology of econometrics is the study of the range of differing approaches to undertaking econometric analysis.

Following the development of Keynesian economics, applied economics began developing forecasting models based on economic data including national income and product accounting data. In contrast with typical textbook models, these large-scale macroeconometric models used large amounts of data and based forecasts on past correlations instead of theoretical relations. These models estimated the relations between different macroeconomic variables using regression analysis on time series data. These models grew to include hundreds or thousands of equations describing the evolution of hundreds or thousands of prices and quantities over time, making computers essential for their solution. While the choice of which variables to include in each equation was partly guided by economic theory, variable inclusion was mostly determined on purely empirical grounds. A large-scale macroeconometric model consists of a system of dynamic equations describing the economy, with parameters estimated from quarterly to yearly time-series data.
