A router is a networking device that forwards data packets between computer networks. Routers perform the traffic directing functions between networks and on the global Internet. Data sent through a network, such as a web page or email, is in the form of data packets. A packet is typically forwarded from one router to another router through the networks that constitute an internetwork until it reaches its destination node.

Routing is the process of selecting a path for traffic in a network or between or across multiple networks. Broadly, routing is performed in many types of networks, including circuit-switched networks, such as the public switched telephone network (PSTN), and computer networks, such as the Internet.

A network switch is networking hardware that connects devices on a computer network by using packet switching to receive and forward data to the destination device.

In electronics and telecommunications, jitter is the deviation from true periodicity of a presumably periodic signal, often in relation to a reference clock signal. In clock recovery applications it is called timing jitter. Jitter is a significant, and usually undesired, factor in the design of almost all communications links.

In telecommunication and computer engineering, the queuing delay or queueing delay is the time a job waits in a queue until it can be executed. It is a key component of network delay. In a switched network, queuing delay is the time between the completion of signaling by the call originator and the arrival of a ringing signal at the call receiver. Queuing delay may be caused by delays at the originating switch, intermediate switches, or the call receiver servicing switch. In a data network, queuing delay is the sum of the delays between the request for service and the establishment of a circuit to the called data terminal equipment (DTE). In a packet-switched network, queuing delay is the sum of the delays encountered by a packet between the time of insertion into the network and the time of delivery to the address.

Wormhole flow control, also called wormhole switching or wormhole routing, is a system of simple flow control in computer networking based on known fixed links. It is a subset of flow control methods called Flit-Buffer Flow Control.

Packet forwarding is the relaying of packets from one network segment to another by nodes in a computer network. The network layer in the OSI model is responsible for packet forwarding.

NetFlow is a feature that was introduced on Cisco routers around 1996 that provides the ability to collect IP network traffic as it enters or exits an interface. By analyzing the data provided by NetFlow, a network administrator can determine things such as the source and destination of traffic, class of service, and the causes of congestion. A typical flow monitoring setup consists of three main components:

In electronics, a banyan switch is a complex crossover switch used in electrical or optical switches.

The RapidIO architecture is a high-performance packet-switched electrical connection technology. It supports messaging, read/write and cache coherency semantics. Based on industry-standard electrical specifications such as those for Ethernet, RapidIO can be used as a chip-to-chip, board-to-board, and chassis-to-chassis interconnect.

A load-balanced switch is a switch architecture which guarantees 100% throughput with no central arbitration at all, at the cost of sending each packet across the crossbar twice. Load-balanced switches are a subject of research for large routers scaled past the point of practical central arbitration.

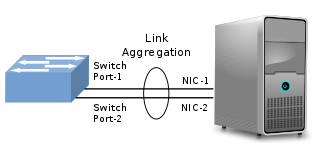

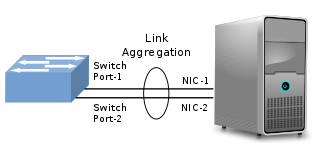

In computer networking, link aggregation is the combining of multiple network connections in parallel by any of several methods. Link aggregation increases total throughput beyond what a single connection could sustain, and provides redundancy where all but one of the physical links may fail without losing connectivity. A link aggregation group (LAG) is the combined collection of physical ports.

In telecommunications, an optical buffer is a device that is capable of temporarily storing light. Just as in the case of a regular buffer, it is a storage medium that enables compensation for a difference in time of occurrence of events. More specifically, an optical buffer serves to store data that was transmitted optically, without converting it to the electrical domain.

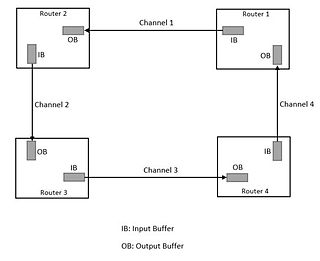

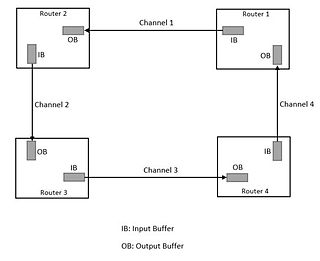

Head-of-line blocking in computer networking is a performance-limiting phenomenon that occurs when a line of packets is held up in a queue by a first packet. Examples include input buffered network switches, out-of-order delivery and multiple requests in HTTP pipelining.

CobraNet is a combination of software, hardware, and network protocols designed to deliver uncompressed, multi-channel, low-latency digital audio over a standard Ethernet network. Developed in the 1990s, CobraNet is widely regarded as the first commercially successful audio-over-Ethernet implementation.

Bufferbloat is a cause of high latency and jitter in packet-switched networks caused by excess buffering of packets. Bufferbloat can also cause packet delay variation, as well as reduce the overall network throughput. When a router or switch is configured to use excessively large buffers, even very high-speed networks can become practically unusable for many interactive applications like voice over IP (VoIP), audio streaming, online gaming, and even ordinary web browsing.

A network scheduler, also called packet scheduler, queueing discipline (qdisc) or queueing algorithm, is an arbiter on a node in a packet switching communication network. It manages the sequence of network packets in the transmit and receive queues of the protocol stack and network interface controller. There are several network schedulers available for the different operating systems, that implement many of the existing network scheduling algorithms.

In computer networking, a flit is a link-level atomic piece that forms a network packet or stream. The first flit, called the header flit holds information about this packet's route and sets up the routing behavior for all subsequent flits associated with the packet. The header flit is followed by zero or more body flits, containing the actual payload of data. The final flit, called the tail flit, performs some book keeping to close the connection between the two nodes.

The STC104 switch, also known as the C104 switch in its early phases, is an asynchronous packet-routing chip that was designed for building high-performance point-to-point computer communication networks. It was developed by INMOS in the 1990s and was the first example of a general-purpose production packet routing chip. It was also the first routing chip to implement wormhole routing, to decouple packet size from the flow-control protocol, and to implement interval and two-phase randomized routing.

A routing algorithm decides the path followed by a packet from the source to destination routers in a network. An important aspect to be considered while designing a routing algorithm is avoiding a deadlock. Turn restriction routing is a routing algorithm for mesh-family of topologies which avoids deadlocks by restricting the types of turns that are allowed in the algorithm while determining the route from source node to destination node in a network.