A web server is server software, or hardware dedicated to running said software, that can satisfy World Wide Web client requests. A web server can, in general, contain one or more websites. A web server processes incoming network requests over HTTP and several other related protocols.

In computing, load balancing improves the distribution of workloads across multiple computing resources, such as computers, a computer cluster, network links, central processing units, or disk drives. Load balancing aims to optimize resource use, maximize throughput, minimize response time, and avoid overload of any single resource. Using multiple components with load balancing instead of a single component may increase reliability and availability through redundancy. Load balancing usually involves dedicated software or hardware, such as a multilayer switch or a Domain Name System server process.

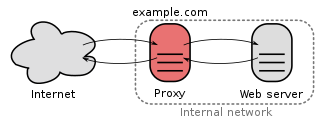

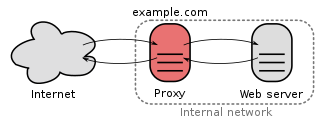

In computer networks, a proxy server is a server that acts as an intermediary for requests from clients seeking resources from other servers. A client connects to the proxy server, requesting some service, such as a file, connection, web page, or other resource available from a different server and the proxy server evaluates the request as a way to simplify and control its complexity. Proxies were invented to add structure and encapsulation to distributed systems.

SOCKS is an Internet protocol that exchanges network packets between a client and server through a proxy server. SOCKS5 additionally provides authentication so only authorized users may access a server. Practically, a SOCKS server proxies TCP connections to an arbitrary IP address, and provides a means for UDP packets to be forwarded.

A web cache is an information technology for the temporary storage (caching) of Web documents, such as web pages, images, and other types of Web multimedia, to reduce server lag. A web cache system stores copies of documents passing through it; subsequent requests may be satisfied from the cache if certain conditions are met. A web cache system can refer either to an appliance, or to a computer program.

A content delivery network or content distribution network (CDN) is a geographically distributed network of proxy servers and their data centers. The goal is to provide high availability and high performance by distributing the service spatially relative to end-users. CDNs serve a large portion of the Internet content today, including web objects, downloadable objects, applications, live streaming media, on-demand streaming media, and social media sites.

Linux Virtual Server (LVS) is load balancing software for Linux kernel–based operating systems.

Microsoft DNS is the name given to the implementation of domain name system services provided in Microsoft Windows operating systems.

In computer networks, a reverse proxy is a type of proxy server that retrieves resources on behalf of a client from one or more servers. These resources are then returned to the client, appearing as if they originated from the proxy server itself. Unlike a forward proxy, which is an intermediary for its associated clients to contact any server, a reverse proxy is an intermediary for its associated servers to be contacted by any client.

Microsoft Forefront Threat Management Gateway, formerly known as Microsoft Internet Security and Acceleration Server, is a network router, firewall, antivirus program, VPN server and web cache from Microsoft Corporation. It runs on Windows Server and works by inspecting all network traffic that passes through it.

A proxy auto-config (PAC) file defines how web browsers and other user agents can automatically choose the appropriate proxy server for fetching a given URL.

The X-Forwarded-For (XFF) HTTP header field is a common method for identifying the originating IP address of a client connecting to a web server through an HTTP proxy or load balancer.

In the context of computer networking, an application-level gateway consists of a security component that augments a firewall or NAT employed in a computer network. It allows customized NAT traversal filters to be plugged into the gateway to support address and port translation for certain application layer "control/data" protocols such as FTP, BitTorrent, SIP, RTSP, file transfer in IM applications, etc. In order for these protocols to work through NAT or a firewall, either the application has to know about an address/port number combination that allows incoming packets, or the NAT has to monitor the control traffic and open up port mappings dynamically as required. Legitimate application data can thus be passed through the security checks of the firewall or NAT that would have otherwise restricted the traffic for not meeting its limited filter criteria.

Varnish is an HTTP accelerator designed for content-heavy dynamic web sites as well as APIs. In contrast to other web accelerators, such as Squid, which began life as a client-side cache, or Apache and nginx, which are primarily origin servers, Varnish was designed as an HTTP accelerator. Varnish is focused exclusively on HTTP, unlike other proxy servers that often support FTP, SMTP and other network protocols.

An application delivery network (ADN) is a suite of technologies that, when deployed together, provide application availability, security, visibility, and acceleration. Gartner defines application delivery networking as the combination of WAN optimization controllers (WOCs) and application delivery controllers (ADCs). At the data center end of an ADN is the application delivery controller, an advanced traffic management device that is often also referred to as a web switch, content switch, or multilayer switch, the purpose of which is to distribute traffic among a number of servers or geographically dislocated sites based on application specific criteria. In the branch office portion of an ADN is the WAN optimization controller, which works to reduce the number of bits that flow over the network using caching and compression, and shapes TCP traffic using prioritization and other optimization techniques. Some WOC components are installed on PCs or mobile clients, and there is typically a portion of the WOC installed in the data center. Application delivery networks are also offered by some CDN vendors.

Nginx is a web server which can also be used as a reverse proxy, load balancer, mail proxy and HTTP cache. The software was created by Igor Sysoev and first publicly released in 2004. A company of the same name was founded in 2011 to provide support and Nginx plus paid software.

Web Cache Communication Protocol (WCCP) is a Cisco-developed content-routing protocol that provides a mechanism to redirect traffic flows in real-time. It has built-in load balancing, scaling, fault tolerance, and service-assurance (failsafe) mechanisms. Cisco IOS Release 12.1 and later releases allow the use of either Version 1 (WCCPv1) or Version 2 (WCCPv2) of the protocol.

SoftEther VPN is free open-source, cross-platform, multi-protocol VPN client and VPN server software, developed as part of Daiyuu Nobori's master's thesis research at the University of Tsukuba. VPN protocols such as SSL VPN, L2TP/IPsec, OpenVPN, and Microsoft Secure Socket Tunneling Protocol are provided in a single VPN server. It was released using the GPLv2 license on January 4, 2014. The license was switched to Apache License 2.0 on January 21, 2019.

Dynamic Site Acceleration (DSA) is a group of technologies which make the delivery of dynamic websites more efficient. Manufacturers of application delivery controllers and content delivery networks (CDNs) use a host of techniques to accelerate dynamic sites, including:

Endian Firewall is an open-source router, firewall and gateway security Linux distribution developed by the South Tyrolean company Endian. The product is available as either free software, commercial software with guaranteed support services, or as a hardware appliance.