Related Research Articles

Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. Such machines may be called AIs.

Inductive logic programming (ILP) is a subfield of symbolic artificial intelligence which uses logic programming as a uniform representation for examples, background knowledge and hypotheses. The term "inductive" here refers to philosophical rather than mathematical induction. Given an encoding of the known background knowledge and a set of examples represented as a logical database of facts, an ILP system will derive a hypothesised logic program which entails all the positive and none of the negative examples.
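The ILP setting described above has four parts: background knowledge, positive examples, negative examples, and a hypothesis that entails all positives and no negatives. A toy generate-and-test search can illustrate how these fit together. This is a minimal sketch and far simpler than practical ILP systems. The family facts, the `target` predicate, and the helpers `covers`, `learn` and `show` are hypothetical. The hypothesis space is also restricted to one clause whose body is either a single literal `p(X, Y)` or a two-literal chain `p(X, Z), q(Z, Y)`.

```python
from itertools import product

# Hypothetical background knowledge: ground facts keyed by predicate name.
BACKGROUND = {
    "parent": {("ann", "bob"), ("bob", "carl"), ("bob", "dana"), ("eve", "ann")},
    "sibling": {("carl", "dana"), ("dana", "carl")},
}

# Hypothetical examples of the target relation target(X, Y).
POSITIVE = {("ann", "carl"), ("ann", "dana"), ("eve", "bob")}
NEGATIVE = {("ann", "bob"), ("bob", "carl"), ("carl", "dana")}

# Every constant mentioned in the background knowledge.
CONSTANTS = {c for facts in BACKGROUND.values() for pair in facts for c in pair}


def covers(body, x, y):
    """True if background knowledge plus `target(X, Y) :- body` entails target(x, y)."""
    if len(body) == 1:
        (p,) = body
        return (x, y) in BACKGROUND[p]
    p, q = body
    # Body p(X, Z), q(Z, Y): search for a constant Z linking x to y.
    return any((x, z) in BACKGROUND[p] and (z, y) in BACKGROUND[q] for z in CONSTANTS)


def learn():
    """Try clause bodies from shortest to longest; return the first consistent one."""
    preds = sorted(BACKGROUND)
    candidates = [(p,) for p in preds] + list(product(preds, repeat=2))
    for body in candidates:
        if all(covers(body, *e) for e in POSITIVE) and not any(
            covers(body, *e) for e in NEGATIVE
        ):
            return body
    return None  # no consistent clause exists in this hypothesis space


def show(body):
    if len(body) == 1:
        return f"target(X, Y) :- {body[0]}(X, Y)."
    return f"target(X, Y) :- {body[0]}(X, Z), {body[1]}(Z, Y)."


hypothesis = learn()
print(show(hypothesis))  # target(X, Y) :- parent(X, Z), parent(Z, Y).
```

The learned clause is the familiar definition of "grandparent". It is found because it is the shortest body that covers every positive example while excluding every negative one.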

Douglas Bruce Lenat was an American computer scientist and researcher in artificial intelligence who was the founder and CEO of Cycorp, Inc. in Austin, Texas.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.

The Automated Mathematician (AM) is one of the earliest successful discovery systems. It was created by Douglas Lenat in Lisp, and in 1977 led to Lenat being awarded the IJCAI Computers and Thought Award.

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

Eurisko is a discovery system written by Douglas Lenat in RLL-1, a representation language itself written in the Lisp programming language. A sequel to Automated Mathematician, it consists of heuristics, i.e. rules of thumb, including heuristics describing how to use and change its own heuristics. Lenat was frustrated by Automated Mathematician's restriction to a single domain and so developed Eurisko; his frustration with the effort of encoding domain knowledge for Eurisko led to his subsequent development of Cyc. Lenat envisioned ultimately coupling the Cyc knowledge base with the Eurisko discovery engine.

Evolutionary robotics is an embodied approach to artificial intelligence (AI) in which robots are automatically designed using Darwinian principles of natural selection. The design of a robot, or of a subsystem such as a neural controller, is optimized against a behavioral goal. Designs are usually evaluated in simulation, because fabricating thousands or millions of designs and testing them in the real world is prohibitively expensive in time, money, and safety.
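The optimization loop can be sketched with a small genetic algorithm. In this example it evolves the two gains of a hypothetical linear controller for a toy one-dimensional "robot" that should come to rest at a target position. Everything here is an assumption made for illustration: the physics in `simulate`, the fitness formula, and the parameters of `evolve`. The sketch shows the Darwinian ingredients named above (a population, fitness-based selection, and random mutation), and every design is evaluated only in simulation.

```python
import random

TARGET = 5.0  # hypothetical goal position for a toy 1-D "robot"
STEPS = 50    # simulation length
DT = 0.1      # simulation time step


def simulate(genome):
    """Run the toy simulation for one design and return its fitness (higher is better)."""
    k_p, k_d = genome  # controller gains: the "design" being evolved
    pos, vel = 0.0, 0.0
    for _ in range(STEPS):
        force = k_p * (TARGET - pos) - k_d * vel
        force = max(-1.0, min(1.0, force))  # actuator limit
        vel += DT * force
        pos += DT * vel
    # Reward ending close to the target and nearly at rest.
    return -abs(TARGET - pos) - 0.1 * abs(vel)


def evolve(generations=40, pop_size=30, elite=5, sigma=0.3, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-2, 2), rng.uniform(-2, 2)] for _ in range(pop_size)]
    history = []  # best fitness in each generation
    for _ in range(generations):
        ranked = sorted(population, key=simulate, reverse=True)
        history.append(simulate(ranked[0]))
        parents = ranked[:elite]  # truncation selection; elites survive unchanged
        children = [
            [gene + rng.gauss(0, sigma) for gene in rng.choice(parents)]  # Gaussian mutation
            for _ in range(pop_size - elite)
        ]
        population = parents + children
    best = max(population, key=simulate)
    return best, history


best, history = evolve()
print(best, history[0], history[-1])
```

The best individuals are copied unchanged into the next generation (elitism), so the best fitness recorded in `history` never decreases. Without elitism, it could fluctuate from one generation to the next.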

Logic in computer science covers the overlap between the field of logic and that of computer science. The topic can essentially be divided into three main areas:

Woodrow Wilson "Woody" Bledsoe was an American mathematician, computer scientist, and prominent educator. He is one of the founders of artificial intelligence (AI), making early contributions in pattern recognition, facial recognition, and automated theorem proving. He continued to make significant contributions to AI throughout his long career. One of his influences was Frank Rosenblatt.

Artificial intelligence (AI) has been used in applications throughout industry and academia. Like electricity or computers, AI serves as a general-purpose technology with numerous applications, spanning language translation, image recognition, decision-making, credit scoring, e-commerce, and various other domains. As a scientific discipline, AI encompasses technologies that equip machines to perceive, understand, act, and learn.

Eric Joel Horvitz is an American computer scientist, and Technical Fellow at Microsoft, where he serves as the company's first Chief Scientific Officer. He was previously the director of Microsoft Research Labs, including research centers in Redmond, WA, Cambridge, MA, New York, NY, Montreal, Canada, Cambridge, UK, and Bangalore, India.

Ross Donald King is a Professor of Machine Intelligence at Chalmers University of Technology.

Inductive programming (IP) is a special area of automatic programming, covering research from artificial intelligence and programming, which addresses learning of typically declarative and often recursive programs from incomplete specifications, such as input/output examples or constraints.
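Enumerative search over short programs is one simple way to see how a program can be learned from input/output examples. The sketch below assumes a hypothetical set of integer primitives and treats a program as a straight-line sequence of them. Unlike much inductive programming research, it does not handle recursive programs.

```python
from itertools import product

# Hypothetical primitive operations the synthesizer may compose.
PRIMITIVES = {
    "inc": lambda x: x + 1,
    "dec": lambda x: x - 1,
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
    "negate": lambda x: -x,
}


def run(program, x):
    """Apply the program's primitives to x from left to right."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x


def synthesize(examples, max_len=3):
    """Return the first shortest program consistent with all (input, output) pairs."""
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, i) == o for i, o in examples):
                return program
    return None  # nothing found within max_len


examples = [(1, 4), (2, 6), (3, 8)]
program = synthesize(examples)
print(program)           # ('inc', 'double')
print(run(program, 10))  # 22
```

The three examples are an incomplete specification, and many programs could fit them. This search simply prefers the shortest program and returns the first one it finds, which here computes 2(x + 1).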

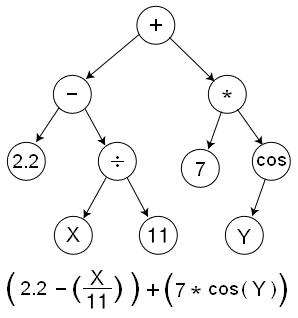

Symbolic regression (SR) is a type of regression analysis that searches the space of mathematical expressions to find the model that best fits a given dataset, both in terms of accuracy and simplicity.
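The trade-off between accuracy and simplicity can be sketched by enumerating every small expression tree and scoring each one by mean squared error plus a penalty proportional to its size. This is a toy sketch under stated assumptions. The grammar (leaves `x`, 1 and 2; operators +, -, *), the `penalty` weight, and the synthetic data generated from y = x² + 1 are all hypothetical. Practical tools typically replace exhaustive enumeration with heuristic searches such as genetic programming.

```python
from itertools import product

# Hypothetical grammar: leaves and binary operators available to the search.
LEAVES = ["x", 1.0, 2.0]
OPS = {"+": lambda a, b: a + b, "-": lambda a, b: a - b, "*": lambda a, b: a * b}


def expressions(size):
    """All expression trees with exactly `size` nodes (size must be odd)."""
    if size == 1:
        return list(LEAVES)
    trees = []
    for left_size in range(1, size - 1, 2):
        right_size = size - 1 - left_size
        for op, left, right in product(OPS, expressions(left_size), expressions(right_size)):
            trees.append((op, left, right))
    return trees


def evaluate(expr, x):
    if isinstance(expr, tuple):
        op, left, right = expr
        return OPS[op](evaluate(left, x), evaluate(right, x))
    return x if expr == "x" else expr


def show(expr):
    if isinstance(expr, tuple):
        op, left, right = expr
        return f"({show(left)} {op} {show(right)})"
    return expr if expr == "x" else f"{expr:g}"


def fit(xs, ys, max_size=5, penalty=0.01):
    """Return the expression minimizing mean squared error + penalty * size."""
    best, best_score = None, float("inf")
    for size in range(1, max_size + 1, 2):
        for expr in expressions(size):
            mse = sum((evaluate(expr, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
            score = mse + penalty * size
            if score < best_score:
                best, best_score = expr, score
    return best, best_score


xs = [0, 1, 2, 3, 4]
ys = [x * x + 1 for x in xs]  # synthetic data from y = x^2 + 1
best, score = fit(xs, ys)
print(show(best))  # (1 + (x * x))
```

The size penalty breaks ties in favor of smaller expressions. Raising `penalty` makes the search accept a less accurate fit in exchange for a shorter formula.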

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

Explainable AI (XAI), often overlapping with interpretable AI or explainable machine learning (XML), refers either to an artificial intelligence (AI) system over which it is possible for humans to retain intellectual oversight, or to the methods used to achieve this. The main focus is usually on making the reasoning behind the AI's decisions or predictions more understandable and transparent. XAI counters the "black box" tendency of machine learning, where even the AI's designers cannot explain why it arrived at a specific decision.

Neuro-symbolic AI is a type of artificial intelligence that integrates neural and symbolic AI architectures to address the weaknesses of each, providing a robust AI capable of reasoning, learning, and cognitive modeling. As argued by Leslie Valiant and others, the effective construction of rich computational cognitive models demands the combination of symbolic reasoning and efficient machine learning. Gary Marcus argued, "We cannot construct rich cognitive models in an adequate, automated way without the triumvirate of hybrid architecture, rich prior knowledge, and sophisticated techniques for reasoning." Further, "To build a robust, knowledge-driven approach to AI we must have the machinery of symbol manipulation in our toolkit. Too much useful knowledge is abstract to proceed without tools that represent and manipulate abstraction, and to date, the only known machinery that can manipulate such abstract knowledge reliably is the apparatus of symbol manipulation."

Deepak Kapur is a Distinguished Professor in the Department of Computer Science at the University of New Mexico.

The QLattice is a software library which provides a framework for symbolic regression in Python. It works on Linux, Windows, and macOS. The QLattice algorithm is developed by the Danish/Spanish AI research company Abzu. Since its creation, the QLattice has attracted significant attention, mainly for the inherent explainability of the models it produces.
