The client–server model is a distributed application structure that partitions tasks or workloads between the providers of a resource or service, called servers, and service requesters, called clients. Often clients and servers communicate over a computer network on separate hardware, but both client and server may reside in the same system. A server host runs one or more server programs, which share their resources with clients. A client usually does not share any of its resources, but it requests content or service from a server. Clients, therefore, initiate communication sessions with servers, which await incoming requests. Examples of computer applications that use the client–server model are email, network printing, and the World Wide Web.

The Lightweight Directory Access Protocol is an open, vendor-neutral, industry standard application protocol for accessing and maintaining distributed directory information services over an Internet Protocol (IP) network. Directory services play an important role in developing intranet and Internet applications by allowing the sharing of information about users, systems, networks, services, and applications throughout the network. As examples, directory services may provide any organized set of records, often with a hierarchical structure, such as a corporate email directory. Similarly, a telephone directory is a list of subscribers with an address and a phone number.

In software engineering, multitier architecture is a client–server architecture in which presentation, application processing and data management functions are physically separated. The most widespread use of multitier architecture is the three-tier architecture.

Peer-to-peer (P2P) computing or networking is a distributed application architecture that partitions tasks or workloads between peers. Peers are equally privileged, equipotent participants in the network, forming a peer-to-peer network of nodes.

A web server is computer software and underlying hardware that accepts requests via HTTP or its secure variant HTTPS. A user agent, commonly a web browser or web crawler, initiates communication by making a request for a web page or other resource using HTTP, and the server responds with the content of that resource or an error message. A web server can also accept and store resources sent from the user agent if configured to do so.

In computing, a file server is a computer attached to a network that provides a location for shared disk access, i.e. storage of computer files that can be accessed by workstations within a computer network. The term server highlights the role of the machine in the traditional client–server scheme, where the clients are the workstations using the storage. A file server does not normally perform computational tasks or run programs on behalf of its client workstations.

In computing, a server is a piece of computer hardware or software that provides functionality for other programs or devices, called "clients". This architecture is called the client–server model. Servers can provide various functionalities, often called "services", such as sharing data or resources among multiple clients or performing computations for a client. A single server can serve multiple clients, and a single client can use multiple servers. A client process may run on the same device or may connect over a network to a server on a different device. Typical servers are database servers, file servers, mail servers, print servers, web servers, game servers, and application servers.

Uploading refers to transmitting data from one computer system to another through means of a network. Common methods of uploading include: uploading via web browsers, FTP clients], and terminals (SCP/SFTP). Uploading can be used in the context of clients that send files to a central server. While uploading can also be defined in the context of sending files between distributed clients, such as with a peer-to-peer (P2P) file-sharing protocol like BitTorrent, the term file sharing is more often used in this case. Moving files within a computer system, as opposed to over a network, is called file copying.

In computer networking, a proxy server is a server application that acts as an intermediary between a client requesting a resource and the server providing that resource. It improves privacy, security, and performance in the process.

In software engineering, the terms frontend and backend refer to the separation of concerns between the presentation layer (frontend), and the data access layer (backend) of a piece of software, or the physical infrastructure or hardware. In the client–server model, the client is usually considered the frontend and the server is usually considered the backend, even when some presentation work is actually done on the server itself.

Network-attached storage (NAS) is a file-level computer data storage server connected to a computer network providing data access to a heterogeneous group of clients. The term "NAS" can refer to both the technology and systems involved, or a specialized device built for such functionality.

A Laboratory management system (LIMS), sometimes referred to as a laboratory information system (LIS) or laboratory management system (LMS), is a software-based solution with features that support a modern laboratory's operations. Key features include—but are not limited to—workflow and data tracking support, flexible architecture, and data exchange interfaces, which fully "support its use in regulated environments". The features and uses of a LIMS have evolved over the years from simple sample tracking to an enterprise resource planning tool that manages multiple aspects of laboratory informatics.

In computing, a client is a piece of computer hardware or software that accesses a service made available by a server as part of the client–server model of computer networks. The server is often on another computer system, in which case the client accesses the service by way of a network.

REST is a software architectural style that was created to guide the design and development of the architecture for the World Wide Web. REST defines a set of constraints for how the architecture of a distributed, Internet-scale hypermedia system, such as the Web, should behave. The REST architectural style emphasises uniform interfaces, independent deployment of components, the scalability of interactions between them, and creating a layered architecture to promote caching to reduce user-perceived latency, enforce security, and encapsulate legacy systems.

Bandwidth throttling consists in the limitation of the communication speed, of the ingoing (received) or outgoing (sent) data in a network node or in a network device.

A dedicated hosting service, dedicated server, or managed hosting service is a type of Internet hosting in which the client leases an entire server not shared with anyone else. This is more flexible than shared hosting, as organizations have full control over the server(s), including choice of operating system, hardware, etc.

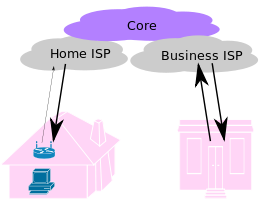

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each of which is a data center. Cloud computing relies on sharing of resources to achieve coherence and typically uses a pay-as-you-go model, which can help in reducing capital expenses but may also lead to unexpected operating expenses for users.

In computers, lag is delay (latency) between the action of the user (input) and the reaction of the server supporting the task, which has to be sent back to the client.

In computer networking, upstream refers to the direction in which data can be transferred from the client to the server (uploading). This differs greatly from downstream not only in theory and usage, but also in that upstream speeds are usually at a premium. Whereas downstream speed is important to the average home user for purposes of downloading content, uploads are used mainly for web server applications and similar processes where the sending of data is critical. Upstream speeds are also important to users of peer-to-peer software.

Firebase Cloud Messaging (FCM), formerly known as Google Cloud Messaging (GCM), is a cross-platform cloud service for messages and notifications for Android, iOS, and web applications, which as of May 2023 can be used at no cost. Firebase Cloud Messaging allows third-party application developers to send notifications or messages from servers hosted by FCM to users of the platform or end users.