This article needs additional citations for verification .(July 2018) |

Error level analysis (ELA) is the analysis of compression artifacts in digital data with lossy compression such as JPEG.

This article needs additional citations for verification .(July 2018) |

Error level analysis (ELA) is the analysis of compression artifacts in digital data with lossy compression such as JPEG.

When used, lossy compression is normally applied uniformly to a set of data, such as an image, resulting in a uniform level of compression artifacts.

Alternatively, the data may consist of parts with different levels of compression artifacts. This difference may arise from the different parts having been repeatedly subjected to the same lossy compression a different number of times, or the different parts having been subjected to different kinds of lossy compression. A difference in the level of compression artifacts in different parts of the data may therefore indicate that the data has been edited.

In the case of JPEG, even a composite with parts subjected to matching compressions will have a difference in the compression artifacts. [1]

In order to make the typically faint compression artifacts more readily visible, the data to be analyzed is subjected to an additional round of lossy compression, this time at a known, uniform level, and the result is subtracted from the original data under investigation. The resulting difference image is then inspected manually for any variation in the level of compression artifacts. In 2007, N. Krawetz denoted this method "error level analysis". [1]

Additionally, digital data formats such as JPEG sometimes include metadata describing the specific lossy compression used. If in such data the observed compression artifacts differ from those expected from the given metadata description, then the metadata may not describe the actual compressed data, and thus indicate that the data have been edited.

By its nature, data without lossy compression, such as a PNG image, cannot be subjected to error level analysis. Consequently, since editing could have been performed on data without lossy compression with lossy compression applied uniformly to the edited, composite data, the presence of a uniform level of compression artifacts does not rule out editing of the data.

Additionally, any non-uniform compression artifacts in a composite may be removed by subjecting the composite to repeated, uniform lossy compression. [2] Also, if the image color space is reduced to 256 colors or less, for example, by conversion to GIF, then error level analysis will generate useless results. [3]

More significant, the actual interpretation of the level of compression artifacts in a given segment of the data is subjective, and the determination of whether editing has occurred is therefore not robust. [1]

In May 2013, Dr Neal Krawetz used error level analysis on the 2012 World Press Photo of the Year and concluded on his Hacker Factor blog that it was "a composite" with modifications that "fail to adhere to the acceptable journalism standards used by Reuters, Associated Press, Getty Images, National Press Photographer's Association, and other media outlets". The World Press Photo organizers responded by letting two independent experts analyze the image files of the winning photographer and subsequently confirmed the integrity of the files. One of the experts, Hany Farid, said about error level analysis that "It incorrectly labels altered images as original and incorrectly labels original images as altered with the same likelihood". Krawetz responded by clarifying that "It is up to the user to interpret the results. Any errors in identification rest solely on the viewer". [4]

In May 2015, the citizen journalism team Bellingcat wrote that error level analysis revealed that the Russian Ministry of Defense had edited satellite images related to the Malaysia Airlines Flight 17 disaster. [5] In a reaction to this, image forensics expert Jens Kriese said about error level analysis: "The method is subjective and not based entirely on science", and that it is "a method used by hobbyists". [6] On his Hacker Factor Blog, the inventor of error level analysis Neal Krawetz criticized both Bellingcat's use of error level analysis as "misinterpreting the results" but also on several points Jens Kriese's "ignorance" regarding error level analysis. [7]

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

JPEG is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality. Since its introduction in 1992, JPEG has been the most widely used image compression standard in the world, and the most widely used digital image format, with several billion JPEG images produced every day as of 2015.

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. The different versions of the photo of the cat on this page show how higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques.

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates.

Portable Network Graphics is a raster-graphics file format that supports lossless data compression. PNG was developed as an improved, non-patented replacement for Graphics Interchange Format (GIF)—unofficially, the initials PNG stood for the recursive acronym "PNG's not GIF".

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data.

JPEG 2000 (JP2) is an image compression standard and coding system. It was developed from 1997 to 2000 by a Joint Photographic Experts Group committee chaired by Touradj Ebrahimi, with the intention of superseding their original JPEG standard, which is based on a discrete cosine transform (DCT), with a newly designed, wavelet-based method. The standardized filename extension is .jp2 for ISO/IEC 15444-1 conforming files and .jpx for the extended part-2 specifications, published as ISO/IEC 15444-2. The registered MIME types are defined in RFC 3745. For ISO/IEC 15444-1 it is image/jp2.

A compression artifact is a noticeable distortion of media caused by the application of lossy compression. Lossy data compression involves discarding some of the media's data so that it becomes small enough to be stored within the desired disk space or transmitted (streamed) within the available bandwidth. If the compressor cannot store enough data in the compressed version, the result is a loss of quality, or introduction of artifacts. The compression algorithm may not be intelligent enough to discriminate between distortions of little subjective importance and those objectionable to the user.

ICER is a wavelet-based image compression file format used by the NASA Mars rovers. ICER has both lossy and lossless compression modes.

Steganalysis is the study of detecting messages hidden using steganography; this is analogous to cryptanalysis applied to cryptography.

Generation loss is the loss of quality between subsequent copies or transcodes of data. Anything that reduces the quality of the representation when copying, and would cause further reduction in quality on making a copy of the copy, can be considered a form of generation loss. File size increases are a common result of generation loss, as the introduction of artifacts may actually increase the entropy of the data through each generation.

An image file format is a file format for a digital image. There are many formats that can be used, such as JPEG, PNG, and GIF. Most formats up until 2022 were for storing 2D images, not 3D ones. The data stored in an image file format may be compressed or uncompressed. If the data is compressed, it may be done so using lossy compression or lossless compression. For graphic design applications, vector formats are often used. Some image file formats support transparency.

A camera raw image file contains unprocessed or minimally processed data from the image sensor of either a digital camera, a motion picture film scanner, or other image scanner. Raw files are so named because they are not yet processed, and contain large amounts of potentially redundant data. Normally, the image is processed by a raw converter, in a wide-gamut internal color space where precise adjustments can be made before conversion to a viewable file format such as JPEG or PNG for storage, printing, or further manipulation. There are dozens of raw formats in use by different manufacturers of digital image capture equipment.

JBIG2 is an image compression standard for bi-level images, developed by the Joint Bi-level Image Experts Group. It is suitable for both lossless and lossy compression. According to a press release from the Group, in its lossless mode JBIG2 typically generates files 3–5 times smaller than Fax Group 4 and 2–4 times smaller than JBIG, the previous bi-level compression standard released by the Group. JBIG2 was published in 2000 as the international standard ITU T.88, and in 2001 as ISO/IEC 14492.

Lossless JPEG is a 1993 addition to JPEG standard by the Joint Photographic Experts Group to enable lossless compression. However, the term may also be used to refer to all lossless compression schemes developed by the group, including JPEG 2000 and JPEG-LS.

JPEG XR is an image compression standard for continuous tone photographic images, based on the HD Photo specifications that Microsoft originally developed and patented. It supports both lossy and lossless compression, and is the preferred image format for Ecma-388 Open XML Paper Specification documents.

PGF is a wavelet-based bitmapped image format that employs lossless and lossy data compression. PGF was created to improve upon and replace the JPEG format. It was developed at the same time as JPEG 2000 but with a focus on speed over compression ratio.

WebP is a raster graphics file format developed by Google intended as a replacement for JPEG, PNG, and GIF file formats. It supports both lossy and lossless compression, as well as animation and alpha transparency.

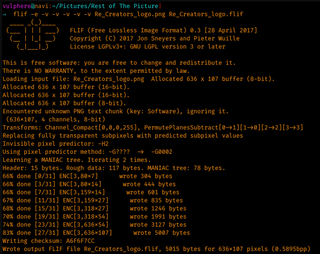

Free Lossless Image Format (FLIF) is a lossless image format claiming to outperform PNG, lossless WebP, lossless BPG and lossless JPEG 2000 in terms of compression ratio on a variety of inputs.

JPEG XT is an image compression standard which specifies backward-compatible extensions of the base JPEG standard.

We are hardly able to tell the tampered region from the unchanged one sometimes just by human visual perception of JPEG compression noise

If an image is resaved multiple times, then it may be entirely at a minimum error level, where more resaves do not alter the image. In this case, the ELA will return a black image and no modifications can be identified using this algorithm

Error level analysis of the images also reveal the images have been edited