Related Research Articles

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm.

Structured programming is a programming paradigm aimed at improving the clarity, quality, and development time of a computer program by making extensive use of the structured control flow constructs of selection (if/then/else) and repetition, block structures, and subroutines.

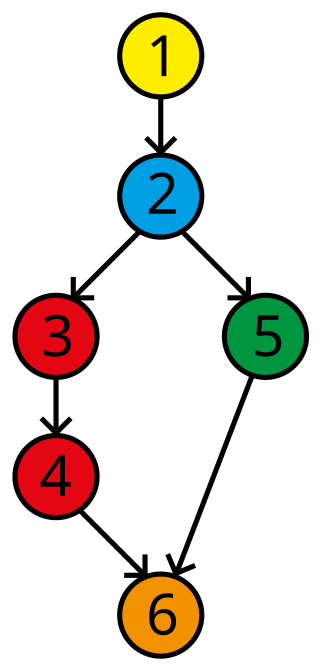

In computer science, a control-flow graph (CFG) is a representation, using graph notation, of all paths that might be traversed through a program during its execution. The control-flow graph was discovered by Frances E. Allen, who noted that Reese T. Prosser used boolean connectivity matrices for flow analysis before.

In computer science, control flow is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an imperative programming language from a declarative programming language.

Computability theory, also known as recursion theory, is a branch of mathematical logic, computer science, and the theory of computation that originated in the 1930s with the study of computable functions and Turing degrees. The field has since expanded to include the study of generalized computability and definability. In these areas, computability theory overlaps with proof theory and effective descriptive set theory.

In computational complexity theory, the Cook–Levin theorem, also known as Cook's theorem, states that the Boolean satisfiability problem is NP-complete. That is, it is in NP, and any problem in NP can be reduced in polynomial time by a deterministic Turing machine to the Boolean satisfiability problem.

In the mathematical theory of directed graphs, a graph is said to be strongly connected if every vertex is reachable from every other vertex. The strongly connected components of an arbitrary directed graph form a partition into subgraphs that are themselves strongly connected. It is possible to test the strong connectivity of a graph, or to find its strongly connected components, in linear time (that is, Θ(V + E)).

Data-flow analysis is a technique for gathering information about the possible set of values calculated at various points in a computer program. A program's control-flow graph (CFG) is used to determine those parts of a program to which a particular value assigned to a variable might propagate. The information gathered is often used by compilers when optimizing a program. A canonical example of a data-flow analysis is reaching definitions.

Cyclomatic complexity is a software metric used to indicate the complexity of a program. It is a quantitative measure of the number of linearly independent paths through a program's source code. It was developed by Thomas J. McCabe, Sr. in 1976.

The structured program theorem, also called the Böhm–Jacopini theorem, is a result in programming language theory. It states that a class of control-flow graphs can compute any computable function if it combines subprograms in only three specific ways. These are

- Executing one subprogram, and then another subprogram (sequence)

- Executing one of two subprograms according to the value of a boolean expression (selection)

- Repeatedly executing a subprogram as long as a boolean expression is true (iteration)

In compiler theory, a reaching definition for a given instruction is an earlier instruction whose target variable can reach the given one without an intervening assignment. For example, in the following code:

d1 : y := 3 d2 : x := y

Programming complexity is a term that includes software properties that affect internal interactions. Several commentators distinguish between the terms "complex" and "complicated". Complicated implies being difficult to understand, but ultimately knowable. Complex, by contrast, describes the interactions between entities. As the number of entities increases, the number of interactions between them increases exponentially, making it impossible to know and understand them all. Similarly, higher levels of complexity in software increase the risk of unintentionally interfering with interactions, thus increasing the risk of introducing defects when changing the software. In more extreme cases, it can make modifying the software virtually impossible.

In computational complexity theory, a gadget is a subunit of a problem instance that simulates the behavior of one of the fundamental units of a different computational problem. Gadgets are typically used to construct reductions from one computational problem to another, as part of proofs of NP-completeness or other types of computational hardness. The component design technique is a method for constructing reductions by using gadgets.

A decision-to-decision path, or DD-path, is a path of execution between two decisions. More recent versions of the concept also include the decisions themselves in their own DD-paths.

A signal-flow graph or signal-flowgraph (SFG), invented by Claude Shannon, but often called a Mason graph after Samuel Jefferson Mason who coined the term, is a specialized flow graph, a directed graph in which nodes represent system variables, and branches represent functional connections between pairs of nodes. Thus, signal-flow graph theory builds on that of directed graphs, which includes as well that of oriented graphs. This mathematical theory of digraphs exists, of course, quite apart from its applications.

The #P-completeness of 01-permanent, sometimes known as Valiant's theorem, is a mathematical proof about the permanent of matrices, considered a seminal result in computational complexity theory. In a 1979 scholarly paper, Leslie Valiant proved that the computational problem of computing the permanent of a matrix is #P-hard, even if the matrix is restricted to have entries that are all 0 or 1. In this restricted case, computing the permanent is even #P-complete, because it corresponds to the #P problem of counting the number of permutation matrices one can get by changing ones into zeroes.

A Software Defect Indicator is a pattern that can be found in source code that is strongly correlated with a software defect, an error or omission in the source code of a computer program that may cause it to malfunction. When inspecting the source code of computer programs, it is not always possible to identify defects directly, but there are often patterns, sometimes called anti-patterns, indicating that defects are present.

Goto is a statement found in many computer programming languages. It performs a one-way transfer of control to another line of code; in contrast a function call normally returns control. The jumped-to locations are usually identified using labels, though some languages use line numbers. At the machine code level, a goto is a form of branch or jump statement, in some cases combined with a stack adjustment. Many languages support the goto statement, and many do not.

In computational complexity theory, a problem is NP-complete when:

- It is a decision problem, meaning that for any input to the problem, the output is either "yes" or "no".

- When the answer is "yes", this can be demonstrated through the existence of a short solution.

- The correctness of each solution can be verified quickly and a brute-force search algorithm can find a solution by trying all possible solutions.

- The problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. In this sense, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. If we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified.

In software engineering, basis path testing, or structured testing, is a white box method for designing test cases. The method analyzes the control-flow graph of a program to find a set of linearly independent paths of execution. The method normally uses McCabe cyclomatic complexity to determine the number of linearly independent paths and then generates test cases for each path thus obtained. Basis path testing guarantees complete branch coverage, but achieves that without covering all possible paths of the control-flow graph – the latter is usually too costly. Basis path testing has been widely used and studied.

References

- 1 2 3 4 5 6 7 McCabe (December 1976). "A Complexity Measure". IEEE Transactions on Software Engineering (4): 308–320. doi:10.1109/tse.1976.233837. S2CID 9116234.

- ↑ http://www.mccabe.com/pdf/mccabe-nist235r.pdf [ bare URL PDF ]

- ↑ David Anthony Watt; William Findlay (2004). Programming language design concepts. John Wiley & Sons. p. 228. ISBN 978-0-470-85320-7.

- 1 2 S. Rao Kosaraju (December 1974). "Analysis of structured programs". Journal of Computer and System Sciences. 9 (3): 232–255. doi:10.1016/S0022-0000(74)80043-7.

- ↑ For more modern treatment of the same results see: Kozen, The Böhm–Jacopini Theorem is False, Propositionally

- ↑ McCabe footnotes the two definitions of on pages 315 and 317.

- ↑ Steven S. Muchnick (1997). Advanced Compiler Design Implementation . Morgan Kaufmann. pp. 196–197 and 215. ISBN 978-1-55860-320-2.