In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events.

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables. Many stochastic processes can be represented by time series. However, a stochastic process may be indexed by a continuous parameter, while a time series is a set of observations indexed by integers. A stochastic process may involve several related random variables.

A Markov chain is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). The Markov chain is named after the Russian mathematician Andrey Markov.
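The defining property of a discrete-time Markov chain can be illustrated with a short simulation. The sketch below is a minimal example, not a reference implementation: the function name `simulate_dtmc`, the dictionary representation of the transition probabilities, and the two-state weather model with its probabilities are all assumptions made for illustration.

```python
import random


def simulate_dtmc(transition, start, steps, rng=None):
    """Simulate a discrete-time Markov chain.

    transition maps each state to a dict {next_state: probability}.
    Returns the list of visited states, including the start state.
    """
    rng = rng or random.Random()
    path = [start]
    state = start
    for _ in range(steps):
        next_states = list(transition[state].keys())
        weights = list(transition[state].values())
        # The next state is drawn using only the current state (Markov property).
        state = rng.choices(next_states, weights=weights, k=1)[0]
        path.append(state)
    return path


# Hypothetical two-state weather model; the probabilities are made up.
weather = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}
path = simulate_dtmc(weather, "sunny", 10, random.Random(0))
print(path)
```

Passing a seeded `random.Random` instance makes a run reproducible; omitting it gives a fresh random path each time.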

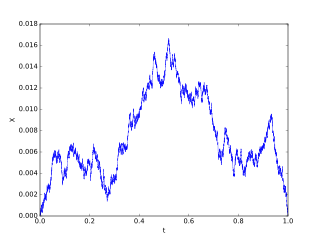

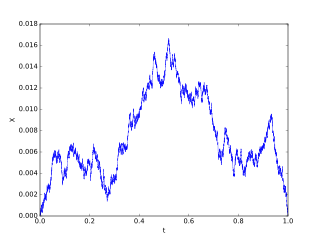

In mathematics, the Wiener process is a real-valued continuous-time stochastic process named in honor of American mathematician Norbert Wiener for his investigations on the mathematical properties of one-dimensional Brownian motion. It is often also called Brownian motion due to its historical connection with the physical process of the same name, originally observed by Scottish botanist Robert Brown. It is one of the best-known Lévy processes and occurs frequently in pure and applied mathematics, economics, quantitative finance, evolutionary biology, and physics.
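A Wiener process starts at 0 and has independent Gaussian increments whose variance equals the elapsed time, which suggests a simple way to approximate a sample path on a time grid. The following is a minimal sketch under that standard characterisation; the function name `simulate_wiener` and the grid parameters are illustrative assumptions.

```python
import math
import random


def simulate_wiener(t_end, n_steps, rng=None):
    """Approximate a Wiener path on [0, t_end] sampled at n_steps equal steps.

    Returns the list [W(0), W(dt), ..., W(t_end)] with W(0) = 0.
    """
    rng = rng or random.Random()
    dt = t_end / n_steps
    w = [0.0]
    for _ in range(n_steps):
        # Each increment is an independent N(0, dt) draw (standard deviation sqrt(dt)).
        w.append(w[-1] + rng.gauss(0.0, math.sqrt(dt)))
    return w


path = simulate_wiener(t_end=1.0, n_steps=1000, rng=random.Random(42))
print(len(path), path[0])
```

The values on the grid have exactly the right joint distribution; only the behaviour between grid points is left unspecified by this discretisation.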

In physics and mathematics, a random field is a random function over an arbitrary domain. That is, it is a function that takes on a random value at each point of its domain. It is also sometimes thought of as a synonym for a stochastic process with some restriction on its index set. By modern definitions, a random field is a generalization of a stochastic process in which the underlying parameter need no longer be real or integer valued "time" but can instead take values that are multidimensional vectors or points on some manifold.

Renewal theory is the branch of probability theory that generalizes the Poisson process for arbitrary holding times. Instead of exponentially distributed holding times, a renewal process may have any independent and identically distributed (IID) holding times that have finite mean. A renewal-reward process additionally has a random sequence of rewards incurred at each holding time, which are IID but need not be independent of the holding times.
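To make the idea of arbitrary holding times concrete, the sketch below counts renewals up to a time horizon for any user-supplied sampler of IID positive holding times. The function name `renewal_count` and the uniform holding-time distribution are assumptions chosen for illustration; the sampler must return strictly positive values for the loop to terminate.

```python
import random


def renewal_count(t_end, draw_holding_time):
    """Count renewals in [0, t_end].

    draw_holding_time is a zero-argument function returning i.i.d. positive
    holding times (any distribution with finite mean).
    """
    t = 0.0
    count = 0
    while True:
        t += draw_holding_time()
        if t > t_end:
            return count
        count += 1


rng = random.Random(0)
# Hypothetical holding times: Uniform(0.5, 1.5), which has mean 1.
n = renewal_count(10_000.0, lambda: rng.uniform(0.5, 1.5))
print(n / 10_000.0)  # long-run renewal rate, expected to be close to 1 / mean
```

Replacing the sampler with `lambda: rng.expovariate(rate)` recovers a Poisson process as the special case with exponential holding times.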

In probability theory, an empirical process is a stochastic process that describes the proportion of objects in a system in a given state. For a process in a discrete state space, a population continuous-time Markov chain, or Markov population model, is a process which counts the number of objects in a given state. In mean field theory, limit theorems are considered that generalise the central limit theorem for empirical measures. Applications of the theory of empirical processes arise in non-parametric statistics.

In the theory of probability, the Glivenko–Cantelli theorem, named after Valery Ivanovich Glivenko and Francesco Paolo Cantelli, determines the asymptotic behaviour of the empirical distribution function as the number of independent and identically distributed observations grows.
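The theorem states that the largest gap between the empirical distribution function and the true distribution function tends to zero almost surely. A minimal sketch of that gap for the special case of Uniform(0, 1) data, where the true distribution function is F(x) = x, is given below; the function name `sup_distance_uniform` and the choice of the uniform distribution are illustrative assumptions.

```python
import random


def sup_distance_uniform(sample):
    """Return sup_x |F_n(x) - x| for a sample assumed to come from Uniform(0, 1)."""
    xs = sorted(sample)
    n = len(xs)
    # F_n jumps from i/n to (i+1)/n at the i-th order statistic (0-based),
    # so the supremum is attained on one side of some jump.
    return max(max((i + 1) / n - x, x - i / n) for i, x in enumerate(xs))


rng = random.Random(0)
for n in (10, 100, 1_000, 10_000):
    sample = [rng.random() for _ in range(n)]
    print(n, sup_distance_uniform(sample))
```

The printed distances are random, but they should shrink as n grows, at a rate of roughly 1 over the square root of n.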

In probability theory, a random element is a generalization of the concept of random variable to more complicated spaces than the simple real line. The concept was introduced by Maurice Fréchet (1948) who commented that the “development of probability theory and expansion of area of its applications have led to necessity to pass from schemes where (random) outcomes of experiments can be described by number or a finite set of numbers, to schemes where outcomes of experiments represent, for example, vectors, functions, processes, fields, series, transformations, and also sets or collections of sets.”

In the theory of probability and statistics, the Dvoretzky–Kiefer–Wolfowitz–Massart inequality bounds how close an empirically determined distribution function will be to the distribution function from which the empirical samples are drawn. It is named after Aryeh Dvoretzky, Jack Kiefer, and Jacob Wolfowitz, who in 1956 proved the inequality with an unspecified multiplicative constant C in front of the exponential on the right-hand side. In 1990, Pascal Massart proved the inequality with the sharp constant C = 2, confirming a conjecture due to Birnbaum and McCarty.
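With the sharp constant, the inequality reads P(sup |F_n(x) - F(x)| > ε) ≤ 2 exp(-2nε²) for every ε > 0, where F_n is the empirical distribution function of n IID observations from F. Setting the right-hand side equal to a chosen level α and solving for ε gives the half-width of a uniform confidence band around F_n. The sketch below performs that calculation; the function name `dkw_epsilon` and the example values of n and α are assumptions for illustration.

```python
import math


def dkw_epsilon(n, alpha):
    """Half-width eps solving 2 * exp(-2 * n * eps**2) = alpha.

    With probability at least 1 - alpha, the true distribution function lies
    within eps of the empirical distribution function at every point.
    """
    if n <= 0 or not 0.0 < alpha < 1.0:
        raise ValueError("need n > 0 and 0 < alpha < 1")
    return math.sqrt(math.log(2.0 / alpha) / (2.0 * n))


eps = dkw_epsilon(n=100, alpha=0.05)
print(round(eps, 3))  # roughly 0.136
```

Because ε scales like 1 over the square root of n, quadrupling the sample size halves the width of the band.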

In mathematics, uniform integrability is an important concept in real analysis, functional analysis and measure theory, and plays a vital role in the theory of martingales. The definition used in measure theory is closely related to, but not identical to, the definition typically used in probability.

A random function – of either one variable, or two or more variables (a random field) – is called Gaussian if every finite-dimensional distribution is a multivariate normal distribution. Gaussian random fields on the sphere are useful when analysing

In statistics, the Khmaladze transformation is a mathematical tool used in constructing convenient goodness of fit tests for hypothetical distribution functions. More precisely, suppose X_1, ..., X_n are i.i.d., possibly multi-dimensional, random observations generated from an unknown probability distribution. A classical problem in statistics is to decide how well a given hypothetical distribution function F, or a given hypothetical parametric family of distribution functions {F_θ}, fits the set of observations. The Khmaladze transformation allows us to construct goodness of fit tests with desirable properties. It is named after Estate V. Khmaladze.

In probability theory, a Brownian excursion process is a stochastic process that is closely related to a Wiener process. Realisations of Brownian excursion processes are essentially just realisations of a Wiener process selected to satisfy certain conditions. In particular, a Brownian excursion process is a Wiener process conditioned to be positive and to take the value 0 at time 1. Alternatively, it is a Brownian bridge process conditioned to be positive. Brownian excursion processes are important because, among other reasons, they naturally arise as the limit process of a number of conditional functional central limit theorems.

In probability theory, the Komlós–Major–Tusnády approximation is an approximation of the empirical process by a Gaussian process constructed on the same probability space. It is named after Hungarian mathematicians János Komlós, Gábor Tusnády, and Péter Major.

In queueing theory, a discipline within the mathematical theory of probability, a heavy traffic approximation is the matching of a queueing model with a diffusion process under some limiting conditions on the model's parameters. The first such result was published by John Kingman, who showed that when the utilisation parameter of an M/M/1 queue is near 1, a scaled version of the queue length process can be accurately approximated by a reflected Brownian motion.

In probability theory, Rice's formula gives the average number of times per unit time that an ergodic stationary process X(t) crosses a fixed level u. Adler and Taylor describe the result as "one of the most important results in the applications of smooth stochastic processes." The formula is often used in engineering.
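In its usual form, the formula can be written as follows. The notation here is an assumption introduced for illustration: D_u denotes the number of times the process takes the value u in a unit of time, and p(x, x') denotes the joint probability density of X(t) and its derivative X'(t), which is assumed to exist.

```latex
\mathbb{E}[D_u] = \int_{-\infty}^{\infty} |x'| \, p(u, x') \, \mathrm{d}x'
```

Restricting the integral to x' > 0 and dropping the absolute value counts only upcrossings of the level u.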

In probability and statistics, point process notation comprises the range of mathematical notation used to symbolically represent random objects known as point processes, which are used in related fields such as stochastic geometry, spatial statistics and continuum percolation theory and frequently serve as mathematical models of random phenomena, representable as points, in time, space or both.

In probability, statistics and related fields, a Poisson point process is a type of random mathematical object that consists of points randomly located on a mathematical space. The Poisson point process is often called simply the Poisson process, but it is also called a Poisson random measure, Poisson random point field or Poisson point field. This point process has convenient mathematical properties, which has led to it being frequently defined in Euclidean space and used as a mathematical model for seemingly random processes in numerous disciplines such as astronomy, biology, ecology, geology, seismology, physics, economics, image processing, and telecommunications.
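For a homogeneous Poisson point process on a bounded region, the number of points is Poisson distributed with mean equal to the intensity times the area, and given that number the points are independently and uniformly located. The sketch below follows this two-step construction on a rectangle using only the standard library; the function names `poisson_count` and `poisson_points`, and the intensity and window size, are assumptions for illustration.

```python
import random


def poisson_count(mean, rng):
    """Sample a Poisson(mean) count as the number of unit-rate exponential
    arrivals that occur before time `mean`."""
    count = 0
    t = rng.expovariate(1.0)
    while t < mean:
        count += 1
        t += rng.expovariate(1.0)
    return count


def poisson_points(intensity, width, height, rng=None):
    """Sample a homogeneous Poisson point process on [0, width] x [0, height]."""
    rng = rng or random.Random()
    # Step 1: the total number of points is Poisson(intensity * area).
    n = poisson_count(intensity * width * height, rng)
    # Step 2: given n, the points are i.i.d. uniform on the rectangle.
    return [(rng.uniform(0.0, width), rng.uniform(0.0, height)) for _ in range(n)]


points = poisson_points(intensity=100.0, width=1.0, height=1.0, rng=random.Random(0))
print(len(points))
```

The counting loop takes time proportional to the mean, which is adequate for a sketch; a dedicated Poisson sampler would be preferable for very large intensities.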

In mathematics and probability, the Borell–TIS inequality is a result bounding the probability of a deviation of the uniform norm of a centred Gaussian stochastic process above its expected value. The result is named for Christer Borell and its independent discoverers Boris Tsirelson, Ildar Ibragimov, and Vladimir Sudakov. The inequality has been described as “the single most important tool in the study of Gaussian processes.”
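A common statement of the inequality is sketched below. The notation is an assumption introduced for illustration: f is a centred Gaussian process that is almost surely bounded on its index set T, and the bound holds for every u > 0.

```latex
\mathbb{P}\left( \|f\|_T - \mathbb{E}\|f\|_T > u \right)
  \le \exp\left( -\frac{u^2}{2\sigma_T^2} \right),
\qquad
\|f\|_T = \sup_{t \in T} f_t,
\quad
\sigma_T^2 = \sup_{t \in T} \mathbb{E}\left[ f_t^2 \right]
```

The bound depends on the process only through the largest pointwise variance, not on the size or structure of the index set.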