A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, or what is broadly called irrationality.

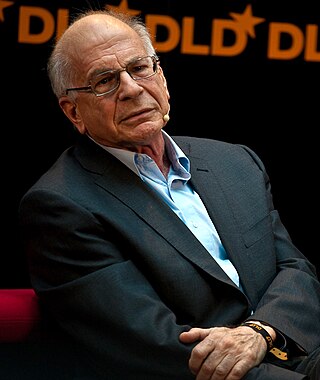

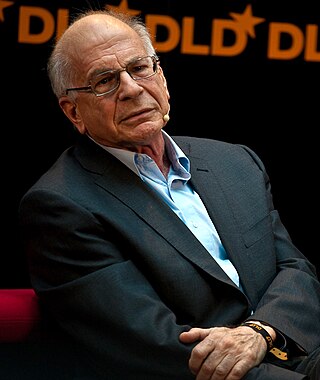

Daniel Kahneman (1934–2024) was an Israeli-American psychologist and economist notable for his work on the psychology of judgment and decision-making, as well as behavioral economics, for which he was awarded the 2002 Nobel Memorial Prize in Economic Sciences. His empirical findings challenge the assumption of human rationality prevailing in modern economic theory.

The availability heuristic, also known as availability bias, is a mental shortcut that relies on the immediate examples that come to mind when a person evaluates a specific topic, concept, method, or decision. It operates on the notion that if something can be recalled, it must be important, or at least more important than alternatives that are not as readily recalled. As a result, the heuristic is inherently biased toward recently acquired information.

The representativeness heuristic is used when making judgments about the probability of an event under uncertainty. It is one of a group of heuristics proposed in the early 1970s by psychologists Amos Tversky and Daniel Kahneman, who defined representativeness as "the degree to which [an event] (i) is similar in essential characteristics to its parent population, and (ii) reflects the salient features of the process by which it is generated". Heuristics are described as "judgmental shortcuts that generally get us where we need to go – and quickly – but at the cost of occasionally sending us off course." Heuristics are useful because they reduce effort and simplify decision-making.

The clustering illusion is the tendency to erroneously consider the inevitable "streaks" or "clusters" arising in small samples from random distributions to be non-random. The illusion is caused by a human tendency to underpredict the amount of variability likely to appear in a small sample of random or pseudorandom data.
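How long a streak "should" be in a short random sequence can be checked by simulation. The sketch below is illustrative only: the function names, the choice of 20 fair coin flips, and the fixed seed are assumptions made for the example, not part of any cited study.

```python
import random


def longest_run(flips):
    """Return the length of the longest streak of identical consecutive outcomes."""
    best = current = 0
    previous = None
    for flip in flips:
        # Extend the current streak, or start a new one of length 1.
        current = current + 1 if flip == previous else 1
        best = max(best, current)
        previous = flip
    return best


def mean_longest_run(n_flips=20, n_trials=10_000, seed=0):
    """Average longest streak over many simulated sequences of fair coin flips."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    total = 0
    for _ in range(n_trials):
        flips = [rng.choice("HT") for _ in range(n_flips)]
        total += longest_run(flips)
    return total / n_trials


if __name__ == "__main__":
    print(f"Average longest streak in 20 fair flips: {mean_longest_run():.2f}")
```

Under these assumptions the average longest streak is well above two or three flips, which is the kind of "cluster" that intuition tends to read as non-random.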

The peak–end rule is a psychological heuristic in which people judge an experience largely based on how they felt at its peak and at its end, rather than based on the total sum or average of every moment of the experience. The effect occurs regardless of whether the experience is pleasant or unpleasant. Under the heuristic, information other than the peak and the end of the experience is not lost, but it is not used. The unused information includes the net pleasantness or unpleasantness of the experience and how long it lasted. The peak–end rule is thereby a specific form of the more general extension neglect and duration neglect.
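A small numerical sketch can make the contrast with a moment-by-moment total concrete. It assumes, as a simplification, that the remembered rating is the plain average of the peak and the final moment. The discomfort ratings are hypothetical values chosen for illustration.

```python
def peak_end_score(ratings):
    """Simplified peak-end summary: mean of the most intense and the final rating.

    Assumes non-negative intensity ratings, so the peak is the maximum.
    """
    return (max(ratings) + ratings[-1]) / 2


def total_score(ratings):
    """Sum of every moment, which the peak-end rule says is largely ignored."""
    return sum(ratings)


# Hypothetical discomfort ratings (0 = none, 10 = worst), one per minute.
short_episode = [2, 4, 8]            # stops at its worst moment
long_episode = [2, 4, 8, 5, 3, 1]    # same start, then tapers off

for name, episode in [("short", short_episode), ("long", long_episode)]:
    print(name, "total:", total_score(episode), "peak-end:", peak_end_score(episode))
```

In this example the longer episode contains more total discomfort, yet its peak-end score is lower, so under the simplified model it would be remembered as the less unpleasant of the two.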

The conjunction fallacy is an inference from an array of particulars, in violation of the laws of probability, that a conjoint set of two or more conclusions is likelier than any single member of that same set. It is a type of formal fallacy.
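The probability law being violated can be written out directly. For any two events $A$ and $B$ (generic symbols introduced here for illustration), the conjunction is contained in each of its members, so it can never be more probable than either one:

$$
P(A \cap B) = P(A)\,P(B \mid A) \le P(A),
\qquad
P(A \cap B) = P(B)\,P(A \mid B) \le P(B).
$$

Both inequalities follow because a conditional probability is at most 1.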

The base rate fallacy, also called base rate neglect or base rate bias, is a type of fallacy in which people tend to ignore the base rate (the general prevalence) in favor of individuating information (information specific to the case at hand). Base rate neglect is a specific form of the more general extension neglect.
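Bayes' theorem shows how much the base rate matters. The sketch below uses a hypothetical screening test: the 1% prevalence, 90% sensitivity, and 9% false-positive rate are assumed values for illustration, and the function name is likewise invented for this example.

```python
def posterior_given_positive(base_rate, sensitivity, false_positive_rate):
    """P(condition | positive test) by Bayes' theorem."""
    true_positives = base_rate * sensitivity
    false_positives = (1 - base_rate) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Hypothetical numbers: 1% prevalence, 90% sensitivity, 9% false positives.
p = posterior_given_positive(base_rate=0.01, sensitivity=0.90, false_positive_rate=0.09)
print(f"P(condition | positive) = {p:.3f}")
```

With these assumed numbers, fewer than one in ten positive results corresponds to a true case. Judging from the test's accuracy alone and ignoring the 1% prevalence would suggest a far higher figure.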

The anchoring effect is a cognitive bias whereby an individual's decisions are influenced by a particular reference point or 'anchor'. Both numeric and non-numeric anchoring have been reported in research. In numeric anchoring, once the value of the anchor is set, subsequent arguments, estimates, etc. made by an individual may change from what they would have otherwise been without the anchor. For example, an individual may be more likely to purchase a car if it is placed alongside a more expensive model. Prices discussed in negotiations that are lower than the anchor may seem reasonable, perhaps even cheap, to the buyer, even if those prices are still higher than the actual market value of the car. As another example, when estimating the orbit of Mars, one might start with the Earth's orbit and then adjust upward until reaching a value that seems reasonable.

The planning fallacy is a phenomenon in which predictions about how much time will be needed to complete a future task display an optimism bias and underestimate the time needed. This phenomenon sometimes occurs regardless of the individual's knowledge that past tasks of a similar nature have taken longer to complete than generally planned. The bias affects predictions only about one's own tasks; when outside observers predict task completion times, they tend to exhibit a pessimistic bias, overestimating the time needed. The planning fallacy involves estimates of task completion times more optimistic than those encountered in similar projects in the past.

In psychology, a heuristic is an easy-to-compute procedure or rule of thumb that people use when forming beliefs, judgments or decisions. The familiarity heuristic was developed based on the discovery of the availability heuristic by psychologists Amos Tversky and Daniel Kahneman; it happens when the familiar is favored over novel places, people, or things. The familiarity heuristic can be applied to various situations that individuals experience in day-to-day life. When these situations appear similar to previous situations, especially if the individuals are experiencing a high cognitive load, they may revert to the way they felt or behaved before. This heuristic is useful in most situations and can be applied to many fields of knowledge; however, it has both advantages and disadvantages.

In psychology, the human mind is considered to be a cognitive miser due to the tendency of humans to think and solve problems in simpler and less effortful ways rather than in more sophisticated and effortful ways, regardless of intelligence. Just as a miser seeks to avoid spending money, the human mind often seeks to avoid spending cognitive effort. The cognitive miser theory is an umbrella theory of cognition that brings together previous research on heuristics and attributional biases to explain when and why people are cognitive misers.

Attribute substitution is a psychological process thought to underlie a number of cognitive biases and perceptual illusions. It occurs when an individual has to make a judgment that is computationally complex, and instead substitutes a more easily calculated heuristic attribute. This substitution is thought of as taking place in the automatic intuitive judgment system, rather than the more self-aware reflective system. Hence, when someone tries to answer a difficult question, they may actually answer a related but different question, without realizing that a substitution has taken place. This explains why individuals can be unaware of their own biases, and why biases persist even when the subject is made aware of them. It also explains why human judgments often fail to show regression toward the mean.

Heuristics are mental shortcuts that humans use to arrive at decisions. More broadly, they are simple strategies that humans, animals, organizations, and even machines use to quickly form judgments, make decisions, and find solutions to complex problems. Often this involves focusing on the most relevant aspects of a problem or situation to formulate a solution. While heuristic processes are used to find the answers and solutions that are most likely to work or be correct, they are not always right or the most accurate. Judgments and decisions based on heuristics are simply good enough to satisfy a pressing need in situations of uncertainty, where information is incomplete. In that sense they can differ from answers given by logic and probability.

Thinking, Fast and Slow is a 2011 book by psychologist Daniel Kahneman.

Insensitivity to sample size is a cognitive bias that occurs when people judge the probability of obtaining a sample statistic without respect to the sample size. For example, in one study subjects assigned the same probability to obtaining a mean height above six feet [183 cm] in samples of 10, 100, and 1,000 men. In fact, sample means vary more in smaller samples, so an extreme mean is more likely in a small sample than in a large one, but people may not expect this.
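The dependence on sample size can be sketched with the standard error of the mean. The example assumes heights are normally distributed with a population mean of 175 cm and a standard deviation of 7 cm; these two figures are illustrative assumptions and are not taken from the study.

```python
import math


def prob_mean_exceeds(threshold, pop_mean, pop_sd, n):
    """P(sample mean > threshold) for n independent draws from a normal population."""
    standard_error = pop_sd / math.sqrt(n)   # spread of the sample mean shrinks with n
    z = (threshold - pop_mean) / standard_error
    # Upper-tail probability of a standard normal, computed via the
    # complementary error function to stay accurate for very small tails.
    return 0.5 * math.erfc(z / math.sqrt(2))


# Assumed population parameters (illustrative only).
POP_MEAN_CM, POP_SD_CM = 175.0, 7.0

for n in (10, 100, 1000):
    p = prob_mean_exceeds(183.0, POP_MEAN_CM, POP_SD_CM, n)
    print(f"n = {n:4d}: P(mean height > 183 cm) = {p:.3g}")
```

Under these assumptions the probability falls by many orders of magnitude as the sample grows from 10 to 1,000, which is why assigning the same probability to all three sample sizes is an error.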

Scope neglect or scope insensitivity is a cognitive bias that occurs when the value placed on a problem does not scale multiplicatively with its size. Scope neglect is a specific form of extension neglect.

In cognitive psychology and decision science, conservatism or conservatism bias is the tendency to revise one's belief insufficiently when presented with new evidence. The bias describes human belief revision in which people give too much weight to the prior distribution and too little to new sample evidence, compared with Bayesian belief revision.
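The Bayesian benchmark against which conservatism is measured can be sketched with a two-bag example. The setup is an assumption for illustration: one bag holds 70% red chips, the other 30%, each bag is equally likely beforehand, and 8 red and 4 blue chips are drawn with replacement.

```python
def posterior_bag_a(prior_a, p_red_a, p_red_b, n_red, n_blue):
    """Posterior probability that the draws came from bag A, by Bayes' theorem.

    The binomial coefficient is the same for both bags and cancels out,
    so only the per-draw likelihoods are needed.
    """
    likelihood_a = p_red_a**n_red * (1 - p_red_a) ** n_blue
    likelihood_b = p_red_b**n_red * (1 - p_red_b) ** n_blue
    weighted_a = prior_a * likelihood_a
    weighted_b = (1 - prior_a) * likelihood_b
    return weighted_a / (weighted_a + weighted_b)


# Assumed setup: bag A is 70% red, bag B is 30% red, equal priors.
p = posterior_bag_a(prior_a=0.5, p_red_a=0.7, p_red_b=0.3, n_red=8, n_blue=4)
print(f"Bayesian posterior for bag A: {p:.3f}")
```

The Bayesian answer here is roughly 0.97. A conservative reasoner would move from the 0.5 prior in the right direction but stop well short of that value.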

Intuitive statistics, or folk statistics, refers to the cognitive phenomenon where organisms use data to make generalizations and predictions about the world. This can be a small amount of sample data or training instances, which in turn contribute to inductive inferences about either population-level properties, future data, or both. Inferences can involve revising hypotheses, or beliefs, in light of probabilistic data that inform and motivate future predictions. The informal tendency for cognitive animals to intuitively generate statistical inferences, when formalized with certain axioms of probability theory, constitutes statistics as an academic discipline.