Description

One of the characteristics of the H.264/AVC standard is the possibility of dividing a picture into regions called slices, each of which contains a sequence of macroblocks and can be decoded independently of the other slices. Macroblocks are normally processed in raster scan order: left to right along each row, starting with the top row. A frame can consist of a single slice, or of multiple slices for parallel processing and error resilience, because errors in one slice only propagate within that slice.

Flexible Macroblock Ordering (FMO) extends this by allowing macroblocks to be grouped and sent in any direction and order, so that slice groups can be shaped and non-contiguous. [1] FMO thus gives the encoder more freedom in deciding which slice each macroblock belongs to, in order to spread out errors [2] and keep errors in one part of the frame from compromising another part. FMO builds on another error-resilience tool, arbitrary slice ordering (ASO), because each slice group can be sent in any order and can optionally be decoded in order of receipt instead of in the usual scan order.

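Each slice still consists of macroblocks that are consecutive in scan order, but with FMO that scan only visits the macroblocks of the slice's own slice group, so a slice can cover a shaped or scattered area of the picture. Prediction from neighboring macroblocks (such as intra prediction and motion vector prediction) can use any adjacent macroblocks in the same slice, which may span the entire slice group; effectively, each slice group is treated as one or more shaped slices for the purposes of prediction.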
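In the standard, the macroblock that follows another within a slice is the next one in raster order that belongs to the same slice group (the NextMbAddress derivation). The sketch below mimics that rule, assuming the MBAmap is given as a flat Python list indexed by raster macroblock address; `next_mb_address` and `slice_macroblocks` are hypothetical helper names, not an existing API. It shows how a four-macroblock slice in a checkerboard map covers picture positions that are not adjacent in raster order.

```python
from typing import List


def next_mb_address(mb_to_slice_group: List[int], n: int) -> int:
    """Address of the macroblock that follows n within n's slice group.

    Scans forward in raster order, skipping macroblocks of other slice groups.
    Returns len(mb_to_slice_group) when n is the last macroblock of its group.
    """
    group = mb_to_slice_group[n]
    i = n + 1
    while i < len(mb_to_slice_group) and mb_to_slice_group[i] != group:
        i += 1
    return i


def slice_macroblocks(mb_to_slice_group: List[int], first_mb: int, count: int) -> List[int]:
    """Raster addresses covered by a slice of `count` macroblocks starting at first_mb."""
    addresses: List[int] = []
    addr = first_mb
    while addr < len(mb_to_slice_group) and len(addresses) < count:
        addresses.append(addr)
        addr = next_mb_address(mb_to_slice_group, addr)
    return addresses


# A 4x3-macroblock picture split into a two-group checkerboard (type 1 pattern).
WIDTH, HEIGHT = 4, 3
checkerboard = [(x + y) % 2 for y in range(HEIGHT) for x in range(WIDTH)]
print(slice_macroblocks(checkerboard, 0, 4))  # [0, 2, 5, 7]
```

Macroblocks 0, 2, 5 and 7 are consecutive within slice group 0 but alternate with group 1 in the picture, which is why a lost slice leaves holes rather than a band.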

Nearly all video codecs allow Region of Interest (RoI) coding, in which specific macroblocks are targeted to receive more or less quality; the canonical example is a newscaster's head being given a larger share of the bits than the background. FMO's primary benefit when combined with RoI coding is the ability to prevent errors in one region from propagating into another. For example, if a background slice is lost, the background may be corrupted for some time but the newscaster's face will not be affected, and it becomes simpler to send regular refreshes of the most important slice to make up for any errors there.

Slice groups used with FMO are not static and can change as circumstances change, for example to track a moving object. A structure called the MBAmap maps each macroblock to a slice group and can be updated at any time. A few default patterns are defined, such as Slice Interleaving (groups alternate every macroblock row) and Scattered Slices (groups alternate every macroblock). [3] With these patterns, FMO lets the decoder retain better localized visual context around lost macroblocks, so that error-concealment algorithms can reconstruct the missing content. [3]
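The standard does not specify error concealment; it is left to the decoder. As an illustration of why scattered slice groups help, here is a minimal spatial-concealment sketch, assuming each macroblock is reduced to a single value (such as its mean luma) and that one slice group of a two-group checkerboard has been lost. `conceal` is a hypothetical function, not part of any decoder API, and averaging the four neighbors is only one simple choice of concealment method.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]  # None marks a lost macroblock


def conceal(received: Grid) -> List[List[float]]:
    """Replace each lost macroblock by the mean of its received 4-neighbors.

    Each macroblock is reduced to one number to keep the sketch short;
    a lost macroblock with no received neighbor falls back to 0.0.
    """
    height, width = len(received), len(received[0])
    out: List[List[float]] = []
    for y in range(height):
        row: List[float] = []
        for x in range(width):
            value = received[y][x]
            if value is not None:
                row.append(value)
                continue
            neighbors: List[float] = []
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < height and 0 <= nx < width:
                    v = received[ny][nx]
                    if v is not None:
                        neighbors.append(v)
            row.append(sum(neighbors) / len(neighbors) if neighbors else 0.0)
        out.append(row)
    return out


# A smooth 4x4 "picture"; slice group 1 of a checkerboard map is lost.
original = [[float(x + 10 * y) for x in range(4)] for y in range(4)]
received: Grid = [[None if (x + y) % 2 else original[y][x] for x in range(4)]
                  for y in range(4)]
concealed = conceal(received)
print(concealed[2][1], original[2][1])  # 21.0 21.0
```

Because every lost macroblock in a checkerboard still has up to four received neighbors, even simple interpolation recovers smooth content well; with plain raster-order slices, a lost slice removes whole rows and leaves only the rows above and below to interpolate from.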

Certain advanced encoding techniques can simulate some of FMO's benefits. In H.264/AVC, P (predicted) and B (bi-predicted) frames may contain I (intra) macroblocks, which are coded without reference to other pictures. Rather than periodically refreshing the entire picture with I or IDR frames, an encoder can send I-blocks in any desired pattern while predicted blocks make up the rest of the picture. Although errors will still propagate horizontally within a slice, I-blocks can be sent in patterns, such as favoring a region of interest or a scattered checkerboard, to simulate shaped slice refreshes. With bidirectional communication to the client, lost slices can be refreshed as soon as their loss is detected, but this is not feasible for wider broadcast.
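Which macroblocks an encoder intra-codes is an encoder decision that the standard does not specify. A minimal planning sketch, assuming a fixed refresh period measured in frames: `refresh_phase` and `intra_mask` are hypothetical helpers that decide, for each frame, which macroblocks are sent as I-blocks so that the whole picture is refreshed once per period, either as a sweeping column band or as a scattered pattern.

```python
from typing import List


def refresh_phase(x: int, y: int, period: int, scattered: bool) -> int:
    """Frame offset (0..period-1) at which macroblock (x, y) is intra-coded.

    scattered=False: vertical bands that sweep across the picture.
    scattered=True: each row is shifted by one, so with period 2 the
    refreshed blocks form a checkerboard.
    """
    return (x + y) % period if scattered else x % period


def intra_mask(frame: int, width: int, height: int, period: int,
               scattered: bool) -> List[List[bool]]:
    """True where the macroblock should be sent as an I-block in this frame."""
    phase = frame % period
    return [[refresh_phase(x, y, period, scattered) == phase for x in range(width)]
            for y in range(height)]


# Over any `period` consecutive frames, each macroblock is refreshed exactly once.
WIDTH, HEIGHT, PERIOD = 6, 4, 3
counts = [[0] * WIDTH for _ in range(HEIGHT)]
for frame in range(PERIOD):
    mask = intra_mask(frame, WIDTH, HEIGHT, PERIOD, scattered=True)
    for y in range(HEIGHT):
        for x in range(WIDTH):
            counts[y][x] += mask[y][x]
print(all(c == 1 for row in counts for c in row))  # True
```

A region of interest could be refreshed more often by giving its macroblocks a shorter period than the background; that variation is left out to keep the sketch small.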

Tradeoffs

FMO is only allowed in the Baseline and Extended profiles. The much more common Constrained Baseline, Main, and all High profiles do not support it, and software that can create or decode it is rare. Some videoconferencing units use it; otherwise, the JM reference software is the main implementation that supports it. [4]
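A decoder or stream analyzer can tell from the sequence parameter set whether FMO may appear at all. A minimal sketch, assuming the profile_idc and constraint_set1_flag fields have already been parsed; `fmo_permitted` is a hypothetical helper that encodes only the rule stated above, using the standard's profile_idc values (Constrained Baseline is signaled as profile_idc 66 with constraint_set1_flag set).

```python
# profile_idc values defined in the H.264/AVC standard.
BASELINE, MAIN, EXTENDED, HIGH = 66, 77, 88, 100


def fmo_permitted(profile_idc: int, constraint_set1_flag: bool) -> bool:
    """True if a stream with these SPS fields may use more than one slice group."""
    if constraint_set1_flag:
        # The stream also claims conformance to Main profile constraints
        # (for profile_idc 66 this is Constrained Baseline), so FMO is excluded.
        return False
    return profile_idc in (BASELINE, EXTENDED)


print(fmo_permitted(BASELINE, False), fmo_permitted(BASELINE, True))  # True False
```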

Using multiple slices per picture always lowers coding efficiency, and FMO can lower it further. The more spread out the slice groups are, the worse it becomes, with checkerboard patterns (see type 1 below) being the worst. The goals of spreading out errors and of coding efficiency are directly in conflict. Because prediction is still allowed between neighboring macroblocks of the same slice, a contiguous region of one slice group acts nearly like a single slice; in some situations, where slice groups are shaped into a region of interest, this can even slightly improve efficiency over plain slices, but the benefit is rare and small. For this reason, FMO should only be used where packet losses are common and expected.
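The conflict between spreading out errors and prediction can be made concrete with a crude proxy: the share of left and top neighbor pairs whose macroblocks fall in the same slice group. This is a sketch under stated assumptions, not a bitrate model: it assumes each slice group is coded as a single slice, ignores the top-left and top-right neighbors and slice header overhead, and `usable_neighbor_fraction` is a hypothetical helper.

```python
from typing import Callable, Dict

GroupOf = Callable[[int, int], int]  # (x, y) of a macroblock -> slice group


def usable_neighbor_fraction(width: int, height: int, group_of: GroupOf) -> float:
    """Fraction of left/top neighbor pairs whose two macroblocks share a slice group.

    Assumes each slice group is one slice, so a left or top neighbor is usable
    for prediction exactly when it is in the same group.
    """
    usable = total = 0
    for y in range(height):
        for x in range(width):
            g = group_of(x, y)
            if x > 0:
                total += 1
                usable += group_of(x - 1, y) == g
            if y > 0:
                total += 1
                usable += group_of(x, y - 1) == g
    return usable / total if total else 1.0


patterns: Dict[str, GroupOf] = {
    "single slice group": lambda x, y: 0,
    "interleaved rows (type 0)": lambda x, y: y % 2,
    "checkerboard (type 1)": lambda x, y: (x + y) % 2,
    "center rectangle (type 2)": lambda x, y: 0 if 1 <= x <= 2 and 1 <= y <= 2 else 1,
}

for name, fn in patterns.items():
    print(f"{name}: {usable_neighbor_fraction(4, 4, fn):.2f}")
# single slice group: 1.00
# interleaved rows (type 0): 0.50
# checkerboard (type 1): 0.00
# center rectangle (type 2): 0.67
```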

Aside from increased complexity in encoding and decoding and lower efficiency, in-loop deblocking also creates a problem: slices can be sent in any order, but the deblocking filter operates on the edges between neighboring macroblocks and therefore requires the macroblocks on both sides of each edge to be decoded. Either the deblocker has to run in multiple passes whenever another slice is received, or an entire picture needs to be buffered before deblocking begins, possibly creating additional latency if slices are delayed long enough that the next picture's slices start arriving first. [3]
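The buffer-the-whole-picture strategy can be sketched as follows. `PictureBuffer` is a hypothetical decoder-side helper, assuming slices have already been decoded into (macroblock address, data) pairs and that the `deblock` callback stands in for the actual in-loop filter; it shows only the bookkeeping, not the filter itself.

```python
from typing import Callable, Dict, Iterable, Tuple


class PictureBuffer:
    """Collects the decoded macroblocks of one picture and deblocks it once complete.

    Slices may arrive in any order, and the deblocking filter works on edges
    between neighboring macroblocks, so filtering is deferred until every
    macroblock address of the picture has been decoded.
    """

    def __init__(self, num_mbs: int, deblock: Callable[[Dict[int, object]], None]) -> None:
        self.num_mbs = num_mbs
        self.deblock = deblock
        self.mbs: Dict[int, object] = {}
        self.deblocked = False

    def add_slice(self, decoded: Iterable[Tuple[int, object]]) -> bool:
        """Store (macroblock address, data) pairs; return True once the picture is deblocked."""
        for addr, data in decoded:
            self.mbs[addr] = data
        if not self.deblocked and len(self.mbs) == self.num_mbs:
            self.deblock(self.mbs)
            self.deblocked = True
        return self.deblocked


# Two checkerboard slices of a 2x2-macroblock picture, arriving out of order.
calls: list = []
buf = PictureBuffer(4, lambda mbs: calls.append(sorted(mbs)))
print(buf.add_slice([(1, "b"), (2, "c")]))  # False: picture still incomplete
print(buf.add_slice([(0, "a"), (3, "d")]))  # True: deblocked once, all 4 present
```

The latency cost mentioned above shows up directly here: nothing is filtered until the last slice arrives, so a practical decoder would also need a timeout or concealment path for slices that never arrive, which this sketch omits.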

Implementation details

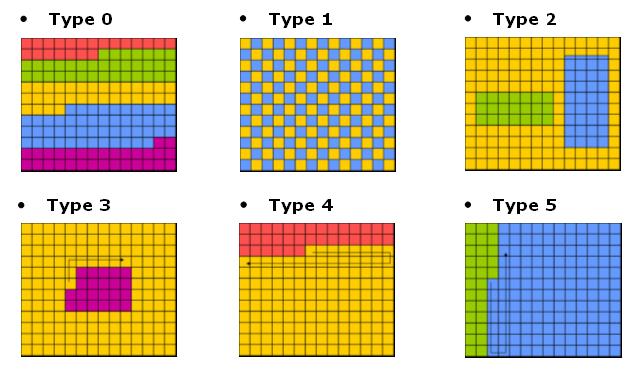

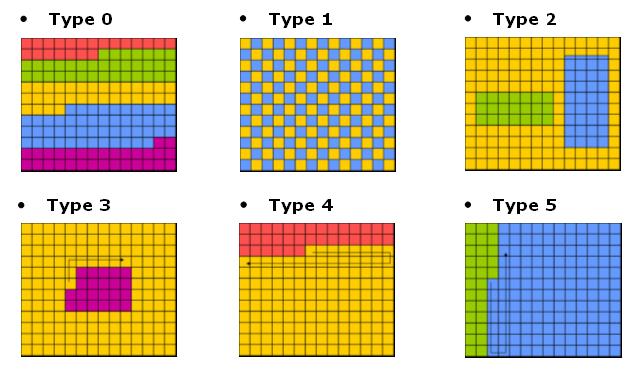

When using FMO, the picture can be divided into different scan patterns of macroblocks. Several built-in patterns are defined in the specification, signaled as values 0–5 of the slice_group_map_type syntax element in the picture parameter set, and one further option, signaled as 6, transmits an entire explicitly assigned MBAmap. The map type and a new MBAmap can be sent at any time. [5]

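- Interleaved slice groups, type 0: Runs of consecutive macroblocks, each with a signaled length, are assigned to the slice groups in turn; with a run length of one macroblock row, every row belongs to a different slice group, alternating through the groups. Only horizontal prediction is then possible, since the macroblock above belongs to another group.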

- Scattered or dispersed slice groups, type 1: Horizontally adjacent macroblocks always belong to different slice groups. With two slice groups this creates a checkerboard pattern; with more groups each row is shifted relative to the one above, and with four or more slice groups no macroblock touches another from the same slice group in any direction, including diagonally, maximizing error-concealment opportunities. Because the left and top neighbors always belong to other groups, little prediction between neighboring macroblocks is possible.

- Foreground groups, type 2: Each slice group except the last is a static rectangle, specified only by its top-left and bottom-right macroblocks, to create regions of interest. All areas not covered are assigned to the final group. Vector prediction is possible within each rectangle and within the background. Rectangles may overlap: the standard fills them from the highest-numbered group down to group 0, so an overlapped macroblock belongs to the lowest-numbered group covering it, the last one written in that order.

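- Changing groups, types 3–5 (box-out, raster scan and wipe): Similar to type 2, but dynamic: slice group 0 grows or shrinks from picture to picture in a cyclic way, and the remaining macroblocks form slice group 1. Only the growth rate and direction, sent in the picture parameter set, and the position in the cycle, sent as slice_group_change_cycle in each slice header, have to be known.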

- Explicit groups, type 6: An entire MBAmap is transmitted with groups arranged in any way the encoder wishes. Vector prediction is possible within any contiguous regions of the same group.

(In the above image, "Type 0" shows standard H.264 slices, not interleaved slice groups.)
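The built-in map types are defined in the standard as formulas that assign each macroblock address to a slice group. The sketch below follows those formulas for types 0, 1, 2 and 4, assuming a frame-coded picture in which each map unit is one macroblock (always the case in the Baseline profile, which has no interlaced coding). The function names are hypothetical; the parameters correspond to the picture parameter set fields run_length_minus1, top_left, bottom_right and slice_group_change_rate_minus1, plus the slice header field slice_group_change_cycle, with the minus-one offsets already added back.

```python
from typing import List, Sequence


def interleaved_map(pic_size: int, run_lengths: Sequence[int]) -> List[int]:
    """Type 0: runs of run_lengths[g] macroblocks assigned to groups 0, 1, ... in turn."""
    groups = [0] * pic_size
    i = 0
    while i < pic_size:
        for group, run in enumerate(run_lengths):
            if i >= pic_size:
                break
            for j in range(run):
                if i + j < pic_size:
                    groups[i + j] = group
            i += run
    return groups


def dispersed_map(width: int, height: int, num_groups: int) -> List[int]:
    """Type 1: each macroblock row is shifted, giving checkerboard-like patterns."""
    return [((i % width) + ((i // width) * num_groups) // 2) % num_groups
            for i in range(width * height)]


def foreground_map(width: int, height: int, num_groups: int,
                   top_left: Sequence[int], bottom_right: Sequence[int]) -> List[int]:
    """Type 2: rectangles for groups 0..num_groups-2; the rest is the last group.

    Rectangles are filled from the highest-numbered group down to group 0,
    so where rectangles overlap the lower-numbered group wins.
    """
    groups = [num_groups - 1] * (width * height)
    for g in range(num_groups - 2, -1, -1):
        y0, x0 = divmod(top_left[g], width)
        y1, x1 = divmod(bottom_right[g], width)
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                groups[y * width + x] = g
    return groups


def raster_scan_map(pic_size: int, change_cycle: int, change_rate: int,
                    direction_flag: int) -> List[int]:
    """Type 4: group 0 holds change_cycle * change_rate macroblocks in raster order,
    growing from the top-left (direction_flag=0) or bottom-right (direction_flag=1)."""
    units_in_group0 = min(change_cycle * change_rate, pic_size)
    upper_left = pic_size - units_in_group0 if direction_flag else units_in_group0
    return [direction_flag if i < upper_left else 1 - direction_flag
            for i in range(pic_size)]


print(interleaved_map(8, [3, 2]))  # [0, 0, 0, 1, 1, 0, 0, 0]
print(dispersed_map(4, 2, 4))      # [0, 1, 2, 3, 2, 3, 0, 1]
print(foreground_map(4, 3, 3, top_left=[5, 0], bottom_right=[6, 5]))
# [1, 1, 2, 2, 1, 0, 0, 2, 2, 2, 2, 2]
print(raster_scan_map(8, change_cycle=3, change_rate=1, direction_flag=1))
# [1, 1, 1, 1, 1, 0, 0, 0]
```

In the type 2 example, macroblock 5 lies in both rectangles and ends up in group 0; in the type 4 example, setting direction_flag to 1 makes group 0 grow from the bottom-right corner instead of the top-left.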

This page is based on a Wikipedia article. Text is available under the CC BY-SA 4.0 license; additional terms may apply. Images, videos and audio are available under their respective licenses.