A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs).

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical.

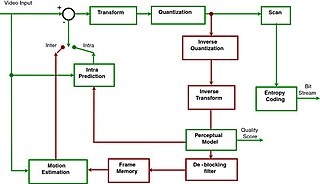

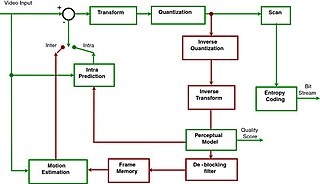

A video codec is software or hardware that compresses and decompresses digital video. In the context of video compression, codec is a portmanteau of encoder and decoder, while a device that only compresses is typically called an encoder, and one that only decompresses is a decoder.

Dynamic random-access memory is a type of random-access semiconductor memory that stores each bit of data in a memory cell consisting of a tiny capacitor and a transistor, both typically based on metal-oxide-semiconductor (MOS) technology. The capacitor can either be charged or discharged; these two states are taken to represent the two values of a bit, conventionally called 0 and 1. The electric charge on the capacitors slowly leaks off, so without intervention the data on the chip would soon be lost. To prevent this, DRAM requires an external memory refresh circuit which periodically rewrites the data in the capacitors, restoring them to their original charge. This refresh process is the defining characteristic of dynamic random-access memory, in contrast to static random-access memory (SRAM) which does not require data to be refreshed. Unlike flash memory, DRAM is volatile memory, since it loses its data quickly when power is removed. However, DRAM does exhibit limited data remanence.

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, Advanced Video Coding, is a video compression standard based on block-oriented, motion-compensated integer-DCT coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 91% of video industry developers as of September 2019. It supports resolutions up to and including 8K UHD.

In the field of video compression a video frame is compressed using different algorithms with different advantages and disadvantages, centered mainly around amount of data compression. These different algorithms for video frames are called picture types or frame types. The three major picture types used in the different video algorithms are I, P and B. They are different in the following characteristics:

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels, with separate instruction-specific and data-specific caches at level 1.

An inter frame is a frame in a video compression stream which is expressed in terms of one or more neighboring frames. The "inter" part of the term refers to the use of Inter frame prediction. This kind of prediction tries to take advantage from temporal redundancy between neighboring frames enabling higher compression rates.

H.262 or MPEG-2 Part 2 is a video coding format standardised and jointly maintained by ITU-T Study Group 16 Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG), and developed with the involvement of many companies. It is the second part of the ISO/IEC MPEG-2 standard. The ITU-T Recommendation H.262 and ISO/IEC 13818-2 documents are identical.

x264 is a free and open-source software library and a command-line utility developed by VideoLAN for encoding video streams into the H.264/MPEG-4 AVC video coding format. It is released under the terms of the GNU General Public License.

Α video codec is software or a device that provides encoding and decoding for digital video, and which may or may not include the use of video compression and/or decompression. Most codecs are typically implementations of video coding formats.

A deblocking filter is a video filter applied to decoded compressed video to improve visual quality and prediction performance by smoothing the sharp edges which can form between macroblocks when block coding techniques are used. The filter aims to improve the appearance of decoded pictures. It is a part of the specification for both the SMPTE VC-1 codec and the ITU H.264 codec.

Scalable Video Coding: (SVC) is the name for the Annex G extension of the H.264/MPEG-4 AVC video compression standard. SVC standardizes the encoding of a high-quality video bitstream that also contains one or more subset bitstreams. A subset video bitstream is derived by dropping packets from the larger video to reduce the bandwidth required for the subset bitstream. The subset bitstream can represent a lower spatial resolution, lower temporal resolution, or lower quality video signal. H.264/MPEG-4 AVC was developed jointly by ITU-T and ISO/IEC JTC 1. These two groups created the Joint Video Team (JVT) to develop the H.264/MPEG-4 AVC standard.

Flexible Macroblock Ordering or FMO is one of several error resilience tools defined in the Baseline profile of the H.264/MPEG-4 AVC video compression standard.

The Network Abstraction Layer (NAL) is a part of the H.264/AVC and HEVC video coding standards. The main goal of the NAL is the provision of a "network-friendly" video representation addressing "conversational" and "non conversational" applications. NAL has achieved a significant improvement in application flexibility relative to prior video coding standards.

High Efficiency Video Coding (HEVC), also known as H.265 and MPEG-H Part 2, is a video compression standard designed as part of the MPEG-H project as a successor to the widely used Advanced Video Coding. In comparison to AVC, HEVC offers from 25% to 50% better data compression at the same level of video quality, or substantially improved video quality at the same bit rate. It supports resolutions up to 8192×4320, including 8K UHD, and unlike the primarily 8-bit AVC, HEVC's higher fidelity Main10 profile has been incorporated into nearly all supporting hardware.

Michael J. Horowitz is an American electrical engineer who actively participated in the creation of the H.264/MPEG-4 AVC and H.265/HEVC video coding standards. He is co-inventor of flexible macroblock ordering (FMO) and tiles, essential features in H.264/MPEG-4 AVC and H.265/HEVC, respectively. He is Managing Partner of Applied Video Compression and has served on the Technical Advisory Boards of Vivox, Inc., Vidyo, Inc., and RipCode, Inc.

A video coding format is a content representation format for storage or transmission of digital video content. It typically uses a standardized video compression algorithm, most commonly based on discrete cosine transform (DCT) coding and motion compensation. Examples of video coding formats include H.262, MPEG-4 Part 2, H.264, HEVC (H.265), Theora, RealVideo RV40, VP9, and AV1. A specific software or hardware implementation capable of compression or decompression to/from a specific video coding format is called a video codec; an example of a video codec is Xvid, which is one of several different codecs which implements encoding and decoding videos in the MPEG-4 Part 2 video coding format in software.

Coding tree unit (CTU) is the basic processing unit of the High Efficiency Video Coding (HEVC) video standard and conceptually corresponds in structure to macroblock units that were used in several previous video standards. CTU is also referred to as largest coding unit (LCU).