Related Research Articles

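In statistical mechanics and mathematics, a Boltzmann distribution is a probability distribution or probability measure that gives the probability that a system will be in a certain state as a function of that state's energy and the temperature of the system. The distribution is expressed in the form:

$$p_i \propto \exp\left(-\frac{\varepsilon_i}{kT}\right)$$

where $p_i$ is the probability of the system being in state $i$, $\varepsilon_i$ is the energy of that state, $k$ is the Boltzmann constant, and $T$ is the absolute temperature of the system.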
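As a minimal sketch, the Boltzmann relation $p_i \propto \exp(-\varepsilon_i/kT)$ becomes a set of normalized probabilities once each weight is divided by the partition function $Z = \sum_j \exp(-\varepsilon_j/kT)$. The function name `boltzmann_probabilities` and the three energy levels in the usage lines are hypothetical and chosen only for illustration. Energies are in eV, so the Boltzmann constant is expressed in eV/K.

```python
import math

# Boltzmann constant in eV/K (CODATA 2018 value)
K_B_EV = 8.617333262e-5

def boltzmann_probabilities(energies_ev, temperature_k):
    """Return normalized Boltzmann probabilities p_i = exp(-E_i/kT) / Z."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    kt = K_B_EV * temperature_k
    # Shift by the lowest energy for numerical stability; the common factor cancels on normalization.
    e_min = min(energies_ev)
    weights = [math.exp(-(e - e_min) / kt) for e in energies_ev]
    z = sum(weights)  # partition function, up to the constant factor exp(e_min / kT)
    return [w / z for w in weights]

if __name__ == "__main__":
    # Hypothetical three-level system at room temperature
    levels = [0.0, 0.01, 0.05]
    for e, p in zip(levels, boltzmann_probabilities(levels, 300.0)):
        print(f"E = {e:.2f} eV -> p = {p:.3f}")
```

Subtracting the lowest energy before exponentiating does not change the result. It only prevents overflow or underflow when the energies are large compared with $kT$.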

A gluon is a type of massless elementary particle that mediates the strong interaction between quarks, acting as the exchange particle for the interaction. Gluons are vector bosons, meaning that they have a spin of 1. Through the strong interaction, gluons bind quarks into groups according to quantum chromodynamics (QCD), forming hadrons such as protons and neutrons.

In quantum mechanics, the particle in a box model describes the movement of a free particle in a small space surrounded by impenetrable barriers. The model is mainly used as a hypothetical example to illustrate the differences between classical and quantum systems. In classical systems, for example, a particle trapped inside a large box can move at any speed within the box and is no more likely to be found at one position than another. However, when the box becomes very narrow, quantum effects become important. The particle may only occupy certain positive energy levels and can never have zero energy, meaning that it can never "sit still". Additionally, it is more likely to be found at certain positions than at others, depending on its energy level. The particle may never be detected at certain positions, known as spatial nodes.
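This is a minimal sketch of the one-dimensional infinite well, assuming the standard textbook results $E_n = n^2 h^2 / (8 m L^2)$ and $\psi_n(x) = \sqrt{2/L}\,\sin(n\pi x/L)$ for $n = 1, 2, 3, \dots$. It shows the three features described above: discrete positive energies, no $n = 0$ state (zero energy), and interior nodes at $x = kL/n$. The function names are illustrative. The example uses an electron in a 1 nm box.

```python
import math

H = 6.62607015e-34        # Planck constant, J*s (exact SI value)
M_E = 9.1093837015e-31    # electron mass, kg (CODATA 2018)
EV = 1.602176634e-19      # joules per electronvolt (exact SI value)

def energy_level_ev(n, length_m, mass_kg=M_E):
    """E_n = n^2 h^2 / (8 m L^2) for an infinite 1D well, returned in eV."""
    if n < 1:
        raise ValueError("n must be a positive integer; there is no zero-energy state")
    return n**2 * H**2 / (8 * mass_kg * length_m**2) / EV

def wavefunction(n, x, length_m):
    """Normalized stationary state psi_n(x) = sqrt(2/L) sin(n*pi*x/L)."""
    if not 0.0 <= x <= length_m:
        return 0.0  # the particle cannot be found outside the impenetrable walls
    return math.sqrt(2.0 / length_m) * math.sin(n * math.pi * x / length_m)

def interior_nodes(n, length_m):
    """Positions strictly inside the box where psi_n vanishes: x = k*L/n, k = 1..n-1."""
    return [k * length_m / n for k in range(1, n)]

if __name__ == "__main__":
    L = 1e-9  # 1 nm box
    for n in (1, 2, 3):
        print(n, f"{energy_level_ev(n, L):.3f} eV", interior_nodes(n, L))
```

For a 1 nm box the ground-state energy of an electron comes out at roughly 0.376 eV. Each higher level scales as $n^2$, and level $n$ has $n - 1$ nodes inside the box.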

Asymmetry is the absence or violation of symmetry. Symmetry is an important property of both physical and abstract systems, and it may be displayed in precise terms or in more aesthetic terms. The absence or violation of a symmetry that is expected or desired can have important consequences for a system.

Hadronization is the process of forming hadrons out of quarks and gluons. There are two main branches of hadronization: quark-gluon plasma (QGP) transformation and colour string decay into hadrons. The transformation of quark-gluon plasma into hadrons is studied in lattice QCD numerical simulations and explored in relativistic heavy-ion experiments. Quark-gluon plasma hadronization occurred shortly after the Big Bang, when the quark-gluon plasma cooled down to the Hagedorn temperature, below which free quarks and gluons cannot exist. In string breaking, new hadrons form out of quarks, antiquarks and sometimes gluons spontaneously created from the vacuum.

Perturbative quantum chromodynamics is a subfield of particle physics in which the theory of strong interactions, quantum chromodynamics (QCD), is studied using the fact that the strong coupling constant is small in high-energy or short-distance interactions. This allows perturbation theory techniques to be applied. In most circumstances, making testable predictions with QCD is extremely difficult because of the infinite number of possible topologically inequivalent interactions. Over short distances, the coupling is small enough that this infinite number of terms can be approximated accurately by a finite number of terms. Although only applicable at high energies, this approach has resulted in the most precise tests of QCD to date.
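The statement that the coupling is small at high energies can be made concrete with the standard one-loop running coupling $\alpha_s(Q^2) = 12\pi / \left[(33 - 2n_f)\ln(Q^2/\Lambda^2)\right]$. This is a minimal sketch under simplifying assumptions. It uses one loop only, a fixed number of quark flavours $n_f = 5$ at every scale (no flavour thresholds), and an illustrative $\Lambda = 0.2$ GeV. It is not a precision determination.

```python
import math

def alpha_s_one_loop(q_gev, n_f=5, lambda_qcd_gev=0.2):
    """One-loop running strong coupling:
    alpha_s(Q^2) = 12*pi / ((33 - 2*n_f) * ln(Q^2 / Lambda^2)).
    Only meaningful for Q well above Lambda (the perturbative regime)."""
    if q_gev <= lambda_qcd_gev:
        raise ValueError("one-loop formula breaks down at Q <= Lambda (non-perturbative region)")
    beta0 = 33 - 2 * n_f
    return 12 * math.pi / (beta0 * math.log(q_gev**2 / lambda_qcd_gev**2))

if __name__ == "__main__":
    # The coupling shrinks logarithmically as the momentum scale Q grows (asymptotic freedom)
    for q in (2.0, 10.0, 91.19, 1000.0):
        print(f"Q = {q:7.2f} GeV -> alpha_s ~ {alpha_s_one_loop(q):.3f}")
```

Because each extra order in the perturbative series carries another power of $\alpha_s$, the shrinking coupling at large $Q$ is what lets a truncated series give accurate predictions. Near $Q \approx \Lambda$ the formula blows up, which signals the breakdown of perturbation theory.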

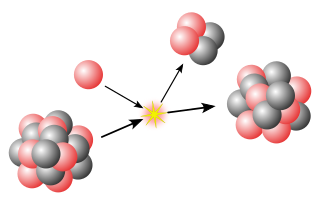

Cluster decay, also named heavy particle radioactivity, heavy ion radioactivity or heavy cluster decay, is a rare type of nuclear decay in which an atomic nucleus emits a small "cluster" of neutrons and protons, more than in an alpha particle but fewer than in a typical binary fission fragment. Ternary fission into three fragments also produces products in the cluster size range.

A jet is a narrow cone of hadrons and other particles produced by the hadronization of quarks and gluons in a particle physics or heavy ion experiment. Particles carrying a color charge, i.e. quarks and gluons, cannot exist in free form because of quantum chromodynamics (QCD) confinement, which only allows for colorless states. When protons collide at high energies, their color-charged components each carry away some of the color charge. In accordance with confinement, these fragments create other colored objects around them to form colorless hadrons. The ensemble of these objects is called a jet, since the fragments all tend to travel in the same direction, forming a narrow "jet" of particles. Jets are measured in particle detectors and studied in order to determine the properties of the original quarks.
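The text does not name a jet-finding algorithm. This sketch swaps in the anti-kt algorithm, a widely used sequential-recombination method, in a naive O(n^3) form to show how nearby particles are grouped into jets. Production analyses use optimized libraries such as FastJet. Particles are four-vectors `(px, py, pz, E)` with nonzero transverse momentum, and four-vectors are added when particles merge (E-scheme). The helper `massless` and the radius R = 0.4 are illustrative choices.

```python
import math

def _kinematics(p):
    """Return (pt, rapidity, phi) of a four-vector p = (px, py, pz, E)."""
    px, py, pz, e = p
    return math.hypot(px, py), 0.5 * math.log((e + pz) / (e - pz)), math.atan2(py, px)

def _delta_r2(a, b):
    """Squared distance in the (rapidity, azimuth) plane, with the azimuth difference wrapped."""
    _, ya, phia = _kinematics(a)
    _, yb, phib = _kinematics(b)
    dphi = (phia - phib + math.pi) % (2 * math.pi) - math.pi
    return (ya - yb) ** 2 + dphi ** 2

def anti_kt(particles, radius=0.4):
    """Naive anti-kt clustering with four-vector (E-scheme) recombination; returns jet four-vectors."""
    pseudojets = [tuple(p) for p in particles]
    jets = []
    while pseudojets:
        best = None  # (distance, i, j); j is None when the beam distance d_iB is smallest
        for i, pi in enumerate(pseudojets):
            inv_pt2_i = 1.0 / _kinematics(pi)[0] ** 2
            if best is None or inv_pt2_i < best[0]:
                best = (inv_pt2_i, i, None)  # d_iB = 1 / pt_i^2
            for j in range(i + 1, len(pseudojets)):
                pj = pseudojets[j]
                inv_pt2_j = 1.0 / _kinematics(pj)[0] ** 2
                dij = min(inv_pt2_i, inv_pt2_j) * _delta_r2(pi, pj) / radius ** 2
                if dij < best[0]:
                    best = (dij, i, j)
        _, i, j = best
        if j is None:
            jets.append(pseudojets.pop(i))  # nothing closer than the beam: promote to a final jet
        else:
            merged = tuple(a + b for a, b in zip(pseudojets[i], pseudojets[j]))
            pseudojets.pop(j)  # j > i, so removing j first keeps index i valid
            pseudojets.pop(i)
            pseudojets.append(merged)
    return jets

def massless(pt, y, phi):
    """Hypothetical helper: build a massless four-vector from (pt, rapidity, azimuth)."""
    return (pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(y), pt * math.cosh(y))

if __name__ == "__main__":
    # Two well-separated sprays of particles should come out as two jets
    event = [massless(50, 0.0, 0.0), massless(20, 0.1, 0.1),
             massless(40, 0.5, 3.0), massless(10, 0.6, 3.1)]
    for jet in anti_kt(event):
        print(f"jet pt = {math.hypot(jet[0], jet[1]):.2f}")
```

The $1/p_T^2$ weighting makes hard particles absorb soft ones nearby first. This tends to give the roughly cone-shaped jets described above.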

In particle physics, the Lund string model is a phenomenological model of hadronization. It treats all but the highest-energy gluons as field lines, which are attracted to each other due to the gluon self-interaction and so form a narrow tube of strong color field. This contrasts with electric or magnetic field lines, which spread out because the carrier of the electromagnetic force, the photon, does not interact with itself.
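A uniform tube of color field stores a fixed amount of energy per unit length, so the energy grows linearly with the separation $r$ of its endpoints, $E = \kappa r$. Spread-out electromagnetic field lines instead give an interaction energy that falls off as $1/r$. This is a minimal sketch of that contrast. It assumes a string tension $\kappa \approx 1$ GeV/fm (the commonly quoted order of magnitude) and ignores the short-distance Coulomb-like part of the real quark-antiquark interaction. The function names are illustrative.

```python
HBAR_C_GEV_FM = 0.1973269804  # hbar * c in GeV * fm
ALPHA_EM = 1 / 137.035999     # fine-structure constant

def flux_tube_energy_gev(r_fm, kappa_gev_per_fm=1.0):
    """Energy stored in a uniform color flux tube of length r: E = kappa * r."""
    return kappa_gev_per_fm * r_fm

def coulomb_energy_gev(r_fm):
    """Magnitude of the Coulomb energy between two unit charges, alpha * hbar * c / r."""
    return ALPHA_EM * HBAR_C_GEV_FM / r_fm

if __name__ == "__main__":
    for r in (0.5, 1.0, 2.0, 4.0):
        print(f"r = {r:3.1f} fm: tube {flux_tube_energy_gev(r):5.2f} GeV, "
              f"Coulomb {coulomb_energy_gev(r):.5f} GeV")
```

Because the tube energy keeps rising as the endpoints separate, it eventually becomes favorable for the string to break by creating new quark-antiquark pairs, which is the string-breaking picture of hadronization.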

ALICE is one of nine detector experiments at the Large Hadron Collider at CERN. The experiment is designed to study the conditions that are thought to have existed immediately after the Big Bang by measuring properties of quark-gluon plasma.

Event generators are software libraries that generate simulated high-energy particle physics events. They randomly generate events like those produced in particle accelerators, collider experiments or the early universe. Events come in different types called processes, as discussed in the article on automatic calculation of particle interaction or decay.
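The core idea of randomly generating events with the right distribution can be illustrated with hit-or-miss (accept-reject) sampling. This is a toy sketch, not how any particular generator is implemented. It assumes the leading-order QED angular distribution for $e^+e^- \to \mu^+\mu^-$, $d\sigma/d\cos\theta \propto 1 + \cos^2\theta$, and ignores Z-boson exchange. The function name `sample_cos_theta` is illustrative.

```python
import random

def sample_cos_theta(n_events, seed=None):
    """Generate unweighted events (values of cos(theta)) distributed as 1 + cos^2(theta)
    on [-1, 1], using accept-reject sampling against a flat envelope."""
    rng = random.Random(seed)
    f_max = 2.0  # maximum of 1 + c^2 on [-1, 1]
    events = []
    while len(events) < n_events:
        c = rng.uniform(-1.0, 1.0)                 # propose cos(theta) uniformly
        if rng.uniform(0.0, f_max) < 1.0 + c * c:  # accept with probability f(c) / f_max
            events.append(c)
    return events

if __name__ == "__main__":
    sample = sample_cos_theta(100000, seed=1)
    # For 1 + c^2 the expected mean of c^2 is 0.4 (a flat distribution would give 1/3)
    print(sum(c * c for c in sample) / len(sample))
```

With a flat envelope about two thirds of the proposals are accepted. Real generators use envelopes tailored to sharply peaked matrix elements to keep this efficiency high.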

In particle physics, the parton model is a model of hadrons, such as protons and neutrons, proposed by Richard Feynman. It is useful for interpreting the cascades of radiation produced from quantum chromodynamics (QCD) processes and interactions in high-energy particle collisions.

In particle physics, particle decay is the spontaneous process of one unstable subatomic particle transforming into multiple other particles. The rest masses of the particles created in this process must add up to less than the rest mass of the original particle, although the total energy and momentum of the system (and hence its invariant mass) are conserved. A particle is unstable if there is at least one allowed final state that it can decay into. Unstable particles will often have multiple ways of decaying, each with its own associated probability. Decays are mediated by one or several fundamental forces. The particles in the final state may themselves be unstable and subject to further decay.
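Two points from the paragraph above can be made concrete: the kinematic condition for a decay to be allowed, and the choice among several decay channels with different probabilities. This is a minimal sketch. It uses the standard two-body formula for the daughter momentum in the parent rest frame, $p^* = \sqrt{[M^2 - (m_1 + m_2)^2][M^2 - (m_1 - m_2)^2]}/(2M)$, in natural units. The channel names and branching ratios in the usage lines are made up for illustration.

```python
import math
import random

def two_body_momentum(m_parent, m1, m2):
    """Daughter momentum in the parent rest frame for parent -> 1 + 2 (natural units).
    Returns None when the decay is kinematically forbidden (m1 + m2 > M)."""
    if m1 + m2 > m_parent:
        return None
    term = (m_parent**2 - (m1 + m2) ** 2) * (m_parent**2 - (m1 - m2) ** 2)
    return math.sqrt(max(term, 0.0)) / (2 * m_parent)

def choose_decay_mode(branching_ratios, rng=random):
    """Pick one decay channel with probability proportional to its branching ratio."""
    modes = list(branching_ratios)
    weights = [branching_ratios[m] for m in modes]
    return rng.choices(modes, weights=weights, k=1)[0]

if __name__ == "__main__":
    # Charged pion -> muon + (massless) neutrino, masses in MeV
    print(two_body_momentum(139.57, 105.66, 0.0))  # about 29.79 MeV
    # Hypothetical particle with two made-up channels
    print(choose_decay_mode({"channel_1": 0.7, "channel_2": 0.3}))
```

A return value of `None` marks a final state that the particle cannot decay into. A result of zero means the daughters are produced exactly at threshold.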

PYTHIA is a computer simulation program for particle collisions at very high energies in particle accelerators.

In high-energy physics, jet quenching is a phenomenon that can occur in the collision of ultra-high-energy particles. In general, the collision of high-energy particles can produce jets of elementary particles that emerge from these collisions. Collisions of ultra-relativistic heavy-ion beams create a hot and dense medium comparable to the conditions in the early universe. Jets produced in these collisions then interact strongly with the medium, which markedly reduces their energy. This energy reduction is called "jet quenching".
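The text does not say how the energy reduction is quantified. A standard observable, swapped in here, is the nuclear modification factor $R_{AA}$. It divides the particle or jet yield in nucleus-nucleus collisions by the proton-proton yield scaled by the average number of binary nucleon-nucleon collisions $\langle N_{coll} \rangle$. This is a minimal sketch, and every yield and $N_{coll}$ value in it is hypothetical, not measured data.

```python
def nuclear_modification_factor(yield_aa, yield_pp, n_coll):
    """R_AA = yield(A+A) / (<N_coll> * yield(p+p)), evaluated bin by bin in transverse momentum.
    R_AA ~ 1: A+A behaves like a superposition of independent nucleon-nucleon collisions.
    R_AA < 1: suppression, the experimental signature associated with jet quenching."""
    if n_coll <= 0:
        raise ValueError("n_coll must be positive")
    if len(yield_aa) != len(yield_pp):
        raise ValueError("yield lists must have the same binning")
    return [aa / (n_coll * pp) for aa, pp in zip(yield_aa, yield_pp)]

if __name__ == "__main__":
    # Hypothetical yields in three pT bins and a hypothetical <N_coll>
    print(nuclear_modification_factor([50000.0, 3000.0, 400.0], [100.0, 10.0, 1.0], 1000.0))
```

Values well below 1 mean that fewer high-momentum particles emerge than independent collisions would produce. This is how the energy loss in the medium shows up in measurements.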

The automatic calculation of particle interaction or decay is part of the computational particle physics branch. It refers to computing tools that help calculate the complex particle interactions studied in high-energy physics, astroparticle physics and cosmology. The goal of the automation is to handle the full sequence of calculations in an automatic (programmed) way: from the Lagrangian expression describing the physics model up to the cross-section values and the event generator software.

In quantum physics, a quantum state is a mathematical entity that embodies the knowledge of a quantum system. Quantum mechanics specifies the construction, evolution, and measurement of a quantum state. The result is a prediction for the system represented by the state. Knowledge of the quantum state, and the rules for the system's evolution in time, exhausts all that can be known about a quantum system.
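The construction, evolution, and measurement of a quantum state can be sketched for the simplest case, a two-level system. This example assumes $\hbar = 1$ and a Hamiltonian $H = (\omega/2)\,\sigma_x$, whose time-evolution operator has the closed form $U = \cos(\omega t/2)\,I - i\sin(\omega t/2)\,\sigma_x$. Outcome probabilities follow the Born rule $|a_k|^2$. The function names are illustrative.

```python
import math

def normalize(state):
    """Construction: scale a list of complex amplitudes to unit norm."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in state))
    if norm == 0:
        raise ValueError("the zero vector is not a valid quantum state")
    return [a / norm for a in state]

def evolve_sigma_x(state, omega_t):
    """Evolution: apply U = cos(wt/2) I - i sin(wt/2) sigma_x to [a0, a1] (hbar = 1)."""
    c, s = math.cos(omega_t / 2), math.sin(omega_t / 2)
    a0, a1 = state
    return [c * a0 - 1j * s * a1, c * a1 - 1j * s * a0]

def measurement_probabilities(state):
    """Measurement: Born-rule probability |amplitude|^2 for each basis outcome."""
    return [abs(a) ** 2 for a in state]

if __name__ == "__main__":
    psi = normalize([1.0, 0.0])
    for wt in (0.0, math.pi / 2, math.pi):
        print(wt, measurement_probabilities(evolve_sigma_x(psi, wt)))
```

Starting in state 0, the probability of finding state 1 is $\sin^2(\omega t/2)$. It is 0.5 at $\omega t = \pi/2$ and 1 at $\omega t = \pi$. Because $U$ is unitary, the total probability stays 1 throughout.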

Victor Sergeevich Fadin is a Russian physicist, well known for his contributions to theoretical physics and particle physics. He is a principal researcher at the Budker Institute of Nuclear Physics (BINP) and a professor of theoretical physics at Novosibirsk State University (NSU).

The structure function, like the fragmentation function, is a probability density function in physics. It is somewhat analogous to the structure factor in solid-state physics and to the form factor.

In high-energy particle physics, specifically in hadron-beam scattering experiments, transverse momentum distributions (TMDs) are the distributions of the hadron's quark or gluon momenta that are perpendicular to the momentum transfer between the beam and the hadron. Specifically, they are probability distributions to find inside the hadron a parton with a transverse momentum $k_T$ and longitudinal momentum fraction $x$. TMDs provide information on the confined motion of quarks and gluons inside the hadron and complement the information on the hadron structure provided by parton distribution functions (PDFs) and generalized parton distributions (GPDs). Together, TMDs and PDFs provide information on the momentum distribution of the quarks, while GPDs provide information on their spatial distribution.
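In the simplest parton-model picture, integrating a TMD over the transverse momentum $k_T$ gives back the ordinary collinear PDF $f(x)$. That is one way to see how the two kinds of distributions complement each other. This sketch uses a Gaussian ansatz, $f(x, k_T) = f(x)\,e^{-k_T^2/\langle k_T^2\rangle}/(\pi\langle k_T^2\rangle)$, which builds that property in by construction, and checks it numerically. The Gaussian form, the width $\langle k_T^2\rangle = 0.25$ GeV$^2$ and the PDF value are illustrative assumptions. Real TMDs also depend on scale and are extracted from data.

```python
import math

def gaussian_tmd(f_x, kt_gev, mean_kt2_gev2=0.25):
    """Gaussian ansatz for an unpolarized TMD at fixed x:
    f(x, k_T) = f(x) * exp(-k_T^2 / <k_T^2>) / (pi * <k_T^2>)."""
    return f_x * math.exp(-kt_gev**2 / mean_kt2_gev2) / (math.pi * mean_kt2_gev2)

def integrate_over_kt(f_x, mean_kt2_gev2=0.25, kt_max_gev=5.0, n_steps=20000):
    """Integrate the TMD over the 2D transverse plane, d^2k_T = 2*pi*k_T dk_T,
    using the midpoint rule truncated at kt_max (the Gaussian tail beyond is negligible)."""
    dk = kt_max_gev / n_steps
    total = 0.0
    for i in range(n_steps):
        k = (i + 0.5) * dk
        total += 2 * math.pi * k * gaussian_tmd(f_x, k, mean_kt2_gev2) * dk
    return total

if __name__ == "__main__":
    # Hypothetical collinear PDF value f(x) = 0.7 at some fixed x
    print(integrate_over_kt(0.7))  # recovers approximately 0.7
```

The width $\langle k_T^2 \rangle$ is the extra information a TMD carries. It describes how strongly the partons' motion is spread in the transverse plane, which the collinear PDF integrates away.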
