Related Research Articles

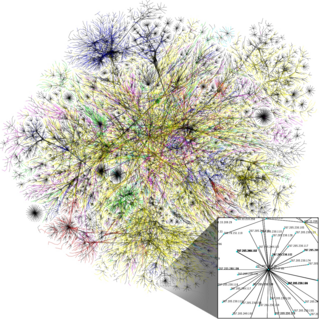

The history of the Internet has its origin in the efforts of scientists and engineers to build and interconnect computer networks. The Internet Protocol Suite, the set of rules used to communicate between networks and devices on the Internet, arose from research and development in the United States and involved international collaboration, particularly with researchers in the United Kingdom and France.

The Internet protocol suite, commonly known as TCP/IP, is a framework for organizing the set of communication protocols used in the Internet and similar computer networks according to functional criteria. The foundational protocols in the suite are the Transmission Control Protocol (TCP), the User Datagram Protocol (UDP), and the Internet Protocol (IP). Early versions of this networking model were known as the Department of Defense (DoD) model because the research and development were funded by the United States Department of Defense through DARPA.

A router is a computer and networking device that forwards data packets between computer networks, including internetworks such as the global Internet.

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the transport layer of the TCP/IP suite. SSL/TLS often runs on top of TCP.

A datagram is a basic transfer unit associated with a packet-switched network. Datagrams are typically structured in header and payload sections. Datagrams provide a connectionless communication service across a packet-switched network. The delivery, arrival time, and order of arrival of datagrams need not be guaranteed by the network.

In telecommunications, packet switching is a method of grouping data into short messages in fixed format, i.e. packets, that are transmitted over a digital network. Packets are made of a header and a payload. Data in the header is used by networking hardware to direct the packet to its destination, where the payload is extracted and used by an operating system, application software, or higher layer protocols. Packet switching is the primary basis for data communications in computer networks worldwide.
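The header-and-payload structure described above can be sketched in a few lines of Python. This is a minimal illustration, not any real protocol: the header layout (`HEADER_FORMAT`), the field names, and the helper functions `make_packet`, `parse_packet`, and `packetize` are all hypothetical and chosen only for this example.

```python
import struct
from typing import Dict, List, Tuple

# Hypothetical header layout (an assumption for illustration, not a real protocol):
# source id (2 bytes), destination id (2 bytes), sequence number (4 bytes),
# payload length (2 bytes), all in network byte order with no padding.
HEADER_FORMAT = "!HHIH"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)


def make_packet(src: int, dst: int, seq: int, payload: bytes) -> bytes:
    """Prepend the fixed-format header to the payload."""
    header = struct.pack(HEADER_FORMAT, src, dst, seq, len(payload))
    return header + payload


def parse_packet(packet: bytes) -> Tuple[Dict[str, int], bytes]:
    """Split a packet into its header fields and its payload."""
    src, dst, seq, length = struct.unpack(HEADER_FORMAT, packet[:HEADER_SIZE])
    payload = packet[HEADER_SIZE:HEADER_SIZE + length]
    return {"src": src, "dst": dst, "seq": seq, "length": length}, payload


def packetize(src: int, dst: int, data: bytes, max_payload: int) -> List[bytes]:
    """Group a byte string into numbered packets of at most max_payload bytes."""
    chunks = [data[i:i + max_payload] for i in range(0, len(data), max_payload)]
    return [make_packet(src, dst, seq, chunk) for seq, chunk in enumerate(chunks)]


if __name__ == "__main__":
    packets = packetize(1, 2, b"hello world", max_payload=4)
    # The receiver reads each header, then reorders and rejoins the payloads.
    parsed = sorted((parse_packet(p) for p in packets), key=lambda hp: hp[0]["seq"])
    print(b"".join(payload for _, payload in parsed))
```

In this sketch the forwarding hardware would only need the header fields, while the payload bytes are handed unchanged to whatever software reassembles the message at the destination.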

The National Science Foundation Network (NSFNET) was a program of coordinated, evolving projects sponsored by the National Science Foundation (NSF) from 1985 to 1995 to promote advanced research and education networking in the United States. The program created several nationwide backbone computer networks in support of these initiatives. It was created to link researchers to the NSF-funded supercomputing centers. Later, with additional public funding and also with private industry partnerships, the network developed into a major part of the Internet backbone.

The Advanced Research Projects Agency Network (ARPANET) was the first wide-area packet-switched network with distributed control and one of the first computer networks to implement the TCP/IP protocol suite. Both technologies became the technical foundation of the Internet. The ARPANET was established by the Advanced Research Projects Agency of the United States Department of Defense.

Robert Elliot Kahn is an American electrical engineer who, along with Vint Cerf, first proposed the Transmission Control Protocol (TCP) and the Internet Protocol (IP), the fundamental communication protocols at the heart of the Internet.

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss, or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads to only a small increase, or even a decrease, in network throughput.

The Computer Science Network (CSNET) was a computer network that began operation in 1981 in the United States. Its purpose was to extend networking benefits to computer science departments at academic and research institutions that could not be directly connected to ARPANET due to funding or authorization limitations. It played a significant role in spreading awareness of, and access to, national networking and was a major milestone on the path to development of the global Internet. CSNET was funded by the National Science Foundation for an initial three-year period from 1981 to 1984.

Van Jacobson is an American computer scientist, renowned for his work on TCP/IP network performance and scaling. He is one of the primary contributors to the TCP/IP protocol stack, the technological foundation of today's Internet. Jacobson has been an adjunct professor at the University of California, Los Angeles (UCLA) since 2013, working on Named Data Networking.

A middlebox is a computer networking device that transforms, inspects, filters, and manipulates traffic for purposes other than packet forwarding. Examples of middleboxes include firewalls, network address translators (NATs), load balancers, and deep packet inspection (DPI) devices.

In computer networks, network traffic measurement is the process of measuring the amount and type of traffic on a particular network. This is especially important with regard to effective bandwidth management.

Yakov Rekhter is a well-known network protocol designer and software programmer. He was heavily involved in the development of Internet protocols, and of their predecessors, from the early stages.

Jonathan Andrew Crowcroft is the Marconi Professor of Communications Systems in the Department of Computer Science and Technology, University of Cambridge, a visiting professor at the Department of Computing at Imperial College London, and the chair of the programme committee at the Alan Turing Institute.

Bufferbloat is the undesirable latency that comes from a router or other network equipment buffering too many data packets. Bufferbloat can also cause packet delay variation, as well as reduce the overall network throughput. When a router or switch is configured to use excessively large buffers, even very high-speed networks can become practically unusable for many interactive applications like voice over IP (VoIP), audio streaming, online gaming, and even ordinary web browsing.
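The latency cost of an oversized buffer follows from simple arithmetic: a packet arriving at the back of a full first-in, first-out buffer must wait for every byte ahead of it to be transmitted. The sketch below works this out, assuming a single FIFO queue drained at a constant link rate; the function name `queueing_delay_seconds` and the buffer sizes and link rate are illustrative assumptions, not measurements.

```python
def queueing_delay_seconds(buffer_bytes: int, link_rate_bps: float) -> float:
    """Worst-case wait behind a full FIFO buffer drained at link_rate_bps."""
    if link_rate_bps <= 0:
        raise ValueError("link rate must be positive")
    # Bytes are converted to bits, then divided by the drain rate in bits per second.
    return buffer_bytes * 8 / link_rate_bps


if __name__ == "__main__":
    link_rate_bps = 10_000_000  # assumed 10 Mbit/s uplink
    for buffer_bytes in (64_000, 256_000, 1_000_000):  # assumed buffer sizes
        delay_ms = queueing_delay_seconds(buffer_bytes, link_rate_bps) * 1000
        print(f"{buffer_bytes:>9} bytes of buffer -> up to {delay_ms:.1f} ms of added delay")
```

Under these assumptions, a full 1 MB buffer on a 10 Mbit/s link adds up to 0.8 seconds of delay, which is far more than interactive applications such as VoIP or online gaming can tolerate.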

Multipath TCP (MPTCP) is an ongoing effort of the Internet Engineering Task Force's (IETF) Multipath TCP working group that aims to allow a Transmission Control Protocol (TCP) connection to use multiple paths, maximizing throughput and increasing redundancy.

The Protocol Wars were a long-running debate in computer science that occurred from the 1970s to the 1990s, when engineers, organizations and nations became polarized over the issue of which communication protocol would result in the best and most robust networks. This culminated in the Internet–OSI Standards War in the 1980s and early 1990s, which was ultimately "won" by the Internet protocol suite (TCP/IP) by the mid-1990s when it became the dominant protocol suite through rapid adoption of the Internet.

References

- Malamud, Carl (1992). "Round 1: from INTEROP to IETF". Exploring the Internet: a technical travelogue. Prentice Hall. p. 88. ISBN 0-13-296898-3.

- "Fuzzball: The Innovative Router". The Internet: Changing the Way We Communicate. NSF. Archived from the original on 2 February 2017.

- Mills, D.L. (August 1988). The Fuzzball (PDF). ACM SIGCOMM 88 Symposium. Palo Alto, CA. pp. 115–122.

- Mills, David L. (1976). "An overview of the distributed computer network". Proceedings of the June 7-10, 1976, national computer conference and exposition (AFIPS '76). pp. 523–531. doi:10.1145/1499799.1499874. S2CID 13375745.

- Mills, D.L.; Braun, H.-W. (August 1987). The NSFNET Backbone Network (PDF). ACM SIGCOMM 87 Symposium. Stoweflake, VT. pp. 191–196.

- Mills, David L. (29 November 2007). "The NSFnet Phase-I Backbone and The Fuzzball Router" (PDF). Presentation at the NSFNET Legacy event, 2007. pp. 38–48.

- Mills, D.L. (December 1983). DCN Local-Network Protocols. IETF. doi:10.17487/RFC0891. RFC 891. Retrieved 7 December 2022.

- Kozierok, Charles M. (2005). The TCP/IP guide: a comprehensive, illustrated Internet protocols reference. No Starch Press. pp. 679–681. ISBN 1-59327-047-X.

- Mills, David L. (2010). "Technical History of NTP". Computer Network Time Synchronization: the Network Time Protocol on Earth and in Space (2nd ed.). CRC Press. pp. 377–396. doi:10.1201/b10282-20. ISBN 978-1-4398-1463-5.

- Moy, John T. (1998). OSPF: anatomy of an Internet routing protocol. Addison-Wesley Professional. p. 20. ISBN 978-0-201-63472-3.