Related Research Articles

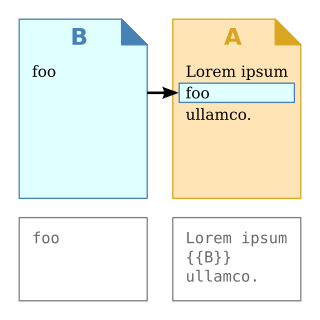

In computer science, transclusion is the inclusion of part or all of an electronic document into one or more other documents by reference via hypertext. Transclusion is usually performed when the referencing document is displayed, and is normally automatic and transparent to the end user. The result of transclusion is a single integrated document made of parts assembled dynamically from separate sources, possibly stored on different computers in disparate places.
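As a rough illustration of inclusion by reference, the sketch below resolves placeholders when a document is rendered rather than when it is written. The `{{name}}` placeholder syntax, the `DOCUMENTS` store, and the `render` function are assumptions made for this example and are not taken from any particular hypertext system.

```python
import re

# Hypothetical in-memory document store; names and contents are illustrative only.
DOCUMENTS = {
    "greeting": "Hello from a shared fragment.",
    "page": "Intro. {{greeting}} Outro.",
}

# Assumed placeholder syntax: {{document_name}}
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render(name, store, _seen=()):
    """Return the text of `name` with every {{ref}} replaced by the referenced document."""
    if name in _seen:
        # Guard against a document that (directly or indirectly) includes itself.
        raise ValueError(f"circular transclusion: {name}")
    text = store[name]
    return PLACEHOLDER.sub(
        lambda m: render(m.group(1), store, _seen + (name,)), text
    )


rendered = render("page", DOCUMENTS)
```

Because the reference is resolved at render time, editing the `greeting` entry changes every page that includes it without touching those pages.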

New Technology File System (NTFS) is a proprietary journaling file system developed by Microsoft. Starting with Windows NT 3.1, it is the default file system of the Windows NT family. It superseded File Allocation Table (FAT) as the preferred filesystem on Windows and is supported in Linux and BSD as well. Read and write support is provided by the free and open-source NTFS3 kernel implementation in Linux and by the NTFS-3G driver in BSD. By using the convert command, Windows can convert FAT32/16/12 into NTFS without the need to rewrite all files. NTFS uses several files, typically hidden from the user, to store metadata about other files on the drive, which can help improve performance when reading data. Unlike FAT and High Performance File System (HPFS), NTFS supports access control lists (ACLs), filesystem encryption, transparent compression, sparse files and file system journaling. NTFS also supports shadow copy to allow backups of a system while it is running, but the functionality of the shadow copies varies between different versions of Windows.

In software engineering, version control is a class of systems responsible for managing changes to computer programs, documents, large web sites, or other collections of information. Version control is a component of software configuration management.

Apache Subversion is a software versioning and revision control system distributed as open source under the Apache License. Software developers use Subversion to maintain current and historical versions of files such as source code, web pages, and documentation. Its goal is to be a mostly compatible successor to the widely used Concurrent Versions System (CVS).

The software release life cycle is the process of developing, testing, and distributing a software product. It typically consists of several stages, such as pre-alpha, alpha, beta, and release candidate, before the final version, or "gold", is released to the public.

Rational ClearCase is a family of computer software tools that supports software configuration management (SCM) of source code and other software development assets. It also supports design-data management of electronic design artifacts, thus enabling hardware and software co-development. ClearCase includes revision control and forms the basis for configuration management at large and medium-sized businesses, accommodating projects with hundreds or thousands of developers. It is developed by IBM.

Record linkage (RL) is the task of finding records in a data set that refer to the same entity across different data sources. Record linkage is necessary when joining different data sets based on entities that may or may not share a common identifier, which may be due to differences in record shape, storage location, or curator style or preference. A data set that has undergone RL-oriented reconciliation may be referred to as being cross-linked.
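A minimal sketch of linking records that share no common identifier is shown below. It assumes that a normalized name comparison with a fixed similarity threshold is good enough, which real record linkage rarely is; the field names, sample records, and the 0.85 threshold are all hypothetical.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def similarity(a, b):
    """Similarity ratio in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def link_records(left, right, threshold=0.85):
    """Pair each left record with its best-scoring right record, if the score reaches the threshold."""
    links = []
    for l in left:
        best = max(right, key=lambda r: similarity(l["name"], r["name"]), default=None)
        if best is not None and similarity(l["name"], best["name"]) >= threshold:
            links.append((l["id"], best["id"]))
    return links


# Two illustrative sources with different identifier schemes.
crm = [{"id": "c1", "name": "Acme Corp."}, {"id": "c2", "name": "Globex Inc"}]
billing = [{"id": "b7", "name": "ACME Corp"}, {"id": "b9", "name": "Initech"}]

links = link_records(crm, billing)
```

Here `links` pairs `c1` with `b7`, because the two names are identical after normalization, while `c2` stays unlinked.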

A system of record (SOR) or source system of record (SSoR) is a data management term for an information storage system that is the authoritative data source for a given data element or piece of information, such as a row in a table. In data vault it is referred to as the record source.

Software as a service (SaaS) is a software licensing and delivery model in which software is licensed on a subscription basis and is centrally hosted. SaaS is also known as on-demand software, web-based software, or web-hosted software.

Product information management (PIM) is the process of managing all the information required to market and sell products through distribution channels. This product data is created by an internal organization to support a multichannel marketing strategy. A central hub of product data can be used to distribute information to sales channels such as e-commerce websites, print catalogues, marketplaces such as Amazon and Google Shopping, social media platforms like Instagram and electronic data feeds to trading partners. PIM also plays a significant role in reducing the abandonment rate by providing better product information.

SAP NetWeaver Master Data Management (SAP NW MDM) is a component of SAP's NetWeaver product group and is used as a platform to consolidate, cleanse and synchronise a single version of the truth for master data within a heterogeneous application landscape. It can distribute master data internally and externally to SAP and non-SAP applications. SAP MDM is a key enabler of SAP Service-Oriented Architecture. A standard system architecture consists of a single central MDM server connected to client systems through SAP Exchange Infrastructure (SAP XI) using XML documents, although connectivity without SAP XI can also be achieved. There are five standard implementation scenarios:

- Content Consolidation - centralised cleansing, de-duplication and consolidation, enabling key mapping and consolidated group reporting in SAP BI. No re-distribution of cleansed data.

- Master Data Harmonisation - as for Content Consolidation, plus re-distribution of cleansed, consolidated master data.

- Central Master Data Management - as for Master Data Harmonisation, but all master data is maintained in the central MDM system. No maintenance of master data occurs in the connected client systems.

- Rich Product Content Management - Catalogue management and publishing. Uses elements of Content Consolidation to centrally store rich content (images, PDF files, video, sound etc.) together with standard content in order to produce product catalogues (web or print). Has standard adapters to export content to Desktop Publishing packages.

- Global Data Synchronization - provides consistent trade item information exchange with retailers through data hubs (e.g. 1SYNC).

In information science and information technology, single source of truth (SSOT) architecture, or single point of truth (SPOT) architecture, for information systems is the practice of structuring information models and associated data schemas such that every data element is mastered in only one place, providing data normalization to a canonical form.

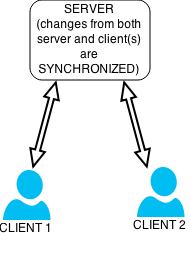

Data synchronization is the process of establishing consistency between source and target data stores, and the continuous harmonization of the data over time. It is fundamental to a wide variety of applications, including file synchronization and mobile device synchronization. Data synchronization can also be useful in encryption for synchronizing public key servers.
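The idea of bringing a target store into line with a source can be sketched as a one-way sync between two key-value stores. This is only an assumption-laden illustration: plain dictionaries stand in for the data stores, the function names `plan_sync` and `apply_sync` are hypothetical, and conflict handling, two-way sync, and concurrency are ignored.

```python
def plan_sync(source, target):
    """Return the changes needed for target to match source."""
    # Keys that are missing from the target or hold a different value.
    to_upsert = {
        k: v for k, v in source.items() if k not in target or target[k] != v
    }
    # Keys that exist only in the target and must be removed.
    to_delete = [k for k in target if k not in source]
    return to_upsert, to_delete


def apply_sync(source, target):
    """Mutate target so that it equals source; return the applied changes."""
    to_upsert, to_delete = plan_sync(source, target)
    target.update(to_upsert)
    for key in to_delete:
        del target[key]
    return to_upsert, to_delete


source = {"a": 1, "b": 2, "c": 3}
target = {"a": 1, "b": 20, "d": 4}

first_run = apply_sync(source, target)   # updates "b", adds "c", removes "d"
second_run = apply_sync(source, target)  # nothing left to change
```

Running the sync repeatedly is what keeps the stores harmonized over time: once they agree, a further run produces an empty change set.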

Master data represents "data about the business entities that provide context for business transactions". The most commonly found categories of master data are parties, products, financial structures and locational concepts.

In computerized business management, single version of the truth (SVOT) is a technical concept describing the data warehousing ideal of having either a single centralised database, or at least a distributed synchronised database, which stores all of an organisation's data in a consistent and non-redundant form. This contrasts with the related concept of single source of truth (SSOT), which refers to the data storage principle of always sourcing a particular piece of information from one place.

Mobile device management (MDM) is the administration of mobile devices, such as smartphones, tablet computers, and laptops. MDM is usually implemented with the use of a third-party product that has management features for particular vendors of mobile devices. Though closely related to Enterprise Mobility Management and Unified Endpoint Management, MDM differs slightly from both: unlike MDM, EMM includes mobile information management, BYOD, mobile application management and mobile content management, whereas UEM provides device management for endpoints like desktops, printers, IoT devices, and wearables as well.

Microsoft SQL Server Master Data Services (MDS) is a Master Data Management (MDM) product from Microsoft that ships as a part of the Microsoft SQL Server relational database management system. Master data management (MDM) allows an organization to discover and define non-transactional lists of data, and compile maintainable, reliable master lists. Master Data Services first shipped with Microsoft SQL Server 2008 R2. Microsoft SQL Server 2016 introduced enhancements to Master Data Services, such as improved performance and security, and the ability to clear transaction logs, create custom indexes, share entity data between different models, and support for many-to-many relationships.

Master data management (MDM) is a technology-enabled discipline in which business and information technology work together to ensure the uniformity, accuracy, stewardship, semantic consistency and accountability of the enterprise's official shared master data assets.

A staging area, or landing zone, is an intermediate storage area used for data processing during the extract, transform and load (ETL) process. The data staging area sits between the data source(s) and the data target(s), which are often data warehouses, data marts, or other data repositories.
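The position of a staging area between source and target can be sketched as follows. Lists of dictionaries stand in for the source system, the staging area, and the warehouse; the field names and cleaning rules are hypothetical and chosen only to keep the example short.

```python
def extract(source_rows):
    """Copy raw rows into the staging area without changing them."""
    return [dict(row) for row in source_rows]


def transform(staged_rows):
    """Clean staged rows: drop rows without a name, tidy names, parse amounts."""
    cleaned = []
    for row in staged_rows:
        name = row.get("name", "").strip()
        if not name:
            continue  # reject incomplete rows before they reach the target
        cleaned.append({"name": name.title(), "amount": float(row["amount"])})
    return cleaned


def load(rows, warehouse):
    """Append transformed rows to the target store."""
    warehouse.extend(rows)


source = [{"name": " alice ", "amount": "10.5"}, {"name": "", "amount": "3"}]

staging = extract(source)            # raw copy, kept separate from the source
warehouse = []
load(transform(staging), warehouse)  # only cleaned rows reach the target
```

Keeping the raw copy in `staging` means the transformation can be rerun or audited without querying the source system again.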

Pimcore is an open-source enterprise PHP software platform for product information management (PIM), master data management (MDM), customer data platform (CDP), digital asset management (DAM), content management (CMS), and digital commerce.
