In optics, aberration is a property of optical systems, such as lenses, that causes light to be spread out over some region of space rather than focused to a point. Aberrations cause the image formed by a lens to be blurred or distorted, with the nature of the distortion depending on the type of aberration. Aberration can be defined as a departure of the performance of an optical system from the predictions of paraxial optics. In an imaging system, it occurs when light from one point of an object does not converge into a single point after transmission through the system. Aberrations occur not because of flaws in the optical elements but because simple paraxial theory is not a completely accurate model of the effect of an optical system on light.
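A concave spherical mirror gives a compact numerical illustration of the departure from paraxial predictions. The sketch below assumes a mirror of radius R and a ray arriving parallel to the axis at height h. It traces the ray exactly using the law of reflection and compares the result with the paraxial focus R/2. The function names are illustrative choices, not from any library. Rays at larger heights cross the axis closer to the mirror, so they do not meet at a single point. This is spherical aberration.

```python
import math


def axis_crossing(h: float, radius: float) -> float:
    """Exact distance from the vertex of a concave spherical mirror to the point
    where a ray, incident parallel to the axis at height h, crosses the axis
    after reflection (hypothetical helper for illustration)."""
    if not 0.0 <= h < radius:
        raise ValueError("ray height must satisfy 0 <= h < radius")
    theta = math.asin(h / radius)  # angle of incidence at the mirror surface
    # Law of reflection: the triangle (centre, hit point, crossing point) is
    # isosceles, so the crossing lies radius / (2 cos theta) from the centre.
    return radius - radius / (2.0 * math.cos(theta))


def paraxial_focus(radius: float) -> float:
    """Paraxial (small-angle) focal distance of the same mirror: f = R / 2."""
    return radius / 2.0


if __name__ == "__main__":
    R = 100.0  # mirror radius in mm
    for h in (0.0, 10.0, 20.0, 30.0):
        shift = paraxial_focus(R) - axis_crossing(h, R)
        print(f"h = {h:4.1f} mm: crosses axis at {axis_crossing(h, R):.3f} mm, "
              f"longitudinal aberration {shift:.3f} mm")
```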

A lens is a transmissive optical device that focuses or disperses a light beam by means of refraction. A simple lens consists of a single piece of transparent material, while a compound lens consists of several simple lenses (elements), usually arranged along a common axis. Lenses are made from materials such as glass or plastic and are ground, polished, or molded to the required shape. A lens can focus light to form an image, unlike a prism, which refracts light without focusing. Devices that similarly focus or disperse waves and radiation other than visible light are also called "lenses", such as microwave lenses, electron lenses, acoustic lenses, or explosive lenses.

Optics is the branch of physics that studies the behaviour and properties of light, including its interactions with matter and the construction of instruments that use or detect it. Optics usually describes the behaviour of visible, ultraviolet, and infrared light. Light is a type of electromagnetic radiation, and other forms of electromagnetic radiation such as X-rays, microwaves, and radio waves exhibit similar properties.

In optics, the aperture of an optical system is a hole or an opening that primarily limits the light propagating through the system. More specifically, the entrance pupil (the image of the aperture as seen from the front of the system) and the focal length of the system together determine the cone angle of the bundle of rays that comes to a focus in the image plane.

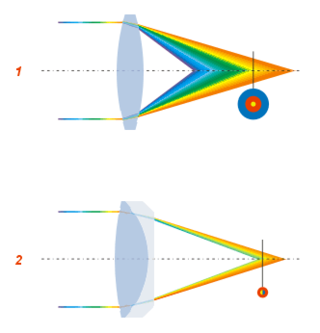

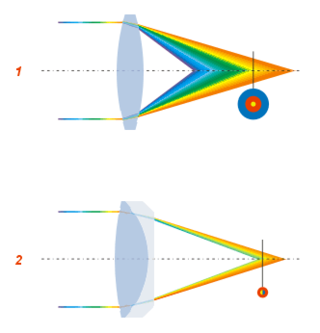

In optics, chromatic aberration (CA), also called chromatic distortion, color aberration, color fringing, or purple fringing, is a failure of a lens to focus all colors to the same point. It is caused by dispersion: the refractive index of the lens elements varies with the wavelength of light. The refractive index of most transparent materials decreases with increasing wavelength. Because the focal length of a lens depends on the refractive index, this variation affects focusing: different colors of light are brought to focus at different distances from the lens or with different levels of magnification. Chromatic aberration manifests itself as "fringes" of color along boundaries that separate dark and bright parts of the image.
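The chain from dispersion to focal shift can be made concrete with two textbook formulas. The first is a two-term Cauchy model of the index, n(λ) = A + B/λ². The second is the thin-lens lensmaker's equation, 1/f = (n − 1)(1/R₁ − 1/R₂). The sketch assumes an equiconvex thin lens in air. Its default coefficients are approximate values often quoted for BK7 crown glass and should be treated as illustrative rather than as a glass catalogue entry.

```python
def cauchy_index(wavelength_um: float, a: float = 1.5046, b: float = 0.00420) -> float:
    """Two-term Cauchy approximation n = A + B / lambda^2 (lambda in micrometres).
    Default A, B are approximate, commonly quoted BK7 values (illustrative only)."""
    return a + b / wavelength_um ** 2


def thin_lens_focal_length(n: float, r1: float, r2: float) -> float:
    """Lensmaker's equation for a thin lens in air: 1/f = (n - 1)(1/R1 - 1/R2)."""
    power = (n - 1.0) * (1.0 / r1 - 1.0 / r2)
    return 1.0 / power


if __name__ == "__main__":
    R1, R2 = 100.0, -100.0  # equiconvex lens, radii in mm
    for name, wl in (("blue (486.1 nm)", 0.4861), ("red (656.3 nm)", 0.6563)):
        n = cauchy_index(wl)
        print(f"{name}: n = {n:.5f}, f = {thin_lens_focal_length(n, R1, R2):.2f} mm")
```

With these assumed values, blue light focuses roughly 1.5 mm closer to the lens than red light. This longitudinal color shift produces the fringes described above.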

An f-number is a measure of the light-gathering ability of an optical system such as a camera lens. It is calculated by dividing the system's focal length by the diameter of the entrance pupil. The f-number is also known as the focal ratio, f-ratio, or f-stop, and it is key in determining the depth of field, diffraction, and exposure of a photograph. The f-number is dimensionless and is usually expressed using a lower-case hooked f with the format f/N, where N is the f-number.
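The definition N = f/D leads directly to the familiar stop scale. Image-plane illuminance scales approximately as 1/N², so multiplying N by √2 halves the light. The sketch below is a minimal illustration of these relations. The function names are hypothetical, and the marked values on real lenses (f/1.4, f/2.8, f/5.6, f/11, ...) are rounded versions of the exact powers of √2 computed here.

```python
import math


def f_number(focal_length: float, pupil_diameter: float) -> float:
    """N = f / D (dimensionless); both lengths in the same unit."""
    return focal_length / pupil_diameter


def relative_exposure(n_from: float, n_to: float) -> float:
    """Factor by which image-plane illuminance changes when going from f/n_from
    to f/n_to, using the approximation that illuminance scales as 1 / N^2."""
    return (n_from / n_to) ** 2


def full_stops(count: int) -> list[float]:
    """Exact full-stop sequence sqrt(2)**k; each step halves the light."""
    return [math.sqrt(2.0) ** k for k in range(count)]


if __name__ == "__main__":
    print(f"50 mm lens, 25 mm entrance pupil: f/{f_number(50.0, 25.0):g}")
    print("f/2 -> f/4 illuminance factor:", relative_exposure(2.0, 4.0))
    print("full stops:", [round(n, 2) for n in full_stops(8)])
```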

In optics, a circle of confusion (CoC) is an optical spot caused by a cone of light rays from a lens not coming to a perfect focus when imaging a point source. It is also known as disk of confusion, circle of indistinctness, blur circle, or blur spot.
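The size of the blur spot follows from thin-lens geometry. The sketch below assumes an ideal thin lens whose aperture stop lies at the lens. For a lens focused at one distance, the thin-lens equation gives the image distance of that plane and of the subject. Similar triangles then give the width of the subject's light cone where it meets the sensor. The function and parameter names are hypothetical.

```python
def blur_circle_diameter(aperture: float, focal_length: float,
                         focus_distance: float, subject_distance: float) -> float:
    """Blur-circle diameter on the image plane for a point at subject_distance
    when a thin lens (stop at the lens, diameter `aperture`) is focused at
    focus_distance. All lengths in the same unit, distances from the lens."""
    if min(focus_distance, subject_distance) <= focal_length:
        raise ValueError("distances must exceed the focal length")
    # Thin-lens image distances: v = f * s / (s - f)
    v_focus = focal_length * focus_distance / (focus_distance - focal_length)
    v_subject = focal_length * subject_distance / (subject_distance - focal_length)
    # Similar triangles: the cone of width `aperture` at the lens narrows to a
    # point at v_subject, so at the sensor (v_focus) its width is:
    return aperture * abs(v_subject - v_focus) / v_subject


if __name__ == "__main__":
    # 50 mm lens at f/2 (25 mm pupil) focused at 2 m, subject at 3 m
    print(f"{blur_circle_diameter(25.0, 50.0, 2000.0, 3000.0):.4f} mm")
```

This agrees with the closed form c = A·f·|S₂ − S₁| / (S₂(S₁ − f)), where S₁ is the focus distance and S₂ the subject distance. A point exactly at the focus distance gives a zero-diameter blur circle in this geometric model. In practice, diffraction still leaves a finite spot.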

A camera lens is an optical lens or assembly of lenses used in conjunction with a camera body and mechanism to make images of objects either on photographic film or on other media capable of storing an image chemically or electronically.

Angular resolution describes the ability of any image-forming device such as an optical or radio telescope, a microscope, a camera, or an eye, to distinguish small details of an object, thereby making it a major determinant of image resolution. It is used in optics applied to light waves, in antenna theory applied to radio waves, and in acoustics applied to sound waves. The colloquial use of the term "resolution" sometimes causes confusion; when an optical system is said to have a high resolution or high angular resolution, it means that the perceived distance, or actual angular distance, between resolved neighboring objects is small. The value that quantifies this property, θ, which is given by the Rayleigh criterion, is low for a system with a high resolution. The closely related term spatial resolution refers to the precision of a measurement with respect to space, which is directly connected to angular resolution in imaging instruments. The Rayleigh criterion shows that the minimum angular separation an image-forming system can resolve is limited by diffraction and is proportional to the ratio of the wavelength of the waves to the aperture width; for a circular aperture of diameter D, θ ≈ 1.22 λ/D. For this reason, high-resolution imaging systems such as astronomical telescopes, long-distance telephoto camera lenses, and radio telescopes have large apertures.
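The small-angle Rayleigh formula θ ≈ 1.22 λ/D explains why radio telescopes must be so large. The sketch below evaluates it for a small optical telescope and for a radio dish observing the 21 cm hydrogen line. The aperture sizes are arbitrary example values chosen for illustration.

```python
import math

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0  # about 206265 arcseconds per radian


def rayleigh_limit_rad(wavelength: float, aperture_diameter: float) -> float:
    """Rayleigh criterion for a circular aperture, small-angle form:
    theta = 1.22 * lambda / D (wavelength and diameter in the same unit)."""
    return 1.22 * wavelength / aperture_diameter


if __name__ == "__main__":
    cases = [
        ("100 mm telescope, 550 nm light", 550e-9, 0.1),
        ("25 m radio dish, 21 cm line", 0.21, 25.0),
    ]
    for label, wl, d in cases:
        theta = rayleigh_limit_rad(wl, d)
        print(f"{label}: {theta:.3e} rad = {theta * ARCSEC_PER_RAD:.1f} arcsec")
```

Even at 25 m across, the radio dish resolves far coarser detail than a small optical telescope. The wavelength it works at is several hundred thousand times longer.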

An optical telescope is a telescope that gathers and focuses light mainly from the visible part of the electromagnetic spectrum, to create a magnified image for direct visual inspection, to make a photograph, or to collect data through electronic image sensors.

In optics, the Airy disk and Airy pattern are descriptions of the best-focused spot of light that a perfect lens with a circular aperture can make, limited by the diffraction of light. The Airy disk is of importance in physics, optics, and astronomy.
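For an aberration-free lens with a circular aperture, the normalized intensity of the Airy pattern is I/I₀ = (2 J₁(x)/x)². Here J₁ is the Bessel function of the first kind of order one, and x = π r / (λN) for radius r in the focal plane and f-number N. The sketch below uses only the standard library. It evaluates J₁ from Bessel's integral and finds the first dark ring by bisection, which recovers the familiar factor 1.22 in the disk radius r ≈ 1.22 λN. The helper names are hypothetical. Production code would more likely call a library Bessel routine.

```python
import math


def bessel_j1(x: float, samples: int = 256) -> float:
    """J1(x) from Bessel's integral: (1 / 2pi) * integral over [0, 2pi] of
    cos(t - x sin t) dt. The integrand is smooth and periodic, so the plain
    average of equally spaced samples (trapezoidal rule) converges very fast."""
    total = 0.0
    for k in range(samples):
        t = 2.0 * math.pi * k / samples
        total += math.cos(t - x * math.sin(t))
    return total / samples


def airy_intensity(x: float) -> float:
    """Normalized Airy pattern (2 J1(x) / x)^2; the x -> 0 limit is 1."""
    if abs(x) < 1e-12:
        return 1.0
    return (2.0 * bessel_j1(x) / x) ** 2


def first_dark_ring(lo: float = 3.0, hi: float = 4.5, tol: float = 1e-12) -> float:
    """First zero of J1 (first dark ring), by bisection on a bracketing interval."""
    f_lo = bessel_j1(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = bessel_j1(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid  # zero lies in the upper half
        else:
            hi = mid  # zero lies in the lower half
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    x1 = first_dark_ring()
    wavelength_mm, n = 550e-6, 8.0  # example: green light at f/8
    print(f"first zero x1 = {x1:.6f}, x1/pi = {x1 / math.pi:.4f}")
    print(f"Airy disk radius at f/8, 550 nm: {x1 * wavelength_mm * n / math.pi * 1e3:.2f} um")
```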

The science of photography is the use of chemistry and physics in all aspects of photography. This applies to the camera, its lenses, physical operation of the camera, electronic camera internals, and the process of developing film in order to take and develop pictures properly.

A catadioptric optical system is one where refraction and reflection are combined in an optical system, usually via lenses (dioptrics) and curved mirrors (catoptrics). Catadioptric combinations are used in focusing systems such as searchlights, headlamps, early lighthouse focusing systems, optical telescopes, microscopes, and telephoto lenses. Other optical systems that use lenses and mirrors are also referred to as "catadioptric", such as surveillance catadioptric sensors.

A telecentric lens is a special optical lens that has its entrance or exit pupil, or both, at infinity. The size of images produced by a telecentric lens is insensitive to either the distance between an object being imaged and the lens, or the distance between the image plane and the lens, or both, and such an optical property is called telecentricity. Telecentric lenses are used for precision optical two-dimensional measurements, reproduction, and other applications that are sensitive to the image magnification or the angle of incidence of light.

The optical transfer function (OTF) of an optical system such as a camera, microscope, human eye, or projector specifies how different spatial frequencies are captured or transmitted. It is used by optical engineers to describe how the optics project light from the object or scene onto a photographic film, detector array, retina, screen, or simply the next item in the optical transmission chain. A variant, the modulation transfer function (MTF), neglects phase effects, but is equivalent to the OTF in many situations.
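A standard reference case is an aberration-free system with a circular pupil under incoherent illumination. Its OTF is real and non-negative, so it coincides with the MTF, which is one of the situations where the two are equivalent. The closed form is MTF(ν) = (2/π)(φ − cos φ sin φ), with φ = arccos(ν/ν꜀) and cutoff frequency ν꜀ = 1/(λN). The sketch below assumes this diffraction-limited model. Real lenses with aberrations fall below this curve.

```python
import math


def diffraction_mtf(freq: float, wavelength: float, f_number: float) -> float:
    """MTF of an aberration-free circular-pupil system (incoherent light).
    freq in cycles per unit length, wavelength in the same length unit.
    Cutoff nu_c = 1 / (wavelength * N); the MTF is zero at and beyond it."""
    cutoff = 1.0 / (wavelength * f_number)
    s = abs(freq) / cutoff  # normalized spatial frequency
    if s >= 1.0:
        return 0.0
    phi = math.acos(s)
    return (2.0 / math.pi) * (phi - math.cos(phi) * math.sin(phi))


if __name__ == "__main__":
    wl_mm, n = 550e-6, 8.0  # green light, f/8
    cutoff = 1.0 / (wl_mm * n)
    print(f"cutoff: {cutoff:.1f} cycles/mm")
    for frac in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"{frac * cutoff:6.1f} cycles/mm -> MTF {diffraction_mtf(frac * cutoff, wl_mm, n):.3f}")
```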

In Gaussian optics, the cardinal points consist of three pairs of points located on the optical axis of a rotationally symmetric, focal optical system. These are the focal points, the principal points, and the nodal points; there are two of each. For ideal systems, the basic imaging properties such as image size, location, and orientation are completely determined by the locations of the cardinal points; in fact, only four points are necessary: the two focal points and either the principal points or the nodal points. The only ideal system that has been achieved in practice is a plane mirror; however, the cardinal points are widely used to approximate the behavior of real optical systems. Cardinal points provide a way to analytically simplify an optical system with many components, allowing the imaging characteristics of the system to be approximately determined with simple calculations.
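One common way to find cardinal points is to multiply paraxial ray-transfer (ABCD) matrices into a single system matrix. The sketch below assumes a thick lens with air on both sides. In that case the nodal points coincide with the principal points, so the focal length and the two principal planes describe the system. It uses the convention that the ray vector is (height, angle) and that matrices act right to left. The example values (index 1.5168, radii ±50 mm, 10 mm thickness) are arbitrary illustrations, and the helper names are hypothetical.

```python
def mat_mul(m, n):
    """Product of two 2x2 matrices given as ((a, b), (c, d))."""
    return ((m[0][0] * n[0][0] + m[0][1] * n[1][0], m[0][0] * n[0][1] + m[0][1] * n[1][1]),
            (m[1][0] * n[0][0] + m[1][1] * n[1][0], m[1][0] * n[0][1] + m[1][1] * n[1][1]))


def refraction(n1, n2, radius):
    """Paraxial refraction at a spherical surface from index n1 into n2."""
    return ((1.0, 0.0), ((n1 - n2) / (radius * n2), n1 / n2))


def translation(distance):
    """Free propagation over `distance` inside a homogeneous medium."""
    return ((1.0, distance), (0.0, 1.0))


def thick_lens(n, r1, r2, thickness):
    """System matrix of a thick lens in air: surface 1, glass, surface 2."""
    m = refraction(1.0, n, r1)
    m = mat_mul(translation(thickness), m)
    return mat_mul(refraction(n, 1.0, r2), m)


def cardinal_points(system):
    """From a system matrix with air on both sides, return
    (effective focal length, back focal distance, front principal plane,
    rear principal plane). Front plane is measured from the first vertex,
    back focus and rear plane from the last vertex; positive = image side."""
    (a, _b), (c, d) = system
    efl = -1.0 / c
    back_focal = -a / c
    front_principal = (d - 1.0) / c
    rear_principal = (1.0 - a) / c
    return efl, back_focal, front_principal, rear_principal


if __name__ == "__main__":
    efl, bfd, h1, h2 = cardinal_points(thick_lens(1.5168, 50.0, -50.0, 10.0))
    print(f"EFL {efl:.3f} mm, BFD {bfd:.3f} mm, H at {h1:+.3f} mm, H' at {h2:+.3f} mm")
```

For this symmetric lens, the principal planes sit about 3.4 mm inside each surface. The result agrees with the thick-lens form of the lensmaker's equation.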

In digital photography, the image sensor format is the shape and size of the image sensor.

The design of photographic lenses for use in still or cine cameras aims to produce a lens that yields the most acceptable rendition of the subject being photographed within a range of constraints that include cost, weight, and materials. For many other optical devices, such as telescopes, microscopes, and theodolites, where the visual image is observed but often not recorded, the design can often be significantly simpler. A camera, by contrast, captures every image on film or an image sensor, where it can be subject to detailed scrutiny at a later stage. Photographic lenses also include those used in enlargers and projectors.

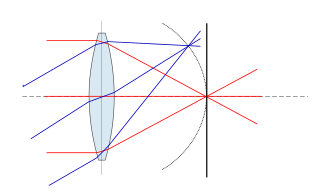

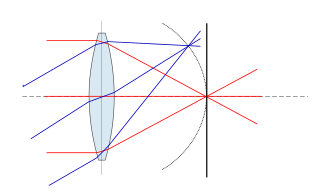

Petzval field curvature, named for Joseph Petzval, describes the optical aberration in which a flat object normal to the optical axis cannot be brought properly into focus on a flat image plane. Field curvature can be corrected with a field flattener. Alternatively, a design can incorporate a curved focal surface, as in the human eye, to improve image quality at that surface.
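For thin lenses in air, field curvature is governed by the Petzval sum P = Σ 1/(nᵢ fᵢ). A flat Petzval surface requires P = 0, and a single thin lens has a Petzval radius of magnitude n·f. Because P does not depend on where the elements sit, a negative lens placed near the focal plane can reduce P while changing the system's power relatively little. That is the idea behind a field flattener. The sketch below is a minimal illustration with hypothetical helper names. Sign conventions for the Petzval radius differ between texts, so it reports only the magnitude.

```python
import math


def petzval_sum(elements):
    """Petzval sum P = sum(1 / (n_i * f_i)) for thin lenses in air,
    with each element given as an (index, focal_length) pair."""
    return sum(1.0 / (n * f) for n, f in elements)


def petzval_radius_magnitude(elements):
    """Magnitude of the Petzval surface radius, 1 / |P|; infinite when P is
    zero (a flat Petzval surface). Only the magnitude is returned because sign
    conventions vary."""
    p = petzval_sum(elements)
    return math.inf if abs(p) < 1e-15 else 1.0 / abs(p)


if __name__ == "__main__":
    singlet = [(1.5, 100.0)]
    print("singlet:", petzval_radius_magnitude(singlet), "mm")
    print("with f = -150 mm flattener:", petzval_radius_magnitude(singlet + [(1.5, -150.0)]), "mm")
    print("with f = -100 mm flattener:", petzval_radius_magnitude(singlet + [(1.5, -100.0)]))
```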

A flat lens is a lens whose flat shape allows it to provide distortion-free imaging, potentially with arbitrarily large apertures. The term is also used to refer to other lenses that provide a negative index of refraction. Flat lenses require a refractive index close to −1 over a broad angular range. In recent years, flat lenses based on metasurfaces have also been demonstrated.
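A ray trace shows why an index of −1 matters. The sketch below assumes an idealized slab of thickness d and index n with air on both sides, and an on-axis point source a distance a in front of it. It applies Snell's law at both faces and reports where each ray recrosses the axis. With n = −1, every ray refocuses at the same point, d − a behind the slab, whatever its angle. With n = −1.1, the focus position depends on the angle, so the image is blurred. The function name and parameter values are hypothetical illustrations.

```python
import math


def slab_focus(source_distance, thickness, n, angle):
    """Axial position, measured from the slab's front face, where a ray from an
    on-axis point source recrosses the axis after passing through a slab of
    index n with air on both sides. `angle` is the ray's angle to the axis in
    radians (nonzero). Assumes |n| >= 1 so the refraction angle always exists."""
    y = source_distance * math.tan(angle)        # ray height at the front face
    inside = math.asin(math.sin(angle) / n)      # Snell's law; negative when n < 0
    y += thickness * math.tan(inside)            # ray height at the back face
    # On exit, sin(out) = n * sin(inside) = sin(angle): the original angle is restored.
    return thickness - y / math.tan(angle)


if __name__ == "__main__":
    a, d = 1.0, 2.0  # source 1 unit in front of a slab 2 units thick
    for n in (-1.0, -1.1):
        foci = [slab_focus(a, d, n, math.radians(deg)) for deg in (5, 20, 40, 60)]
        print(f"n = {n}: foci at", [round(z, 4) for z in foci])
```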